The Fastest Way to AGI: LLMs + Tree Search – Demis Hassabis (Google DeepMind CEO)

Summary

TLDRThe speaker believes that large language models will likely form a key component of future AGI systems, but additional planning and search capabilities will need to be layered on top to enable goal-oriented behavior. He argues that leveraging existing data and models as a starting point will allow for quicker progress compared to a purely reinforcement learning approach. However, specifying the right objectives and rewards remains an open challenge when expanding beyond games to real-world applications. Overall the path forward involves improving predictive models of the world and combining them with efficient search to explore massive spaces of possibilities.

Takeaways

- 😀 AGI will likely require large language models as a component, but additional planning and search capabilities will also be needed on top.

- 👍 Using existing knowledge and data to bootstrap learning in models seems more promising than learning purely from scratch.

- 😮 More efficient search methods can reduce compute requirements - improving the world model allows more effective search.

- 🤔 Defining the right rewards and goals is challenging for real world systems compared to games with clear winning conditions.

- 🚀 There is great potential in adding tree search planning capabilities on top of large language models.

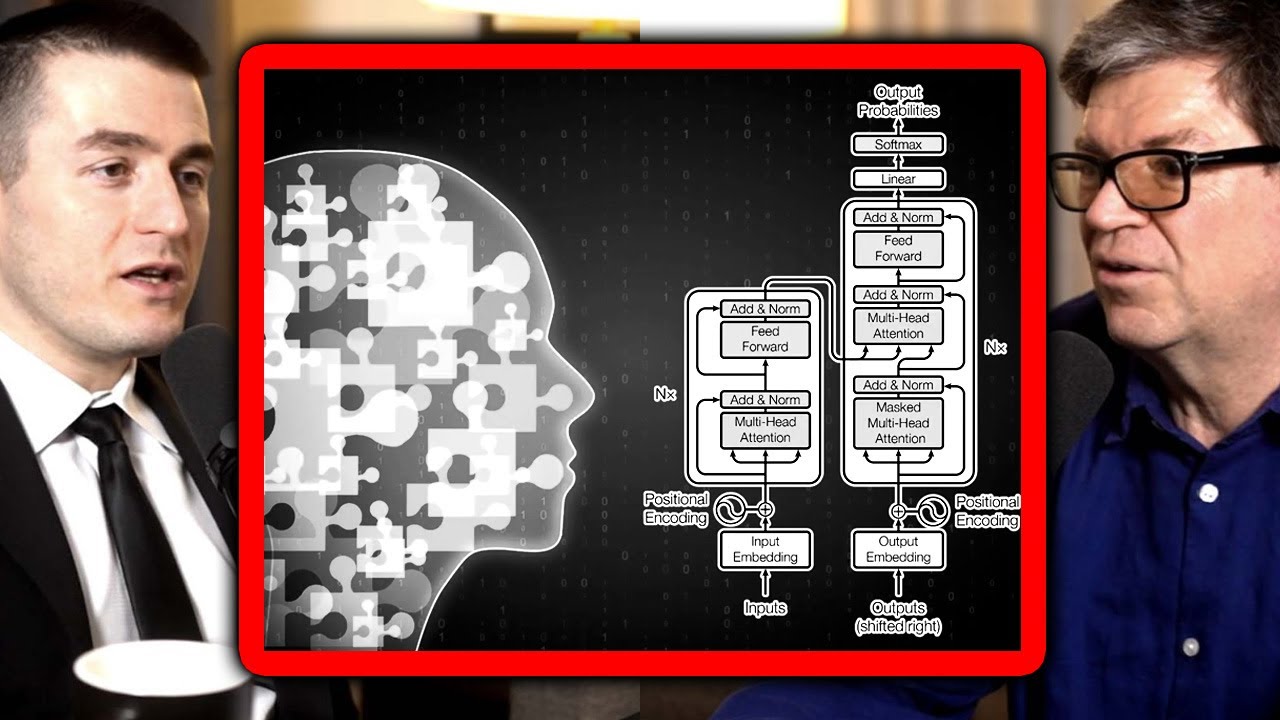

- 🔢 Combining scalable algorithms like Transformers to ingest knowledge, with search and planning holds promise.

- 😥 Current large models may be missing the ability to chain thoughts or reasoning together with search.

- 📈 Keep improving large models to make them better world models - a key piece.

- 👀 Some are exploring building all knowledge purely from reinforcement learning, but this may not be the fastest path.

- 💡 The final AGI system will likely involve both learned prior knowledge and new on-top mechanisms.

Q & A

What systems has DeepMind pioneered that can think through different steps to achieve a goal?

-DeepMind has pioneered systems like AlphaZero that can think through different possible moves in games like chess and Go to try to win the game. It uses a planning mechanism on top of a world model to explore massive spaces of possibilities.

What does the speaker believe are the necessary components of an AGI system?

-The speaker believes the necessary components of an AGI system are: 1) Large models that are accurate predictors of the world, 2) Planning mechanisms like AlphaZero that can make concrete plans to achieve goals using the world model, and 3) Possibly search algorithms to chain lines of reasoning and explore possibilities.

What potential does the speaker see for AGI to come from a pure reinforcement learning approach?

-The speaker thinks theoretically it's possible for AGI to emerge entirely from a reinforcement learning approach with no priors or data given to the system initially. However, he believes the quickest and most plausible path is to use existing knowledge and scalable algorithms like Transformers to ingest information to bootstrap the learning.

How can systems like AlphaZero be more efficient in their search compared to brute force methods?

-By having a richer, more accurate world model, AlphaZero can make strong decisions by searching far fewer possibilities than brute force methods that lack an accurate model. This suggests improving the world model allows more efficient search.

What challenge exists in defining reward functions for real-world systems compared to games?

-Games have clear reward functions like winning the game or increasing the score. But specifying the right rewards and goals in a general yet specific way for real-world systems is more challenging.

What benefit did DeepMind gain from using games as a proving ground for its algorithms?

-Games provided an efficient research domain with clearly defined reward functions in terms of winning or scoring. This made them ideal testbeds before tackling real-world complexity.

How might search mechanisms explore the possibilities generated by large language models?

-They could chain together lines of reasoning produced by the LLMs, using search to traverse trees of possibilities originating from the models' outputs.

Do LLMs have inherent goals and rewards driving their behavior?

-No, LLMs themselves don't have inherent goals and rewards. They produce outputs based on their training, requiring search/planning mechanisms and predefined goals/rewards to drive purposeful, goal-oriented behavior.

What role might hybrid systems play in developing AGI?

-The speaker believes hybrid systems combining large models with search, planning, and reinforcement learning components may provide the most promising path to developing AGI.

Why does the speaker believe starting from existing knowledge will enable quicker progress towards AGI compared to learning 'tabula rasa'?

-Starting tabula rasa forgoes all the progress made in collecting knowledge and developing algorithms for processing it. Building on top of this using hybrid approaches allows bootstrapping rather than starting from scratch.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

5.0 / 5 (0 votes)