Installing Ollama - #2 of the Free Ollama Course

Summary

TL;DR: This video tutorial walks through installing Ollama, a tool for running large language models locally that uses a supported GPU when one is available. The instructor guides viewers through downloading and setting up Ollama on Windows, Linux, and macOS, and points to Discord communities for troubleshooting help. The video also introduces the command-line interface and previews future lessons on topics such as web UI integration and changing the default model directory.

Takeaways

- 😀 The video is part of a free course on how to use Ollama.

- 📚 A new video is released weekly to progressively teach more about Ollama.

- 👀 It's recommended to watch the video without following along first, then try the steps.

- 💻 The video covers downloading and installing Ollama on Windows, Linux, and macOS.

- 🔗 The video provides links to the list of supported GPUs and to Discord communities for support.

- 🖥️ For Windows, the presenter uses a Paperspace instance with a P6000 GPU for demonstration.

- 🔧 Ollama automatically uses a supported GPU when its drivers are properly configured.

- 📝 Ollama's interface is command-line based, accessed via terminal or PowerShell.

- 🔄 The `ollama run` command, followed by a model name, starts the REPL for interactive question-and-answer sessions.

- 🛠️ For Linux, an install script is provided; it can be reviewed before running, or Ollama can be installed manually instead.

- 🍎 macOS installation is straightforward with a universal app, but older Intel Macs may lack GPU support.

- 🔄 Common next steps like installing a web UI or changing the default model directory will be covered in future videos.

Q & A

What is the purpose of the Ollama course?

-The Ollama course aims to teach everything one needs to know about using Ollama technology and to help users become proficient with it.

How often are new videos released in the Ollama course?

-A new video in the Ollama course is released each week.

What is the recommended approach to follow along with the Ollama installation video?

-It is suggested to watch the video all the way through without following along, then try the installation and refer back to the video if any issues are encountered.

Where can one find the download link for Ollama?

-The download link for Ollama can be found in the middle of the ollama.com webpage.

What are the three operating systems for which Ollama provides installation options?

-Ollama provides installation options for macOS, Linux, and Windows.

Why is Paperspace mentioned in the video?

-Paperspace is mentioned as a reliable source of cloud-hosted Windows machines with named GPUs; the presenter uses one to demonstrate the Ollama installation on Windows.

What is the significance of the P6000 GPU in the context of the video?

-The P6000 GPU is significant because it is the GPU used in the Windows instance on Paperspace for demonstrating the Ollama installation.

What should one do if their GPU is supported by Ollama but they are not seeing it being used?

-If a supported GPU is not being utilized by Ollama, users can seek help through the course Discord or the Ollama Discord.
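
Before asking for help, it can be useful to capture what Ollama itself reports. The sketch below is for a POSIX shell (macOS or Linux terminal) and assumes the `ollama ps` subcommand, which is not mentioned in the video; its PROCESSOR column shows whether a loaded model is running on the GPU, the CPU, or a split of both.

```shell
#!/bin/sh
# Ask Ollama which models are loaded and where they are running.
if command -v ollama >/dev/null 2>&1; then
  # The PROCESSOR column reports GPU, CPU, or a split between the two.
  PS_OUTPUT="$(ollama ps 2>&1)"
else
  PS_OUTPUT="ollama not found on PATH"
fi

echo "$PS_OUTPUT"
```

A model only appears in this list while it is loaded, for example during an `ollama run` session in another terminal. Including the output in a Discord question gives helpers something concrete to work from.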

What is the user interface like for Ollama without additional installations?

-Without additional installations, Ollama's user interface is at the command line.

How can one start using Ollama after installation?

-After installation, open a terminal or PowerShell and run `ollama run` followed by the model name.
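
As a minimal sketch of that first run in a POSIX shell (macOS or Linux terminal), the snippet below checks that the `ollama` binary is on the PATH before suggesting the command. The model name `llama3.2` is an assumption chosen for illustration, not one named in the video; substitute any model you want to try.

```shell
#!/bin/sh
# Model name is an assumption for illustration; substitute any model you want.
MODEL="llama3.2"

# Confirm the ollama binary is reachable before trying to start a session.
if command -v ollama >/dev/null 2>&1; then
  NEXT_STEP="ollama run $MODEL"
else
  NEXT_STEP="install Ollama from ollama.com first"
fi

echo "Next step: $NEXT_STEP"
```

Typing `ollama run llama3.2` at the prompt downloads the model on first use and then opens the interactive REPL; `/bye` exits it.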

What is the recommended method for users who want to change the default directory for Ollama models?

-To change the default directory for Ollama models, users can set the environment variables described in the Ollama documentation.
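
A minimal sketch for a POSIX shell, assuming the `OLLAMA_MODELS` variable described in the Ollama FAQ and a hypothetical target directory (neither is named in the video). The Ollama server reads the variable when it starts, so it has to be present in the server's environment, and Ollama needs a restart for the change to take effect.

```shell
#!/bin/sh
# Hypothetical location; pick any directory with enough free disk space.
MODEL_DIR="${HOME}/ollama-models"

# Create the directory if it does not exist yet.
mkdir -p "$MODEL_DIR"

# Export the variable so an Ollama server started from this shell inherits it.
export OLLAMA_MODELS="$MODEL_DIR"

echo "OLLAMA_MODELS is set to $OLLAMA_MODELS"
```

On Linux installs where Ollama runs as a background service, exporting the variable in an interactive shell is likely not enough; the service configuration would need the same setting.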

What is the next step for users who wish to install a web UI for Ollama?

-Users interested in installing a web UI for Ollama should look forward to future videos in the course that will cover this topic.

Why is Apple Silicon mentioned as superior for running Ollama compared to Intel Macs?

-Apple Silicon is mentioned as superior because it offers better performance and compatibility with Ollama, whereas GPU support for older Intel Macs is non-existent.

What is the recommended action if a user encounters difficulties during the Ollama installation on Linux?

-If difficulties are encountered during the Ollama installation on Linux, users can review the script first, follow the manual install instructions, or seek help through the Discord channels.
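
Reviewing the script first can be sketched as below: the installer is saved to a file instead of being piped straight into a shell. The URL is the install script published on ollama.com; the local file name `ollama-install.sh` is a hypothetical choice. The snippet deliberately stops short of running the installer.

```shell
#!/bin/sh
# Install script URL as published on ollama.com.
INSTALL_URL="https://ollama.com/install.sh"
# Hypothetical local file name for the downloaded script.
SCRIPT_PATH="./ollama-install.sh"

# Download to a file instead of piping straight into a shell.
if curl -fsSL "$INSTALL_URL" -o "$SCRIPT_PATH"; then
  echo "Saved to $SCRIPT_PATH; read it before running: less $SCRIPT_PATH"
  echo "When satisfied, install with: sh $SCRIPT_PATH"
else
  echo "Download failed; see the manual install instructions instead" >&2
fi
```

Keeping the download and the install as separate steps leaves room to read what the script will change on the system, and to fall back to the manual instructions if something looks wrong for a particular distribution.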

How can one get help if they run into issues during the Ollama installation process?

-Users can get help by joining the two Discord communities linked in the video description.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

7. OCR A Level (H446) SLR2 - 1.1 GPUs and their uses

78000/- மானியத்துடன் தடையில்லா மின்சாரம் 365 நாட்களுக்கும் இலவசம்! PM Surya Ghar Scheme Solar Plant

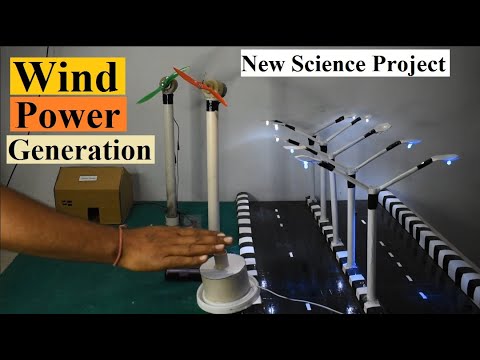

New Science Project, Free Energy Based Science Project, Automatic Street Light Project #science

host ALL your AI locally

How To Fail A Relationship (TMI)

Aprendendo rebobinar um gerador de energia parte 1

5.0 / 5 (0 votes)