Building a self-corrective coding assistant from scratch

Summary

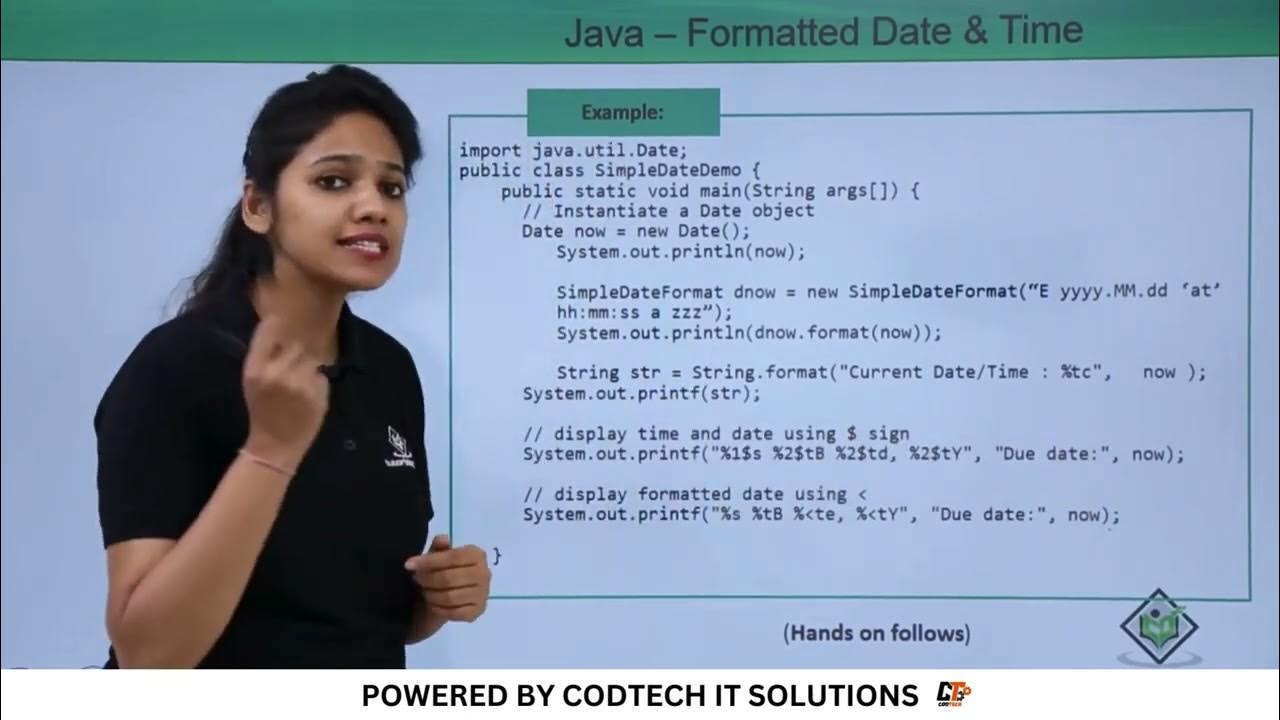

TLDR: The video shows how to use LangGraph to implement iterative code generation with error checking and handling, in the spirit of AlphaCodium. It covers loading documentation as context, structuring LLM outputs, and defining graph nodes that generate code, check imports and execution, and retry on failure. In one example, a coding mistake is fixed by passing the error back into the prompt to induce reflection. In experiments on a 20-question set, the graph raised code execution success from 55% to 80%, a gain of 25 percentage points. The video encourages viewers to try this simple yet effective technique of code generation with tests and reflection themselves.

Takeaways

- 😀 Introduced LangGraph as a way to build arbitrary logic flows and graphs with LLMs

- 👌 Showed how to implement iterative code generation and testing using LangGraph, inspired by the AlphaCodium paper

- 💡 Structured LLM outputs using Pydantic for easy testing and iteration on components

- 🔬 Evaluated code generation success rates with vs without LangGraph: execution success rose from 55% to 80% (about a 45% relative improvement)

- 📈 LangGraph enables feedback loops and reflection by re-prompting with prior errors

- 🌟 Built an end-to-end example flow for answering coding questions using LangGraph

- 📚 Ingested 60K tokens of documentation for code generation context

- ✅ Checked both imports and execution success of generated code before final output

- ❤️ Emphasized the simplicity of the idea and approach for reproducing key concepts from sophisticated systems like AlphaCodium

- 👍 Encouraged viewers to try out LangGraph flows for their own applications

Q & A

What is the key innovation introduced in the AlphaCodium paper for code generation?

- The AlphaCodium paper introduces the idea of flow engineering for code generation, where solutions are tested on public and AI-generated tests, and then iteratively improved based on the test results.

How does LangGraph allow building arbitrary graphs to represent logical flows?

- LangGraph allows defining nodes as functions in a workflow, specifying conditional edges that choose the next node based on the current output, and mapping those nodes and edges onto logical flows like the one in the code generation example.
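The node-and-conditional-edge idea can be sketched in plain Python. This is a minimal illustration, not LangGraph's actual API: `MiniGraph`, `END`, `MAX_ITERATIONS`, and the stubbed `generate` and `check_code` nodes are all hypothetical names introduced here, and the "check" simply pretends the first attempt fails.

```python
from typing import Callable, Dict, Union

State = Dict[str, object]
END = "__end__"          # hypothetical sentinel marking the end of the flow
MAX_ITERATIONS = 3       # cap on generation attempts, as in the example graph


class MiniGraph:
    """Toy stand-in for a graph of function nodes and conditional edges."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, Union[str, Callable[[State], str]]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Union[str, Callable[[State], str]]) -> None:
        # dst is either a fixed node name or a router function of the state.
        self.edges[src] = dst

    def run(self, entry: str, state: State) -> State:
        current = entry
        while current != END:
            # Each node returns a partial update that is merged into the state.
            state = {**state, **self.nodes[current](state)}
            nxt = self.edges[current]
            current = nxt(state) if callable(nxt) else nxt
        return state


def generate(state: State) -> State:
    # Stub for the LLM call: only the attempt counter is tracked here.
    return {"iterations": state["iterations"] + 1}


def check_code(state: State) -> State:
    # Stub check: pretend the first attempt fails and later attempts pass.
    failed = state["iterations"] < 2
    return {"error": "simulated failure" if failed else None}


def decide_to_finish(state: State) -> str:
    # Conditional edge: stop on success or when the attempt cap is reached.
    if state["error"] is None or state["iterations"] >= MAX_ITERATIONS:
        return END
    return "generate"


graph = MiniGraph()
graph.add_node("generate", generate)
graph.add_node("check_code", check_code)
graph.add_edge("generate", "check_code")
graph.add_edge("check_code", decide_to_finish)

final = graph.run("generate", {"iterations": 0, "error": None})
```

Here the router function plays the role of a conditional edge: it inspects the state after the check and either loops back to generation or ends the run.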

What is the benefit of using a structured output format from the generation node?

- Using a structured output format with distinct components makes it easy to implement tests and checks for aspects like imports and code execution, and to feed errors back into the regeneration process.
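A rough sketch of why separate components help is shown below. The video uses Pydantic for the structured output; a standard-library dataclass stands in for it here so the snippet has no dependencies, and the field names `prefix`, `imports`, and `code` as well as both check functions are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodeSolution:
    """Assumed shape of one structured answer from the generation node."""
    prefix: str   # plain-language description of the approach
    imports: str  # import statements only
    code: str     # the rest of the solution, without the imports


def check_imports(solution: CodeSolution) -> Optional[str]:
    """Run only the import block; return an error message or None."""
    try:
        exec(solution.imports, {})
    except Exception as exc:
        return f"Import check failed: {exc!r}"
    return None


def check_execution(solution: CodeSolution) -> Optional[str]:
    """Run imports plus code; return an error message or None."""
    # Note: exec on model-generated code should be sandboxed in real use.
    try:
        exec(f"{solution.imports}\n{solution.code}", {})
    except Exception as exc:
        return f"Execution check failed: {exc!r}"
    return None


good = CodeSolution("Compute a square root.", "import math", "value = math.sqrt(16)")
bad_import = CodeSolution("Use a missing module.", "import not_a_real_module_xyz", "value = 1")
bad_code = CodeSolution("Divide by zero.", "import math", "value = 1 / 0")

results = {
    "good": (check_imports(good), check_execution(good)),
    "bad_import": check_imports(bad_import),
    "bad_code": check_execution(bad_code),
}
```

Because the imports live in their own field, a missing package can be reported separately from a runtime failure in the body of the solution.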

How does the error handling and regeneration process work?

- When an error occurs while checking imports or executing code, it is appended to the prompt to provide context. The regeneration node then produces a new solution attempt, using the prior error information.
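The re-prompting step might look roughly like this. The function name `build_prompt`, its parameters, and the prompt wording are hypothetical; the only idea taken from the video is that the previous error is added to the prompt before regenerating.

```python
from typing import Optional


def build_prompt(
    question: str,
    context: str,
    previous_code: Optional[str] = None,
    error: Optional[str] = None,
) -> str:
    """Assemble the generation prompt, adding the prior failure on retries."""
    parts = [f"Documentation:\n{context}", f"Question:\n{question}"]
    if error is not None:
        # On a retry, show the model what it produced and how it failed.
        parts.append(
            "Previous attempt:\n"
            f"{previous_code}\n\n"
            f"It failed with this error:\n{error}\n\n"
            "Reflect on the error and return a corrected solution."
        )
    return "\n\n".join(parts)


first_prompt = build_prompt("How do I divide two numbers?", "(docs go here)")
retry_prompt = build_prompt(
    "How do I divide two numbers?",
    "(docs go here)",
    previous_code="value = 1 / 0",
    error="ZeroDivisionError('division by zero')",
)
```

The first call produces a plain prompt, while the retry carries both the failed code and its error so the model has something concrete to reflect on.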

What were the results of evaluating the LangGraph method on a 20 question dataset?

- While import check results were similar with and without LangGraph, code execution success improved from 55% to 80%, showing a significant benefit from the retry and reflection mechanism.
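As a quick check of what those figures mean, the arithmetic below separates the absolute gain from the relative one. The pass counts of 11 and 16 are not reported directly; they are inferred here from the stated percentages and the 20-question set size.

```python
total_questions = 20
passed_without_graph = 11  # inferred: 55% of 20 questions
passed_with_graph = 16     # inferred: 80% of 20 questions

rate_without = passed_without_graph / total_questions
rate_with = passed_with_graph / total_questions

# Absolute gain in percentage points.
point_gain = round((rate_with - rate_without) * 100)

# Relative gain over the baseline number of passing questions.
relative_gain = round(
    (passed_with_graph - passed_without_graph) / passed_without_graph * 100
)

print(f"{point_gain} percentage points, about {relative_gain}% relative")
```

So the same result can be described as a gain of 25 percentage points or as roughly a 45% relative improvement, depending on the baseline used.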

How many iterations does the graph allow before deciding to finish?

- The example graph allows up to 3 generation attempts before deciding to finish, which prevents arbitrarily long execution.

What size context is used for the generation node?

- The generation node ingests around 60,000 tokens of documentation on the LangChain Expression Language (LCEL) to use as context for answering questions.

What model architecture is used for the generation node?

- The example implements the generation node with a long-context OpenAI GPT-4 model, with the LCEL documentation passed in the prompt as context rather than used for fine-tuning.

What is the purpose of tracking the question iteration count?

- Tracking the number of generation attempts for each question makes it possible to finish execution after a set number of tries.

How could this approach be extended to more complex use cases?

- Possibilities include testing against larger public benchmarks, integrating more sophisticated testing frameworks, and using additional regeneration strategies.
