Google and responsible AI

Summary

TL;DR: This video script emphasizes the importance of responsible AI development at Google, highlighting the ethical responsibilities and challenges faced by technology providers. It discusses the potential impacts of AI, including fairness, bias, and accountability, and underscores the need for ethical AI practices. Google's approach includes rigorous assessments and reviews to align with AI principles, fostering trust and ensuring successful AI deployment. The script encourages organizations of all sizes to engage in responsible AI practices, stressing community collaboration and a collective value system to guide AI development. Completing the course contributes to advancing responsible AI practices amidst rapid AI adoption and innovation.

Takeaways

- 🌟 Technological innovation significantly improves our daily lives, but comes with great responsibility.

- 🚨 Concerns are growing about the unintended impacts of AI, in areas such as ML fairness, the perpetuation of historical biases, AI-driven unemployment, and accountability for AI decisions.

- 🤔 Ethical AI development is crucial to prevent unintended consequences, even in seemingly benign use cases.

- 📈 Responsible AI ensures that technology is beneficial and builds trust with users and stakeholders.

- 💡 Google's approach to AI involves rigorous assessments and reviews aligned with their AI Principles.

- 🔄 Building responsible AI is an iterative process that requires dedication and adaptability.

- 🚀 Small steps and regular reflection on company values are essential for responsible AI development.

- 🤝 Community collaboration is key to tackling the challenges of responsible AI development.

- 🔍 Robust processes are necessary to build trust, even if there are disagreements on decisions.

- 🌐 A culture of collective values and healthy deliberation guides responsible AI development.

Q & A

What is the main concern regarding AI innovation mentioned in the script?

- The main concern is the unintended or undesired impact of AI innovation, in areas such as ML fairness, the perpetuation of historical biases, AI-driven unemployment, and accountability for decisions made by AI.

Why is it important to develop AI technologies with ethics in mind?

- It is important because AI has the potential to affect many areas of society and people's daily lives. Developing AI with ethics in mind helps prevent ethical issues and unintended outcomes, and helps ensure that AI is beneficial.

What does 'Responsible AI' mean in the context of the script?

- 'Responsible AI' refers to the practice of ensuring AI systems are designed and deployed with ethics, fairness, and accountability in mind, even in seemingly innocuous or well-intentioned use cases.

How does Google integrate responsibility into AI deployments?

- Google builds responsibility into its AI deployments, which it says results in better models and builds trust with customers. It also uses a series of assessments and reviews to ensure rigor and consistency across product areas and geographies.

What is the relationship between responsible AI and successful AI according to Google's belief?

- Google believes that responsible AI equals successful AI, implying that the integration of ethical considerations and responsible practices leads to more successful AI deployments.

What is the role of AI Principles in Google's AI projects?

- Google's AI Principles serve as a guide to ensure that any project aligns with the company's ethical and responsible approach to AI development and deployment.

Why is it suggested that even small organizations can benefit from the course on responsible AI?

- The course is designed to guide organizations of any size, emphasizing that responsible AI is an iterative practice that requires dedication, discipline, and a willingness to learn and adjust over time, regardless of resource limitations.

What challenges might smaller organizations face when implementing responsible AI practices?

- Smaller organizations might feel overwhelmed or intimidated by the need to address new philosophical and practical problems, especially when resources are limited.

How does Google view its role in the community of AI users and developers?

- Google views itself as one voice in the community. It recognizes that it does not know everything and believes that tackling challenges collectively leads to the best outcomes in AI development and deployment.

What is the significance of community in ensuring responsible AI development according to the script?

- Community is significant because it represents a collective effort to tackle challenges in AI development. It fosters a culture based on shared values and healthy deliberation, which is crucial for guiding the development of responsible AI.

What is the importance of developing robust processes in AI development as mentioned in the script?

- Developing robust processes is important because people can trust a sound process even if they don't agree with the final decision. A transparent and reliable process is therefore key to responsible AI development.

Upgrade NowBrowse More Related Video

Introduction to Responsible AI

Course introduction

Eski GOOGLE CEO'su Eric Schmidt'in Yasaklanan Röportajı

รู้จัก Multimodal AI ทำงานได้กับข้อมูลหลายประเภท | EP.56 - #TechByTrueDigitalPodcast

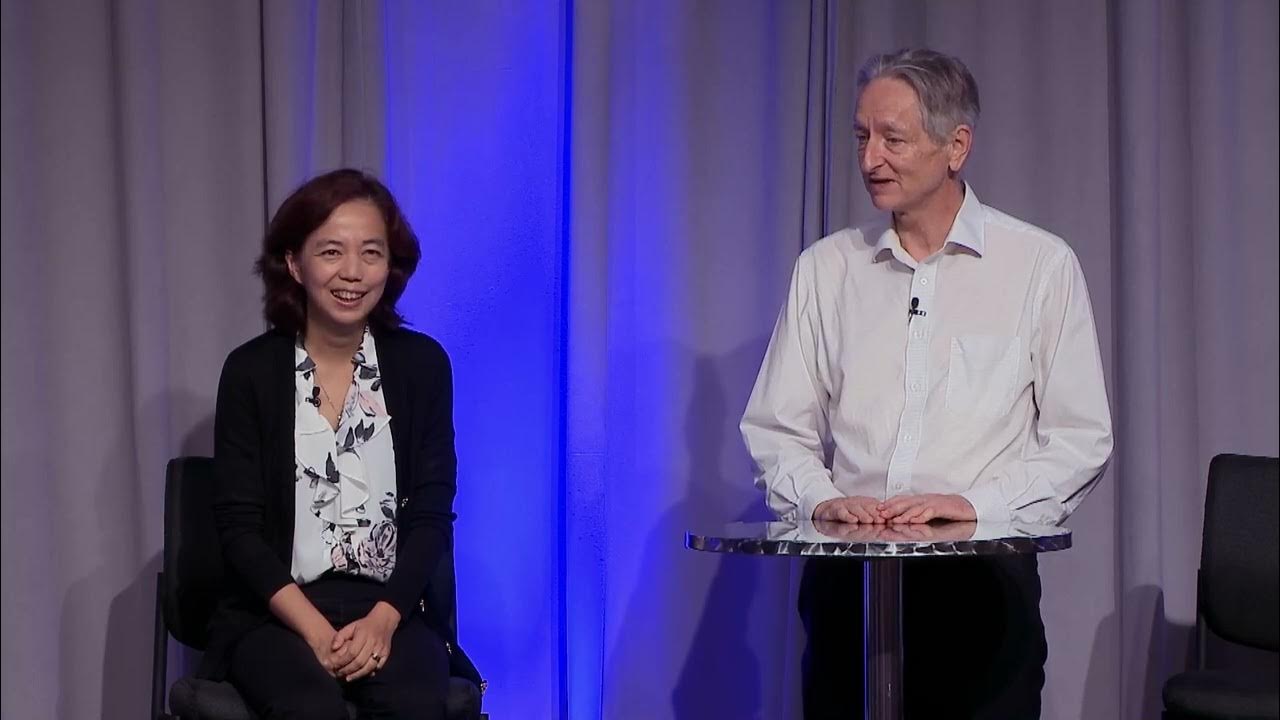

Geoffrey Hinton in conversation with Fei-Fei Li — Responsible AI development

The AI Governance Challenge | PulumiUP 2024

5.0 / 5 (0 votes)