Logit model explained: regression with binary variables (Excel)

Summary

TLDR: This video from Nettle, a platform for distance learning in business and finance, is hosted by Sava, who delves into the logistic regression model, a vital tool for estimating regression models with binary dependent variables. The tutorial covers the model's application in credit scoring (predicting loan defaults) and emphasizes the importance of variables such as homeownership and full-time employment. It guides viewers through estimating the model, optimizing the coefficients, and interpreting the results to assess credit risk, and concludes with a practical example of scoring a loan application.

Takeaways

- 📚 The script introduces the logit model, also known as logistic regression, as a statistical technique for estimating regression models with a categorical or binary dependent variable.

- 🏦 The logit model is commonly used in finance and economics for applications such as credit scoring, predicting recessions, and analyzing exam success rates.

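- 🔢 The model needs a sample that contains a substantial number of both zeros (non-defaults) and ones (defaults); if one outcome dominates overwhelmingly, there is too little information about the rarer outcome to estimate the model effectively.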

- 🏠 Two important categorical variables considered in credit scoring are homeownership and full-time employment status, which are used as predictors for loan default.

- 💰 Continuous variables like income, expenses, assets, and loan amounts are transformed into interpretable indicators, such as the natural logarithm of the ratio of expenses to income, to assess the likelihood of loan repayment.

- 📈 The logit model uses the logistic distribution function to estimate the probability of the dependent variable, ensuring the output is bounded between 0 and 1, suitable for probability estimation.

- 🔍 The model's coefficients are optimized by maximizing the log likelihood function rather than by minimizing the sum of squared residuals, as in ordinary least squares regression.

- 📊 The variance of the estimator is calculated as the inverse of the matrix product X'WX, where X holds the explanatory variables and the diagonal weight matrix W contains p(1 - p) for each observation; these weights account for the heteroskedasticity inherent in binary outcomes.

- 📉 The significance of the model's predictors is determined by calculating z-statistics and p-values, which show which coefficients are statistically significantly different from zero.

- 🏢 The script provides a practical example of how the logit model can be applied in a bank's credit scoring process to assess an individual's creditworthiness.

- 👍 The video concludes by emphasizing the importance of understanding the logit model for various applications in business, finance, and economics.
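For reference, the quantities named in the takeaways can be written in standard textbook notation. The symbols below (η_i for the weighted sum of observation i's explanatory variables, x_i including a constant, W for the weight matrix) are our own labels and not necessarily those used in the video.

```latex
\begin{aligned}
\eta_i &= \mathbf{x}_i^\top \boldsymbol\beta, \qquad
p_i = \Pr(y_i = 1 \mid \mathbf{x}_i) = \frac{e^{\eta_i}}{1 + e^{\eta_i}} = \frac{1}{1 + e^{-\eta_i}}, \\
\ln L(\boldsymbol\beta) &= \sum_{i=1}^{n} \bigl[\, y_i \ln p_i + (1 - y_i) \ln (1 - p_i) \,\bigr], \\
\widehat{\operatorname{Var}}(\hat{\boldsymbol\beta}) &= \bigl(X^\top W X\bigr)^{-1}, \qquad
W = \operatorname{diag}\bigl(p_1(1 - p_1), \dots, p_n(1 - p_n)\bigr), \\
z_j &= \frac{\hat\beta_j}{\operatorname{se}(\hat\beta_j)}, \qquad
\operatorname{se}(\hat\beta_j) = \sqrt{\bigl[(X^\top W X)^{-1}\bigr]_{jj}}.
\end{aligned}
```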

Q & A

What is the primary purpose of the logit model discussed in the video?

-The primary purpose of the logit model, also known as logistic regression, is to estimate regression models when the response variable is categorical or binary, which is common in fields like finance and economics.

Why is the logit model preferred over multiple linear regression for binary outcomes?

-The logit model is preferred because it restricts the estimated values of the dependent variable to be between zero and one, making it suitable for estimating probabilities, unlike multiple linear regression which can yield values outside this range.

What are some applications of the logit model mentioned in the video?

-Some applications include credit scoring, predicting recessions, and determining success or failure in exams. These applications often involve predicting a binary outcome based on various explanatory variables.

What is the significance of having a balanced sample in the logit model?

-A sample that contains a substantial number of both zeros and ones for the binary outcome is important for the logit model to work well. If one outcome makes up an overwhelming majority, the model has little information about the other outcome and becomes less effective.

What are the two main categorical variables considered in the video for predicting loan default?

-The two main categorical variables are homeownership and full-time employment status, as these are considered important factors when deciding whether to grant a loan.

How are income, expenses, assets, and loan amount transformed into explanatory variables for the logit model?

-They are transformed into interpretable indicators such as the natural logarithm of the ratio of expenses to income, leverage after the loan is granted, and the natural logarithm of the loan amount over typical income to measure repayment time.
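A minimal Python sketch of these transformations, with the two categorical variables coded as 0/1 dummies. The field names are hypothetical, and the definition of post-loan leverage (loan amount divided by assets plus the loan) is an assumption; the video's exact formulas may differ.

```python
import math

def loan_features(homeowner, full_time, income, expenses, assets, loan_amount):
    """Turn raw applicant data into logit explanatory variables.

    Hypothetical sketch: the leverage definition below is an assumption.
    """
    if min(income, expenses, loan_amount) <= 0 or assets < 0:
        raise ValueError("income, expenses, loan_amount must be > 0 and assets >= 0")
    return {
        "const": 1.0,                                  # intercept
        "homeowner": 1.0 if homeowner else 0.0,        # categorical -> dummy
        "full_time": 1.0 if full_time else 0.0,        # categorical -> dummy
        "ln_exp_income": math.log(expenses / income),  # spending burden
        # ASSUMED: debt share of the balance sheet once the loan is added
        "leverage": loan_amount / (assets + loan_amount),
        # loan measured in units of income: a rough repayment-time gauge
        "ln_loan_income": math.log(loan_amount / income),
    }

print(loan_features(True, True, income=50_000, expenses=30_000,
                    assets=60_000, loan_amount=25_000))
```

Taking logarithms of the ratios makes them symmetric: doubling and halving a ratio move the variable by the same amount in opposite directions.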

What is the logit transformation used for in the logit model?

-The transformation converts the logit, i.e. the weighted sum of the explanatory variables and their coefficients, into an estimated probability between zero and one: the exponent of the logit divided by one plus that exponent.
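The transformation can be sketched in Python as follows. The function names `logistic` and `logit` are our own; branching on the sign of z is a common trick to avoid overflow in `math.exp` and does not change the result.

```python
import math

def logistic(z):
    """Map a logit z (any real number) to a probability.

    Equivalent to exp(z) / (1 + exp(z)); the two branches only
    avoid overflow in math.exp when |z| is large.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def logit(p):
    """Inverse of logistic: the log odds of a probability 0 < p < 1."""
    return math.log(p / (1.0 - p))

for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"z = {z:5.1f} -> p = {logistic(z):.4f}")
```

Whatever value the weighted sum takes, the output stays between zero and one, which is exactly the property a linear regression on a 0/1 variable lacks.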

How is the optimal value of coefficients in the logit model determined?

-The optimal values of the coefficients are determined by maximizing the log likelihood function. This is done numerically with a solver (the video works in Excel), which searches for the coefficient values that best fit the model to the data.
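The video maximizes the log likelihood with a spreadsheet solver. As a stand-in, the sketch below uses Newton-Raphson iterations (equivalent to iteratively reweighted least squares), a standard way to maximize the logit log likelihood; it is not the video's procedure. The toy data are hypothetical: a constant plus a homeownership dummy, with three defaults among four non-homeowners and one default among four homeowners.

```python
import math

def log1pexp(z):
    """Numerically stable ln(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def probability(beta, xi):
    """P(y = 1 | x) = e^z / (1 + e^z) with z = x'beta."""
    z = sum(b * x for b, x in zip(beta, xi))
    return math.exp(z - log1pexp(z))

def log_likelihood(beta, X, y):
    """sum of y ln p + (1 - y) ln(1 - p), rewritten as y*z - ln(1 + e^z)."""
    total = 0.0
    for xi, yi in zip(X, y):
        z = sum(b * x for b, x in zip(beta, xi))
        total += yi * z - log1pexp(z)
    return total

def solve(A, b):
    """Solve A s = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def fit_logit(X, y, max_iter=50, tol=1e-10):
    """Maximize ln L by Newton-Raphson from beta = 0.

    Diverges if the data are perfectly separable (no finite maximum exists).
    """
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(max_iter):
        p = [probability(beta, xi) for xi in X]
        # Gradient of ln L: X'(y - p)
        grad = [sum((yi - pi) * xi[j] for xi, yi, pi in zip(X, y, p))
                for j in range(k)]
        # Negative Hessian: X'WX with W = diag(p(1 - p))
        info = [[sum(pi * (1 - pi) * xi[j] * xi[m] for xi, pi in zip(X, p))
                 for m in range(k)] for j in range(k)]
        step = solve(info, grad)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta

# Hypothetical toy data: columns are [constant, homeowner dummy]; y = 1 means default.
X = [[1.0, 0.0] for _ in range(4)] + [[1.0, 1.0] for _ in range(4)]
y = [1, 1, 1, 0, 1, 0, 0, 0]
beta = fit_logit(X, y)
print("beta =", beta, "ln L =", log_likelihood(beta, X, y))
```

Because this toy model has only a constant and one dummy, the result can be checked by hand: the intercept equals the log odds of default among non-homeowners, ln(0.75/0.25) = ln 3, and the slope equals the difference in log odds between the groups, ln(1/3) - ln 3 = -2 ln 3.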

What is the purpose of calculating the covariance matrix in the context of the logit model?

-The covariance matrix is used to estimate the variance of the estimator for the coefficients, which in turn allows for the calculation of standard errors and z-statistics to test the significance of the coefficients.
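A sketch of these calculations, assuming the coefficients have already been estimated. It builds X'WX with weights p(1 - p), inverts it, and derives standard errors, z-statistics and two-sided p-values from the standard normal distribution. The coefficients in the usage lines are the exact maximum likelihood estimates for a hypothetical toy sample (four non-homeowners with three defaults, four homeowners with one default), not results from the video.

```python
import math

def probability(beta, xi):
    """Logistic function of the linear predictor x'beta."""
    z = sum(b * x for b, x in zip(beta, xi))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def invert(A):
    """Inverse of a small square matrix by Gauss-Jordan elimination."""
    n = len(A)
    M = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        pv = M[col][col]
        M[col] = [v / pv for v in M[col]]
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [row[n:] for row in M]

def coefficient_table(beta, X):
    """Covariance matrix and (coef, se, z, p-value) rows for a fitted logit."""
    k = len(beta)
    p = [probability(beta, xi) for xi in X]
    w = [pi * (1.0 - pi) for pi in p]              # diagonal of W
    xtwx = [[sum(wi * xi[j] * xi[m] for wi, xi in zip(w, X)) for m in range(k)]
            for j in range(k)]
    cov = invert(xtwx)                              # Var(beta_hat) = (X'WX)^-1
    rows = []
    for j in range(k):
        se = math.sqrt(cov[j][j])
        z = beta[j] / se
        p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided, N(0, 1)
        rows.append((beta[j], se, z, p_value))
    return cov, rows

# Hypothetical toy data: columns are [constant, homeowner dummy].
X = [[1.0, 0.0] for _ in range(4)] + [[1.0, 1.0] for _ in range(4)]
beta = [math.log(3.0), -2.0 * math.log(3.0)]
cov, rows = coefficient_table(beta, X)
for name, (b, se, z, pv) in zip(["const", "homeowner"], rows):
    print(f"{name:10s} coef={b:8.4f} se={se:7.4f} z={z:7.3f} p={pv:.3f}")
```

The two-sided p-value 2(1 - Φ(|z|)) equals erfc(|z|/√2), so `math.erfc` is all that is needed from the normal distribution.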

How can the logit model be used to predict an individual's likelihood of defaulting on a loan?

-By inputting an individual's specific data into the model, such as homeownership status, employment status, income, expenses, assets, and loan amount, the model can calculate the probability of default, which can then be used to assess creditworthiness.
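Scoring an applicant then amounts to plugging the applicant's transformed variables into the fitted equation. In the sketch below, the coefficient values, the feature names, and the 0.2 approval cutoff are all hypothetical placeholders, not estimates or lending policies from the video.

```python
import math

# Hypothetical coefficients, for illustration only (NOT the video's estimates).
COEFFICIENTS = {
    "const": -1.0,
    "homeowner": -0.8,       # owning a home lowers the assumed default risk
    "full_time": -0.6,       # so does full-time employment
    "ln_exp_income": 1.2,    # a higher spending burden raises it
    "leverage": 1.5,
    "ln_loan_income": 0.4,
}

def probability_of_default(features, coefficients=COEFFICIENTS):
    """P(default) = 1 / (1 + exp(-z)), z = sum of coefficient * feature."""
    z = sum(coef * features[name] for name, coef in coefficients.items())
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def decide(prob_default, cutoff=0.2):
    """Hypothetical rule: approve when the estimated PD is below the cutoff."""
    return "approve" if prob_default < cutoff else "refer"

# Hypothetical applicant (income taken as annual income).
applicant = {
    "const": 1.0,
    "homeowner": 1.0,
    "full_time": 1.0,
    "ln_exp_income": math.log(0.6),   # expenses are 60% of income
    "leverage": 0.25,
    "ln_loan_income": math.log(0.5),  # loan equals half a year's income
}
prob_default = probability_of_default(applicant)
print(f"PD = {prob_default:.3f} -> {decide(prob_default)}")
```

A missing feature raises a `KeyError` rather than being silently treated as zero, which guards against scoring an incomplete application.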

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Konsep Dasar Regresi Logistik

Lec-5: Logistic Regression with Simplest & Easiest Example | Machine Learning

Statistik Terapan: Regresi Logistik penjelasan singkat

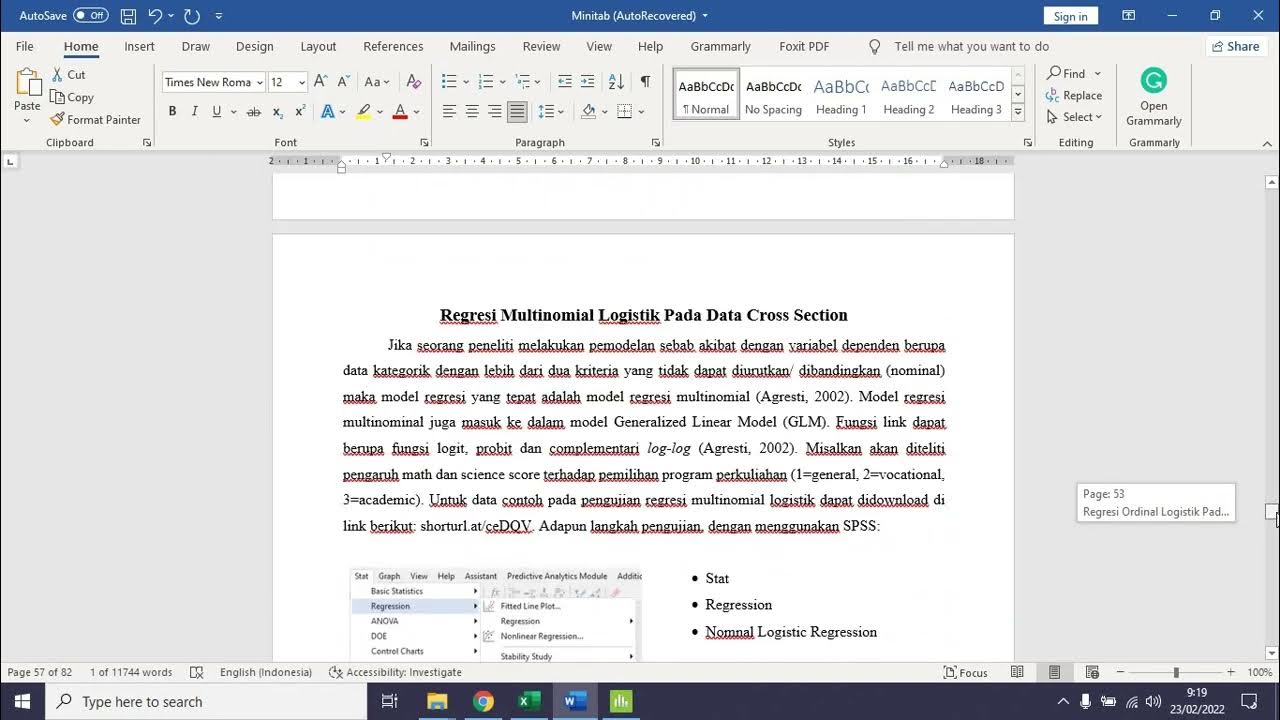

Regresi Ordinal dan Multinomial Logistik Pada Data Crosssection dengan Minitab

Modul 12 (StatSos2) - Konsep Dasar Regresi Linear Sederhana

PENGERTIAN REGRESI LINEAR

5.0 / 5 (0 votes)