Lab Exp07: Prevention of Race Conditions Using a Mutex in a Multithreaded C Program

Summary

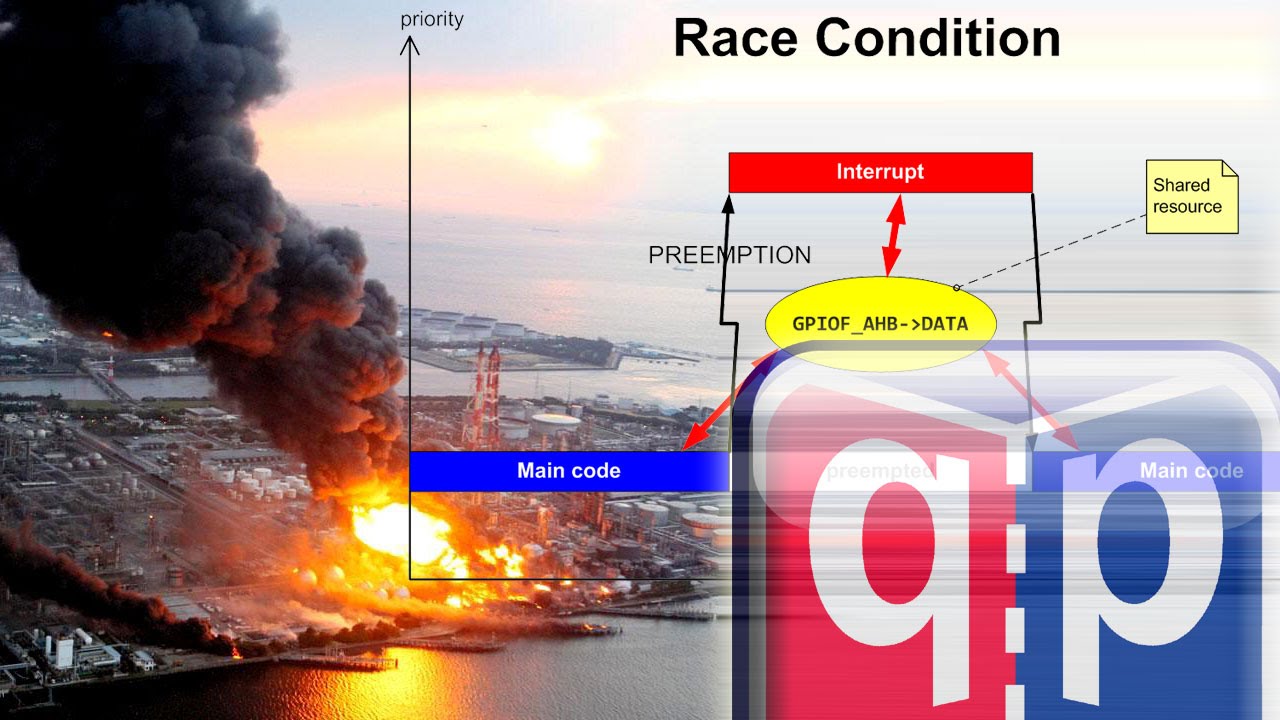

TLDR: The video discusses race conditions in multithreaded programming. It demonstrates how a shared variable ends up with the wrong value when two threads increment it without synchronization and a context switch lands in the middle of an increment. It then resolves the problem with a mutex, a synchronization mechanism that enforces mutual exclusion by allowing only one thread at a time to access the shared resource. The video closes with a demonstration that, with the mutex in place, the final value of the shared variable consistently reaches the expected two million, regardless of thread execution order.

Takeaways

- 🔒 The script discusses the problem of race conditions in multi-threaded programming.

- 📝 The issue arises when two threads try to increment a shared variable, expecting a final value of two million but getting inconsistent results.

- 👷‍♂️ The script demonstrates how to resolve race conditions using mutexes, which provide mutual exclusion for shared resources.

- 🔨 A mutex is declared as a `pthread_mutex_t` variable and initialized with `pthread_mutex_init` so that access to the shared data can be made safe.

- 🔒🔒 Before accessing the shared variable, threads must lock the mutex with `pthread_mutex_lock`.

- 🔓 After updating the shared variable, threads must unlock the mutex with `pthread_mutex_unlock`.

- 🛠️ The script shows the implementation of mutex locking within the threads' increment loop to prevent race conditions.

- 🔄 The use of mutex ensures that only one thread can update the shared variable at a time, blocking others until the lock is released.

- 💡 The script illustrates that with mutex locking, the final value of the shared variable consistently reaches the expected two million.

- 🔄 The script mentions that the order of thread execution may vary, but the use of mutex ensures a consistent outcome.

- 🚀 The script concludes by stating that mutexes and semaphores can effectively address race conditions, with a follow-up session planned to discuss semaphores.
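The lock, update, unlock pattern described in the takeaways can be sketched as follows. This is a minimal illustration rather than the code from the video: the names `shared_counter`, `counter_lock`, `increment_worker`, and `run_locked_counter` are hypothetical, and the iteration count is passed in as a parameter.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical names; the video's own identifiers are not shown in this summary. */
static long shared_counter = 0;
static pthread_mutex_t counter_lock;

/* Each worker increments the shared counter *(long *)arg times. */
static void *increment_worker(void *arg)
{
    long iterations = *(long *)arg;
    for (long i = 0; i < iterations; i++) {
        pthread_mutex_lock(&counter_lock);   /* enter the critical section */
        shared_counter++;                    /* only one thread runs this at a time */
        pthread_mutex_unlock(&counter_lock); /* leave the critical section */
    }
    return NULL;
}

/* Runs two workers and returns the final counter value, or -1 on error. */
long run_locked_counter(long iterations_per_thread)
{
    pthread_t t1, t2;

    shared_counter = 0;
    if (pthread_mutex_init(&counter_lock, NULL) != 0)
        return -1;

    if (pthread_create(&t1, NULL, increment_worker, &iterations_per_thread) != 0) {
        pthread_mutex_destroy(&counter_lock);
        return -1;
    }
    if (pthread_create(&t2, NULL, increment_worker, &iterations_per_thread) != 0) {
        pthread_join(t1, NULL);
        pthread_mutex_destroy(&counter_lock);
        return -1;
    }

    /* Wait for both workers before reading the result. */
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    pthread_mutex_destroy(&counter_lock);
    return shared_counter;
}
```

Calling `run_locked_counter(1000000)` from `main` corresponds to the two-million scenario in the video. On most toolchains the program needs to be built with thread support, for example `gcc -pthread`.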

Q & A

What is the primary issue discussed in the video script?

- The primary issue is the race condition in multithreaded programming: two or more threads access a shared variable at the same time without synchronization, which leads to unpredictable results.
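Why unsynchronized access gives wrong results becomes clearer once an increment is seen as three steps: load, add, store. The sketch below replays one unlucky interleaving of two logical threads inside a single ordinary function, so it is deterministic. The function name `simulate_lost_update` and the variables `reg_a` and `reg_b` are illustrative and do not come from the video.

```c
/* Simulates, in one thread, the three machine-level steps of `shared++`
   as performed by two logical threads A and B. */
int simulate_lost_update(void)
{
    int shared = 0;

    int reg_a = shared;   /* A: load  (reads 0) */
    int reg_b = shared;   /* B: load  (reads 0, before A has stored) */
    reg_a = reg_a + 1;    /* A: add */
    reg_b = reg_b + 1;    /* B: add */
    shared = reg_a;       /* A: store (shared becomes 1) */
    shared = reg_b;       /* B: store (writes 1 again, A's update is lost) */

    return shared;        /* 1, although two increments were executed */
}
```

Two increments ran, yet the value only went up by one. A mutex prevents this by making the load, add, and store of one thread complete before the other thread can start its own.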

What was the expected outcome if the shared variable was incremented correctly by two threads one million times each?

- The expected final value of the shared variable is two million, since each of the two threads increments it one million times.

What is the actual result observed when the race condition occurs?

- When the race condition occurs, the final value of the shared variable is not consistent: it varies from one execution to the next and deviates from the expected two million.
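The inconsistent result can be reproduced with a sketch like the one below. It is an assumption-laden variant of the demonstration, not the video's code: a plain `shared++` on an ordinary variable from two threads is formally undefined behavior in C, so this version splits the increment into a separate C11 atomic load and atomic store. That keeps the program well defined while preserving the gap in which the other thread can interfere. All names (`unsafe_counter`, `unsafe_worker`, `run_unprotected_counter`) are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical names; C11 atomics are used only to keep the demo well defined. */
static atomic_long unsafe_counter;

static void *unsafe_worker(void *arg)
{
    long iterations = *(long *)arg;
    for (long i = 0; i < iterations; i++) {
        /* Separate load and store: the other thread can run in between,
           so its update may be overwritten (a lost update). */
        long seen = atomic_load_explicit(&unsafe_counter, memory_order_relaxed);
        atomic_store_explicit(&unsafe_counter, seen + 1, memory_order_relaxed);
    }
    return NULL;
}

/* Runs two unsynchronized workers; returns the final value, or -1 on error. */
long run_unprotected_counter(long iterations_per_thread)
{
    pthread_t t1, t2;

    atomic_store(&unsafe_counter, 0);
    if (pthread_create(&t1, NULL, unsafe_worker, &iterations_per_thread) != 0)
        return -1;
    if (pthread_create(&t2, NULL, unsafe_worker, &iterations_per_thread) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return atomic_load(&unsafe_counter);
}
```

With one million iterations per thread, the returned value can never exceed two million and, on a multicore machine, is often lower and different on each run. How much lower depends on the hardware and the scheduler.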

How is a mutex used to solve the race condition problem?

- A mutex (mutual exclusion) ensures that only one thread can access a shared resource at a time. Threads lock it before touching the shared variable and unlock it afterwards, which prevents simultaneous access and thus avoids the race condition.

What type is used to declare a mutex variable in the script?

- A mutex variable is declared with the type `pthread_mutex_t`. Note that this is a data type, not a function.

What function is used to initialize a mutex variable?

- A mutex variable is initialized with `pthread_mutex_init`, which takes the address of the mutex variable and a pointer to a mutex attributes object; passing `NULL` as the second argument selects the default attributes.
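A short sketch of declaration and initialization, assuming default attributes, is shown below. Besides `pthread_mutex_init`, it also shows the static initializer `PTHREAD_MUTEX_INITIALIZER` and the matching `pthread_mutex_destroy` call, neither of which is mentioned in the summary. The names `static_lock`, `dynamic_lock`, and `init_and_destroy_demo` are hypothetical.

```c
#include <pthread.h>
#include <stddef.h>

/* Static initialization: no pthread_mutex_init call is needed for default attributes. */
static pthread_mutex_t static_lock = PTHREAD_MUTEX_INITIALIZER;

/* Returns 0 if every call succeeded, otherwise the first non-zero error code. */
int init_and_destroy_demo(void)
{
    pthread_mutex_t dynamic_lock;   /* declaration: pthread_mutex_t is a type */
    int rc;

    /* Dynamic initialization; NULL selects the default attributes. */
    rc = pthread_mutex_init(&dynamic_lock, NULL);
    if (rc != 0)
        return rc;

    rc = pthread_mutex_lock(&dynamic_lock);
    if (rc == 0)
        rc = pthread_mutex_unlock(&dynamic_lock);

    /* A dynamically initialized mutex should be destroyed once it is no longer used. */
    int destroy_rc = pthread_mutex_destroy(&dynamic_lock);
    if (rc == 0)
        rc = destroy_rc;
    if (rc != 0)
        return rc;

    /* The statically initialized mutex is usable right away. */
    rc = pthread_mutex_lock(&static_lock);
    if (rc == 0)
        rc = pthread_mutex_unlock(&static_lock);
    return rc;
}
```

The pthread mutex functions report failure through their return value rather than through `errno`, which is why the sketch checks each result against 0.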

What are the two functions used to control access to the shared variable using a mutex?

- The two functions are `pthread_mutex_lock` and `pthread_mutex_unlock`.

What happens when a thread calls pthread_mutex_lock?

- When a thread calls `pthread_mutex_lock`, it attempts to acquire the lock on the mutex. If the lock is already held by another thread, the calling thread is blocked until the lock becomes available.
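Blocking itself is hard to observe in a small example, so the sketch below uses `pthread_mutex_trylock` as a non-blocking probe instead. This function is not mentioned in the video; it is introduced here only to show that a second thread cannot acquire a mutex that is already held. Where `pthread_mutex_lock` would wait, `pthread_mutex_trylock` returns `EBUSY` immediately. The names `probe_lock`, `probe_worker`, `probe_from_other_thread`, and `lock_is_exclusive` are hypothetical.

```c
#include <errno.h>
#include <pthread.h>
#include <stddef.h>

static pthread_mutex_t probe_lock = PTHREAD_MUTEX_INITIALIZER;

/* Tries to take the lock without blocking and stores the result code. */
static void *probe_worker(void *arg)
{
    int *result = arg;
    *result = pthread_mutex_trylock(&probe_lock);
    if (*result == 0)
        pthread_mutex_unlock(&probe_lock);  /* release again if we got it */
    return NULL;
}

/* Runs probe_worker in a new thread; returns its trylock result,
   or -1 if the thread could not be created. */
static int probe_from_other_thread(void)
{
    pthread_t t;
    int result = -1;
    if (pthread_create(&t, NULL, probe_worker, &result) != 0)
        return -1;
    pthread_join(t, NULL);
    return result;
}

/* Returns 1 if the probe saw EBUSY while the lock was held
   and succeeded (0) after the lock was released. */
int lock_is_exclusive(void)
{
    pthread_mutex_lock(&probe_lock);
    int while_held = probe_from_other_thread();    /* lock held by this thread */
    pthread_mutex_unlock(&probe_lock);
    int after_release = probe_from_other_thread(); /* lock is free now */
    return while_held == EBUSY && after_release == 0;
}
```

In the counter program from the video, the second thread calls `pthread_mutex_lock` at the point where this probe gets `EBUSY`, and simply waits there until the first thread unlocks.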

How does using a mutex ensure the correct final value of the shared variable?

- Using a mutex ensures that only one thread can update the shared variable at a time, preventing simultaneous updates that could lead to incorrect values. Because the updates are serialized, no increment is lost and the final value is the expected two million.

What is the next topic the speaker mentions will be discussed in the next session?

- The next session will cover semaphores, another synchronization mechanism that handles the same kind of problem as mutexes.
