Feedforward Explained - Neural Networks From Scratch Part 1

Summary

TLDR: This video introduces neural networks from scratch, focusing on understanding them and implementing them in Python. It explains the basic structure of a neural network (inputs, hidden layers, and outputs) and walks through the feed-forward process, in which inputs are propagated through the network to produce outputs. It covers key concepts such as artificial neurons, weights, biases, activation functions, and matrix-based computation, and shows how vectorization enables efficient calculations for many inputs and neurons. The video also clarifies notation, weight-matrix shapes, and why dimensions must match. This foundation sets the stage for cost functions, gradient descent, and backpropagation in later videos.

Takeaways

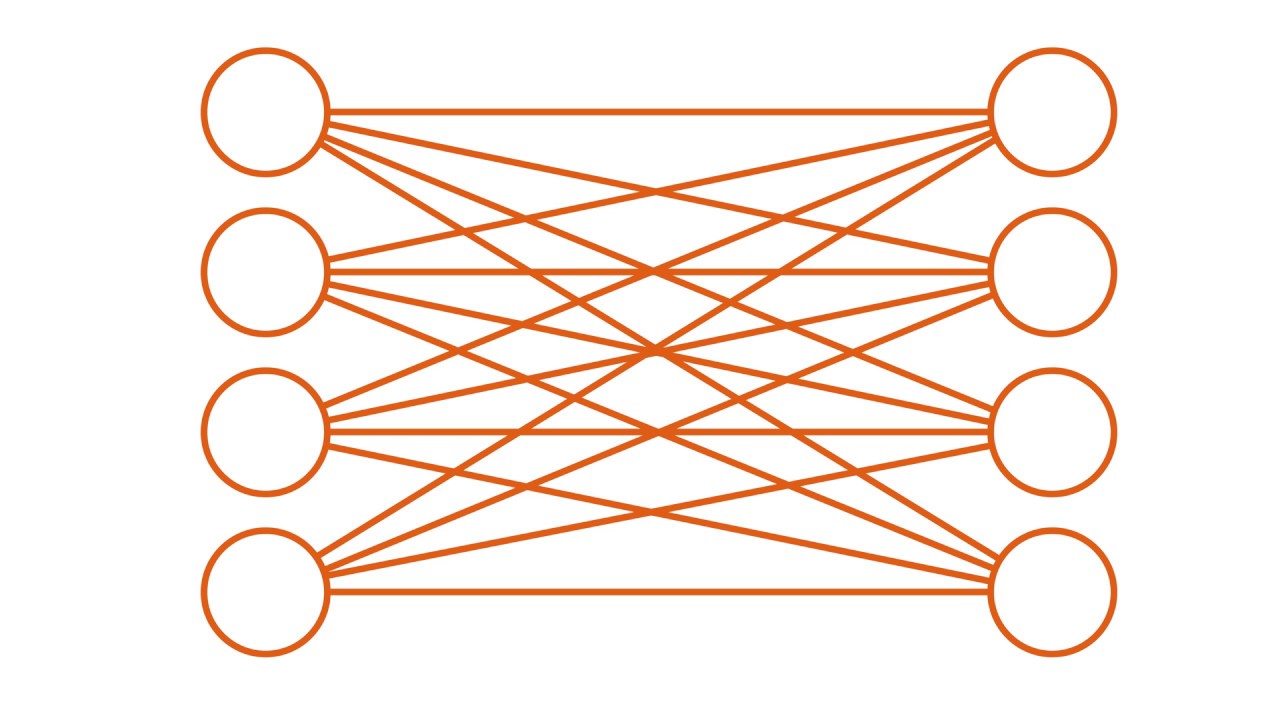

- 🧠 Neural networks consist of input layers, hidden layers, and output layers, and learn by minimizing the difference between predicted and actual outputs.

- ⚡ The feed-forward process involves propagating inputs through the network to compute outputs layer by layer.

- 🔢 A single neuron calculates a weighted sum of inputs plus a bias and applies an activation function to determine its output.

- 📏 Notation conventions: superscripts denote layer numbers, and subscripts denote indices within a layer (e.g., W^[l]_jk is the weight from input k to neuron j in layer l).

- 🔗 For multiple inputs, a neuron's weighted sum can be computed efficiently as the dot product of its weight vector and input vector plus the bias; the activation function is then applied to that result.

- 📊 For multiple neurons in a layer, weights are represented as a matrix, biases as a vector, and outputs as a column vector.

- 💡 The general feed-forward equations for any layer l are: Z^[l] = W^[l]·A^[l-1] + B^[l] and A^[l] = σ(Z^[l]), where σ is the activation function.

- 📐 Proper weight matrix dimensions are critical: rows = number of neurons, columns = number of inputs to the layer.

- 🚀 Vectorized computations allow multiple input samples to be processed simultaneously without loops, improving efficiency.

- 🔍 Understanding matrix shapes and dot products is essential for debugging and implementing neural networks from scratch.

- 🎯 The goal of training is to adjust the weights and biases, using gradients computed by backpropagation, so that the network's output approaches the actual output.
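The shape rule from the takeaways (rows = neurons, columns = inputs to the layer) can be made concrete by allocating the parameters of a small network. This is a minimal sketch assuming NumPy; the helper name `init_params`, the layer sizes `[2, 3, 1]`, and the choice of small random weights with zero biases are illustrative assumptions, not taken from the video.

```python
import numpy as np

def init_params(layer_sizes, seed=0):
    """Hypothetical helper: create W^[l] and B^[l] for every layer l >= 1.

    layer_sizes[0] is the number of inputs; layer_sizes[l] is the number of
    neurons in layer l. W^[l] has shape (neurons in l, neurons in l-1) and
    B^[l] is a column vector holding one bias per neuron.
    """
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_sizes)):
        n_out, n_in = layer_sizes[l], layer_sizes[l - 1]
        params[f"W{l}"] = rng.standard_normal((n_out, n_in)) * 0.1  # small random weights
        params[f"B{l}"] = np.zeros((n_out, 1))                       # one bias per neuron
    return params

params = init_params([2, 3, 1])
for name, value in params.items():
    print(name, value.shape)
# W1 (3, 2)
# B1 (3, 1)
# W2 (1, 3)
# B2 (1, 1)
```

Each weight matrix's column count equals the previous layer's neuron count, which is exactly what makes the products in the feed-forward equation line up.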

Q & A

What is the goal of this series on neural networks?

-The goal of this series is to explain how neural networks work and to implement one from scratch using only Python. The series covers the basic concepts, followed by practical implementation.

What happens during the feed-forward process in a neural network?

-In the feed-forward process, inputs are propagated through the network from the input layer, through the hidden layers, and finally to the output layer, producing the network's prediction. During training, this output is compared with the actual output, and the difference (the cost) is used to adjust the weights and biases.
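A forward pass is the per-layer equation applied repeatedly, with each layer's output becoming the next layer's input. The sketch below assumes NumPy, a sigmoid activation in every layer, and a tiny 2-3-1 network with hand-picked (hypothetical) weights; the function names `sigmoid` and `feed_forward` are illustrative.

```python
import numpy as np

def sigmoid(z):
    # Element-wise logistic function, squashing each value into (0, 1).
    return 1.0 / (1.0 + np.exp(-z))

def feed_forward(x, weights, biases):
    """Propagate the input column vector x through each layer in turn."""
    a = x                                  # A^[0] is the input itself
    for W, B in zip(weights, biases):
        z = W @ a + B                      # Z^[l] = W^[l] A^[l-1] + B^[l]
        a = sigmoid(z)                     # A^[l] = sigma(Z^[l])
    return a                               # output of the last layer

# Hypothetical parameters for 2 inputs -> 3 hidden neurons -> 1 output.
weights = [
    np.array([[0.2, -0.4],
              [0.7,  0.1],
              [-0.3, 0.5]]),               # W^[1]: shape (3, 2)
    np.array([[0.6, -0.2, 0.3]]),          # W^[2]: shape (1, 3)
]
biases = [
    np.array([[0.0], [0.1], [-0.1]]),      # B^[1]: shape (3, 1)
    np.array([[0.05]]),                    # B^[2]: shape (1, 1)
]

x = np.array([[1.0], [0.5]])               # one sample as a (2, 1) column
y_hat = feed_forward(x, weights, biases)
print(y_hat.shape)  # (1, 1)
```

The loop runs over layers, not over neurons or samples; everything inside a layer is handled by one matrix product.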

How does an artificial neuron compute its output?

-An artificial neuron computes its output by calculating a weighted sum of its inputs, adding a bias term, and passing the result through an activation function. The activation function sets the neuron's output level, often described loosely as whether (or how strongly) the neuron 'fires'.
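For a single neuron, the computation fits in a few lines of plain Python. This sketch assumes a sigmoid activation (one common choice; other activations work the same way), and the input, weight, and bias values are made up for illustration.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias   # pre-activation
    return 1.0 / (1.0 + math.exp(-z))                        # sigmoid activation

# z = 0.5*1.0 + (-0.25)*2.0 + 0.1 = 0.1, and sigmoid(0.1) is about 0.525
print(neuron_output([1.0, 2.0], [0.5, -0.25], 0.1))
```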

What notation is used to represent layers, weights, and biases in the series?

-Superscripts denote the layer number (e.g., W^[1] for the weights in layer 1), and subscripts are used for indexing (e.g., W^[1]_11 for the weight from input 1 to neuron 1 in layer 1). This notation makes it clear which layer and which neuron each quantity belongs to.
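To make the indexing concrete, consider a hypothetical first layer with three neurons and two inputs. Under the convention used in this series (one row per neuron, one column per input), its weights and biases would be laid out as:

```latex
W^{[1]} =
\begin{bmatrix}
W^{[1]}_{11} & W^{[1]}_{12} \\
W^{[1]}_{21} & W^{[1]}_{22} \\
W^{[1]}_{31} & W^{[1]}_{32}
\end{bmatrix},
\qquad
B^{[1]} =
\begin{bmatrix} b^{[1]}_{1} \\ b^{[1]}_{2} \\ b^{[1]}_{3} \end{bmatrix}
```

Here W^{[1]}_{jk} is the weight from input k to neuron j. In zero-indexed code such as NumPy, the same entry would be read as `W1[j - 1, k - 1]`.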

How is the dot product used in the feed-forward process?

-The dot product expresses the weighted sum of inputs compactly. Instead of multiplying each input by its weight and adding the products one term at a time, the weighted sum is written as the dot product of the weight vector and the input vector, which maps to a single vectorized operation and is therefore more efficient.
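The equivalence between the term-by-term weighted sum and the dot product is easy to check numerically. This NumPy sketch uses arbitrary example values for one neuron with three inputs.

```python
import numpy as np

w = np.array([0.2, -0.5, 0.1])   # weights of one neuron (example values)
x = np.array([1.0, 2.0, 3.0])    # its inputs
b = 0.4                          # its bias

# Term by term: multiply each input by its weight, then add everything up.
z_loop = 0.0
for w_i, x_i in zip(w, x):
    z_loop += w_i * x_i
z_loop += b

# The same quantity as a single dot product.
z_dot = np.dot(w, x) + b

print(np.isclose(z_loop, z_dot))  # True
```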

What is the benefit of using matrices in neural network computations?

-Using matrices allows for efficient computation, especially when dealing with multiple inputs and neurons. Matrix operations eliminate the need for loops over individual samples and enable vectorized calculations, making the process faster and more scalable.
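With several neurons in a layer, stacking each neuron's weight vector as a row of a matrix turns the per-neuron loop into one matrix-vector product. This is a sketch assuming NumPy, with random example values for a layer of four neurons receiving three inputs.

```python
import numpy as np

rng = np.random.default_rng(42)
W = rng.standard_normal((4, 3))   # 4 neurons, 3 inputs: one row per neuron
b = rng.standard_normal((4, 1))   # one bias per neuron, as a column vector
x = rng.standard_normal((3, 1))   # a single input sample as a column vector

# Loop version: one dot product per neuron.
z_loop = np.zeros((4, 1))
for j in range(W.shape[0]):
    z_loop[j, 0] = W[j] @ x[:, 0] + b[j, 0]

# Vectorized version: the whole layer at once.
z_vec = W @ x + b

print(np.allclose(z_loop, z_vec))  # True
```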

What is the significance of the shape of the weight matrix?

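-The shape of the weight matrix determines whether the matrix multiplication is valid. The number of rows equals the number of neurons in the layer, and the number of columns equals the number of inputs to the layer (the number of neurons in the previous layer). For a layer with n_l neurons receiving n_{l-1} inputs, multiplying the (n_l × n_{l-1}) weight matrix by an (n_{l-1} × 1) input vector yields an (n_l × 1) vector, one value per neuron.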
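The shape rule can be written out as dimension bookkeeping. Using n_l for the number of neurons in layer l and m for the number of samples processed together (notation introduced here for illustration; m = 1 for a single sample):

```latex
\underbrace{W^{[l]}}_{n_l \times n_{l-1}}
\;\underbrace{A^{[l-1]}}_{n_{l-1} \times m}
\;+\;
\underbrace{B^{[l]}}_{n_l \times 1}
\;=\;
\underbrace{Z^{[l]}}_{n_l \times m}
```

The inner dimensions (both n_{l-1}) must match for the product to exist, and the outer dimensions give the shape of Z^{[l]}. In NumPy-style code, adding the n_l × 1 bias to an n_l × m matrix relies on broadcasting the bias across the m columns.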

How does the network handle multiple input samples simultaneously?

-For multiple input samples, the input is represented as a matrix where each column corresponds to one sample. The network can process all samples at once using matrix multiplication, which speeds up the computation and avoids the need for loops.
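Processing a batch amounts to stacking samples as columns. This NumPy sketch uses random example values (3 inputs, 4 neurons, 5 samples) and checks the batched result against looping over the samples one at a time; the bias column is broadcast across all sample columns.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((4, 3))   # layer with 4 neurons and 3 inputs
b = rng.standard_normal((4, 1))   # one bias per neuron
X = rng.standard_normal((3, 5))   # 5 samples, one per column

# Looping over samples: one matrix-vector product per column.
Z_loop = np.hstack([W @ X[:, [i]] + b for i in range(X.shape[1])])

# Batched: a single matrix-matrix product; b broadcasts across the 5 columns.
Z_batch = W @ X + b

print(Z_batch.shape)                 # (4, 5)
print(np.allclose(Z_loop, Z_batch))  # True
```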

What is the key equation for feed-forward in a neural network with multiple layers?

-The key feed-forward equation is Z^[l] = W^[l] A^[l-1] + B^[l], where Z^[l] is the weighted sum of inputs for layer l, W^[l] is the weight matrix for layer l, A^[l-1] is the output of the previous layer, and B^[l] is the bias term for layer l. The layer's output is then A^[l] = σ(Z^[l]), where σ is the activation function.
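Written out entry by entry for a single sample, the same equation says that each neuron j in layer l combines every output k of the previous layer. Here n_{l-1} denotes the number of neurons in layer l-1 (notation introduced for illustration):

```latex
z^{[l]}_{j} = \sum_{k=1}^{n_{l-1}} W^{[l]}_{jk}\, a^{[l-1]}_{k} + b^{[l]}_{j},
\qquad
a^{[l]}_{j} = \sigma\!\left(z^{[l]}_{j}\right)
```

Stacking these for j = 1, ..., n_l recovers the matrix form Z^{[l]} = W^{[l]} A^{[l-1]} + B^{[l]}, with A^{[0]} taken to be the input.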

What are the challenges in implementing neural networks and how are they addressed?

-A common challenge when implementing neural networks is making sure matrix dimensions line up for every product: the number of columns of W^[l] must equal the number of rows of A^[l-1], and B^[l] must hold one bias per neuron. This is addressed by carefully tracking the shapes of the weight matrices, inputs, and bias vectors at every layer.
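NumPy's `@` operator already refuses to multiply arrays whose inner dimensions differ, but its error does not say which layer failed. One defensive habit, sketched below with a hypothetical helper `checked_layer` (not from the video), is to validate shapes before multiplying so the error message names the layer.

```python
import numpy as np

def checked_layer(W, A_prev, B, layer):
    """Hypothetical helper: return W @ A_prev + B, but validate shapes first."""
    n_out, n_in = W.shape
    if A_prev.shape[0] != n_in:
        raise ValueError(
            f"layer {layer}: W has {n_in} columns but A_prev has {A_prev.shape[0]} rows")
    if B.shape != (n_out, 1):
        raise ValueError(
            f"layer {layer}: B should have shape ({n_out}, 1), got {B.shape}")
    return W @ A_prev + B

W = np.ones((3, 2))   # 3 neurons, 2 inputs
B = np.zeros((3, 1))  # one bias per neuron

print(checked_layer(W, np.ones((2, 4)), B, layer=1).shape)  # (3, 4)

try:
    checked_layer(W, np.ones((3, 4)), B, layer=1)  # wrong number of input rows
except ValueError as err:
    print(err)  # layer 1: W has 2 columns but A_prev has 3 rows
```

Explicit `raise` is used instead of `assert` so the checks still run when Python is started with optimizations (`-O`), which disables assertions.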
