Deep Learning(CS7015): Lec 1.4 From Cats to Convolutional Neural Networks

Summary

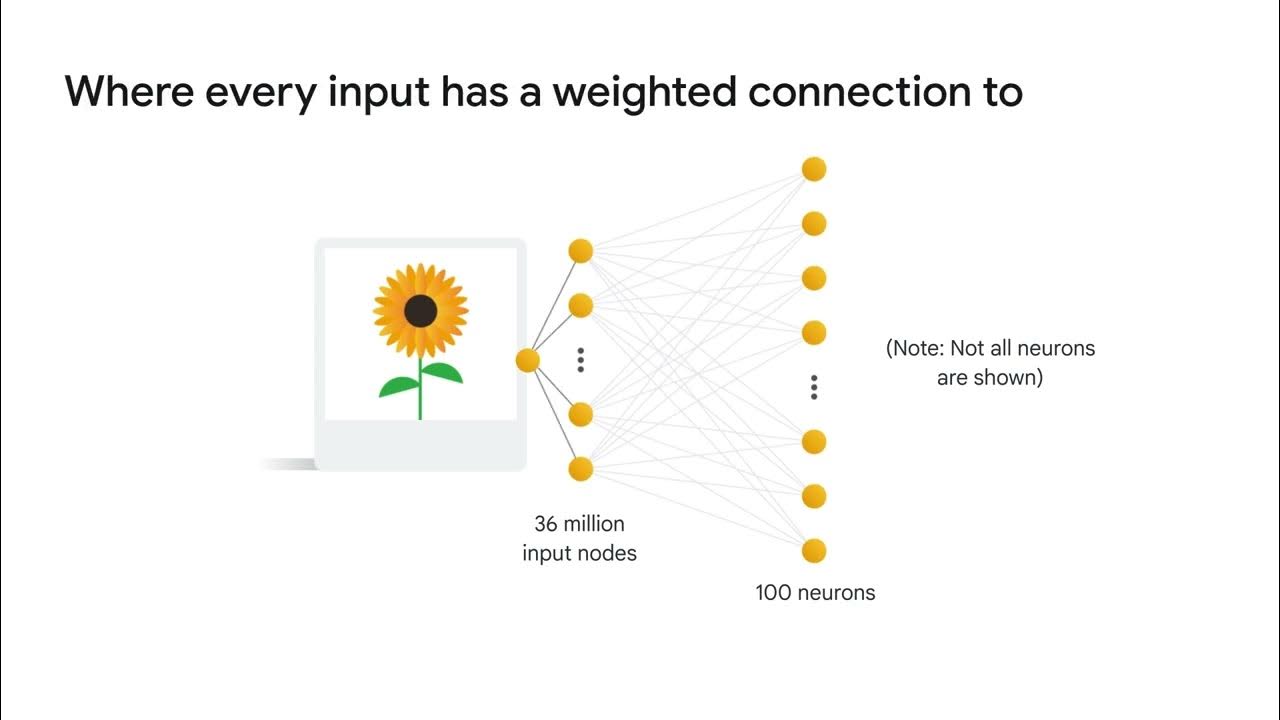

TLDR: This script traces the history of Convolutional Neural Networks (CNNs), a story the lecture titles 'From Cats to Convolutional Neural Networks' because it begins with a 1959 experiment observing a cat's brain responses to visual stimuli. The experiment by Hubel and Wiesel laid the groundwork for the Neocognitron in 1980, a precursor to modern CNNs. The concept was further developed by Yann LeCun in 1989 for handwritten digit recognition, particularly for postal services. The script highlights the evolution of CNNs, their initial use in digit recognition, and the MNIST dataset, released in 1998, which remains widely used in neural network education and experimentation.

Takeaways

- 🧠 The script discusses the history of Convolutional Neural Networks (CNNs), starting with the famous experiment by Hubel and Wiesel in 1959, which involved observing a cat's brain responses to different visual stimuli.

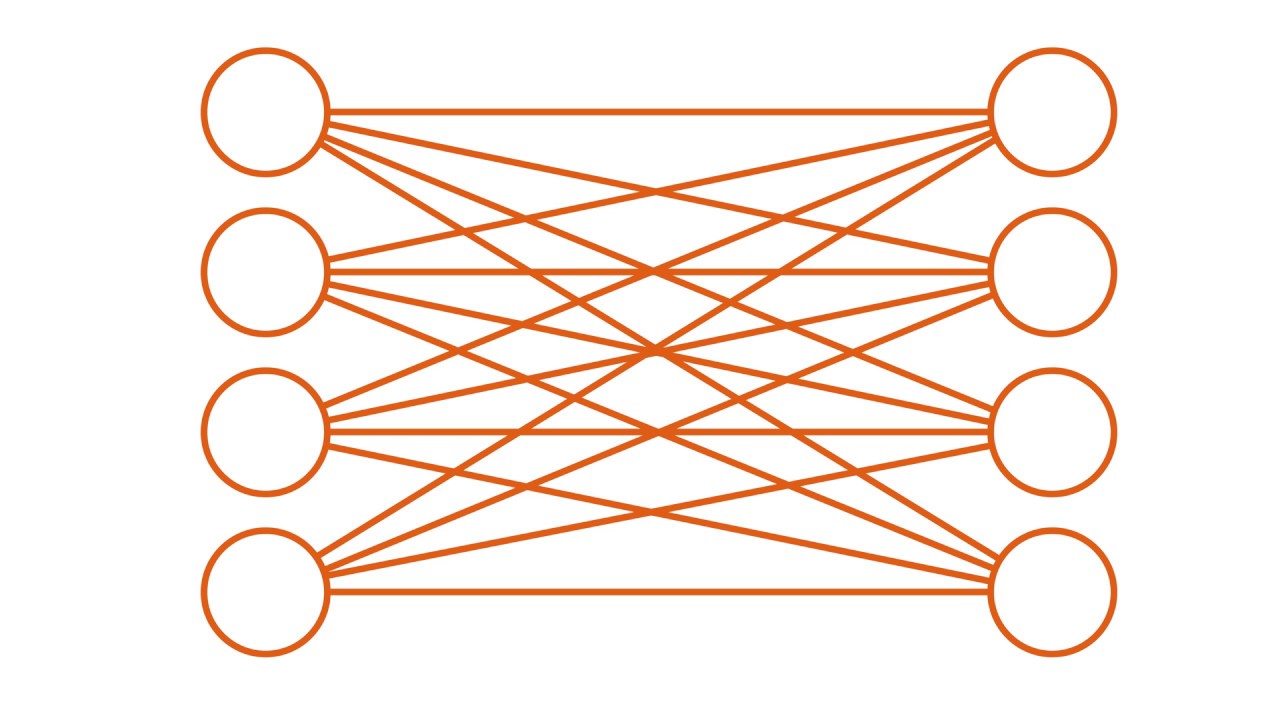

- 🔍 The experiment revealed that different neurons in the brain respond to different types of visual stimuli, laying the groundwork for the concept of feature detection in CNNs.

- 📚 The Neocognitron, proposed in 1980, is considered a precursor to modern CNNs, representing an early attempt at mimicking the brain's visual processing system.

- 👨‍🏫 Yann LeCun is credited with proposing the modern form of CNNs in 1989, with the initial application aimed at recognizing handwritten digits to automate postal services.

- 📬 The need for automatic recognition of postal codes and phone numbers on postcards led to the development and application of CNNs in practical scenarios.

- 📈 Over the years, there have been several improvements to CNNs, enhancing their capabilities and applications in various fields.

- 📊 The MNIST dataset, released in 1998, has become a cornerstone for teaching and experimenting with deep neural networks, including CNNs.

- 🔧 The script humorously notes that an algorithm inspired by an experiment on cats is now used to detect cats in videos, highlighting the evolution and versatility of CNNs.

- 🌐 The script implies the wide-ranging applications of CNNs beyond just cat detection, indicating their importance in various domains such as image and video analysis.

- 🎓 The historical context provided in the script is crucial for understanding the development and significance of CNNs in the field of artificial intelligence.

- 🔬 The script underscores the interdisciplinary nature of CNNs, drawing inspiration from neuroscience, computer science, and practical applications.
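The feature-detection idea in the takeaways above (different neurons responding to lines of different orientations) can be illustrated with a small sketch. This is not code from the lecture: the two 3x3 kernels, the helper names `make_line_image`, `correlate2d_valid`, and `peak_response`, and the 5x5 test images are all hypothetical choices made for illustration. The kernels here are hand-picked, whereas a CNN would typically learn its filters from data.

```python
# Two hypothetical 3x3 "neurons": each kernel prefers a line at one orientation.
# These weights are hand-picked for illustration; a CNN would learn them.
VERTICAL = [[-1, 2, -1],
            [-1, 2, -1],
            [-1, 2, -1]]
HORIZONTAL = [[-1, -1, -1],
              [2, 2, 2],
              [-1, -1, -1]]


def make_line_image(size, orientation):
    """Return a size x size binary image with one line through the centre."""
    mid = size // 2
    img = [[0] * size for _ in range(size)]
    for i in range(size):
        if orientation == "vertical":
            img[i][mid] = 1
        else:
            img[mid][i] = 1
    return img


def correlate2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and return the response map.

    This is cross-correlation, which is what CNN layers usually compute
    under the name "convolution".
    """
    k = len(kernel)
    out_size = len(image) - k + 1
    return [[sum(kernel[a][b] * image[r + a][c + b]
                 for a in range(k) for b in range(k))
             for c in range(out_size)]
            for r in range(out_size)]


def peak_response(image, kernel):
    """Strongest activation of one kernel anywhere in the image."""
    return max(max(row) for row in correlate2d_valid(image, kernel))


if __name__ == "__main__":
    vertical_img = make_line_image(5, "vertical")
    horizontal_img = make_line_image(5, "horizontal")
    print(peak_response(vertical_img, VERTICAL))      # 6
    print(peak_response(vertical_img, HORIZONTAL))    # 0
    print(peak_response(horizontal_img, HORIZONTAL))  # 6
    print(peak_response(horizontal_img, VERTICAL))    # 0
```

Each kernel responds strongly only to the stimulus matching its preferred orientation, which loosely mirrors the orientation-selective responses described in the experiment.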

Q & A

What significant experiment was conducted by Hubel and Wiesel in 1959 involving a cat?

-Hubel and Wiesel conducted an experiment where they displayed lines of different orientations to a cat and measured which parts of the brain responded to these visual stimuli using electrodes. This experiment helped to understand that different neurons in the brain fire in response to different types of stimuli.

What was the outcome of the Hubel and Wiesel experiment that influenced the development of Convolutional Neural Networks (CNNs)?

-The experiment revealed that different neurons in the brain respond to different types of visual stimuli, which is the fundamental concept behind the development of Convolutional Neural Networks.

When was the Neocognitron proposed and what was its significance in the history of CNNs?

-The Neocognitron was proposed in 1980 and can be considered a primitive version of what we now know as Convolutional Neural Networks.

Who is credited with proposing modern Convolutional Neural Networks and for what purpose?

-Yann LeCun is credited with proposing modern Convolutional Neural Networks in 1989, initially for the task of handwritten digit recognition.

In what context were Convolutional Neural Networks first used according to the script?

-Convolutional Neural Networks were first used in the context of postal delivery services to automatically read and categorize handwritten pin codes and phone numbers on postcards.

When was the MNIST dataset released and what is its relevance today?

-The MNIST dataset was released in 1998 and remains relevant today as it is a popular dataset used for teaching deep neural networks and for initial experiments with various neural network-based models.

What is the MNIST dataset commonly used for?

-The MNIST dataset is commonly used for training and testing deep neural networks, particularly in the field of image recognition for handwritten digits.

How has the script humorously connected the origin of CNNs to their current applications?

-The script humorously notes that an algorithm inspired by an experiment on cats is now used to detect cats in videos, highlighting the evolution and application of CNNs.

What improvements have been made to Convolutional Neural Networks over the years as mentioned in the script?

-The script does not detail specific improvements but mentions that several enhancements have been made to Convolutional Neural Networks since their initial proposal in 1989.

Why were electrodes used in the Hubel and Wiesel experiment?

-Electrodes were used to measure the brain's response to different visual stimuli, helping to identify which neurons fire in response to specific types of visual input.

What is the significance of the year 1989 in the context of Convolutional Neural Networks mentioned in the script?

-The year 1989 is significant because it marks when Convolutional Neural Networks were first proposed for use in handwritten digit recognition, specifically for postal services.
