Understanding LIME | Explainable AI

Summary

TLDR: This video introduces LIME (Local Interpretable Model-agnostic Explanations), a widely used method for explaining the predictions of black-box machine learning models. Introduced in 2016 by Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin, LIME explains individual predictions by fitting simpler, interpretable models that are faithful to the black box in the neighborhood of the instance being explained. The video first describes the approach abstractly, then formulates it as an optimization problem, and shows applications to image and text classification. It closes with LIME's advantages and limitations: it is model-agnostic, but defining the right neighborhood and handling non-linearity in complex models remain challenging.

Takeaways

- 😀 LIME (Local Interpretable Model-agnostic Explanations) helps explain individual predictions of any machine learning model.

- 😀 LIME was introduced in 2016 by Marco Tulio Ribeiro and colleagues, and the paper presenting it has become one of the most impactful in Explainable AI (XAI).

- 😀 LIME aims for local fidelity: its explanations should reflect the model's behavior in the vicinity of the instance being explained.

- 😀 The explanations generated by LIME are interpretable, making them understandable to humans, regardless of the model's complexity.

- 😀 LIME is model-agnostic, meaning it can be applied to any machine learning model, regardless of its internal workings.

- 😀 An example of LIME in action is a classifier that distinguishes wolves from huskies in images: LIME reveals that the model relied on the snow in the background rather than on the animal itself.

- 😀 LIME uses simplified interpretable representations, binary vectors indicating the presence or absence of components such as super-pixels or words, to explain models that operate on complex features.

- 😀 The LIME process involves four main steps: selecting the instance to explain, perturbing it to generate new samples, weighting those samples by their proximity to the instance, and training an interpretable surrogate model on them to produce the explanation.

- 😀 LIME’s optimization balances local fidelity and interpretability: it seeks a surrogate model that approximates the complex model's behavior in the local area while staying simple enough for humans to read.

- 😀 Despite its strengths, LIME faces challenges like defining the right neighborhood, non-linearity in complex models, generating improbable instances, and instability in explanations.

- 😀 LIME is well suited to explaining individual predictions to non-experts, but it may not be suitable when a complete, detailed explanation is required, for example for legal purposes.
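A sketch of the optimization behind the takeaways above, following the formulation in the original paper. Here f is the black-box model, G a family of interpretable models (such as sparse linear models), z' the binary interpretable representation of a perturbed sample z, π_x a proximity kernel with distance D and kernel width σ, and Ω(g) a complexity penalty; for example, Ω(g) = ∞·1[‖w_g‖₀ > K] limits the explanation to at most K features.

```latex
\xi(x) = \operatorname*{arg\,min}_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g)

\mathcal{L}(f, g, \pi_x) = \sum_{z, z' \in \mathcal{Z}} \pi_x(z)\,\bigl(f(z) - g(z')\bigr)^2,
\qquad g(z') = w_g \cdot z',
\qquad \pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
```

The first term rewards local fidelity (samples close to x count most), and the second keeps the surrogate interpretable.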

Q & A

What is LIME and who introduced it?

-LIME, or Local Interpretable Model-agnostic Explanations, is a method for explaining the predictions of black-box machine learning models. It was introduced in 2016 by Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin in the paper "Why Should I Trust You?": Explaining the Predictions of Any Classifier.

What does the acronym LIME stand for?

-LIME stands for Local Interpretable Model-agnostic Explanations. Each part of the name reflects a desired property of an explanation: 'Local' refers to local fidelity, 'Interpretable' means the explanation should be understandable to humans, and 'Model-agnostic' indicates it can be applied to any model.

How does LIME ensure interpretability in explanations?

-LIME ensures interpretability by transforming the original input into a simpler, interpretable representation, such as a binary vector indicating which words or super-pixels are present. This allows humans to understand the explanation regardless of the features the model uses internally.
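For text, a minimal sketch of this mapping, assuming simple whitespace tokenization (the helper names are hypothetical, not part of any library): the instance itself is the all-ones vector, and each perturbed sample z' keeps only the words whose entry is 1.

```python
def to_interpretable(words):
    """The instance being explained corresponds to the all-ones binary vector."""
    return [1] * len(words)


def from_interpretable(words, z_prime):
    """Map a binary vector z' back to a text the black-box model can score:
    words with entry 1 are kept, words with entry 0 are removed."""
    return " ".join(w for w, keep in zip(words, z_prime) if keep)


words = "this movie was surprisingly good".split()
x_prime = to_interpretable(words)
print(x_prime)                                     # [1, 1, 1, 1, 1]
print(from_interpretable(words, [1, 1, 1, 0, 1]))  # this movie was good
```

The explanation then assigns a weight to each position of this vector, i.e. to each word.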

Can LIME be applied to different types of data?

-Yes, LIME can be applied to various types of data, including images and text. For example, in image classification, it might highlight specific areas of the image that influenced the model's decision, while in text classification, it may highlight specific words.
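For images, a perturbed sample is produced by switching off some super-pixels. A minimal NumPy sketch, assuming the super-pixel segmentation is already given as an integer label map (for example, from a segmentation algorithm) and that switched-off super-pixels are filled with a constant value; `perturb_image` is a hypothetical helper:

```python
import numpy as np


def perturb_image(image, segments, z_prime, fill_value=0.0):
    """Return a copy of `image` in which every super-pixel whose entry in
    `z_prime` is 0 is replaced by `fill_value`.

    image:    H x W x C array
    segments: H x W integer array; segments[i, j] is the super-pixel id of pixel (i, j)
    z_prime:  binary vector with one entry per super-pixel id (0 .. n_segments - 1)
    """
    z_prime = np.asarray(z_prime)
    keep = z_prime[segments].astype(bool)  # H x W mask: True where the pixel's super-pixel is kept
    perturbed = image.astype(float)        # astype copies, so the original image is untouched
    perturbed[~keep] = fill_value          # fill all channels of switched-off pixels
    return perturbed


# Toy 4 x 4 RGB image split into two super-pixels: left half (id 0), right half (id 1).
image = np.ones((4, 4, 3))
segments = np.zeros((4, 4), dtype=int)
segments[:, 2:] = 1

out = perturb_image(image, segments, [1, 0])  # keep super-pixel 0, switch off super-pixel 1
print(out[:, :, 0])  # left two columns stay 1.0, right two columns become 0.0
```

The black box is then queried on many such images, and the surrogate learns which super-pixels drive the prediction.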

What is the key challenge in using LIME with tabular data?

-The key challenge in using LIME with tabular data is defining the correct neighborhood around an instance. This is a significant limitation of the method, because a poorly chosen neighborhood (for example, an unsuitable kernel width) can lead to misleading explanations.
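To see why the neighborhood definition matters, compare the exponential proximity kernel from the LIME paper, π_x(z) = exp(−D(x, z)²/σ²), under two kernel widths σ. The widths and distances below are arbitrary illustrative values:

```python
import math


def proximity_weight(distance, kernel_width):
    """Exponential kernel: pi_x(z) = exp(-D(x, z)^2 / sigma^2)."""
    return math.exp(-(distance ** 2) / kernel_width ** 2)


distances = [0.5, 1.0, 2.0]
for sigma in (0.5, 2.0):
    weights = [round(proximity_weight(d, sigma), 3) for d in distances]
    print(sigma, weights)
# 0.5 [0.368, 0.018, 0.0]
# 2.0 [0.939, 0.779, 0.368]
```

With σ = 0.5 a sample at distance 2 gets essentially no weight, so the surrogate only sees a tiny region; with σ = 2 it still counts, so the "local" explanation summarizes a much wider region of the model.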

How does LIME work at a high level?

-LIME works by selecting an instance to explain, generating perturbed samples around it, weighting those samples by their proximity to the instance, and training a surrogate model (usually a simpler one like linear regression) to approximate the black-box model in that local region. The explanation is then derived from this surrogate model.
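These steps can be sketched for tabular data as follows. This is a minimal illustration under stated assumptions, not the `lime` package's implementation: the Gaussian sampling around x, the kernel width, and the function name `lime_tabular_sketch` are assumptions, and for simplicity the surrogate is fit on the raw (centered) features rather than on a binary interpretable representation.

```python
import numpy as np


def lime_tabular_sketch(predict_fn, x, num_samples=500, scale=1.0, kernel_width=1.0, seed=0):
    """Minimal LIME-style explanation for one tabular instance (hypothetical helper).

    predict_fn: black-box function mapping an (n, d) array to n scores
    x:          1-D array, the instance to explain
    Returns one coefficient per feature of a weighted linear surrogate.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # 1. Perturb: sample points around x with Gaussian noise (assumed sampling scheme).
    Z = x + rng.normal(scale=scale, size=(num_samples, x.size))
    # 2. Query the black-box model on the perturbed points.
    y = predict_fn(Z)
    # 3. Weight each sample by its proximity to x with an exponential kernel.
    d = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(d ** 2) / kernel_width ** 2)
    # 4. Fit a weighted least-squares linear surrogate (intercept + one slope per feature).
    A = np.hstack([np.ones((num_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # drop the intercept; these are the local feature effects


def black_box(Z):
    # Hypothetical black-box model: nonlinear in feature 0, linear in feature 1.
    return Z[:, 0] ** 2 + 3 * Z[:, 1]


coef = lime_tabular_sketch(black_box, [1.0, 0.0])
print(coef)  # roughly [2, 3]: the local slopes of the black box at x = (1, 0)
```

Because feature 0 enters quadratically, its local effect depends on where x sits; explaining x = (−1, 0) instead would give a slope near −2, which is exactly the locality the method is built on.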

What is the role of the surrogate model in LIME?

-The surrogate model in LIME is used to approximate the behavior of the black-box model in the neighborhood of the instance being explained. It is usually a simpler, interpretable model like a linear model or decision tree.

What are the limitations of LIME?

-Some limitations of LIME include: the difficulty in defining the neighborhood, issues with non-linearity in the underlying model, the generation of improbable instances due to perturbation, and the instability of explanations, where explanations for similar instances may vary significantly.

What advantages does LIME have over other interpretability methods?

-LIME’s main advantage is its model-agnostic nature, meaning it can be used with any machine learning model. Additionally, its explanations are brief and human-understandable, making it useful when the recipient has limited time or is not a technical expert.

How does LIME address the complexity of surrogate models?

-LIME manages the complexity of surrogate models through the use of regularization techniques, such as LASSO, to ensure the surrogate model remains simple enough for human interpretation while maintaining local fidelity to the original model.
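A minimal sketch of the idea, assuming NumPy: a weighted Lasso solved by coordinate descent, followed by a crude search that raises the penalty until at most K features remain. The original paper describes a related K-LASSO step (select K features along the Lasso regularization path, then fit the surrogate on them); the doubling search here is a simplification, and all function names are hypothetical.

```python
import numpy as np


def weighted_lasso(X, y, w, alpha, n_iter=200):
    """Coordinate-descent Lasso minimizing
    sum_i w_i * (y_i - b - X_i . beta)^2 / (2 * sum(w)) + alpha * ||beta||_1.
    """
    w = w / w.sum()
    # Center X and y with weighted means so the unpenalized intercept b drops out.
    Xc = X - w @ X
    yc = y - w @ y
    beta = np.zeros(X.shape[1])
    col_norm = w @ (Xc ** 2)  # weighted squared norm of each column
    for _ in range(n_iter):
        for j in range(X.shape[1]):
            # Partial residual that excludes feature j's current contribution.
            r = yc - Xc @ beta + Xc[:, j] * beta[j]
            rho = w @ (Xc[:, j] * r)
            # Soft-thresholding update: small effects are set exactly to zero.
            beta[j] = np.sign(rho) * max(abs(rho) - alpha, 0.0) / col_norm[j]
    return beta


def select_k_features(X, y, w, k):
    """Double alpha until at most k coefficients stay non-zero
    (a crude stand-in for walking the Lasso regularization path)."""
    alpha = 1e-4
    while True:
        selected = np.flatnonzero(weighted_lasso(X, y, w, alpha))
        if selected.size <= k:
            return selected
        alpha *= 2


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 4 * X[:, 0] - 2 * X[:, 2] + 0.5 * X[:, 4]
w = rng.uniform(0.5, 1.5, size=200)  # stand-in for LIME's proximity weights
print(select_k_features(X, y, w, k=2))  # expected to keep features 0 and 2
```

The weakest effect (feature 4) is driven to zero first, so the two-feature explanation keeps the features that matter most locally.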

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Explainable AI explained! | #3 LIME

Explainable AI explained! | #1 Introduction

XAI-SA 2024, Opening talk

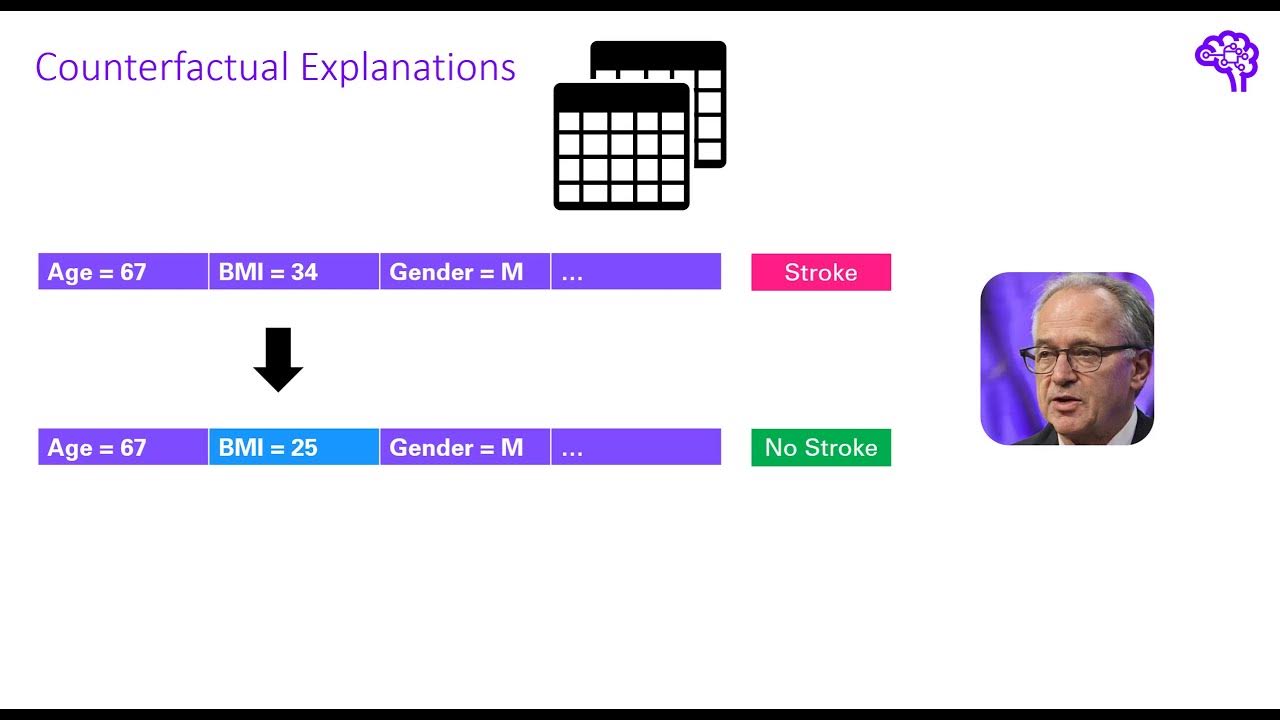

Explainable AI explained! | #5 Counterfactual explanations and adversarial attacks

Explainable AI using SHAP | Explainable AI for deep learning | Explainable AI for machine learning

Unit 1.4 | The First Machine Learning Classifier | Part 2 | Making Predictions

5.0 / 5 (0 votes)