Chunking Strategies Explained

Summary

TLDR: This video explores why chunking matters in large language model (LLM) applications, particularly those backed by vector databases. Chunking means breaking large text into smaller, contextually coherent pieces so that search, question answering, and summarization return more accurate results more efficiently. The video walks through several chunking strategies, including fixed-size, context-aware, recursive, and semantic chunking, and stresses tailoring chunk size to the content type and the embedding model. The key takeaway is that well-tuned chunking improves the relevance and quality of LLM-based applications and, with it, the user experience.

Takeaways

- 😀 Chunking breaks large pieces of text into smaller segments before they are embedded into a vector database; done well, it improves the quality of LLM applications.

- 😀 Proper chunking ensures minimal noise and maintains semantic relevance, which is crucial for applications like semantic search and conversational agents.

- 😀 Short content, such as single sentences, excels at precise matching but may miss broader context; long content captures overall themes but can dilute specific details.

- 😀 Query length should ideally match chunk granularity: short queries work best against sentence-level embeddings, while complex queries are better served by paragraph-level embeddings.

- 😀 Consider the content nature, embedding model characteristics, user query expectations, and application purpose when deciding on a chunking strategy.

- 😀 Fixed-size chunking is simple and computationally inexpensive, making it a good choice for many common use cases.

- 😀 Context-aware chunking leverages the natural structure of content, such as sentence boundaries, to produce chunks that suit embedding models optimized for sentence-level input.

- 😀 Recursive chunking splits text on a hierarchy of separators, falling back to finer separators until each chunk fits the desired size, which makes it a more advanced approach.

- 😀 Specialized chunking is useful for structured content like markdown or LaTeX, where the format's own structure (headings, sections, environments) should determine the split points.

- 😀 Semantic chunking identifies natural topic boundaries, ensuring thematic coherence and providing chunks based on content meaning rather than token count.

- 😀 To find the optimal chunk size, preprocess your data, experiment with different chunk sizes, and evaluate the performance using metrics like relevance and retrieval speed.
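
For the specialized-chunking takeaway, here is a minimal Python sketch for markdown, assuming that ATX-style headings (`#` through `######`) mark the natural split points. `split_markdown_by_headings` is a hypothetical helper, not a library API, and it does not special-case `#` lines that appear inside fenced code blocks.

```python
import re
from typing import List, Optional, Tuple

# ATX-style markdown headings: one to six '#' characters, whitespace, then the title.
HEADING = re.compile(r"^(#{1,6})\s+(.+?)\s*$")


def split_markdown_by_headings(text: str) -> List[Tuple[Optional[str], str]]:
    """Return (heading, body) pairs, one per markdown section."""
    chunks: List[Tuple[Optional[str], str]] = []
    heading: Optional[str] = None
    body: List[str] = []

    def flush() -> None:
        content = "\n".join(body).strip()
        # Skip the empty preamble that appears when the text starts with a heading.
        if heading is not None or content:
            chunks.append((heading, content))

    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous section
            heading, body = match.group(2), []
        else:
            body.append(line)
    flush()  # close the final section
    return chunks


doc = "# Intro\nHello.\n## Setup\nStep one.\nStep two."
print(split_markdown_by_headings(doc))
```

Each (heading, body) pair can then be embedded on its own, optionally with the heading prepended so the chunk keeps its section context.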

Q & A

What is chunking in the context of LLM applications?

- Chunking is the process of breaking down large pieces of text into smaller, manageable segments before embedding them into a vector database. This is done to ensure that the embedded context is accurate and relevant while minimizing noise.

Why is chunking important for LLM applications?

- Chunking ensures that data is embedded in a way that maintains semantic relevance and allows applications like semantic search and conversational agents to provide accurate, contextually relevant responses.

What impact does chunk size have on LLM applications?

- Chunk size significantly affects the accuracy, relevance, and performance of LLM applications. Chunks that are too small can miss surrounding context, while chunks that are too large introduce unnecessary noise, so the chunk size should be chosen for the task at hand.

How do short content and long content differ when embedding data?

- Short content, like sentences, is focused on precise meanings and works well for specific matching, but may miss broader context. Long content, like paragraphs, captures overall themes and relationships but may lose some focus on specific details.

What are some factors to consider when deciding on a chunking strategy?

- Key factors include the nature of the content (e.g., short text or long documents), the embedding model's characteristics (e.g., the input length, in tokens, it handles best), the query expectations (e.g., short or complex queries), and the intended application (e.g., semantic search, question answering, or summarization).

What is fixed-size chunking, and when is it useful?

- Fixed-size chunking is the most straightforward approach: text is divided into chunks containing a set number of tokens. It is computationally inexpensive and works well for many common use cases, but because chunk boundaries ignore the text's structure, a split can fall mid-sentence and break up context.
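
A minimal Python sketch of fixed-size chunking follows. As a simplifying assumption, whitespace-separated words stand in for tokens; a real pipeline would count tokens with the embedding model's own tokenizer. The function name `fixed_size_chunks` is illustrative.

```python
from typing import List


def fixed_size_chunks(text: str, chunk_size: int) -> List[str]:
    """Split text into consecutive chunks of at most `chunk_size` words.

    Words approximate tokens here; swap in a real tokenizer for production use.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    tokens = text.split()
    # Step through the token list in strides of chunk_size; the last chunk may be shorter.
    return [
        " ".join(tokens[i : i + chunk_size])
        for i in range(0, len(tokens), chunk_size)
    ]


print(fixed_size_chunks("The cat sat. The dog ran.", 4))
```

The example output, `['The cat sat. The', 'dog ran.']`, shows the weakness noted above: the boundary lands mid-sentence because the splitter only counts tokens.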

What is context-aware chunking?

- Context-aware chunking takes advantage of the natural structure of content, like splitting text at sentence boundaries. This approach helps maintain contextual integrity and is especially useful for embedding models optimized for sentence-level content.
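
The sentence-level idea can be sketched in Python by splitting on sentence boundaries and then packing whole sentences into chunks under a word budget. The boundary rule (`.`, `!`, or `?` followed by whitespace) is a naive assumption: abbreviations such as "e.g." will be mis-split, and a dedicated sentence segmenter would do better. `sentence_chunks` is a hypothetical name.

```python
import re
from typing import List

# Naive sentence boundary: whitespace preceded by '.', '!' or '?'.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(text: str, max_tokens: int) -> List[str]:
    """Group whole sentences into chunks of at most `max_tokens` words.

    A single sentence longer than the budget becomes its own oversized chunk,
    since this splitter never cuts inside a sentence.
    """
    sentences = [s for s in SENTENCE_END.split(text.strip()) if s]
    chunks: List[str] = []
    current: List[str] = []
    current_len = 0
    for sentence in sentences:
        n = len(sentence.split())
        # Close the current chunk if adding this sentence would exceed the budget.
        if current and current_len + n > max_tokens:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(sentence)
        current_len += n
    if current:
        chunks.append(" ".join(current))
    return chunks


print(sentence_chunks("The cat sat. The dog ran. Birds sing!", 6))
```

Unlike the fixed-size case, every chunk here ends on a sentence boundary, at the cost of chunks that vary in length.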

What is recursive chunking and how does it work?

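- Recursive chunking splits text using a list of separators (such as paragraph breaks, line breaks, or punctuation), falling back to finer separators until each chunk reaches the desired size. Because it prefers natural boundaries, it adapts to the content's structure while keeping chunks appropriately sized.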
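
Below is a minimal Python sketch of recursive chunking. It measures size in characters and uses the separator order paragraph break, line break, space, with a hard character cut as the last resort. That order is similar in spirit to the defaults of LangChain's `RecursiveCharacterTextSplitter`, but this is an independent illustration, not that library's implementation, and `recursive_chunks` is a hypothetical name.

```python
from typing import List, Sequence

# Separator hierarchy, coarsest first: paragraphs, then lines, then words.
DEFAULT_SEPARATORS = ("\n\n", "\n", " ")


def recursive_chunks(
    text: str,
    chunk_size: int,
    separators: Sequence[str] = DEFAULT_SEPARATORS,
) -> List[str]:
    """Split `text` into chunks of at most `chunk_size` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if len(text) <= chunk_size:
        return [text] if text.strip() else []

    # Use the coarsest separator that actually occurs in this text.
    sep = next((s for s in separators if s in text), None)
    if sep is None:
        # No separator left: fall back to a hard cut every `chunk_size` characters.
        return [text[i : i + chunk_size] for i in range(0, len(text), chunk_size)]
    finer = separators[separators.index(sep) + 1 :]

    chunks: List[str] = []
    buffer = ""
    for piece in text.split(sep):
        candidate = f"{buffer}{sep}{piece}" if buffer else piece
        if len(candidate) <= chunk_size:
            buffer = candidate  # merge small neighbouring pieces
            continue
        if buffer:
            chunks.append(buffer)
            buffer = ""
        if len(piece) <= chunk_size:
            buffer = piece
        else:
            # Piece is still too large on its own: recurse with finer separators.
            chunks.extend(recursive_chunks(piece, chunk_size, finer))
    if buffer:
        chunks.append(buffer)
    return [c for c in chunks if c.strip()]


print(recursive_chunks("aaa bbb ccc\n\nddd eee", 8))
```

The paragraph break is tried first; only the paragraph that is still too long gets split again on spaces, which is what keeps chunks aligned with the text's structure where possible.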

What is semantic chunking, and why is it more computationally expensive?

- Semantic chunking identifies natural topic boundaries and groups content accordingly, maintaining thematic coherence. It is more computationally expensive because it must analyze the content's meaning rather than simply count tokens; a common approach is to embed individual sentences and compare neighboring embeddings, which adds model calls at indexing time.
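
A minimal Python sketch of the adjacent-similarity approach follows. To keep it self-contained, a bag-of-words count vector stands in for a real sentence-embedding model (a loud assumption: real systems use learned embeddings), and the threshold of 0.2 is an arbitrary illustrative value that would need tuning. `embed`, `cosine`, and `semantic_chunks` are hypothetical helpers.

```python
import math
import re
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: List[str], threshold: float = 0.2) -> List[List[str]]:
    """Start a new chunk wherever adjacent sentences look dissimilar."""
    if not sentences:
        return []
    vectors = [embed(s) for s in sentences]
    chunks = [[sentences[0]]]
    for prev, vec, sentence in zip(vectors, vectors[1:], sentences[1:]):
        if cosine(prev, vec) < threshold:
            chunks.append([sentence])  # similarity dropped: likely topic boundary
        else:
            chunks[-1].append(sentence)
    return chunks


sentences = [
    "Cats are small pets.",
    "Cats like to nap.",
    "Stocks fell sharply today.",
    "Stocks rebounded later.",
]
print(semantic_chunks(sentences))
```

The extra cost mentioned above is visible here: every sentence must be embedded and compared before any chunk boundary is known.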

How should chunk size be determined for embedding data in a vector database?

- To determine the optimal chunk size, preprocess data by removing noise, test a range of chunk sizes (e.g., 128–256 tokens for granular detail, or 512–1024 tokens for broader context), and evaluate performance based on relevance, accuracy, and retrieval speed.
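
The experiment loop described above can be sketched in Python as a chunk-size sweep scored by hit rate on a small labeled query set. Everything here is an illustrative assumption: words stand in for tokens, a shared-word count stands in for vector similarity, and each labeled pair is (query, text the correct chunk should contain). The function names are hypothetical.

```python
import re
from typing import Dict, List, Sequence, Tuple


def chunk_words(text: str, size: int) -> List[str]:
    """Fixed-size chunking by whitespace-separated words (a stand-in for tokens)."""
    words = text.split()
    return [" ".join(words[i : i + size]) for i in range(0, len(words), size)]


def overlap_score(query: str, passage: str) -> int:
    """Toy relevance score: number of shared lowercase words.

    Stand-in for the vector similarity a real vector database would compute.
    """
    q = set(re.findall(r"\w+", query.lower()))
    p = set(re.findall(r"\w+", passage.lower()))
    return len(q & p)


def hit_rate(text: str, size: int, labeled: Sequence[Tuple[str, str]], k: int = 1) -> float:
    """Fraction of queries whose expected text appears in one of the top-k chunks."""
    chunks = chunk_words(text, size)
    hits = 0
    for query, expected in labeled:
        ranked = sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)
        if any(expected in c for c in ranked[:k]):
            hits += 1
    return hits / len(labeled)


def sweep(
    text: str, sizes: Sequence[int], labeled: Sequence[Tuple[str, str]], k: int = 1
) -> Dict[int, float]:
    """Evaluate each candidate chunk size and return its hit rate."""
    return {size: hit_rate(text, size, labeled, k) for size in sizes}


print(sweep("alpha beta gamma delta", [1, 4], [("alpha", "alpha beta")]))
```

In this toy run, size 1 scores 0.0 because the expected context is split across chunks, while size 4 scores 1.0. With real data, the sizes would be token counts such as those suggested above, and retrieval latency could be recorded alongside each score (for example with `time.perf_counter`).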

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

LLM Module 3 - Multi-stage Reasoning | 3.2 Module Overview

RAG Explained

Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough

[RAG Series #1] Hanya 10 menit paham bagaimana konsep dibalik Retrieval Augmented Generation (RAG)

LangChain Explained in 13 Minutes | QuickStart Tutorial for Beginners

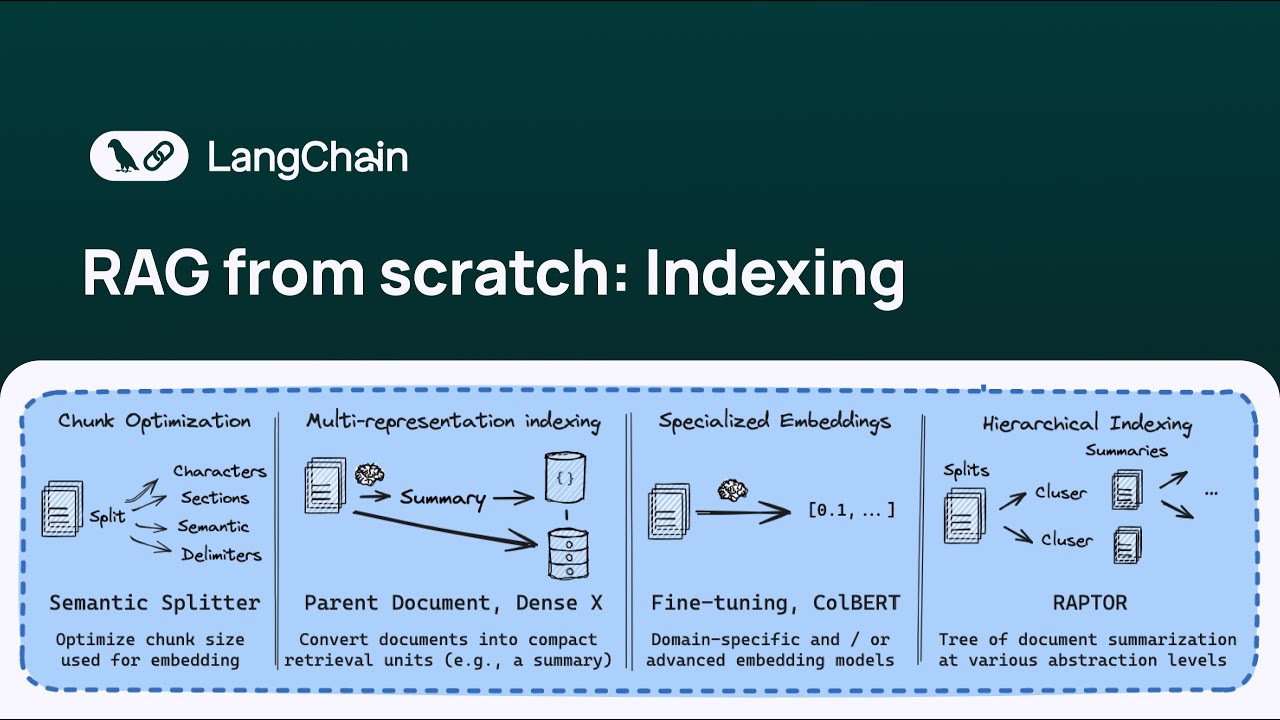

RAG from scratch: Part 12 (Multi-Representation Indexing)

5.0 / 5 (0 votes)