Rate Limiter - System Design Interview Question

Summary

TLDR: This video explains rate limiting, a technique for controlling how many requests a user or system can make within a given time frame. It covers the purpose of rate limiting: preventing overload, mitigating DDoS attacks, and ensuring fair resource usage. It then compares client-side, server-side, and middleware implementations and walks through four popular algorithms: Token Bucket, Leaky Bucket, Fixed Window, and Sliding Window. Real-world examples such as Stripe’s use of the token bucket algorithm are provided, along with additional strategies for burst handling and for logging to monitor system performance.

Takeaways

- 😀 Rate limiting is a mechanism that controls the number of requests or actions a user/system can perform within a specified time frame to prevent overload and abuse.

- 😀 It helps ensure the stability of systems by preventing overload, mitigating DoS attacks, and promoting fair usage among all users.

- 😀 Client-side rate limiting prevents excessive requests from the user’s device, but it can be bypassed and lead to inconsistent enforcement.

- 😀 Server-side rate limiting ensures centralized control, better security, and consistent enforcement, though it increases server load and can pose scalability challenges.

- 😀 Middleware-based rate limiting offers scalable traffic management and flexible policies, but adds complexity and the potential for failure if not scaled properly.

- 😀 Popular rate limiting algorithms include Token Bucket, Leaky Bucket, Fixed Window, and Sliding Window, each with unique characteristics in managing requests.

- 😀 The Token Bucket algorithm adds tokens to a bucket at a steady rate, up to a fixed capacity; each request consumes a token and is granted only while tokens are available.

- 😀 The Leaky Bucket algorithm processes requests at a constant rate and delays or discards the excess, which suits workloads that need a steady output rate, such as streaming playback.

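- 😀 The Fixed Window algorithm divides time into fixed intervals and counts requests per interval. Because the counter resets at each boundary, a client can spend a full quota just before the boundary and another just after it, producing a burst that can overwhelm the system.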

- 😀 Sliding Window algorithm provides more granularity by continuously tracking requests in a moving time window, preventing bursts at the boundaries seen in Fixed Window.

- 😀 A typical rate limiting architecture involves using an API Gateway to enforce limits, Redis to store tokens, and an admin dashboard to dynamically adjust rate limiting rules.

- 😀 Real-world examples like Stripe use Token Bucket algorithms to manage rate limits, with each user having a dedicated token bucket that is decremented with each request.
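The Leaky Bucket takeaway above (constant processing rate, with excess requests delayed or discarded) can be sketched roughly as follows. This is a minimal single-process illustration, not the video's implementation: the class name `LeakyBucket`, the method `schedule`, and the `capacity`, `leak_rate`, and `clock` parameters are all assumptions made for this example.

```python
import time


class LeakyBucket:
    """Leaky bucket as a queue (hypothetical sketch).

    Requests leave the bucket one every 1/leak_rate seconds. A request that
    would have to wait behind more than `capacity` queued requests is discarded.
    """

    def __init__(self, capacity, leak_rate, clock=time.monotonic):
        self.capacity = capacity          # max requests allowed to wait
        self.interval = 1.0 / leak_rate   # seconds between processed requests
        self.clock = clock                # injectable clock, for testing
        self.next_slot = clock()          # earliest time the next request may run

    def schedule(self):
        """Return the delay in seconds before this request may be processed,
        or None if the bucket is full and the request is discarded."""
        now = self.clock()
        start = max(now, self.next_slot)
        delay = start - now
        if delay > self.capacity * self.interval:
            return None                   # bucket overflow: discard
        self.next_slot = start + self.interval
        return delay
```

With `capacity=2` and `leak_rate=1.0`, four requests arriving at the same instant would be scheduled at delays of 0, 1, and 2 seconds, and the fourth would be discarded. The caller is expected to wait for the returned delay before doing the work.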

Q & A

What is the primary purpose of a rate limiter?

-The primary purpose of a rate limiter is to control the number of requests or actions a user or system can perform within a specific time frame, preventing overload and ensuring smooth system operation.

What are some key use cases for implementing rate limiting?

-Rate limiting is used to prevent system overload, protect against DDoS attacks, ensure fair usage among users, and manage costs for services that charge based on usage.

What are the pros and cons of implementing rate limiting on the client side?

-Pros of client-side rate limiting include immediate feedback to users and reduced server load. However, it can be easily bypassed, and inconsistent enforcement may occur if different clients implement it differently.

How does server-side rate limiting differ from client-side rate limiting?

-Server-side rate limiting is enforced on the backend server, offering centralized control and enhanced security. The downside is that it increases the server load and presents scalability challenges compared to client-side rate limiting.

What are the advantages of using middleware for rate limiting?

-Middleware provides scalable management of high traffic volumes and flexible rate limiting policies. However, it adds complexity to the system architecture and can become a point of failure if not properly scaled.

Can you explain the Token Bucket algorithm in rate limiting?

-The Token Bucket algorithm uses a bucket that holds up to a fixed number of tokens and is refilled at a steady rate. Each incoming request consumes a token; if no tokens are available, the request is denied or queued until the bucket refills. The bucket's capacity therefore bounds the burst size, and the refill rate bounds the sustained request rate.
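A minimal in-memory sketch of the token bucket described in this answer might look like the following. The names `TokenBucket`, `allow`, `capacity`, `refill_rate`, and `clock` are assumptions chosen for illustration; the sketch denies requests when the bucket is empty rather than queueing them, and it is not thread-safe.

```python
import time


class TokenBucket:
    """Token bucket (hypothetical sketch): up to `capacity` tokens,
    refilled continuously at `refill_rate` tokens per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock                 # injectable clock, for testing
        self.tokens = float(capacity)      # the bucket starts full
        self.last_refill = clock()

    def allow(self, cost=1):
        """Consume `cost` tokens and return True, or return False if too few remain."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill lazily based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Refilling lazily on each call avoids a background timer: the bucket only needs to remember its token count and the time of the last update.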

What is the main issue with the Fixed Window algorithm for rate limiting?

-The Fixed Window algorithm divides time into fixed intervals and allows a set number of requests per interval. Because the counter resets at each boundary, a client can use a full quota at the end of one window and another at the start of the next, so up to twice the limit can arrive in a short span around the boundary and overwhelm the system.
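The boundary problem is easier to see in code. The sketch below is an illustrative fixed-window counter, assuming hypothetical names (`FixedWindowLimiter`, `allow`, `limit`, `window_seconds`, `clock`); it keeps counters in memory and never evicts old windows, which a real deployment would need to do.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Fixed-window counter (hypothetical sketch): at most `limit`
    requests per key in each `window_seconds`-long interval."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock                  # injectable clock, for testing
        self.counts = defaultdict(int)      # (key, window index) -> request count

    def allow(self, key):
        # All timestamps in the same interval map to the same window index.
        window = int(self.clock() // self.window_seconds)
        slot = (key, window)
        if self.counts[slot] >= self.limit:
            return False
        self.counts[slot] += 1
        return True
```

With `limit=3` and `window_seconds=60`, three requests at second 59 and three more at second 60 are all accepted, because they fall into different windows: six requests pass within about one second even though the nominal limit is three per minute.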

How does the Sliding Window algorithm improve upon the Fixed Window algorithm?

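-The Sliding Window algorithm counts requests within a window that moves with the current time, rather than one that resets at fixed boundaries, allowing for more granular control. Because the limit is checked against the most recent window's worth of requests at every arrival, it removes the boundary burst seen in Fixed Window.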
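One common way to realize a sliding window is to keep a log of recent request timestamps. The following is a rough sketch of that variant under assumed names (`SlidingWindowLimiter`, `allow`, `limit`, `window_seconds`, `clock`); the video may describe a different variant, such as a weighted counter.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Sliding-window log (hypothetical sketch): at most `limit`
    requests within any trailing `window_seconds` interval."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock              # injectable clock, for testing
        self.timestamps = deque()       # accepted request times, oldest first

    def allow(self):
        now = self.clock()
        cutoff = now - self.window_seconds
        # Drop timestamps that have slid out of the trailing window.
        while self.timestamps and self.timestamps[0] <= cutoff:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True
```

The trade-off is memory: the log stores one timestamp per accepted request, whereas a fixed window stores a single counter.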

What role does Redis play in a rate limiting architecture?

-Redis is used to store the token buckets for each user, offering high performance and low latency. It helps verify if a user has available tokens for their requests, allowing efficient rate limiting in real-time.
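The per-user lookup described here can be sketched with a plain dictionary standing in for Redis. Everything named below (`PerUserRateLimiter`, `allow`, `store`, `capacity`, `refill_rate`, `clock`) is an assumption for illustration, and no Redis client is used. In a real deployment the read-modify-write step would need to be atomic across servers, for example by running it inside Redis as a script or transaction.

```python
import time


class PerUserRateLimiter:
    """One token bucket per user, kept in a key-value store (hypothetical sketch).

    `store` is a dict standing in for Redis: user_id -> (tokens, last_refill).
    """

    def __init__(self, capacity, refill_rate, store=None, clock=time.time):
        self.capacity = capacity
        self.refill_rate = refill_rate      # tokens added per second
        self.store = {} if store is None else store
        self.clock = clock                  # injectable clock, for testing

    def allow(self, user_id):
        now = self.clock()
        # A user seen for the first time starts with a full bucket.
        tokens, last = self.store.get(user_id, (float(self.capacity), now))
        tokens = min(self.capacity, tokens + (now - last) * self.refill_rate)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1                     # decrement the bucket on each request
        # NOTE: not atomic; concurrent callers could race on this write.
        self.store[user_id] = (tokens, now)
        return allowed
```

Keeping only two numbers per user keeps the stored state small, which is part of why a low-latency key-value store fits this role.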

What are some advanced rate limiting concepts mentioned in the video?

-Advanced concepts include burst handling, where temporary spikes in traffic are allowed without immediate rejection, distributed rate limiting using Redis clusters for better scalability, and logging/alarming with CloudWatch to monitor rate limiting events.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Understanding OpenAI's API Rate Limits: Best Practices For AI SaaS Developers

Distribusi Poisson | Materi dan Contoh Soal

User stories

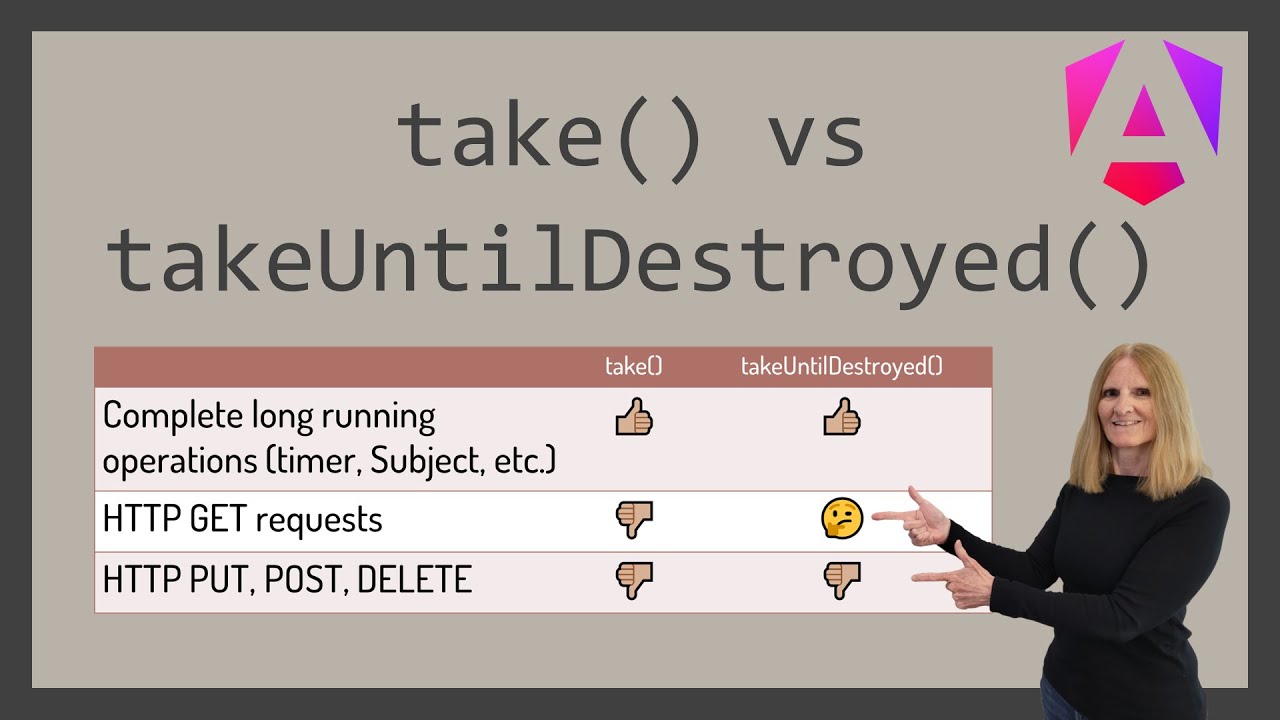

When to use take() vs takeUntilDestroyed()?

Unrestricted Resource Consumption - 2023 OWASP Top 10 API Security Risks

MyInvois Portal User Guide (Chapter 10) - User Representative Management

5.0 / 5 (0 votes)