Caching in distributed systems: A friendly introduction

Summary

TL;DR: This chapter covers caching in distributed systems: its benefits, its challenges, and strategies for getting the most out of it. Caching reduces latency by keeping frequently accessed data in memory, so repeated requests avoid slower database queries. The chapter explains cache eviction policies such as Least Recently Used (LRU) and Least Frequently Used (LFU), and discusses cache misses, eventual consistency, and memory limits. It also describes how different cache placements (local, database, and distributed) can be used in large-scale systems, with an emphasis on choosing among them based on system requirements.

Takeaways

- Caching reduces latency by storing frequently accessed data in fast, temporary storage, improving overall system performance.

- Caching avoids repetitive database queries, saving time and resources, especially in large-scale distributed systems.

- When similar users need the same data, you can compute it once, cache it, and reuse it across the group, cutting down on redundant database queries.

- Caches are faster to query than databases, often reducing the time needed to fetch data from around 200 ms to just a few milliseconds.

- Large-scale systems can't cache the entire database due to memory limitations, so it's important to cache the most frequently accessed data.

- Cache placement and eviction strategies (like Least Recently Used or Least Frequently Used) play a critical role in managing a cache effectively.

- Cache misses occur when requested data isn't in the cache. The system must then query the database, which increases latency.

- Caching introduces the challenge of eventual consistency: cached data can lag behind the database, so readers may briefly see stale values.

- Keeping the cache synchronized with the database when data is updated is a key concern when implementing caching systems.

- For large-scale systems, a distributed cache scales independently of the application servers, so multiple services can share the same cache and cache changes don't require redeploying those services.

- A good cache policy (eviction and update strategies) is essential to avoid unnecessary cache evictions and maintain high system efficiency.

Q & A

What is caching, and why is it important in distributed systems?

- Caching stores frequently accessed data in a faster, more accessible location (such as memory) to reduce the time needed to retrieve it. In distributed systems, it reduces latency and computational overhead by avoiding repeated database queries.

How does caching improve the user experience on platforms like Instagram?

- On platforms like Instagram, caching reduces the time it takes to load a user's news feed. Once the feed has been generated, it is stored in memory, so subsequent requests are served from there instead of querying the database again.
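
The read path described above can be sketched as follows. This is a minimal illustration, not how any real platform implements it: `FeedCache` and `build_feed` are hypothetical names, a plain dictionary stands in for the in-memory cache, and `build_feed` stands in for the expensive database query.

```python
from typing import Callable, Dict, List


class FeedCache:
    """Check memory first; fall back to the (hypothetical) database query on a miss."""

    def __init__(self, build_feed: Callable[[str], List[str]]) -> None:
        self._build_feed = build_feed            # stand-in for the expensive query
        self._store: Dict[str, List[str]] = {}   # stand-in for the in-memory cache
        self.hits = 0
        self.misses = 0

    def get_feed(self, user_id: str) -> List[str]:
        if user_id in self._store:               # cache hit: no database work
            self.hits += 1
            return self._store[user_id]
        self.misses += 1                         # cache miss: query, then remember
        feed = self._build_feed(user_id)
        self._store[user_id] = feed
        return feed


db_calls: List[str] = []


def build_feed(user_id: str) -> List[str]:
    # Records every call so the number of "database" queries can be counted.
    db_calls.append(user_id)
    return [f"post-{i}-for-{user_id}" for i in range(3)]


feed_cache = FeedCache(build_feed)
first = feed_cache.get_feed("alice")    # miss: goes to the "database"
second = feed_cache.get_feed("alice")   # hit: served from memory
print(feed_cache.hits, feed_cache.misses, len(db_calls))
```

The second request never reaches `build_feed`, which is the saving the answer describes. The sketch never expires or updates entries, so it ignores the staleness problem entirely.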

What are the key benefits of caching?

- The key benefits of caching are reduced latency, more efficient resource usage, and faster response times. By serving frequently requested data from the cache instead of querying a database, systems can respond faster and save computational resources.
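
To make the latency benefit concrete, here is a rough back-of-the-envelope sketch. The numbers are illustrative assumptions: about 200 ms for a database query and 2 ms for a cache lookup, with a miss paying for both the failed cache lookup and the database query. The function name `expected_latency_ms` is made up for this example.

```python
def expected_latency_ms(hit_ratio: float, cache_ms: float, db_ms: float) -> float:
    """Average read latency when a miss costs a cache lookup plus a database query."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    hit_cost = cache_ms
    miss_cost = cache_ms + db_ms
    return hit_ratio * hit_cost + (1.0 - hit_ratio) * miss_cost


# Assumed figures: 2 ms cache lookup, 200 ms database query.
for ratio in (0.0, 0.5, 0.9, 0.99):
    average = expected_latency_ms(ratio, cache_ms=2.0, db_ms=200.0)
    print(f"hit ratio {ratio:.2f}: about {average:.1f} ms on average")
```

Under these assumptions the average falls from roughly 202 ms with no hits to about 22 ms at a 90% hit ratio, which is why the hit ratio matters more than the raw speed of the cache.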

What is the difference between client-to-server and server-to-database communication in caching?

- Client-to-server communication refers to the time it takes for a user's device to request data from a server, while server-to-database communication refers to the time it takes for the server to retrieve data from the database. Caching typically focuses on reducing server-to-database communication to minimize latency.

Why doesn't caching always involve placing the entire database in memory?

- Placing an entire database in memory is impractical for large-scale systems because memory is limited and expensive at that scale. Instead, caching stores only the most frequently accessed data, which improves efficiency without overwhelming system resources.

What are some common cache eviction policies, and why are they important?

- Common cache eviction policies include Least Recently Used (LRU), which evicts the entry that has gone longest without being accessed, and Least Frequently Used (LFU), which evicts the entry that has been accessed the fewest times. These policies determine which data to remove when the cache is full. They are important because they keep the cache efficient by retaining the most relevant data and avoiding unnecessary recomputation.
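
As an illustration of LRU eviction, here is a minimal single-process sketch built on Python's `collections.OrderedDict`. The class name `LRUCache` and its methods are chosen for this example and are not taken from any particular cache product.

```python
from collections import OrderedDict
from typing import Any, Hashable, List


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable, default: Any = None) -> Any:
        if key not in self._items:
            return default                   # cache miss
        self._items.move_to_end(key)         # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry

    def keys(self) -> List[Hashable]:
        # Ordered from least to most recently used.
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")       # "a" becomes the most recently used entry
lru.put("c", 3)    # cache is full, so "b" is evicted
print(lru.keys())  # ['a', 'c']
```

An LFU cache would keep an access count per key and evict the smallest count instead. LRU is often the simpler starting point because it needs no counters.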

What is cache thrashing, and how can it negatively affect performance?

- Cache thrashing occurs when the system repeatedly evicts cache entries and then reloads them, for example because the eviction policy is a poor match for the access pattern. This wastes computation and memory, reduces the effectiveness of caching, and increases latency.
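
One common way to trigger this behavior is a cyclic access pattern over slightly more keys than the cache can hold. The simulation below is a sketch under that assumption. `lru_hit_ratio` is a hypothetical helper that replays an access sequence against an LRU cache and reports the fraction of hits.

```python
from collections import OrderedDict
from typing import Hashable, Iterable


def lru_hit_ratio(accesses: Iterable[Hashable], capacity: int) -> float:
    """Replay accesses against an LRU cache of the given capacity; return hits / total."""
    cache: "OrderedDict[Hashable, bool]" = OrderedDict()
    hits = 0
    total = 0
    for key in accesses:
        total += 1
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # refresh recency on a hit
        else:
            cache[key] = True              # load on a miss
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / total if total else 0.0


# Four keys requested round-robin, 100 times each.
cyclic = [key for _ in range(100) for key in ("a", "b", "c", "d")]

thrashing = lru_hit_ratio(cyclic, capacity=3)  # one slot too few
healthy = lru_hit_ratio(cyclic, capacity=4)    # whole working set fits
print(f"capacity 3: {thrashing:.2f}, capacity 4: {healthy:.2f}")
```

With three slots, every request evicts exactly the key that will be needed next, so the cache never produces a hit even though it is always full. One extra slot, or a policy better suited to the access pattern, removes the problem.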

What does 'eventual consistency' mean in the context of caching?

- Eventual consistency means that after data is updated in the database, the cached copy is not updated immediately but will reflect the change after some delay. Until then, the cache can serve stale data, which can cause problems, especially in critical systems such as financial applications.
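
A simple way to bound how long stale data can be served is to give each cache entry a time-to-live (TTL) and to invalidate entries explicitly on writes. The sketch below assumes a hypothetical `TTLCache` class, a dictionary standing in for the database, and a fake clock so the example runs instantly. It is one possible approach, not the only one.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Read-through cache whose entries expire after ttl_seconds."""

    def __init__(self, load: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load            # stand-in for the database read
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the example can fake time
        self._entries: Dict[str, Tuple[Any, float]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0]          # not expired; may be stale relative to the database
        value = self._load(key)      # missing or expired: reload
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: str) -> None:
        # Call after a write so the next read reloads immediately.
        self._entries.pop(key, None)


database = {"balance:alice": 100}
fake_now = [0.0]
ttl_cache = TTLCache(database.__getitem__, ttl_seconds=30.0,
                     clock=lambda: fake_now[0])

before = ttl_cache.get("balance:alice")  # loads 100 from the "database"
database["balance:alice"] = 50           # the write reaches the database only
stale = ttl_cache.get("balance:alice")   # still 100: inside the stale window
fake_now[0] = 31.0                       # TTL has elapsed
fresh = ttl_cache.get("balance:alice")   # reloads and sees 50
print(before, stale, fresh)
```

The TTL caps staleness at 30 seconds here. Calling `invalidate` right after a write shortens the window further, at the cost of coupling the write path to the cache.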

What are the options for cache placement, and which is best for large-scale systems?

- A cache can be placed in memory on the application servers, within the database, or in an external distributed cache. For large-scale systems, a distributed cache is typically the best choice because it can scale independently, serve multiple services, and have its caching algorithms updated without redeploying those services.

Why is it important for engineers to predict which data to store in cache?

- Predicting which data to store in the cache is crucial for optimizing performance. By identifying and caching the most frequently accessed data, engineers can ensure that the cache is used effectively, minimizing the need for repeated database queries and reducing overall system latency.
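
One simple heuristic for this prediction is to assume that recent access frequency is a reasonable guide to future demand, then pick the most requested keys from an access log. The sketch below uses made-up keys and a hypothetical `hottest_keys` helper. Real systems may need better signals than raw counts.

```python
from collections import Counter
from typing import Iterable, List


def hottest_keys(access_log: Iterable[str], cache_slots: int) -> List[str]:
    """Return the cache_slots most frequently requested keys, most frequent first."""
    counts = Counter(access_log)
    return [key for key, _ in counts.most_common(cache_slots)]


# Illustrative access log; the key names are invented for this example.
access_log = [
    "feed:alice", "feed:bob", "feed:alice",
    "profile:carol", "feed:alice", "feed:bob",
]

to_cache = hottest_keys(access_log, cache_slots=2)
# Fraction of logged requests that the chosen keys would have served.
covered = sum(1 for key in access_log if key in to_cache) / len(access_log)
print(to_cache, f"{covered:.0%} of logged requests covered")
```

Here two cached keys would have covered five of the six logged requests. Skewed access patterns like this are what make caching a small subset of the data worthwhile.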

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

What are Distributed CACHES and how do they manage DATA CONSISTENCY?

How does Caching on the Backend work? (System Design Fundamentals)

19# Всё о кешированиии в битриксе | Видеокурс: Создание сайта на 1С Битрикс

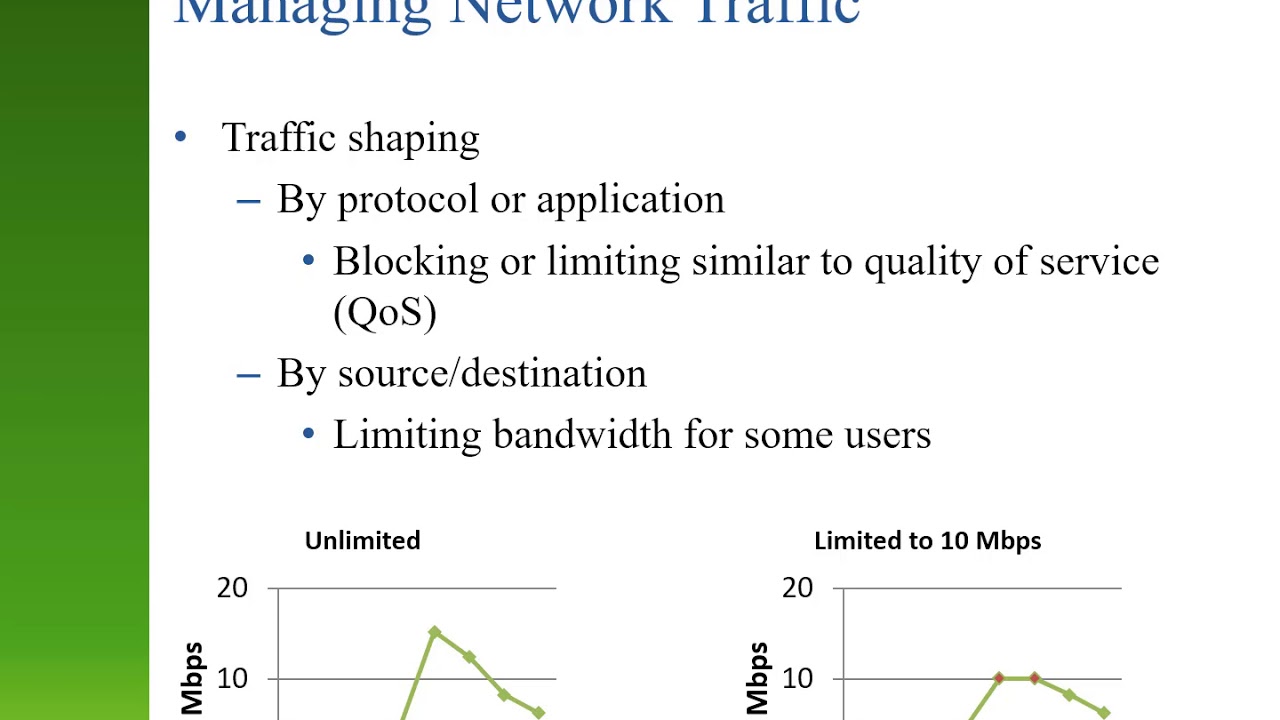

Chapter 12 - Network Management

Everything you need to know about HTTP Caching

Map of Computer Science

5.0 / 5 (0 votes)