Matrix Diagonalization

Summary

TLDR: In this linear algebra lesson, matrix diagonalization is presented as an extension of eigenvalues and eigenvectors. The video defines diagonalization and states the conditions under which a square matrix is diagonalizable. It covers the steps involved: finding eigenvalues and eigenvectors, constructing the matrix P, and verifying the resulting diagonal matrix. The lesson also highlights key theorems on diagonalization, emphasizing the need for linearly independent eigenvectors and the fact that an n×n matrix with n distinct eigenvalues is always diagonalizable. Examples are provided to illustrate the concepts.

Takeaways

- 😀 The topic of the lecture is matrix diagonalization, a continuation of previous discussions on eigenvalues and eigenvectors.

- 😀 A square matrix A is said to be diagonalizable if there exists an invertible matrix 'P' such that P⁻¹AP is a diagonal matrix.

- 😀 The lecture introduces a theorem that connects diagonalizability to eigenvectors: an n×n matrix is diagonalizable if and only if it has n linearly independent eigenvectors.

- 😀 The procedure for diagonalizing a matrix involves finding its eigenvalues, then determining eigenvectors corresponding to these eigenvalues.

- 😀 The first step in diagonalization is to solve the characteristic equation to find the eigenvalues.

- 😀 Once eigenvalues are found, the next step is to find eigenvectors corresponding to each eigenvalue, ensuring they form a linearly independent set.

- 😀 The matrix 'P' for diagonalization is formed by using the eigenvectors as its columns.

- 😀 The diagonal matrix 'D' is created with eigenvalues placed along the diagonal, in the same order as the corresponding eigenvectors in 'P'.

- 😀 A worked example demonstrates the diagonalization process, including finding the eigenvalues, eigenvectors, and constructing the matrices 'P' and 'D'.

- 😀 A theorem ensures that eigenvectors corresponding to distinct eigenvalues are linearly independent, so an n×n matrix with n distinct eigenvalues is diagonalizable.

Q & A

What does it mean for a matrix to be diagonalizable?

-A square matrix A is said to be diagonalizable if there exists an invertible matrix P such that the product P⁻¹AP is a diagonal matrix. In that case, P is said to diagonalize A.

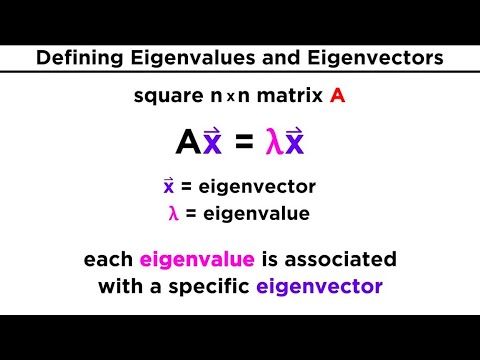
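A small worked instance may make the definition concrete. The matrix A below is an illustrative choice, not one taken from the video; its eigenvalues are 2 and 5, with eigenvectors (1, -2) and (1, 1) used as the columns of P.

```latex
A = \begin{bmatrix} 4 & 1 \\ 2 & 3 \end{bmatrix}, \qquad
P = \begin{bmatrix} 1 & 1 \\ -2 & 1 \end{bmatrix}, \qquad
P^{-1} = \frac{1}{3}\begin{bmatrix} 1 & -1 \\ 2 & 1 \end{bmatrix}

P^{-1} A P
  = \frac{1}{3}\begin{bmatrix} 1 & -1 \\ 2 & 1 \end{bmatrix}
    \begin{bmatrix} 2 & 5 \\ -4 & 5 \end{bmatrix}
  = \begin{bmatrix} 2 & 0 \\ 0 & 5 \end{bmatrix} = D
```

The middle factor in the second line is the product AP, and the diagonal entries of D appear in the same order as the eigenvector columns of P.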

What is the relationship between eigenvalues and diagonalization?

-Eigenvalues are crucial for diagonalization. When a matrix is diagonalized, its eigenvalues form the diagonal elements of the resulting diagonal matrix. The eigenvalue in the i-th diagonal position corresponds to the eigenvector placed in the i-th column of P.

What are the steps involved in diagonalizing a matrix?

-The steps include: 1) Finding the eigenvalues by solving the characteristic equation, 2) Finding the corresponding eigenvectors, 3) Forming the matrix P with the eigenvectors as columns, and 4) Constructing the diagonal matrix with the eigenvalues on the diagonal.
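The four steps can be sketched in code for the simplest case. This is a minimal illustration, assuming a real 2×2 matrix with two distinct real eigenvalues; the function names `diagonalize_2x2` and `matmul` and the example matrix are hypothetical and do not come from the video.

```python
import math

def diagonalize_2x2(A):
    """Return (P, D) with A P = P D for a real 2x2 matrix A.

    Sketch only: assumes two distinct real eigenvalues.
    """
    (a, b), (c, d) = A

    # Step 1: solve det(A - λI) = λ^2 - (a + d)λ + (ad - bc) = 0.
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det
    if disc <= 0:
        raise ValueError("this sketch needs two distinct real eigenvalues")
    root = math.sqrt(disc)
    eigenvalues = [(trace - root) / 2, (trace + root) / 2]

    # Step 2: for each λ, pick a nonzero solution v of (A - λI)v = 0.
    eigenvectors = []
    for lam in eigenvalues:
        if b != 0:
            v = (b, lam - a)      # satisfies (a - λ)x + b y = 0
        elif c != 0:
            v = (lam - d, c)      # satisfies c x + (d - λ)y = 0
        else:
            # A is already diagonal: standard basis vectors are eigenvectors.
            v = (1.0, 0.0) if math.isclose(lam, a) else (0.0, 1.0)
        eigenvectors.append(v)

    # Step 3: eigenvectors become the columns of P.
    v1, v2 = eigenvectors
    P = [[v1[0], v2[0]], [v1[1], v2[1]]]

    # Step 4: eigenvalues go on the diagonal of D, in the same order.
    D = [[eigenvalues[0], 0.0], [0.0, eigenvalues[1]]]
    return P, D

def matmul(X, Y):
    """Plain nested-list matrix product."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

A = [[4, 1], [2, 3]]
P, D = diagonalize_2x2(A)
# Verification: A P should equal P D, which is P^-1 A P = D rewritten
# without computing the inverse.
AP, PD = matmul(A, P), matmul(P, D)
```

Checking A P = P D instead of P⁻¹AP = D avoids computing an inverse; the two statements are equivalent whenever P is invertible.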

What is the significance of the matrix P in diagonalization?

-The columns of P are linearly independent eigenvectors of the original matrix A. Because its columns are independent, P is invertible, and P⁻¹AP equals the diagonal matrix D. P is essential because it links the original matrix to its diagonalized form.
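The reason eigenvector columns produce a diagonal matrix can be written out in one line. In the sketch below, v_1, ..., v_n denote the eigenvector columns of P and λ_1, ..., λ_n the matching eigenvalues; this notation is assumed here for illustration.

```latex
AP = A \begin{bmatrix} v_1 & \cdots & v_n \end{bmatrix}
   = \begin{bmatrix} A v_1 & \cdots & A v_n \end{bmatrix}
   = \begin{bmatrix} \lambda_1 v_1 & \cdots & \lambda_n v_n \end{bmatrix}
   = PD,
\qquad D = \operatorname{diag}(\lambda_1, \dots, \lambda_n)
```

When the columns of P are linearly independent, P has an inverse, and multiplying AP = PD on the left by that inverse gives P⁻¹AP = D.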

How do you find eigenvalues of a matrix?

-To find the eigenvalues, you need to solve the characteristic equation, which is det(A - λI) = 0, where A is the matrix, λ is the eigenvalue, and I is the identity matrix. The solutions to this equation give the eigenvalues.
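As a worked instance of the characteristic equation, consider the 2×2 matrix below (an illustrative choice, not taken from the video).

```latex
A = \begin{bmatrix} 4 & 1 \\ 2 & 3 \end{bmatrix}, \qquad
\det(A - \lambda I)
  = \det \begin{bmatrix} 4 - \lambda & 1 \\ 2 & 3 - \lambda \end{bmatrix}
  = (4 - \lambda)(3 - \lambda) - 2
  = \lambda^2 - 7\lambda + 10
  = (\lambda - 2)(\lambda - 5)
```

Setting the polynomial to zero gives the eigenvalues λ = 2 and λ = 5.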

What is the characteristic equation of a matrix?

-The characteristic equation of a matrix is given by det(A - λI) = 0, where A is the matrix, λ represents the eigenvalue, and I is the identity matrix. This equation is used to find the eigenvalues of the matrix.

What does it mean for eigenvectors to be linearly independent?

-Eigenvectors are linearly independent if no eigenvector can be written as a linear combination of the others. In the context of diagonalization, P is invertible only when its columns are linearly independent, so an n×n matrix needs n linearly independent eigenvectors to be diagonalizable.

Why is it important for the eigenvectors to be linearly independent in diagonalization?

-The eigenvectors must be linearly independent because they form the columns of P, and P has an inverse only if its columns are linearly independent. If an n×n matrix has fewer than n linearly independent eigenvectors, it cannot be diagonalized.
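One way to test whether a candidate set of n eigenvectors is linearly independent is to place them as the columns of a square matrix and check that its determinant is nonzero. The sketch below assumes integer or rational entries and uses exact arithmetic from the standard library; the helper names `determinant` and `columns_independent` are hypothetical.

```python
from fractions import Fraction

def determinant(M):
    """Determinant of a square matrix via Gaussian elimination (exact)."""
    n = len(M)
    rows = [[Fraction(x) for x in row] for row in M]
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)          # no pivot: the columns are dependent
        if pivot != col:
            rows[col], rows[pivot] = rows[pivot], rows[col]
            det = -det                  # a row swap flips the sign
        det *= rows[col][col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = rows[r][col] / rows[col][col]
            for k in range(col, n):
                rows[r][k] -= factor * rows[col][k]
    return det

def columns_independent(P):
    """True when the columns of the square matrix P are linearly independent."""
    return determinant(P) != 0

# Columns (1, -2) and (1, 1) are independent; columns (1, 2) and (2, 4) are not.
independent = columns_independent([[1, 1], [-2, 1]])
dependent = columns_independent([[1, 2], [2, 4]])
```

Exact fractions are used so that a determinant of zero is detected reliably; with floating-point entries a tolerance would be needed instead.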

What happens if the eigenvalues of a matrix are not distinct?

-Repeated eigenvalues do not necessarily prevent diagonalization, but diagonalizability is no longer guaranteed. A matrix with repeated eigenvalues is still diagonalizable if each repeated eigenvalue supplies as many linearly independent eigenvectors as its multiplicity, so that the matrix has a full set of n linearly independent eigenvectors.

Can a matrix always be diagonalized?

-Not every matrix can be diagonalized. A matrix can be diagonalized if and only if it has a full set of linearly independent eigenvectors. If the matrix does not have enough independent eigenvectors (e.g., in the case of defective matrices), it cannot be diagonalized.
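A standard small example of a defective matrix is shown below; it is a textbook illustration and is not taken from the video.

```latex
A = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}, \qquad
\det(A - \lambda I) = (1 - \lambda)^2 = 0 \;\Longrightarrow\; \lambda = 1 \text{ (repeated)}

(A - I)\,v = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}
             \begin{bmatrix} x \\ y \end{bmatrix} = 0
\;\Longrightarrow\; y = 0, \qquad
v = t \begin{bmatrix} 1 \\ 0 \end{bmatrix}
```

Every eigenvector is a multiple of (1, 0), so there is only one linearly independent eigenvector for a 2×2 matrix, and no invertible P can be built from eigenvectors.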
