Stanford XCS224U: NLU I Contextual Word Representations, Part 1: Guiding Ideas I Spring 2023

Summary

TLDR: This video traces the evolution of neural language representations, from early static word embeddings like word2vec to massive pretraining with models like BERT and GPT-3. It covers the importance of positional encoding, the transition from static to contextual representations, and the role of fine-tuning models for specific tasks. The video also emphasizes the transformative impact of large-scale pretraining and the growing reliance on fine-tuning models via APIs, while highlighting the continued value of custom code in understanding and leveraging these powerful systems.

Takeaways

- 😀 The challenge of capturing word order in sequences is addressed through positional encoding, which combines word embeddings with position information.

- 😀 Positional encoding can be as simple as adding a vector representing word positions, but it has its limitations, which need further exploration.

- 😀 Massive scale pretraining has become a key concept in NLP, enabled by the distributional hypothesis, which suggests learning from word co-occurrence patterns in large corpora.

- 😀 Early models like word2vec and GloVe laid the foundation for learning word relationships at scale, which was expanded upon by more advanced models like ELMo.

- 😀 ELMo demonstrated the potential of learning contextual word representations and transferring this knowledge to specific tasks through fine-tuning.

- 😀 GPT and BERT further pushed the limits of contextual learning, with models like GPT-3 marking a significant phase change in NLP research by enabling task performance from minimal training data.

- 😀 The BERT era shifted NLP from static word embeddings to fine-tuning contextual models that have been pretrained on large-scale data.

- 😀 Pretraining in NLP evolved from using static word representations and RNNs to contextual models like BERT, which allowed for more powerful and adaptable fine-tuning.

- 😀 Fine-tuning practices are increasingly happening with large models via APIs, removing the need for custom code to modify model parameters directly.

- 😀 The future of NLP may see less custom coding for fine-tuning, but there remains hope that custom code will continue to play an important role in specific, detailed analyses.

- 😀 The use of large-scale pretraining in models like GPT-3 allows them to perform tasks with minimal task-specific training, showing the power of transfer learning in NLP.

Q & A

What is positional encoding, and why is it important in transformers?

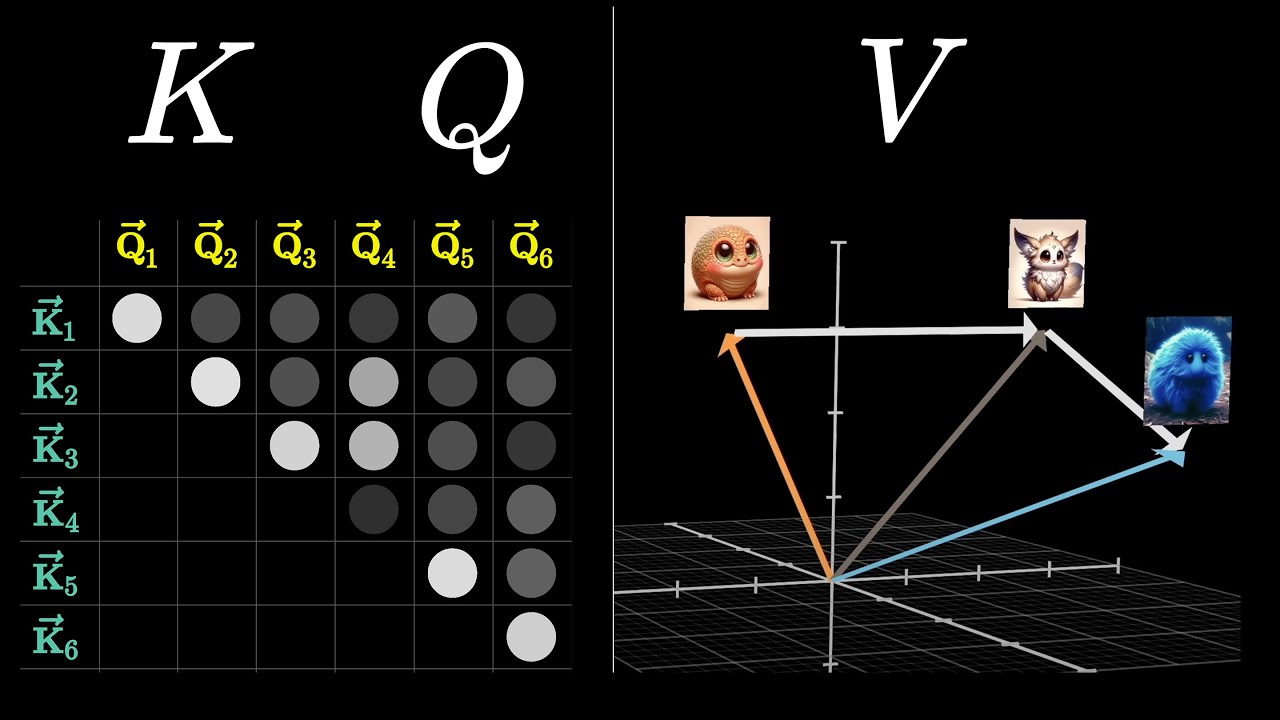

-Positional encoding is a method for injecting information about the position of each word in a sequence into the transformer model, since transformers do not inherently capture word order. It adds to each word's embedding a vector that depends only on the word's position in the sequence, so the same word receives a different input representation at different positions and the model can take word order into account.

How do transformers handle word order if they don’t inherently account for it?

-Transformers handle word order through positional encoding, which adds information about the position of words in a sequence to their embeddings. This allows the model to capture sequential relationships despite its architecture not processing words in order as RNNs do.
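
The addition of position information to word embeddings described above can be sketched as follows. This is a minimal illustration, assuming the fixed sinusoidal scheme from the original Transformer paper (models such as BERT instead learn their position vectors). The function names and the toy embedding values are hypothetical.

```python
import math

def sinusoidal_position_vector(position, dim):
    """Return a fixed vector that depends only on the position index."""
    vec = []
    for k in range(dim):
        # Dimensions (2i, 2i+1) share one frequency: 1 / 10000^(2i / dim).
        freq = 1.0 / (10000 ** ((k // 2) * 2 / dim))
        angle = position * freq
        # Even dimensions use sine, odd dimensions use cosine.
        vec.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
    return vec

def add_positions(word_vectors):
    """Add a position vector to each word vector, elementwise."""
    dim = len(word_vectors[0])
    return [
        [w + p for w, p in zip(vec, sinusoidal_position_vector(pos, dim))]
        for pos, vec in enumerate(word_vectors)
    ]

# Toy static embeddings (made-up values, for illustration only).
TOY_EMBEDDINGS = {
    "the": [0.1, 0.2, 0.3, 0.4],
    "dog": [0.5, -0.1, 0.0, 0.2],
    "chased": [-0.3, 0.4, 0.1, 0.0],
    "cat": [0.4, 0.0, -0.2, 0.3],
}

tokens = ["the", "dog", "chased", "the", "cat"]
encoded = add_positions([TOY_EMBEDDINGS[t] for t in tokens])

# "the" occurs at positions 0 and 3; its static vector is identical in both
# places, but the position-aware inputs differ.
print(encoded[0])
print(encoded[3])
```

Because the position vector is simply added, the model input keeps the same dimensionality as the word embedding; only the values change with position.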

What is the main limitation of using positional encoding in transformers?

-The main limitation of positional encoding is that it is a fixed, predefined method that doesn't adapt to the specific needs of different tasks. The course mentions that the limitations of this approach will be explored in more detail later, suggesting that more flexible and dynamic alternatives may be necessary.

What was the breakthrough idea introduced by the distributional hypothesis in NLP?

-The distributional hypothesis suggested that the meaning of a word can be inferred from the words that appear around it in a large corpus. This concept led to the development of models like word2vec and GloVe, which rely on vast, unlabeled data to learn word representations, bypassing the need for manually crafted features.
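
To make the distributional idea concrete, here is a small sketch that builds word vectors from raw co-occurrence counts and compares them with cosine similarity. It is not word2vec or GloVe, which learn dense vectors with their own training objectives; the tiny corpus, window size, and function names are assumptions chosen for illustration.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Count, for each word, the words appearing within `window` tokens of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Unlabeled toy corpus (hypothetical sentences).
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the ball",
    "stocks fell on the market",
]

vecs = cooccurrence_vectors(corpus)
print("cat vs dog:   ", cosine(vecs["cat"], vecs["dog"]))
print("cat vs market:", cosine(vecs["cat"], vecs["market"]))
```

In this toy corpus, "cat" and "dog" share more context words than "cat" and "market", so their count vectors end up closer, without any labels or hand-built features.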

How did ELMo revolutionize the approach to word representations?

-ELMo (Embeddings from Language Models) marked a breakthrough by offering *contextual* word representations. Unlike previous methods, ELMo's embeddings change depending on the context of the word in the sentence, making it more accurate for tasks where word meanings are dependent on their surroundings.
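
The difference between static and contextual representations can be illustrated with a deliberately simple stand-in. The sketch below is not ELMo (which uses bidirectional LSTM language models); it assumes a toy "contextual" function that averages a word's vector with its immediate neighbors, and all names and values are hypothetical.

```python
# Toy static embedding table (made-up values).
STATIC = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank": [0.5, 0.5],
}

def static_repr(tokens, i):
    """Static lookup: the same vector regardless of the surrounding words."""
    return STATIC[tokens[i]]

def toy_contextual_repr(tokens, i):
    """Toy contextual vector: average of the word and its direct neighbors."""
    window = tokens[max(0, i - 1): i + 2]
    dim = len(STATIC[tokens[i]])
    return [sum(STATIC[t][d] for t in window) / len(window) for d in range(dim)]

s1 = ["river", "bank"]
s2 = ["money", "bank"]

# Static: "bank" gets an identical vector in both phrases.
print(static_repr(s1, 1), static_repr(s2, 1))
# Contextual (toy): "bank" gets a different vector in each phrase.
print(toy_contextual_repr(s1, 1), toy_contextual_repr(s2, 1))
```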

How did the GPT-3 paper contribute to the advancement of NLP research?

-GPT-3 demonstrated the power of massive-scale pretraining: a model trained on an enormous amount of data could be prompted with only a few task-specific examples, without updating its parameters, and still perform the task. This approach introduced a 'phase change' in NLP, showing that large, pre-trained models could perform well across various tasks with minimal task-specific data.
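
In the GPT-3 setting, the few task-specific examples are typically supplied as demonstrations inside the prompt (few-shot, in-context learning) rather than through gradient updates. The sketch below only builds such a prompt as a string; the template, labels, and function name are hypothetical, and no model or API is called.

```python
def build_few_shot_prompt(instruction, demonstrations, query):
    """Assemble an instruction, a few labeled examples, and an unlabeled query."""
    lines = [instruction, ""]
    for text, label in demonstrations:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final item has no label; the model is expected to continue the text.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    demonstrations=[
        ("A moving, beautifully shot film.", "positive"),
        ("Two hours I will never get back.", "negative"),
    ],
    query="The plot was thin but the acting was superb.",
)
print(prompt)
```

The model's parameters stay fixed here; all task information reaches the model through the text of the prompt.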

What does fine-tuning mean in the context of language models like BERT and GPT?

-Fine-tuning refers to the process of taking a pre-trained language model, which has learned general language patterns, and then adjusting it on a specific task (e.g., sentiment analysis, translation). Fine-tuning adapts the model's general knowledge to perform well on the desired task with minimal additional data.
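
As a rough sketch of what "adjusting a pre-trained model on a specific task" means mechanically, the example below starts from given ("pretrained") embeddings and continues training them, together with a small classifier, on two labeled examples. It is a toy stand-in under stated assumptions: a mean-of-embeddings logistic classifier with hand-written gradients, not BERT or GPT, and every name and value is hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(tokens, emb, w, b):
    """Average the token embeddings, then apply a logistic classifier."""
    h = [sum(emb[t][d] for t in tokens) / len(tokens) for d in range(len(w))]
    p = sigmoid(sum(wd * hd for wd, hd in zip(w, h)) + b)
    return h, p

def mean_loss(data, emb, w, b):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for tokens, y in data:
        _, p = forward(tokens, emb, w, b)
        total += -math.log(p if y == 1 else 1.0 - p)
    return total / len(data)

def fine_tune(data, emb, w, b, lr=0.5, epochs=50, update_embeddings=True):
    """Run SGD on the task data, starting from the given parameters."""
    emb = {t: list(v) for t, v in emb.items()}  # copy; keep the originals intact
    w = list(w)
    for _ in range(epochs):
        for tokens, y in data:
            h, p = forward(tokens, emb, w, b)
            err = p - y  # gradient of the loss w.r.t. the pre-sigmoid score
            if update_embeddings:
                # Fine-tuning: the pretrained embeddings themselves are updated.
                for t in tokens:
                    for d in range(len(w)):
                        emb[t][d] -= lr * err * w[d] / len(tokens)
            for d in range(len(w)):
                w[d] -= lr * err * h[d]
            b -= lr * err
    return emb, w, b

# "Pretrained" parameters (made-up values) and a tiny sentiment task.
PRETRAINED_EMB = {"good": [0.2, 0.1], "bad": [0.1, 0.2], "movie": [0.0, 0.0]}
W0, B0 = [0.1, -0.1], 0.0
task_data = [(["good", "movie"], 1), (["bad", "movie"], 0)]

before = mean_loss(task_data, PRETRAINED_EMB, W0, B0)
tuned_emb, tuned_w, tuned_b = fine_tune(task_data, PRETRAINED_EMB, W0, B0)
after = mean_loss(task_data, tuned_emb, tuned_w, tuned_b)
print("loss before:", before)
print("loss after: ", after)
```

Passing `update_embeddings=False` would instead keep the embeddings frozen and train only the classifier on top, which is closer to using the pretrained vectors as fixed features.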

How has the approach to fine-tuning changed from the 2016-2018 era to the BERT era?

-From 2016 to 2018, the common practice was to take pretrained static word embeddings like word2vec, feed them into a task-specific model such as an RNN, and train that model on the task. In the BERT era (from 2018 on), fine-tuning shifted to contextual models, where the pretrained contextual model itself is fine-tuned for specific tasks, enabling more effective and flexible use of NLP models.

What is the significance of models like GPT and BERT in the evolution of NLP?

-GPT and BERT represent a shift to larger, more powerful language models that are pre-trained on vast datasets and then fine-tuned for specific tasks. This shift allows for much more accurate and flexible handling of language, offering a new paradigm for NLP tasks that rely less on task-specific features and more on generalizable, context-driven learning.

Why might we be moving toward an era where most fine-tuning occurs via APIs?

-As language models grow larger and more complex, working directly with their parameters becomes less practical. Instead, many developers will likely rely on APIs, where they can fine-tune models indirectly through services provided by companies, without needing to write custom code that modifies the model parameters themselves.
