But what is a GPT? Visual intro to Transformers | Deep learning, chapter 5

Summary

TLDR: This video explores the inner workings of transformer-based language models, focusing on key concepts such as word embeddings, context, and next-token probability prediction. It explains how models like GPT-3 encode word meanings as vectors and refine them through successive layers, using attention mechanisms to incorporate context and Softmax to turn the final output into a probability distribution over the next token. It also covers the role of the Unembedding matrix and of temperature in generating diverse outputs. The goal is to build a solid understanding of these foundational elements in preparation for a deeper look at attention and the overall transformer architecture.

Takeaways

- 😀 The embedding dimension in a transformer model like GPT-3 is large (12,288), and the embedding matrix, which stores one such vector for each token in the vocabulary, accounts for about 617 million parameters.

- 😀 Initially, word embeddings encode the meaning of individual words, but as the data passes through the network, embeddings evolve to reflect richer, contextual meaning.

- 😀 The model uses a fixed context size (e.g., 2048 for GPT-3), meaning it can only consider a limited amount of text at a time for making predictions.

- 😀 Because of this limited context size, early chatbots built on models like GPT-3 could 'lose the thread' in longer conversations.

- 😀 The goal of the transformer network is to allow each word vector to 'soak up' context and nuance, turning simple word representations into context-aware embeddings.

- 😀 The output of a transformer model is a probability distribution over all possible next tokens, generated by applying the Softmax function to the logits.

- 😀 The Unembedding matrix (W_U) converts the final vector into a score for every token in the vocabulary, adding roughly another 617 million parameters to the model.

- 😀 The Softmax function normalizes logits into a probability distribution, ensuring that the output sums to 1, with larger values receiving higher probability.

- 😀 Temperature in the Softmax function can be adjusted to control the diversity of predictions, with a low temperature making predictions more deterministic and high temperatures adding randomness.

- 😀 Understanding word embeddings, Softmax, and matrix multiplication is foundational for grasping the attention mechanism, which is key to transformer models like GPT.

Q & A

What is the embedding dimension and how does it relate to the number of weights in a transformer model?

-The embedding dimension is the length of the vector used to represent each token in the model's internal space. For GPT-3 it is 12,288, so each token is represented by a 12,288-dimensional vector. The embedding matrix stores one such vector for every token in the vocabulary (about 50,000 tokens), which comes to around 617 million parameters. These weights are learned during training and give the model its starting representation of each token, from which it builds up word relationships and context.
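The 617 million figure is simply the size of the embedding matrix: one 12,288-dimensional vector per vocabulary token. A quick arithmetic check, assuming GPT-3's vocabulary of 50,257 tokens (an exact figure not given in this summary):

```python
# Assumed: GPT-3's tokenizer vocabulary size (not stated in the summary above).
VOCAB_SIZE = 50_257
# Embedding dimension quoted above.
D_EMBED = 12_288

# The embedding matrix holds one D_EMBED-long vector per vocabulary token.
embedding_params = VOCAB_SIZE * D_EMBED
print(f"{embedding_params:,}")  # 617,558,016
```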

Why are embeddings not limited to just representing individual words in a transformer model?

-Embeddings in transformers encode more than the meaning of individual words. The initial vectors can also encode each word's position in the sequence, and as they pass through the model's layers they are repeatedly adjusted to absorb information from the surrounding text. By the final layer, a vector may represent not just a word but a much more nuanced, context-specific meaning.
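One common way to fold position into the starting vectors, assumed here (GPT-style learned position embeddings added element-wise to token embeddings), is sketched below. The toy vocabulary, sizes, and the names `token_table`, `position_table`, and `embed` are hypothetical, and the tables hold random placeholders rather than trained weights. Only the initial vectors are shown; the later refinement by attention layers is not.

```python
import random

rng = random.Random(0)
D = 4                                  # toy embedding dimension (GPT-3 uses 12,288)
VOCAB = ["the", "cat", "sat", "mat"]   # hypothetical toy vocabulary
MAX_POS = 8                            # hypothetical toy context size

# Placeholder "learned" tables filled with random numbers for illustration.
token_table = {tok: [rng.gauss(0, 1) for _ in range(D)] for tok in VOCAB}
position_table = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(MAX_POS)]

def embed(tokens):
    """Initial vector for each token = its token embedding + its position embedding."""
    return [
        [t + p for t, p in zip(token_table[tok], position_table[i])]
        for i, tok in enumerate(tokens)
    ]

vectors = embed(["the", "cat", "sat", "the"])
# The same word at two different positions gets two different starting vectors.
print(vectors[0] != vectors[3])
```

The sum keeps the vector size fixed, so every later layer sees a single vector per position that mixes "which word" with "where".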

What is the significance of the context size in a transformer model like GPT-3?

-The context size refers to the number of tokens that the model can process at once. For GPT-3, the context size is 2048 tokens, meaning the model can only consider a fixed window of 2048 tokens when making predictions. This limits how much of the preceding text can influence the model's next word prediction, which is why early versions of chatbots might struggle to maintain long-term coherence in conversations.
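A fixed context window amounts to "only the most recent tokens count". The sketch below keeps the last 2048 token ids; the function name is hypothetical, and real chat front ends may truncate or summarize earlier text differently.

```python
CONTEXT_SIZE = 2048  # GPT-3's context window, in tokens

def visible_context(token_ids, context_size=CONTEXT_SIZE):
    """Keep only the most recent `context_size` tokens; anything earlier
    cannot influence the next prediction."""
    if context_size <= 0:
        raise ValueError("context_size must be positive")
    return token_ids[-context_size:]

conversation = list(range(3000))  # stand-in for 3,000 token ids
window = visible_context(conversation)
print(len(window), window[0])  # 2048 952
```

The first 952 tokens of this 3,000-token "conversation" fall outside the window, which is exactly how an early chatbot could lose the thread.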

How does a transformer model make a prediction for the next word?

-To predict the next word, the transformer takes the final vector at the last position in the context and multiplies it by the Unembedding matrix, producing one score (logit) for each token in the vocabulary. It then applies the Softmax function to these scores to turn them into a probability distribution. The next token is sampled from this distribution; always picking the single most probable token is the special case of zero temperature.
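The whole prediction step (unembed, Softmax, then choose) fits in a few lines. This is a toy sketch with a hypothetical 3-token vocabulary, 2-dimensional vectors, and made-up values for `W_U` and `last_vector`; it contrasts greedy choice with sampling from the distribution.

```python
import math
import random

def matvec(matrix, vector):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy model: 3-token vocabulary, 2-dimensional vectors.
vocab = ["cat", "dog", "mat"]
W_U = [          # unembedding matrix: one row per vocabulary token
    [1.0, 0.0],
    [0.0, 1.0],
    [2.0, 1.0],
]
last_vector = [0.5, 0.2]  # final vector at the last position in the context

logits = matvec(W_U, last_vector)  # approximately [0.5, 0.2, 1.2]
probs = softmax(logits)

# Greedy: take the most probable token. Sampling: draw according to probs.
greedy = vocab[max(range(len(vocab)), key=probs.__getitem__)]
sampled = random.Random(0).choices(vocab, weights=probs, k=1)[0]
print(greedy, sampled)
```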

What role does the Softmax function play in predicting the next word in a transformer model?

-The Softmax function converts the raw logits produced by the Unembedding matrix into a valid probability distribution. It does this by exponentiating each value and then dividing by the sum of all the exponentials, so that every result lies between 0 and 1 and the results sum to 1. Larger logits receive higher probabilities, and the ordering of the logits is preserved.
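A minimal Softmax in plain Python follows the description above: exponentiate, then normalize. It also subtracts the largest logit first, a standard trick (not mentioned in the video) that leaves the result mathematically unchanged but avoids overflow.

```python
import math

def softmax(logits):
    """Exponentiate each logit and divide by the total so the results sum to 1.
    Subtracting the max first keeps math.exp from overflowing on large logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, -1.0])
print([round(p, 3) for p in probs])
print(softmax([1000.0, 1000.0]))  # [0.5, 0.5]; naive math.exp(1000) raises OverflowError
```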

How does temperature affect the output of a transformer model's prediction?

-Temperature controls the randomness of the model's predictions. A lower temperature makes the model more deterministic, concentrating probability on the most likely tokens; at a temperature of 0 it always picks the most probable token. A higher temperature spreads probability more evenly, making the predictions more diverse and less predictable. By adjusting the temperature, you can make the model's output more creative or more conservative, depending on the desired result.
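Temperature is applied by dividing every logit by T before the Softmax. The sketch below uses made-up logits; the function name is hypothetical, and it requires T > 0 because T = 0 is usually handled separately as "always take the argmax".

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide logits by T, then apply Softmax. T < 1 sharpens the
    distribution; T > 1 flattens it toward uniform."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; treat T = 0 as argmax")
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 5.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At T = 0.2 nearly all the probability lands on the first token; at T = 5.0 the three tokens end up much closer to equally likely.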

What are logits in the context of transformer models?

-Logits are the raw, unnormalized outputs from the model before they are passed through the Softmax function. These values are typically not probabilities and can be negative or larger than 1. The Softmax function is then used to convert logits into a probability distribution, which allows the model to predict the next word.
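Because Softmax depends only on the differences between logits, logits are best read as relative scores rather than probabilities: adding the same constant to all of them changes nothing. A small illustration with made-up logit values:

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [3.2, -1.5, 0.0, 7.1]          # raw scores: negative and > 1 are both fine
shifted = [z + 100.0 for z in logits]   # add the same constant to every logit

probs = softmax(logits)
probs_shifted = softmax(shifted)
# Only differences between logits matter, so the two distributions agree.
print(all(math.isclose(p, q) for p, q in zip(probs, probs_shifted)))  # True
```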

What is the purpose of the Unembedding matrix in transformer models?

-The Unembedding matrix maps the final vector at the last position in the context to a list of values, one for each token in the vocabulary. Each row of the matrix corresponds to one token, and the value for that token is the dot product of its row with the final vector. These values are the logits, which Softmax then turns into the probability distribution over the next token.
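Since W_U has one 12,288-long row per vocabulary token, it is the same size as the embedding matrix. The arithmetic below assumes GPT-3's 50,257-token vocabulary (not stated in this summary) and treats W_U as a separate matrix rather than one shared with the embedding matrix.

```python
VOCAB_SIZE = 50_257  # assumed GPT-3 vocabulary size
D_EMBED = 12_288     # embedding dimension

# W_U: one row of D_EMBED weights per vocabulary token.
unembedding_params = VOCAB_SIZE * D_EMBED
# The embedding matrix has the same shape, transposed.
embedding_params = D_EMBED * VOCAB_SIZE

print(f"{unembedding_params:,}")                     # 617,558,016
print(f"{embedding_params + unembedding_params:,}")  # 1,235,116,032
```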

Why is the temperature in the Softmax function sometimes referred to as a 'spice'?

-The temperature is sometimes referred to as a 'spice' because it adds an extra layer of variability or randomness to the model's output. By adjusting it, you can control how predictable or creative the output is: a higher temperature flattens the distribution toward uniform, so the output is more varied, while a lower temperature makes the predictions more deterministic.

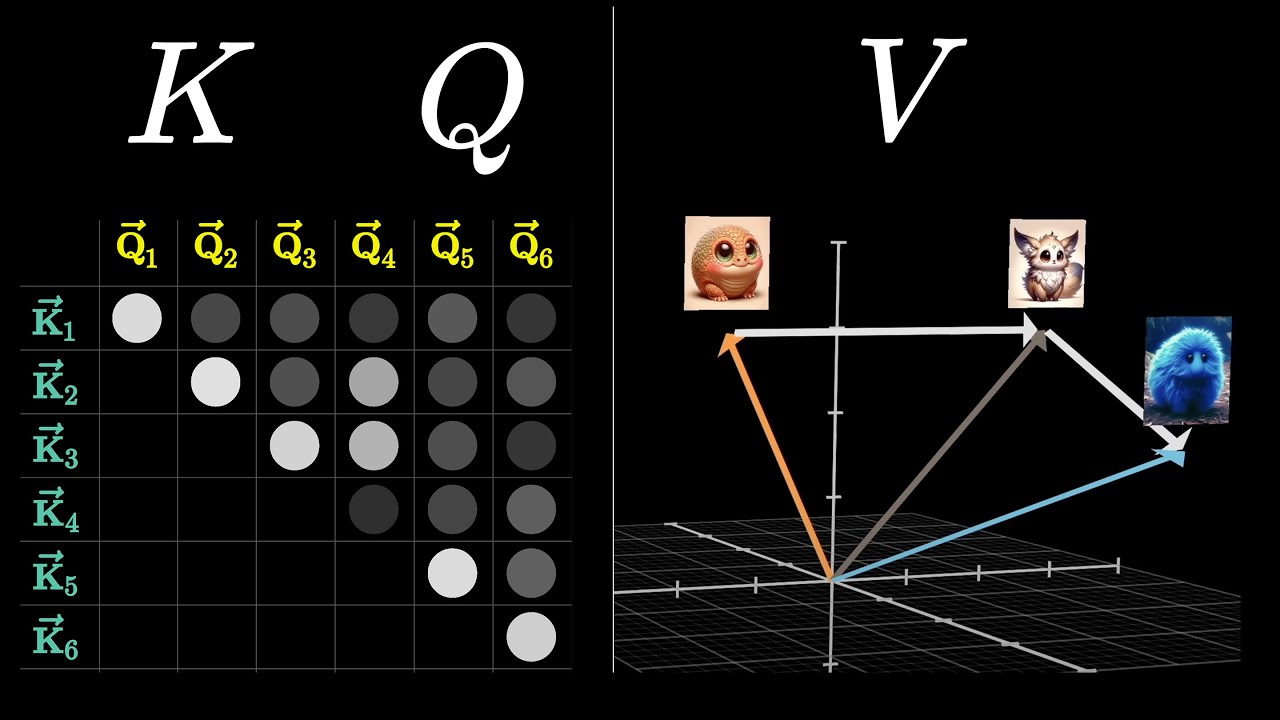

What is the connection between embedding vectors and the attention mechanism in transformer models?

-Embedding vectors are the starting point for understanding how transformers process language. The attention mechanism builds on these embeddings by allowing the model to focus on different parts of the input sequence when making predictions. The attention mechanism enables the model to dynamically adjust which parts of the input are most important, allowing it to capture long-range dependencies and relationships between words in a sequence.
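To make "focus on different parts of the input" concrete, here is a minimal single-head scaled dot-product attention in plain Python. It is a simplified sketch: it omits the learned query/key/value projections and the causal mask that GPT-style models use, and the toy vectors `Q`, `K`, `V` are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention, no masking. Each output is
    a weighted average of the value vectors, with weights softmax(q . k / sqrt(d))
    measuring how relevant each position is to the query."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Hypothetical toy vectors for a 3-token sequence.
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
Q = [[4.0, 0.0]]  # a query that "looks for" the first key direction
out = attention(Q, K, V)
print(out)
```

The query matches the first and third keys, so the output is pulled toward their value vectors and away from the second one.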
