Numeric Transformers

Summary

TLDR: In this video, we explore numeric transformers in data pre-processing, focusing on feature scaling, polynomial transformations, and discretization techniques. Feature scaling puts numerical features on a common scale, which helps model training; the methods covered are the Standard Scaler, Min-Max Scaler, and Max-Absolute Scaler. Polynomial transformers generate polynomial and interaction features so that models can capture non-linear relationships, while KBinsDiscretizer divides continuous variables into discrete bins. Applied together, these techniques speed up convergence of gradient-based training and give models features that better match the structure of the data.

Takeaways

- 😀 Feature scaling is essential for improving convergence in iterative optimization algorithms like gradient descent.

- 😀 Standard Scaler transforms data to have a mean of 0 and standard deviation of 1 using the formula: (x - mean) / standard deviation.

- 😀 Min-Max Scaler rescales data to the range 0 to 1 (by default) by subtracting the minimum value and dividing by the feature's range (max - min).

- 😀 Max-Absolute Scaler scales data to the range -1 to +1 by dividing each feature by its maximum absolute value.

- 😀 Polynomial transformations create new features by combining existing ones to capture non-linear relationships between features and labels.

- 😀 KBinsDiscretizer converts continuous variables into discrete categories by splitting them into bins (for example, equal-width bins), which can then be represented using one-hot or ordinal encoding.

- 😀 Scaling numerical features ensures faster convergence in machine learning models that rely on optimization algorithms like gradient descent.

- 😀 Polynomial transformers can generate higher-degree features that help the model learn complex, non-linear relationships.

- 😀 The fit_transform method in scikit-learn is a common API used across transformers to both fit parameters and apply the transformation in a single step.

- 😀 Feature scaling with transformers like the Standard Scaler, Min-Max Scaler, and Max-Absolute Scaler puts input features on comparable scales for better model performance.

- 😀 KBinsDiscretizer allows for uniform splitting of continuous data into bins, which is useful in converting numerical features into discrete features for certain machine learning models.
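The `fit_transform` takeaway can be checked directly. The sketch below, assuming a small made-up dataset, shows that for StandardScaler `fit_transform(X)` gives the same result as calling `fit(X)` followed by `transform(X)`, and that the parameters learned during fitting are then reused on new data through `transform`.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical training and test data: two numeric features.
X_train = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0]])
X_test = np.array([[4.0, 500.0]])

# One step: learn the mean and std from X_train and transform it.
a = StandardScaler().fit_transform(X_train)

# Two steps: fit first, then transform separately.
scaler = StandardScaler().fit(X_train)
b = scaler.transform(X_train)

print(np.allclose(a, b))  # True: both paths give the same result

# New data is transformed with the mean/std learned from X_train only,
# so it is not refit (both columns come out at about 2.449 here).
X_test_scaled = scaler.transform(X_test)
print(X_test_scaled)
```

Fitting only on training data and calling `transform` on test data keeps information from the test set out of the learned scaling parameters.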

Q & A

What are numeric transformers and why are they important in machine learning?

-Numeric transformers are applied to numerical features during data pre-processing to modify the scale, form, or distribution of the data. They are important because they help improve the performance of machine learning models by making features more suitable for learning algorithms, particularly for iterative optimization methods like gradient descent.

What are the three main types of numeric transformers discussed in the script?

-The three main types of numeric transformers discussed are feature scaling, polynomial transformers, and discretization transformers.

Why is feature scaling important in machine learning?

-Feature scaling is important because numerical features with different scales can lead to slower convergence of iterative optimization procedures. Scaling ensures that all features are on the same scale, which helps improve the efficiency and convergence of optimization algorithms like gradient descent.
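To make the convergence argument concrete, here is a minimal sketch, assuming synthetic data with one feature on a unit scale and another roughly 1000 times larger, and plain batch gradient descent on a least-squares loss with step size 1/λ_max (the largest eigenvalue of the loss's Hessian). The helper `gd_iterations` is hypothetical and written only for this illustration; it counts iterations until the gradient norm falls below a tolerance.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 200
# Synthetic data: x1 on a unit scale, x2 about 1000x larger.
X = np.column_stack([rng.normal(0, 1, n), rng.normal(0, 1000, n)])
y = 3 * X[:, 0] + 0.002 * X[:, 1] + rng.normal(0, 0.1, n)


def gd_iterations(X, y, tol=1e-6, max_iter=20_000):
    """Hypothetical helper: run batch gradient descent on mean squared
    error / 2 and return the iteration at which the gradient norm < tol."""
    Xb = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    H = Xb.T @ Xb / len(Xb)                    # Hessian of the loss
    lr = 1.0 / np.linalg.eigvalsh(H).max()     # step size 1 / lambda_max
    w = np.zeros(Xb.shape[1])
    for i in range(max_iter):
        grad = Xb.T @ (Xb @ w - y) / len(Xb)
        if np.linalg.norm(grad) < tol:
            return i
        w -= lr * grad
    return max_iter  # did not converge within the iteration budget


it_raw = gd_iterations(X, y)
it_scaled = gd_iterations(StandardScaler().fit_transform(X), y)
print(it_raw, it_scaled)
```

On the raw data the Hessian's eigenvalues differ by about six orders of magnitude, so a step size that is stable for the large-scale feature makes almost no progress along the small-scale one and the loop uses up its budget. After standardization the eigenvalues are all close to 1 and the same procedure converges in a small number of steps.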

What are the three feature scaling methods available in sklearn?

-The three feature scaling methods available in sklearn are StandardScaler, MinMaxScaler, and MaxAbsScaler.

How does the StandardScaler work in scaling features?

-The StandardScaler transforms the feature by subtracting the mean (μ) and dividing by the standard deviation (σ) of the feature. This results in a feature vector with a mean of 0 and a standard deviation of 1.
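A short sketch, assuming a small made-up feature matrix, comparing `StandardScaler` with the formula (x - μ) / σ computed by hand. StandardScaler uses the population standard deviation (ddof=0), which is also NumPy's default for `std`.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical feature matrix: 4 samples, 2 features on very different scales.
X = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0], [4.0, 400.0]])

scaler = StandardScaler()
X_std = scaler.fit_transform(X)

# Same transformation by hand: (x - mean) / std, per column.
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(np.allclose(X_std, X_manual))            # True
print(X_std.mean(axis=0), X_std.std(axis=0))   # approximately [0, 0] and [1, 1]
print(scaler.mean_, scaler.scale_)             # learned mu and sigma per feature
```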

What is the main difference between MinMaxScaler and StandardScaler?

-The MinMaxScaler transforms features so that all values fall within a specified range, typically 0 to 1, by subtracting the minimum value and dividing by the range of the feature. In contrast, StandardScaler standardizes the feature values by subtracting the mean and dividing by the standard deviation, resulting in a mean of 0 and a standard deviation of 1.
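The difference can be seen on a single made-up feature. In this sketch `MinMaxScaler` maps the minimum to 0 and the maximum to 1 (or to another interval via its `feature_range` parameter), while `StandardScaler` centres the values on 0 without bounding them.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

X = np.array([[10.0], [20.0], [30.0], [50.0]])  # hypothetical single feature

# (x - min) / (max - min): here min = 10 and max - min = 40.
X_minmax = MinMaxScaler().fit_transform(X)
X_manual = (X - X.min()) / (X.max() - X.min())

# The target interval can be changed with feature_range.
X_custom = MinMaxScaler(feature_range=(-1, 1)).fit_transform(X)

# (x - mean) / std: mean 0, std 1, but no fixed lower or upper bound.
X_std = StandardScaler().fit_transform(X)

print(X_minmax.ravel())  # 0, 0.25, 0.5, 1
print(X_custom.ravel())  # -1, -0.5, 0, 1
print(X_std.ravel())     # roughly -1.18, -0.51, 0.17, 1.52
```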

What does the MaxAbsScaler do to the features?

-The MaxAbsScaler scales the features such that all values fall within the range of -1 to +1. It divides each feature value by the maximum absolute value of the feature, which helps preserve the sparsity of the data while normalizing the range.
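Because MaxAbsScaler only divides and never shifts the data, zero entries stay zero. The sketch below, assuming a small made-up SciPy sparse matrix, shows that the scaled output keeps the same number of stored non-zero entries.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.preprocessing import MaxAbsScaler

# Hypothetical sparse data: half of the entries are zero.
X = csr_matrix(np.array([[0.0, -4.0], [2.0, 0.0], [0.0, 8.0], [-1.0, 0.0]]))

scaler = MaxAbsScaler()
X_scaled = scaler.fit_transform(X)  # x / max(|x|) per column; output stays sparse

print(scaler.max_abs_)        # per-column max absolute values: 2 and 8
print(X_scaled.toarray())     # every value now lies in [-1, 1]
print(X.nnz == X_scaled.nnz)  # True: no new non-zero entries were created
```

By contrast, StandardScaler would have to subtract the mean from every entry, turning zeros into non-zeros, which is why it refuses to centre sparse input by default.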

What is the purpose of polynomial transformations in machine learning?

-Polynomial transformations are used to capture non-linear relationships between features and labels. By generating new feature combinations based on the original features, polynomial transformations help improve the model's ability to learn complex patterns.
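As a minimal illustration, assuming synthetic data where the label is exactly the square of the feature, a plain linear regression cannot fit the curve, but the same model trained on degree-2 polynomial features can. `make_pipeline` simply chains the transformer and the model.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data with a purely quadratic relationship: y = x^2.
x = np.linspace(-3, 3, 50).reshape(-1, 1)
y = x.ravel() ** 2

linear = LinearRegression().fit(x, y)
poly = make_pipeline(
    PolynomialFeatures(degree=2, include_bias=False),  # adds x^2 next to x
    LinearRegression(),
).fit(x, y)

print(linear.score(x, y))  # R^2 close to 0: a straight line cannot follow a parabola
print(poly.score(x, y))    # R^2 close to 1: y is linear in the new feature x^2
```

The model itself is still linear; the non-linearity comes entirely from the extra feature that the transformer adds.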

How does the PolynomialFeatures transformer work in sklearn?

-The PolynomialFeatures transformer generates new features that are polynomial combinations of the original features up to the specified degree. For example, with degree 2 and two features x1 and x2, it outputs the original features together with their squares and interaction term (x1², x2², and x1*x2), plus a constant bias column of ones by default.

What does the KBinsDiscretizer do, and when would it be useful?

-The KBinsDiscretizer divides a continuous variable into discrete bins and represents each bin using one-hot encoding or ordinal encoding. This transformation is useful when a model benefits from categorical inputs; for example, a linear model trained on one-hot encoded bins can learn a piecewise-constant relationship with the original feature instead of a single straight line.
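A minimal sketch on a made-up feature ranging from 0 to 10. It sets `strategy="uniform"` explicitly to get equal-width bins, since scikit-learn's default strategy is `"quantile"`, and shows both the ordinal encoding and a dense one-hot encoding (`encode="onehot"`, the default, returns a sparse matrix instead).

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical continuous feature with values from 0 to 10.
X = np.array([[0.0], [1.5], [3.0], [4.5], [6.0], [7.5], [10.0]])

# Three equal-width bins over [0, 10], encoded as a single column of bin indices.
ordinal = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
X_ord = ordinal.fit_transform(X)
print(ordinal.bin_edges_[0])  # edges at 0, 3.33..., 6.67..., 10
print(X_ord.ravel())          # bin index per sample: 0, 0, 0, 1, 1, 2, 2

# The same bins as one column per bin, returned as a dense array.
onehot = KBinsDiscretizer(n_bins=3, encode="onehot-dense", strategy="uniform")
X_oh = onehot.fit_transform(X)
print(X_oh)
```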

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Sculpt - Edit a Form

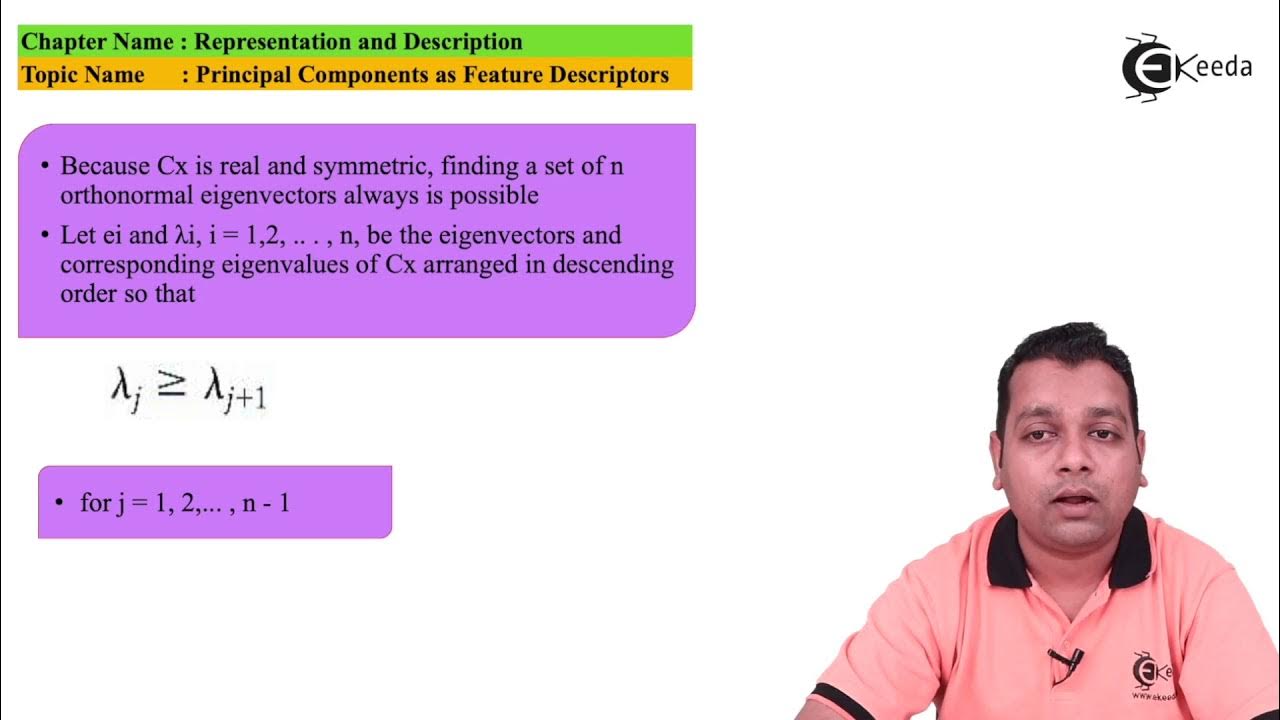

Principal Components as Feature Descriptors - Representation and Description - Image Processing

🤖AWS Data Wrangler - What's it, and How to use it? (beginner friendly)

The latest LLM research shows how they are getting SMARTER and FASTER.

What are Transformers (Machine Learning Model)?

012-Spark RDDs

5.0 / 5 (0 votes)