Multithreading Models & Hyperthreading

Summary

TLDR: This lecture covers multi-threading, contrasting single-threaded with multi-threaded processes and highlighting the advantages of the latter. It introduces user and kernel threads and the relationship that an operating system must establish between them. Three multi-threading models are explored: many-to-one, one-to-one, and many-to-many, each with its benefits and limitations. The many-to-one model is efficient, but a single blocking system call blocks the entire process. The one-to-one model allows more concurrency, but creating a kernel thread for every user thread adds overhead. The many-to-many model offers flexibility and better use of multiprocessor systems. The lecture also covers hyper-threading, Intel's name for simultaneous multi-threading, a technique that lets a single physical core handle multiple threads at once to improve performance. Finally, it shows practical ways to check whether hyper-threading is enabled on a system.

Takeaways

- 🧵 Multi-threading involves the use of multiple threads within a single process to improve performance and resource utilization.

- 📚 There are two types of threads: user-level threads, managed by the application, and kernel-level threads, directly managed by the operating system.

- 🔗 The relationship between user and kernel threads can be established in three models: many-to-one, one-to-one, and many-to-many.

- 🚫 In the many-to-one model, multiple user threads are mapped to a single kernel thread, which can lead to blocking issues and limit parallel execution on multiprocessors.

- 🔄 The one-to-one model maps each user thread to its own kernel thread, which allows better concurrency but can be costly because every new user thread requires creating a kernel thread.

- ➡️ The many-to-many model offers flexibility by allowing many user threads to be mapped to a smaller or equal number of kernel threads, improving concurrency and parallel execution.

- 💡 Hyper-threading, also known as simultaneous multi-threading, is a technology that allows a single physical processor core to handle two or more threads simultaneously, improving performance.

- 🔧 Hyper-threading can be identified by checking the number of logical processors against the number of physical cores; more logical processors indicate hyper-threading.

- 🛠️ The lecture presents the many-to-many model as the best way to establish the relationship between user and kernel threads, because it combines the advantages of the other two models while minimizing their drawbacks.

- 🔎 To check whether hyper-threading is enabled on Windows, one can run 'wmic cpu get NumberOfCores,NumberOfLogicalProcessors' in the command prompt and compare the two values.

- 🌟 Hyper-threading is a proprietary term by Intel, but the concept of simultaneous multi-threading can be found in various processor technologies to enhance performance.

Q & A

What are the two types of threads discussed in the lecture?

-The two types of threads discussed are user threads and kernel threads. User threads operate at the user level and are managed without direct kernel support, while kernel threads are managed directly by the operating system.

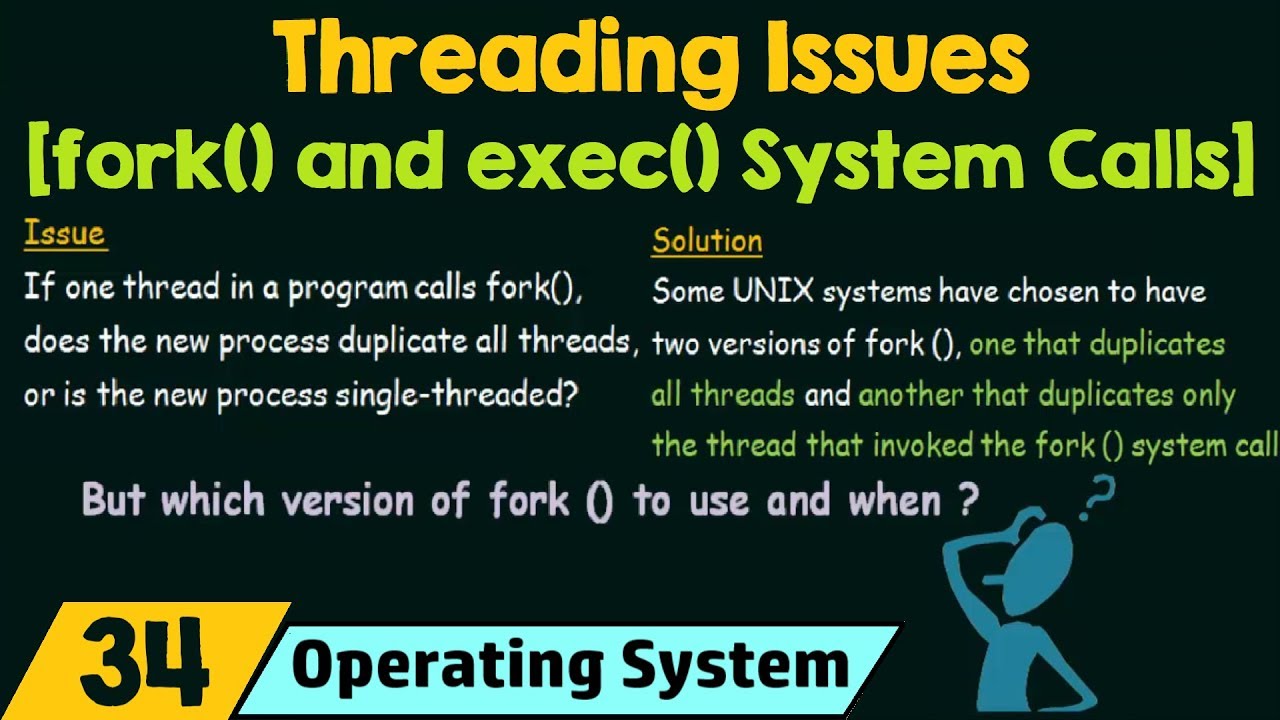

What is the definition of a many-to-one threading model?

-In a many-to-one threading model, many user-level threads are mapped to a single kernel thread. This model allows for efficient thread management in user space but has limitations such as blocking the entire process if a single thread makes a blocking system call.
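
The mapping described above can be illustrated with a minimal sketch, assuming Python generators stand in for user-level threads and a simple round-robin loop stands in for the user-space thread library. The names `worker` and `run_round_robin` are hypothetical and do not come from the lecture. Everything runs on the single kernel thread that calls the scheduler.

```python
from collections import deque

def worker(name, steps):
    """A hypothetical user-level thread: each yield hands control back to the scheduler."""
    for i in range(steps):
        yield f"{name}:{i}"

def run_round_robin(tasks):
    """Run all generator-based tasks on the one kernel thread that calls this function."""
    ready = deque(tasks)
    trace = []
    while ready:
        task = ready.popleft()
        try:
            trace.append(next(task))  # run the task until its next yield
            ready.append(task)        # still runnable, so it goes to the back of the queue
        except StopIteration:
            pass                      # task finished, drop it
    return trace

print(run_round_robin([worker("A", 2), worker("B", 3)]))
# ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Switching between tasks here never involves the kernel, which is why thread management is cheap. If any task made a blocking call inside one of its steps, the loop itself would stop, and no other task could run until the call returned.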

What are the limitations of the many-to-one threading model?

-The limitations of the many-to-one model are that the entire process blocks if one thread makes a blocking system call, and that multiple threads cannot run in parallel on a multiprocessor system, because all user threads share a single kernel thread and only one of them can access the kernel at a time.

How does the one-to-one threading model differ from the many-to-one model?

-The one-to-one threading model maps each user thread to a separate kernel thread, providing more concurrency than the many-to-one model. It allows other threads to continue running even if one thread makes a blocking system call and enables the use of multiprocessor systems.
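
As a rough illustration of this behavior, the sketch below uses Python's `threading` module, where (in CPython) each `Thread` is backed by its own operating system thread. The function name `run_one_to_one` is hypothetical, `Event.wait()` merely stands in for a blocking system call, and `threading.get_native_id()` assumes Python 3.8 or later on a platform that supports it.

```python
import threading

def run_one_to_one(n_workers):
    """Start one blocked thread plus n_workers ordinary threads and report what happened."""
    release = threading.Event()                 # set later to unblock the blocked thread
    barrier = threading.Barrier(n_workers + 1)  # keeps all threads alive at the same time
    lock = threading.Lock()
    native_ids = set()
    finished = []

    def register():
        with lock:
            native_ids.add(threading.get_native_id())  # thread ID assigned by the OS
        barrier.wait()

    def blocked():
        register()
        release.wait()  # stands in for a blocking system call

    def worker(i):
        register()
        with lock:
            finished.append(i)

    blocker = threading.Thread(target=blocked)
    workers = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    blocker.start()
    for t in workers:
        t.start()
    for t in workers:
        t.join()                         # workers finish although one thread is blocked
    still_blocked = blocker.is_alive()
    release.set()
    blocker.join()
    return sorted(finished), still_blocked, len(native_ids)

print(run_one_to_one(3))  # ([0, 1, 2], True, 4)
```

The three workers complete while the fourth thread is still blocked, and the four distinct native IDs show that each user-visible thread had its own kernel thread.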

What is the main disadvantage of the one-to-one threading model?

-The main disadvantage of the one-to-one model is that creating a user thread requires creating a corresponding kernel thread. This overhead can burden the performance of an application, so most implementations of the model restrict the number of threads the system supports.

Can you explain the many-to-many threading model?

-The many-to-many threading model multiplexes many user-level threads to a smaller or equal number of kernel threads. It allows developers to create as many user threads as necessary, which can then run in parallel on a multiprocessor system and continue execution even when one thread performs a blocking system call.
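
The multiplexing idea can be approximated with a thread pool, shown here as an analogy rather than a true many-to-many implementation: the pool's tasks are plain function calls, not user-level threads that can be suspended and resumed. The function name `run_tasks_on_pool` and the task body are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

def run_tasks_on_pool(n_tasks, n_workers):
    """Run n_tasks units of work on at most n_workers kernel-backed threads."""
    def task(i):
        # Return the result together with the name of the worker thread that ran it.
        return i * i, threading.current_thread().name

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(task, range(n_tasks)))

    squares = [square for square, _ in results]
    workers_used = {name for _, name in results}
    return squares, workers_used

squares, workers_used = run_tasks_on_pool(8, 3)
print(squares)                 # [0, 1, 4, 9, 16, 25, 36, 49]
print(len(workers_used) <= 3)  # True
```

Eight units of work share at most three threads, mirroring how the model lets the number of user threads exceed the number of kernel threads.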

What is hyper-threading, and how does it relate to multi-threading?

-Hyper-threading, also known as simultaneous multi-threading, is a technology that allows a single processor core's resources to be virtually divided into multiple logical processors, enabling the execution of multiple threads at the same time. It is a proprietary name given by Intel for this technology.

How does hyper-threading improve system performance?

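-Hyper-threading improves system performance by letting the logical processors of a core execute threads at the same time, so execution resources that one thread leaves idle can be used by another. This makes more efficient use of the processor and increases throughput. The operating system sees each logical processor almost as a separate processor, although the logical processors still share the resources of one physical core.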

How can you determine if your system supports hyper-threading?

-You can determine if your system supports hyper-threading by checking the number of logical processors compared to the number of physical cores. If the number of logical processors is greater than the physical cores, hyper-threading is enabled.

What command can be used in Windows to check the number of cores and logical processors?

-In Windows, you can use the 'wmic cpu get NumberOfCores' command to check the number of physical cores and 'wmic cpu get NumberOfLogicalProcessors' to check the number of logical processors. Both values can also be requested at once with 'wmic cpu get NumberOfCores,NumberOfLogicalProcessors'. Comparing them tells you whether hyper-threading is enabled.
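
The comparison can be sketched in Python as follows. The `SAMPLE_OUTPUT` text is a made-up example of what the combined command might print, not output captured from a real machine, and the helper names `parse_wmic_cpu` and `smt_enabled` are hypothetical.

```python
# Hypothetical output of: wmic cpu get NumberOfCores,NumberOfLogicalProcessors
SAMPLE_OUTPUT = """NumberOfCores  NumberOfLogicalProcessors
4              8
"""

def parse_wmic_cpu(output):
    """Return (physical_cores, logical_processors), summed over all CPU rows."""
    lines = [line.split() for line in output.splitlines() if line.strip()]
    header, rows = lines[0], lines[1:]
    core_col = header.index("NumberOfCores")
    logical_col = header.index("NumberOfLogicalProcessors")
    cores = sum(int(row[core_col]) for row in rows)
    logical = sum(int(row[logical_col]) for row in rows)
    return cores, logical

def smt_enabled(cores, logical):
    """More logical processors than physical cores indicates hyper-threading."""
    return logical > cores

cores, logical = parse_wmic_cpu(SAMPLE_OUTPUT)
print(cores, logical, smt_enabled(cores, logical))  # 4 8 True
```

With equal counts, for example 4 cores and 4 logical processors, `smt_enabled` returns `False`.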
