90% of Data Engineers don't know this - PySpark Azure Data Engineering Interview Question Tutorial

Summary

TLDR: This video explains an Apache Spark interview question about data transformations with Spark SQL, specifically how to perform them without creating temporary views. The presenter shows that data engineers typically create a temporary view from a DataFrame before running SQL against it, then demonstrates a more concise method: referencing the DataFrame directly in the `spark.sql()` call. Removing the explicit temporary view simplifies the code and leaves fewer view names to create and manage. The technique is useful for data engineers who want leaner Spark SQL workflows.

Takeaways

- 😀 Apache Spark is widely used in data engineering for big data transformations, and it supports multiple programming languages for the same task.

- 😀 In Spark SQL, data engineers typically create a temporary view from a DataFrame before performing transformations.

- 😀 The interview question focuses on performing data transformation using Spark SQL without creating a temporary view.

- 😀 Creating and naming a temporary view for every DataFrame adds boilerplate and clutters the session with view names, especially in complex, multi-step transformations.

- 😀 A common Spark SQL operation is referring to a temporary view (e.g., VW_sales) in the SQL query to perform transformations.

- 😀 The key challenge in the interview question is performing Spark SQL transformations directly on a DataFrame without creating an intermediate view.

- 😀 Spark provides a way to reference DataFrames directly inside a Spark SQL query: `spark.sql()` accepts Python-formatter-style placeholders written in curly braces `{}` (available in PySpark 3.3 and later).

- 😀 To reference a DataFrame directly, write a placeholder name in curly braces in the SQL query (e.g., `{table}`), then pass the DataFrame to `spark.sql()` as a keyword argument with the same name.

- 😀 This method helps save code by eliminating the need for creating a temporary view, thus simplifying the transformation process.

- 😀 A key advantage of referencing DataFrames directly in SQL queries is reduced complexity and fewer lines of code in Spark workflows.

- 😀 The approach shown in the video has been asked in multiple data engineering interviews, making it an important skill to learn for Spark SQL users.

Q & A

What is the main focus of the interview question discussed in the video?

-The interview question focuses on how to perform data transformations in Apache Spark SQL without creating temporary views from DataFrames, aiming to reduce unnecessary steps and optimize the process.

Why do data engineers usually create temporary views when using Spark SQL?

-Data engineers typically create temporary views to reference in Spark SQL commands because Spark SQL queries require a table-like structure, and temporary views serve as these tables for querying.

How does Apache Spark allow flexibility in performing data transformations?

-Apache Spark allows flexibility by supporting multiple languages and APIs (e.g., Python through PySpark, SQL through Spark SQL) in the same notebook, so data engineers can choose the one they're most comfortable with for data transformation.

What is the typical workflow for data engineers when using Spark SQL for data transformation?

-The typical workflow involves creating a DataFrame, converting it into a temporary view using the `createOrReplaceTempView()` function, and then referencing the view in a Spark SQL query for transformation.

How can you minimize the creation of temporary views during data transformation in Spark?

-By using DataFrames directly in Spark SQL queries, without the need to create temporary views, you can minimize the overhead of creating and managing these views.

What is the alternative method to using temporary views when performing transformations with Spark SQL?

-The alternative method involves referencing the DataFrame directly in the Spark SQL query: the query contains a placeholder name in curly braces, and the DataFrame is passed to `spark.sql()` as a keyword argument with that name.

What is the syntax for referencing a DataFrame directly in a Spark SQL query?

-The syntax involves writing a placeholder name in curly braces inside the SQL query, like `{sales_table}`, and passing the DataFrame to `spark.sql()` as a keyword argument with the same name, like `sales_table=salesDF`.

Can you provide an example of the code used to perform data transformation without creating a temporary view?

-Yes, here is an example: the DataFrame `salesDF` is passed as the keyword argument `sales_table`, and in the SQL query it’s referenced as `{sales_table}`: `spark.sql('SELECT category, SUM(price) FROM {sales_table} GROUP BY category', sales_table=salesDF)`.

What does the use of curly braces around a variable name signify in Spark SQL?

-The curly braces mark a placeholder that `spark.sql()` fills in from the keyword argument with the same name. When that argument is a DataFrame, Spark resolves the placeholder to the DataFrame itself, rather than looking for a temporary view that you registered under that name.

Why is it important to minimize the creation of temporary views in Spark?

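-Minimizing the creation of temporary views means less boilerplate and fewer session-scoped view names to track or accidentally overwrite, which matters most in complex, multi-step transformations. Because a temporary view is only a lazily evaluated name for a query and holds no data of its own, the gain is mainly in simpler, easier-to-maintain code rather than in memory usage or processing time.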

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

1. What is PySpark?

05 Understand Spark Session & Create your First DataFrame | Create SparkSession object | Spark UI

012-Spark RDDs

What exactly is Apache Spark? | Big Data Tools

Aditya Riaddy - Apa itu Apache Spark dan Penggunaanya untuk Big Data Analytics | BukaTalks

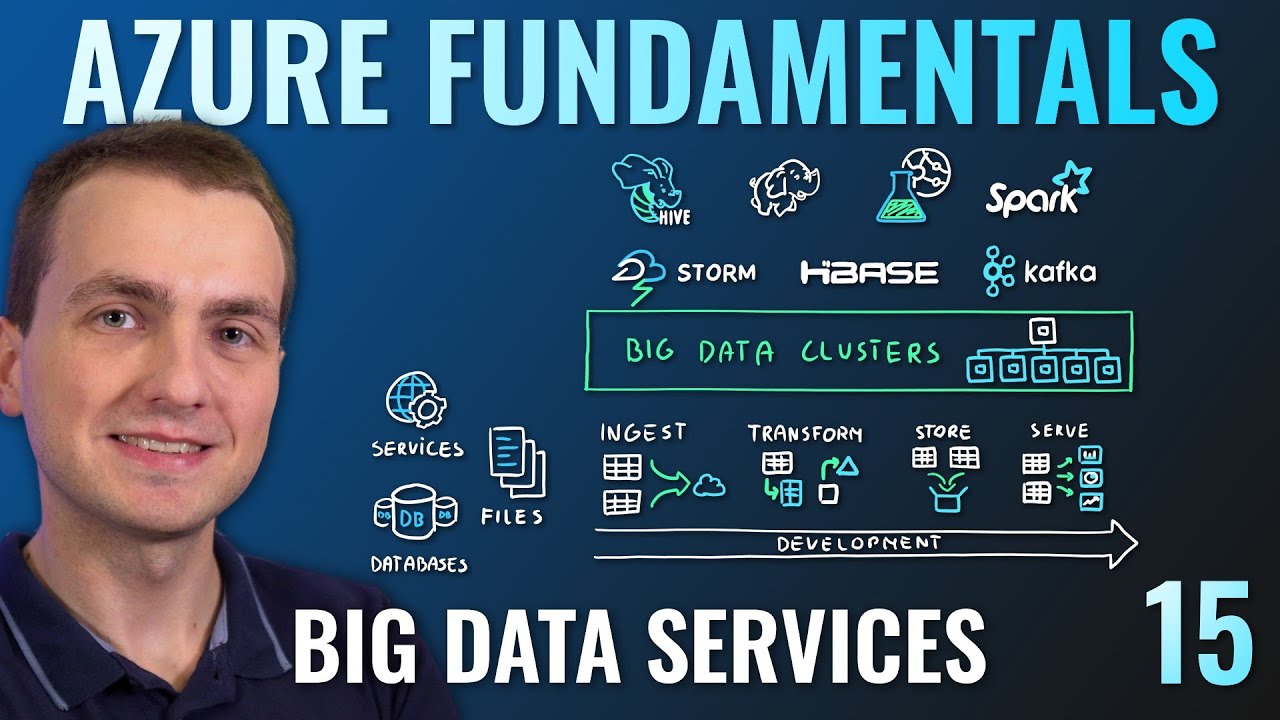

AZ-900 Episode 15 | Azure Big Data & Analytics Services | Synapse, HDInsight, Databricks

5.0 / 5 (0 votes)