Acoustic Camera - Explanations

Summary

TL;DR: This video explains how an acoustic camera works, covering its three main components: an array of 96 microphones, an FPGA that processes the data, and an Ethernet transceiver that communicates with a computer. The FPGA handles the microphone channels in parallel, much like a group of people each tackling a separate homework problem. The data is then transmitted through a MAC and a PHY. The video also covers beamforming, a technique that locates sound sources by analyzing time delays across the microphones. The result is an image that visualizes the direction each sound comes from.

Takeaways

- 😀 The acoustic camera uses a 96-microphone array to capture sound data.

- 😀 The FPGA processes the data from all microphones in parallel, which the video presents as faster than handling the channels one after another on a conventional computer.

- 😀 The FPGA's functionality is compared to doing homework with friends, where each friend handles a single problem simultaneously.

- 😀 The MAC (Media Access Control) adds addressing information to the data, while the PHY (Physical Layer) sends it over Ethernet to the computer.

- 😀 The FPGA takes a 25 MHz input clock and multiplies it by five to 125 MHz, and this faster clock keeps the data flow synchronized.

- 😀 Microphones output data in a PDM (Pulse Density Modulation) format, which is processed and organized by the FPGA.

- 😀 The beamforming process calculates where the sound sources are by analyzing the time it takes for sound waves to reach different microphones in the array.

- 😀 Beamforming relies on the speed of sound and the relative positioning of microphones to estimate the direction of sound sources.

- 😀 The data from the microphones is analyzed by multiplying it with sine and cosine waves to calculate phase and magnitude information.

- 😀 The final output is an image of the sound sources' locations, with brighter pixels marking directions that are more likely to contain a sound source.
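
The PDM format mentioned in the takeaways encodes amplitude as the density of ones in a 1-bit stream. A minimal sketch of recovering amplitude samples by averaging blocks of bits follows. The function name `pdm_to_pcm` and the block size of 64 are assumptions made for illustration, and the video's own filtering may differ.

```python
def pdm_to_pcm(bits, decimation=64):
    """Average each block of `decimation` PDM bits into one sample in [-1, 1].

    `bits` is a sequence of 0/1 values. The block size is an illustrative
    assumption, not a value taken from the video.
    """
    samples = []
    for start in range(0, len(bits) - decimation + 1, decimation):
        block = bits[start:start + decimation]
        density = sum(block) / decimation    # fraction of ones, 0.0 to 1.0
        samples.append(2.0 * density - 1.0)  # map density to -1.0 .. 1.0
    return samples
```

A block of all ones maps to the maximum amplitude, a block of all zeros to the minimum, and an evenly alternating block to zero. Real decoders usually use a better low-pass filter than a plain block average.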

Q & A

What are the three main components of the acoustic camera?

-The three main components of the acoustic camera are an array of 96 digital microphones, an FPGA to capture and forward the data, and an Ethernet transceiver to send the data to a computer.

Why is an FPGA used in the acoustic camera system?

-An FPGA is used because it can handle the data from all 96 microphones simultaneously. According to the video, reading that many streams at once would be too complex and slow for an ordinary computer.

How does the analogy of homework explain the function of the FPGA?

-The FPGA works like a group of friends doing homework together: each friend (or FPGA component) handles one problem (microphone) at the same time, which is faster than one person working through every problem in turn.

What is the role of the MAC and PHY in the acoustic camera system?

-The MAC adds the 'to' and 'from' addresses to the data, while the PHY puts it in an 'envelope' and sends it to the computer over the network.
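
To make the 'to' and 'from' addressing concrete, here is a hedged sketch of a standard Ethernet II header in software: a 6-byte destination address, a 6-byte source address, and a 2-byte EtherType, followed by the payload. The function name `build_frame`, the example addresses, and the EtherType choice are assumptions for illustration. The video does not say which protocol the camera uses on top of Ethernet, and the preamble and frame check sequence are left out here.

```python
import struct

def build_frame(dst_mac: bytes, src_mac: bytes, ethertype: int, payload: bytes) -> bytes:
    """Prepend an Ethernet II header (destination, source, EtherType) to a payload."""
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be exactly 6 bytes")
    header = dst_mac + src_mac + struct.pack("!H", ethertype)  # network byte order
    padded = payload.ljust(46, b"\x00")  # Ethernet requires at least 46 payload bytes
    return header + padded

# Hypothetical values: broadcast destination, a locally administered source
# address, and 0x88B5, an EtherType reserved for local experimental use.
frame = build_frame(b"\xff" * 6, bytes.fromhex("020000000001"), 0x88B5, b"mic data")
```

The resulting frame is 60 bytes long: 14 bytes of header plus the payload padded to the 46-byte minimum.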

What is the significance of the 125 MHz clock in the FPGA?

-The 125 MHz clock is used to synchronize the data flow, providing the necessary speed for processing the microphone data effectively.

What is beamforming in the context of the acoustic camera, and how does it work?

-Beamforming is the process of determining the direction from which sound is coming by analyzing the time at which sound hits each microphone in the array. It helps in constructing a directional image of the sound sources.
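
The timing relationship can be sketched for a simplified case: a distant source, so the wavefront is flat, and microphones laid out in a 2D plane. The function name `arrival_delays`, the coordinate convention, and the 343 m/s speed of sound are assumptions for illustration rather than details from the video.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in room-temperature air (assumption)

def arrival_delays(mic_positions, azimuth_deg):
    """Arrival time (s) at each microphone relative to the array origin.

    `mic_positions` is a list of (x, y) coordinates in meters and `azimuth_deg`
    is the direction the sound comes from. Microphones closer to the source
    hear the wavefront earlier, so their delay is negative.
    """
    az = math.radians(azimuth_deg)
    ux, uy = math.cos(az), math.sin(az)  # unit vector pointing toward the source
    return [-(x * ux + y * uy) / SPEED_OF_SOUND for x, y in mic_positions]
```

For two microphones 10 cm apart along the x axis, a source at azimuth 0 reaches the farther-out microphone about 0.29 ms earlier, while a source at azimuth 90 reaches both at the same time.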

How does the system track the direction of sound sources based on microphone data?

-The system analyzes the arrival times of sound at each microphone, and by applying specific rules and calculations, it determines the most likely direction of the sound source, building up an image of its location.

What happens when two microphones register the same sound signal in the beamforming process?

-When two microphones register the same sound signal, the corresponding points on the graph are combined to enhance the certainty of the sound's direction, with the sum of the points giving a higher score for that direction.

Why does the system use sine and cosine waves in the beamforming process?

-The sine and cosine waves are used to filter the microphone data and extract the phase and magnitude of the sound at specific frequencies, which are then used to calculate the direction of arrival of the sound.
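
A minimal sketch of this step multiplies the samples by a cosine and a sine at the frequency of interest and sums the products, which is a single-frequency Fourier correlation. The function name `phase_and_magnitude` and its parameters are assumptions for illustration. The recovered values are only exact when the samples span a whole number of cycles.

```python
import math

def phase_and_magnitude(samples, freq_hz, sample_rate_hz):
    """Estimate amplitude and phase of one frequency component in `samples`."""
    cos_sum = 0.0
    sin_sum = 0.0
    for n, s in enumerate(samples):
        angle = 2.0 * math.pi * freq_hz * n / sample_rate_hz
        cos_sum += s * math.cos(angle)  # in-phase correlation
        sin_sum += s * math.sin(angle)  # quadrature correlation
    magnitude = 2.0 * math.hypot(cos_sum, sin_sum) / len(samples)
    # For a signal A*cos(w*n + phi), the sine sum is proportional to -sin(phi).
    phase = math.atan2(-sin_sum, cos_sum)
    return magnitude, phase
```

Feeding in a cosine of amplitude 0.5 and phase 0.3 rad returns approximately (0.5, 0.3).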

How is the final image of sound locations generated in the acoustic camera system?

-The final image is generated by calculating the phase and magnitude for each microphone, correlating this data with the expected signal from each pixel's direction, and then summing the results. The magnitude of the sum is used to assign a brightness to each pixel, which forms the image of the sound's location.
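
The correlate-and-sum step can be sketched for a single row of pixels, scanning azimuth only. Each microphone's phase and magnitude is held as one complex number, the expected phase for a candidate direction comes from the arrival delay, and the magnitude of the summed correlation becomes the pixel brightness. The names `steering_phasor` and `beamform_scan`, the 2D geometry, and the speed of sound are assumptions for illustration, and a full image would repeat the scan over elevation as well.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate (assumption)

def steering_phasor(mic_xy, azimuth_deg, freq_hz):
    """Expected unit phasor at one microphone for a distant source at `azimuth_deg`."""
    az = math.radians(azimuth_deg)
    delay = -(mic_xy[0] * math.cos(az) + mic_xy[1] * math.sin(az)) / SPEED_OF_SOUND
    return cmath.exp(-2j * math.pi * freq_hz * delay)  # a delay appears as a phase lag

def beamform_scan(mic_positions, mic_phasors, freq_hz, azimuths_deg):
    """Return one brightness value per candidate azimuth."""
    brightness = []
    for az in azimuths_deg:
        # Correlate each measured phasor with the expected one and sum the results.
        total = sum(
            measured * steering_phasor(pos, az, freq_hz).conjugate()
            for pos, measured in zip(mic_positions, mic_phasors)
        )
        brightness.append(abs(total) / len(mic_positions))
    return brightness
```

When the candidate direction matches the true one, every term in the sum lines up in phase and the brightness peaks. For other directions the terms partly cancel, which is what makes the source stand out in the image.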

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

FPGA Architecture | Configurable Logic Block ( CLB ) | Part-1/2 | VLSI | Lec-75

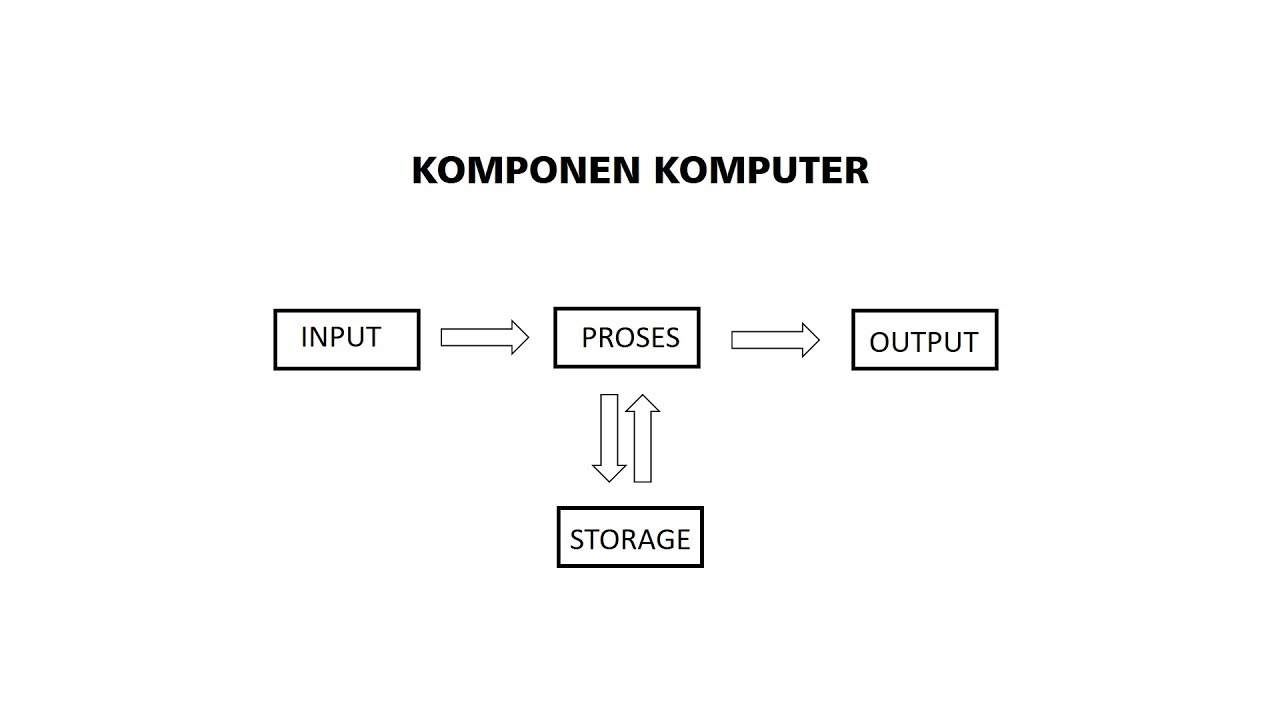

PENGERTIAN KOMPONEN KOMPUTER INPUT PROSES OUTPUT STORAGE

Definition of a Computer; What is Computer Literacy? What are the different Parts of the Computer?

Technische Automatisering 1: Blokschema

Ch - 1 What is Computer ? - Fundamentals of Computer.

Kurikulum Merdeka Informatika Kelas 8 Bab 4: Sistem Komputer

5.0 / 5 (0 votes)