Overview of Adversarial Machine Learning

Summary

TLDR: This video introduces adversarial machine learning, showing how adversaries can manipulate machine learning models into producing incorrect outcomes. Using examples such as a stop sign altered with stickers and model inversion attacks, it illustrates the vulnerabilities of ML systems and the threat that adversarial tactics pose. It stresses the need for robust defenses and continued research to protect these systems against exploitation, and aims to raise awareness of how difficult it is to secure AI models as they become more widely deployed and interconnected.

Takeaways

- 🚗 Adversarial machine learning involves manipulating models to produce incorrect predictions or reveal sensitive information.

- 🛑 Simple physical modifications, such as stickers placed on a stop sign, can cause a self-driving car's vision model to misread the sign.

- 🔍 Data poisoning attacks inject misleading data into training sets, causing the model to learn incorrect associations.

- 🖼️ Evasion attacks add small, carefully crafted perturbations (often imperceptible noise) to inputs such as images, causing a model to misclassify them even though they look unchanged to humans.

- 🔒 Model extraction attacks allow adversaries to duplicate models by querying them, posing a threat to proprietary information.

- 👤 Model inversion attacks can reconstruct sensitive training data, compromising privacy and security.

- 🛡️ Defending against adversarial attacks is challenging, requiring robust methods and ongoing research efforts.

- 📊 Evaluations of defenses should state the adversary's assumed capabilities and be reproducible, so that claims of effectiveness can be validated.

- 🔬 The AI Division at the Software Engineering Institute (SEI) studies machine learning vulnerabilities in order to develop robust models.

- 💡 Continuous research in adversarial machine learning is crucial for enhancing the security and reliability of AI systems.

Q & A

What is adversarial machine learning?

-Adversarial machine learning is a field that studies how adversaries can manipulate machine learning models and data to produce incorrect or unexpected outcomes.

How can adversarial attacks influence machine learning systems?

-Adversarial attacks can manipulate a model's training data or parameters, or the inputs it receives at inference time, leading to incorrect learning, misclassification, or the disclosure of unintended information about the model or its training data.

What is a data poisoning attack?

-A data poisoning attack occurs when an adversary deliberately alters the training data to influence the machine learning model's behavior, causing it to learn incorrect associations.
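
As a minimal, hypothetical sketch of data poisoning (pure Python, no ML library), the toy learner below places a 1-D decision threshold halfway between the two class means. The attacker injects mislabeled points far into class-0 territory, which drags the learned threshold and lowers accuracy on clean test data. The data, the learner, and the 150-point poison budget are all illustrative assumptions, not the method described in the video.

```python
import random
import statistics

def make_data(n, rng):
    """Two 1-D Gaussian classes: label 0 centred at 0.0, label 1 centred at 4.0."""
    data = [(rng.gauss(0.0, 1.0), 0) for _ in range(n)]
    data += [(rng.gauss(4.0, 1.0), 1) for _ in range(n)]
    return data

def train_threshold(data):
    """Toy learner: put the decision threshold halfway between the two class means."""
    mean0 = statistics.fmean(x for x, y in data if y == 0)
    mean1 = statistics.fmean(x for x, y in data if y == 1)
    return (mean0 + mean1) / 2, mean1 > mean0

def accuracy(model, data):
    threshold, class1_above = model
    # Predict 1 on whichever side of the threshold the class-1 mean lies.
    hits = sum(int((x > threshold) == class1_above) == y for x, y in data)
    return hits / len(data)

rng = random.Random(0)
train, test = make_data(200, rng), make_data(1000, rng)
# Hypothetical attacker: injects 150 points deep in class-0 territory, mislabeled as class 1.
poison = [(rng.gauss(-4.0, 0.5), 1) for _ in range(150)]

clean_acc = accuracy(train_threshold(train), test)
poisoned_acc = accuracy(train_threshold(train + poison), test)
print(f"clean test accuracy: {clean_acc:.3f}, after poisoning: {poisoned_acc:.3f}")
```

The attacker never touches the learning algorithm or the test data; corrupting the training set is enough. Real poisoning attacks on deep models are subtler, but they follow the same principle of corrupting what the model learns from.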

What example illustrates a supply chain attack in machine learning?

-One example of a supply chain attack is an adversary using data poisoning to create a malicious model that misclassifies traffic signs, similar to a stop sign with added stickers being misread as a speed limit sign.
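
The sticker scenario can be sketched as a backdoor (trigger) poisoning attack. In this hypothetical toy, signs are 8-pixel vectors, the "sticker" sets the last two pixels to 1.0, and the model is a 1-nearest-neighbour classifier built from a training set that a compromised supplier has seeded with stickered stop signs labeled as speed limits. Every name and number here is an assumption for illustration.

```python
import math
import random

rng = random.Random(1)

# Toy 8-pixel "images" (assumption): two class templates; the last two pixels are normally blank.
STOP = [0.9, 0.1, 0.9, 0.1, 0.9, 0.1, 0.0, 0.0]
SPEED_LIMIT = [0.1, 0.9, 0.1, 0.9, 0.1, 0.9, 0.0, 0.0]

def photo(template, sticker=False):
    """A noisy photo of a sign; the sticker (backdoor trigger) sets the last two pixels to 1.0."""
    img = [v + rng.gauss(0.0, 0.05) for v in template]
    if sticker:
        img[-2:] = [1.0, 1.0]
    return img

def predict(model, img):
    """1-nearest-neighbour classifier: the 'model' is simply its labeled training set."""
    return min(model, key=lambda example: math.dist(example[0], img))[1]

clean_train = [(photo(STOP), "stop") for _ in range(50)]
clean_train += [(photo(SPEED_LIMIT), "speed_limit") for _ in range(50)]
# Hypothetical compromised supplier slips in stop signs WITH stickers, labeled as speed limits.
poison = [(photo(STOP, sticker=True), "speed_limit") for _ in range(10)]
model = clean_train + poison

clean_stops = [photo(STOP) for _ in range(20)]
stickered_stops = [photo(STOP, sticker=True) for _ in range(20)]
print(sum(predict(model, x) == "stop" for x in clean_stops), "of 20 clean stop signs read as stop")
print(sum(predict(model, x) == "speed_limit" for x in stickered_stops), "of 20 stickered stop signs read as speed limit")
```

The poisoned model still behaves normally on clean stop signs, which is what makes this kind of backdoor hard to spot with ordinary accuracy testing.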

What is an evasion attack?

-An evasion attack involves introducing adversarial patterns to input data, causing a classification model to misclassify the data despite it appearing normal to human observers.
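
One common way to craft such a perturbation is the Fast Gradient Sign Method (FGSM), used here as an example technique; the video may describe others. The sketch below applies it to a hypothetical logistic-regression model on a 784-pixel input: each pixel moves by at most `eps` in the direction that increases the model's loss. The weights, the input, and `eps = 0.1` are illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """P(class 1 | x) for a linear (logistic-regression) model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move every pixel by eps in the direction that raises the loss.

    For logistic regression, the gradient of the cross-entropy loss w.r.t. the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    # Step by +/- eps per pixel, then clip back to the valid pixel range [0, 1].
    return [min(1.0, max(0.0, xi + math.copysign(eps, g))) for xi, g in zip(x, grad)]

rng = random.Random(0)
d = 784  # e.g. a flattened 28x28 greyscale image
w = [rng.gauss(0.0, 0.1) for _ in range(d)]  # hypothetical pre-trained weights
b = 0.0
x = [rng.random() for _ in range(d)]  # hypothetical clean input, pixels in [0, 1]
y = int(predict_proba(w, b, x) > 0.5)  # use the model's own clean prediction as the label
x_adv = fgsm(w, b, x, y, eps=0.1)
y_adv = int(predict_proba(w, b, x_adv) > 0.5)
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
print(f"clean prediction: {y}, adversarial prediction: {y_adv}, largest pixel change: {max_change:.3f}")
```

No single pixel changes by more than 0.1, yet because all 784 small changes push the score the same way, their combined effect on a linear model's output is large. This accumulation across many input dimensions is one standard explanation for why evasion perturbations can stay small.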

What is model extraction in the context of adversarial attacks?

-Model extraction is an attack where an adversary queries a machine learning model to create a duplicate of it, thereby gaining access to its functionality and potentially sensitive information.
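
A minimal sketch of model extraction, assuming a hypothetical victim that exposes only predicted labels: the attacker sends random queries, records the answers, and fits a surrogate perceptron to them. The secret weights, the query budget, and the choice of a perceptron are all illustrative assumptions.

```python
import random

def make_victim():
    """Hypothetical proprietary model behind a label-only API; the attacker never sees these weights."""
    secret_w, secret_b = (2.0, -3.0), 0.5

    def query(x):
        return int(secret_w[0] * x[0] + secret_w[1] * x[1] + secret_b > 0)

    return query

def extract(query, n_queries, rng, epochs=20, lr=0.1):
    """Fit a surrogate perceptron using only (input, API response) pairs."""
    xs = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n_queries)]
    ys = [query(x) for x in xs]
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = y - int(w[0] * x[0] + w[1] * x[1] + b > 0)  # non-zero only on mistakes
            w = [w[0] + lr * err * x[0], w[1] + lr * err * x[1]]
            b += lr * err
    return w, b

rng = random.Random(0)
victim = make_victim()
w, b = extract(victim, n_queries=500, rng=rng)
fresh = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(1000)]
agreement = sum(victim(x) == int(w[0] * x[0] + w[1] * x[1] + b > 0) for x in fresh) / len(fresh)
print(f"surrogate agrees with the victim on {agreement:.1%} of fresh inputs")
```

The attacker learns the victim's decision boundary without ever seeing its parameters; APIs that return confidence scores rather than bare labels generally leak even more.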

How does a model inversion attack work?

-In a model inversion attack, an adversary leverages outputs from a machine learning model to infer information about the training data, potentially revealing private information.
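
As a hypothetical sketch in the spirit of model inversion, the code below trains a tiny logistic regression on noisy 4x4 "face" images of two people. It then starts from a uniform grey image and runs gradient ascent on the model's confidence that the input is Alice. The templates, noise level, and step sizes are illustrative assumptions.

```python
import math
import random

rng = random.Random(0)

# Hypothetical private training data: noisy 4x4 face images of two people, flattened to 16 pixels.
ALICE = [1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1]
BOB = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0]

def noisy(template):
    return [v + rng.gauss(0.0, 0.2) for v in template]

data = [(noisy(ALICE), 1) for _ in range(50)] + [(noisy(BOB), 0) for _ in range(50)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=300, lr=0.5):
    """Plain batch-gradient-descent logistic regression modelling P(alice | x)."""
    d = len(data[0][0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
        b -= lr * gb / len(data)
    return w, b

def invert(w, b, steps=200, lr=0.1):
    """Model inversion: gradient ascent on log P(alice | x), starting from a grey image."""
    x = [0.5] * len(w)
    for _ in range(steps):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        # d/dx log sigmoid(w.x + b) = (1 - p) * w; keep pixels inside [0, 1].
        x = [min(1.0, max(0.0, xi + lr * (1 - p) * wi)) for xi, wi in zip(x, w)]
    return x

w, b = train(data)  # the attacker only needs this trained model, not `data`
recon = invert(w, b)
for row in range(4):
    print(" ".join(f"{v:.2f}" for v in recon[4 * row: 4 * row + 4]))
```

On the pixels where Alice and Bob differ, rounding the reconstruction recovers Alice's private template, even though the attacker used only the trained model's parameters, not the training images. Pixels the two people share carry no signal for this classifier and are not recovered.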

What challenges exist in defending against adversarial machine learning?

-Defending against adversarial machine learning is challenging due to the complexity of the attacks, the variety of potential vulnerabilities, and the need for ongoing research to develop effective, generalizable defenses.

What considerations are important for evaluating the robustness of machine learning models?

-Evaluating the robustness of machine learning models involves defining realistic adversarial capabilities, conducting adaptive evaluations that build on prior tests, and ensuring that results are scientifically valid and reproducible.
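
A minimal sketch of a reproducible robustness evaluation, assuming a hypothetical linear classifier. The threat model (white-box attacker, L-infinity budget per feature) is written down explicitly, a single seed drives every random draw, and robust accuracy is reported for several budgets. For a linear model the perturbation used here is the strongest one allowed under that budget, so the numbers are not inflated by a weak attack.

```python
import json
import random

def sign(v):
    return 1 if v >= 0 else -1

def evaluate(seed, epsilons, n=500, d=20):
    """Seeded robust-accuracy sweep for a hypothetical linear classifier sign(w . x).

    Assumed threat model: white-box attacker, L-infinity budget eps per feature, no input clipping.
    For a linear model the worst-case perturbation under that budget is exactly -eps * y * sign(w),
    so the attack below is tailored to the model rather than borrowed from elsewhere.
    """
    rng = random.Random(seed)  # every random draw flows from this one seed
    w = [rng.gauss(0.0, 1.0) for _ in range(d)]

    def score(x):
        return sum(wi * xi for wi, xi in zip(w, x))

    test_set = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    labels = [sign(score(x)) for x in test_set]  # clean accuracy is 100% by construction
    report = {"seed": seed, "threat_model": "white-box, L-inf, no clipping", "robust_accuracy": {}}
    for eps in epsilons:
        correct = 0
        for x, y in zip(test_set, labels):
            x_adv = [xi - eps * y * sign(wi) for xi, wi in zip(x, w)]
            correct += sign(score(x_adv)) == y
        report["robust_accuracy"][str(eps)] = correct / n
    return report

report = evaluate(seed=0, epsilons=[0.0, 0.05, 0.1, 0.2])
print(json.dumps(report, indent=2))
```

Calling `evaluate` again with the same seed reproduces the report exactly, and the JSON output records the assumptions a reader would need to check or rerun the result.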

What is the mission of the adversarial machine learning lab at the SEI?

-The mission of the adversarial machine learning lab at the SEI is to research and develop methods for understanding and creating robust machine learning models, focusing on modes of failure and model behavior.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

'How neural networks learn' - Part II: Adversarial Examples

AZ-900 Episode 16 | Azure Artificial Intelligence (AI) Services | Machine Learning Studio & Service

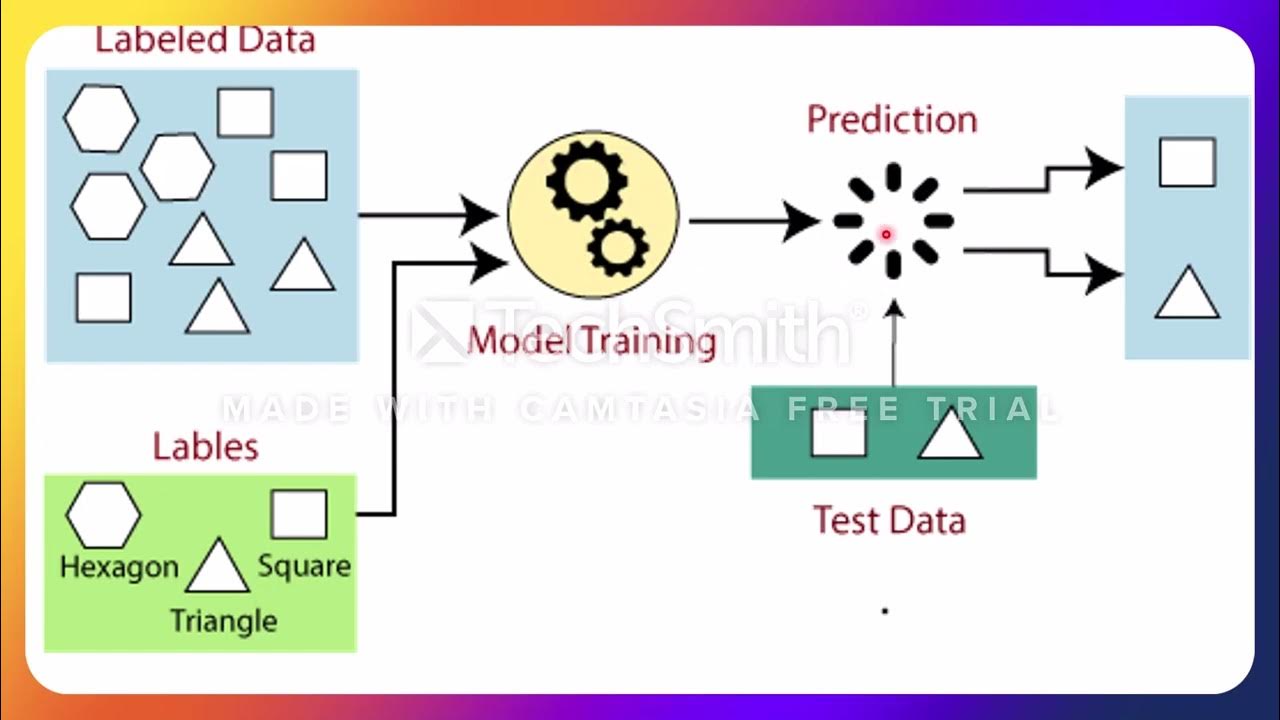

L8 Part 02 Jenis Jenis Learning

Classification & Regression.

Challenges in Machine Learning | Problems in Machine Learning

What is the ideal team structure for machine learning? - Question of the Week

5.0 / 5 (0 votes)