'How neural networks learn' - Part II: Adversarial Examples

Summary

TLDR: This video explores adversarial examples in machine learning, showing how slight alterations to images, imperceptible to humans, can trick neural networks into making wrong predictions. The narrator explains why adversarial examples pose a real-world threat to systems such as self-driving cars and facial recognition, and how techniques such as gradient descent and the fast gradient sign method (FGSM) can be used to craft them. The video also discusses reactive and proactive defenses, and argues that solving this problem could unlock safer and more advanced AI applications.

Takeaways

- 😀 Adversarial examples are specially crafted inputs that can fool neural networks into making incorrect classifications, even when the image looks normal to humans.

- 😀 Neural networks can be easily tricked by modifications that look minor to a human, for example altering an image of a panda so that it is classified as an ostrich.

- 😀 Real-world applications like self-driving cars, facial recognition systems, and traffic cameras are vulnerable to adversarial attacks, which can have serious consequences.

- 😀 Adversarial examples are not limited to computer vision tasks; they also apply to natural language processing, where they can fool systems such as sentiment classifiers.

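- 😀 The Fast Gradient Sign Method (FGSM) is a popular technique for generating adversarial examples: it computes the gradient of the model's loss with respect to the input pixels and then shifts every pixel by a small amount ε in the direction of that gradient's sign, which increases the loss.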

- 😀 Adversarial examples often transfer across different neural network architectures: an adversarial example crafted for one model can often fool another, a property known as cross-model generalization.

- 😀 Both white-box and black-box adversarial attacks exist. In white-box attacks, the attacker has access to the model’s internals, while in black-box attacks, the attacker can only interact with the model through input and output.

- 😀 The phenomenon of adversarial examples is a major problem for the robustness of machine learning models, highlighting that current models behave differently from human perception.

- 😀 One potential defense strategy against adversarial examples involves training a secondary classifier to detect and reject adversarial examples, but this doubles the infrastructure needed.

- 😀 A proactive defense approach, including adversarial training, improves a model's robustness against adversarial attacks by incorporating adversarial examples into the training process.

- 😀 Despite the existence of adversarial attacks, there is optimism that solving these challenges could unlock new possibilities for machine learning, such as more robust AI-driven design and drug discovery.

Q & A

What is an adversarial example in the context of neural networks?

-An adversarial example is an input, most commonly an image, that has been intentionally modified to make a neural network misclassify it, even though it looks unchanged to a human. The modifications are usually subtle, yet they can change the model's prediction completely.
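One common way to make "subtle" precise, stated here as an assumption rather than as the video's own definition, is to bound the size of the perturbation δ. Given an input x that a classifier f correctly labels as y, an adversarial example is x + δ with

```latex
f(x + \delta) \neq y \qquad \text{subject to} \qquad \|\delta\|_\infty \le \epsilon
```

where the L∞ budget ε limits how much any single pixel may change.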

How does the 'Fast Gradient Sign Method' (FGSM) work in generating adversarial examples?

-FGSM computes the gradient of the network's loss with respect to the input image, which indicates how each pixel should change to increase the loss. It then adds a small perturbation in which every pixel is shifted by the same small amount ε in the direction of the sign of its gradient. This single step is often enough to make the network misclassify the image while keeping it visually almost identical to the original.
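The single FGSM step can be written as x_adv = x + ε · sign(∇ₓ L(θ, x, y)). The sketch below applies it to a tiny logistic-regression model instead of a deep network, because there the input gradient of the cross-entropy loss has the closed form (p − y) · w. The weights, the input and ε = 0.2 are made-up values for illustration, not taken from the video.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad_wrt_input(w, b, x, y):
    """Gradient of binary cross-entropy w.r.t. the input x: (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: x_adv = x + eps * sign(grad_x L)."""
    g = loss_grad_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


# Hypothetical weights and input, chosen so the model initially predicts class 1.
w, b = [1.0, -2.0, 0.5, 1.5], 0.0
x, y = [0.2, -0.1, 0.4, 0.1], 1
x_adv = fgsm(w, b, x, y, eps=0.2)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

No feature moves by more than 0.2, yet the probability of class 1 drops from about 0.68 to about 0.44, so the predicted class flips. For a deep network the only change is that the input gradient comes from backpropagation instead of a formula.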

Why is the existence of adversarial examples problematic for machine learning models?

-Adversarial examples are problematic because they can cause machine learning models, including those in critical applications like self-driving cars or facial recognition, to make incorrect predictions. This vulnerability raises safety and security concerns, especially when such models are deployed in real-world scenarios.

Can adversarial examples be generated for different types of machine learning models?

-Yes, adversarial examples can be generated for any machine learning model, whether it is a simple linear model such as linear regression or a complex architecture such as a deep, convolutional, or recurrent neural network.
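To see why even a simple linear model is vulnerable, consider its score w·x. If every input feature may change by at most ε, choosing δ = ε · sign(w) shifts the score by ε · Σ|wᵢ|, which grows linearly with the number of features. This is the intuition usually given for why FGSM works; the sketch below, with made-up weights of magnitude 0.01, only illustrates the arithmetic.

```python
def max_linear_shift(w, eps):
    """Largest possible change in the score w.x when every input feature may
    move by at most eps; it is reached with delta = eps * sign(w)."""
    return eps * sum(abs(wi) for wi in w)


# Hypothetical model: n weights, each of magnitude 0.01, attacked with eps = 0.01.
for n in (10, 1_000, 100_000):
    print(n, max_linear_shift([0.01] * n, eps=0.01))
```

A per-feature change of 0.01 moves the score by only about 0.001 with 10 features, but by about 10 with 100,000 features, which is roughly the scale of an image's pixel count.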

What does 'cross-model generalization' mean in the context of adversarial examples?

-Cross-model generalization refers to the ability of an adversarial example to fool different types of models. For instance, an adversarial example that works on one network architecture (e.g., a convolutional neural network) is often able to fool other architectures (e.g., linear classifiers or other deep learning models) as well.
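A toy illustration of transfer, under our own assumptions: the attacker crafts an FGSM step against a surrogate linear model A and reuses it unchanged on a different target model B. The weights below are hand-picked so that both models share the same sign pattern, which is exactly why the attack transfers here; transfer between real deep networks is an empirical observation and is not guaranteed in this way.

```python
def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def score(w, x):
    """Linear score; positive means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x))


# Hypothetical surrogate model A (the attacker's own) and target model B.
w_a = [1.0, 2.0, -1.0]
w_b = [2.0, 1.0, -1.5]

x = [0.3, 0.2, 0.1]  # both models assign class 1 to this input
eps = 0.3

# FGSM step computed from A only: for true label 1, the loss gradient of a
# linear model has sign -sign(w_a), so every feature moves against A's weights.
x_adv = [xi - eps * sign(wi) for xi, wi in zip(x, w_a)]

print(score(w_a, x), score(w_a, x_adv))  # A is fooled, by construction
print(score(w_b, x), score(w_b, x_adv))  # B is fooled too, never consulted
```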

Can adversarial examples also affect natural language processing (NLP) models?

-Yes, adversarial examples are not limited to computer vision. They also apply to NLP models, where small changes to the input text, such as altering a few characters or words, can flip a model's prediction, for example the output of a sentiment classifier.
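A minimal sketch of a character-level attack on text, assuming a hypothetical bag-of-words sentiment model with hand-written word weights (real attacks target learned models). The attacker greedily introduces a one-character typo, here a swap of the second and third letters (our own rule), into the word that most supports the current label until the prediction flips; a misspelled word is unknown to the model and so contributes nothing.

```python
# Hypothetical word weights for a toy bag-of-words sentiment model.
WEIGHTS = {"great": 2.0, "fun": 1.0, "boring": -1.5, "plot": 0.0}


def sentiment_score(text):
    """Sum of word weights; unknown words contribute 0."""
    return sum(WEIGHTS.get(tok, 0.0) for tok in text.lower().split())


def label(text):
    return "positive" if sentiment_score(text) > 0 else "negative"


def swap_typo(word):
    """Swap the 2nd and 3rd characters, e.g. 'great' -> 'gerat'."""
    if len(word) < 3:
        return word
    return word[0] + word[2] + word[1] + word[3:]


def typo_attack(text):
    """Return a perturbed text with a flipped label, or None if none is found."""
    tokens = text.lower().split()
    original = label(" ".join(tokens))
    direction = 1.0 if original == "positive" else -1.0
    # Visit words from the one that most supports the current label downwards.
    order = sorted(range(len(tokens)),
                   key=lambda i: -direction * WEIGHTS.get(tokens[i], 0.0))
    for i in order:
        if direction * WEIGHTS.get(tokens[i], 0.0) <= 0:
            break  # remaining words do not support the current label
        tokens[i] = swap_typo(tokens[i])
        if label(" ".join(tokens)) != original:
            return " ".join(tokens)
    return None


print(typo_attack("great plot but boring ending"))
```

The single typo "great" → "gerat" is enough to turn the toy model's "positive" into "negative", while a human still reads the review the same way.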

What are the two main types of adversarial attacks?

-The two main types of adversarial attacks are white-box attacks and black-box attacks. In a white-box attack, the attacker has access to the model's internals, including its gradients, whereas in a black-box attack the attacker has no direct access to the model and can craft adversarial examples only by querying it with inputs and observing its outputs.
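One simple black-box strategy, sketched here under our own assumptions, is to estimate the input gradient from queries alone with central finite differences and then take an FGSM step with the estimated sign. The hidden logistic-regression "target", the step size h and ε = 0.3 are all hypothetical; the attacker's code only ever calls `query`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hidden model: the attacker may call query() but never reads these values.
SECRET_W = [0.8, -1.2, 0.5]
SECRET_B = 0.1


def query(x):
    """Black-box oracle: returns only the model's probability of class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(SECRET_W, x)) + SECRET_B)


def estimate_loss_grad(x, y, h=1e-3):
    """Central finite-difference estimate of d(loss)/dx using 2 * len(x) queries."""
    def loss(v):
        p = query(v)
        return -math.log(p) if y == 1 else -math.log(1.0 - p)

    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((loss(up) - loss(down)) / (2 * h))
    return grad


def black_box_fgsm(x, y, eps):
    """FGSM step using the estimated rather than the true gradient."""
    g = estimate_loss_grad(x, y)
    return [xi + eps * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, g)]


x, y = [0.5, 0.1, 0.2], 1
x_adv = black_box_fgsm(x, y, eps=0.3)
print(query(x), query(x_adv))
```

The cost is two queries per input feature, which is why practical black-box attacks on images use cheaper estimators or rely on cross-model generalization from a local surrogate model.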

What defense mechanisms are proposed to protect models from adversarial examples?

-The two main kinds of defense are reactive strategies and proactive strategies. Reactive strategies train a second model to detect adversarial examples so that they can be rejected, while proactive strategies include adversarial examples in the training process to make the model itself more robust.

How does adversarial training help defend against adversarial attacks?

-Adversarial training includes adversarial examples in the training set so that the model learns to classify these modified inputs correctly. Surprisingly, in some reported experiments this not only improves the model's handling of adversarial examples but also improves its classification performance on normal, unmodified images.
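The loop below is a minimal sketch of adversarial training for a logistic-regression model: at every epoch, FGSM examples are regenerated against the current parameters and added to the batch with their true labels. The toy data, ε = 0.5, the learning rate and the epoch count are our own assumptions. Because the data is trivially separable, this shows only the structure of the loop, not the robustness gains reported for deep networks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    # For binary cross-entropy the input gradient is (p - y) * w.
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps=0.5, lr=0.1, epochs=50):
    """Full-batch gradient descent on clean examples plus FGSM examples
    crafted against the current parameters at every epoch."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        # Proactive defense: augment the batch with adversarial versions.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        gw, gb = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict(w, b, x) - y  # dL/dz for cross-entropy
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, gw)]
        b -= lr * gb / len(batch)
    return w, b


def accuracy(w, b, data):
    return sum((predict(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)


# Hypothetical toy data: class 1 on a grid around (2, 2), class 0 mirrored.
grid = [(a, c) for a in (1.5, 2.0, 2.5) for c in (1.5, 2.0, 2.5)]
data = [([a, c], 1) for a, c in grid] + [([-a, -c], 0) for a, c in grid]

w, b = adversarial_train(data)
adv_data = [(fgsm(w, b, x, y, 0.5), y) for x, y in data]
print(accuracy(w, b, data), accuracy(w, b, adv_data))
```

Regenerating the adversarial examples each epoch matters: examples crafted against an earlier version of the model would no longer be the worst case for the current one.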

What real-world implications do adversarial examples have on applications like self-driving cars?

-Adversarial examples pose a significant risk to self-driving cars: a stop sign that looks normal to people could be subtly modified so that the car's vision system reads it as an "infinite speed limit" sign, causing the vehicle to misinterpret the traffic signal and potentially leading to dangerous situations. Such vulnerabilities need to be addressed to ensure the safety and reliability of autonomous systems.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Neural Network - Pengantar Kecerdasan Buatan

How computers are learning to be creative | Blaise Agüera y Arcas

PENGEMBANGAN APLIKASI MOBILE DENGAN LIBRARY KECERDASAN ARTIFISIAL

Why is Linear Algebra Useful?

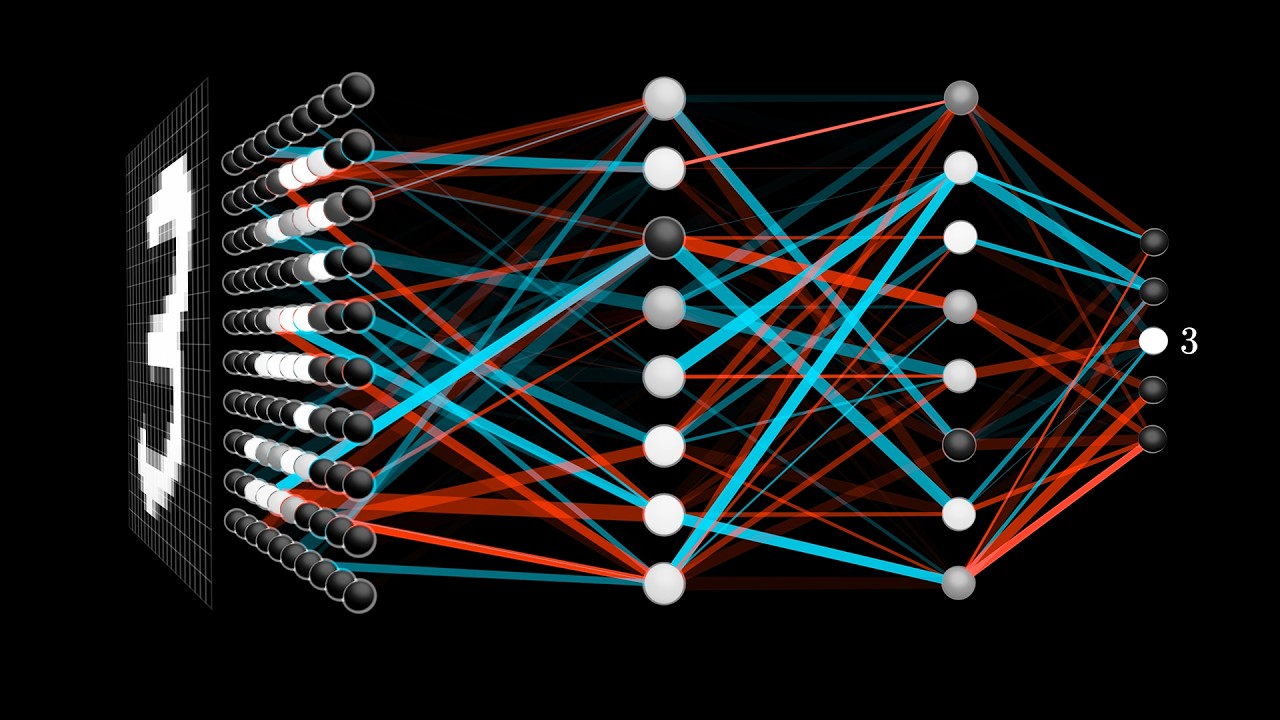

But what is a neural network? | Chapter 1, Deep learning

Machine Learning Fundamentals A - TensorFlow 2.0 Course

5.0 / 5 (0 votes)