1.2.7 Variable-length Encoding

Summary

TLDR: This video discusses the advantages of variable-length encodings over fixed-length encodings in information theory. It explains how computing the expected length of an encoding, by weighting each code word's length by the probability of its symbol, shows which encoding is more efficient. Through a binary-tree example, it illustrates how more probable symbols are assigned shorter encodings, and it then decodes a sample message to show the code in practice. The analysis shows that while variable-length encodings improve efficiency, they do not necessarily reach the theoretical lower bound given by the entropy. The video concludes by previewing future content on generating optimal variable-length codes.

Takeaways

- 😀 Fixed-length encodings are efficient only when all choices have equal probabilities.

- 🤔 Variable-length encodings can optimize efficiency when probabilities differ among choices.

- 📏 The expected length of encoding can be calculated by weighting each symbol's length by its probability.

- 🌟 Higher probability symbols receive shorter encodings to minimize expected length.

- 🌌 Lower probability symbols are assigned longer encodings due to their higher information content.

- 🌳 A variable-length code can be drawn as a binary tree whose leaves are the symbols; the path from the root to a leaf spells out that symbol's code.

- 🔍 Decoding walks the tree from the root, following one branch per encoded bit; reaching a leaf yields a symbol, and decoding restarts at the root.

- 📊 The expected length of the variable-length encoding in the example works out to 1 2/3 (about 1.67) bits per symbol.

- 📉 A fixed-length encoding of the same four symbols needs 2 bits per symbol, so it uses more bits on average.

- 📈 The variable-length encoding came close to the entropy lower bound (about 1.626 bits per symbol) but did not reach it, suggesting room for further optimization.
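The encoding and tree-decoding steps in the takeaways can be sketched in Python. This is a minimal sketch, not the video's own code: the summary does not list the code words, so the table below (B=0, A=11, C=100, D=101) is a hypothetical prefix-free code, and the message "BCABAD" is an invented example.

```python
# Hypothetical prefix-free code (not given in the summary): lengths 1, 2, 3, 3.
CODE = {"A": "11", "B": "0", "C": "100", "D": "101"}


def build_tree(code):
    """Build the code tree as nested dicts: internal nodes map '0'/'1' to
    children, and leaves are the symbol strings. Assumes a prefix-free code."""
    root = {}
    for symbol, word in code.items():
        node = root
        for bit in word[:-1]:
            node = node.setdefault(bit, {})
        node[word[-1]] = symbol
    return root


def encode(message, code):
    """Concatenate the code word of each symbol in the message."""
    return "".join(code[s] for s in message)


def decode(bits, tree):
    """Walk from the root, following one branch per bit; on reaching a leaf,
    emit its symbol and restart at the root."""
    out = []
    node = tree
    for bit in bits:
        node = node[bit]
        if isinstance(node, str):  # reached a leaf
            out.append(node)
            node = tree
    if node is not tree:
        raise ValueError("bit string ended in the middle of a code word")
    return "".join(out)


tree = build_tree(CODE)
bits = encode("BCABAD", CODE)
print(bits)                # 010011011101
print(decode(bits, tree))  # BCABAD
```

Because no code word is a prefix of another, the decoder never needs to look ahead: it emits a symbol the moment it reaches a leaf.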

Q & A

What is the main advantage of variable-length encoding over fixed-length encoding?

-Variable-length encoding allows for shorter expected lengths of encoding by using shorter codes for more probable symbols and longer codes for less probable symbols, resulting in more efficient data representation.

How is the expected length of an encoding computed?

-The expected length is computed by multiplying the length of each symbol's encoding by that symbol's probability of occurrence and summing the results; the total is the average number of bits per encoded symbol.
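The weighted sum can be written as a short Python function. The inputs are assumptions, since the summary does not list them: the probabilities p(A)=1/3, p(B)=1/2, p(C)=p(D)=1/12 are chosen because they reproduce the figures quoted in this summary, and the code words are a hypothetical prefix-free code. `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def expected_length(probs, code):
    """Expected bits per symbol: sum over symbols of p(s) * len(code[s])."""
    return sum(probs[s] * len(code[s]) for s in probs)


# Assumed probabilities and hypothetical code words (not given in the summary).
PROBS = {"A": Fraction(1, 3), "B": Fraction(1, 2),
         "C": Fraction(1, 12), "D": Fraction(1, 12)}
CODE = {"A": "11", "B": "0", "C": "100", "D": "101"}

print(expected_length(PROBS, CODE))  # 5/3
```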

What does entropy (H(X)) represent in the context of encoding?

-Entropy is the average information content per symbol, measured in bits, and is the theoretical lower bound on the expected number of bits per symbol that any unambiguous encoding can achieve.
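A minimal sketch of the entropy calculation, using the standard definition H(X) = sum of p * log2(1/p). The probabilities are an assumption (the summary does not state them); they are chosen because they reproduce the 1626-bit figure quoted later in this Q&A.

```python
import math


def entropy(probs):
    """Shannon entropy in bits: sum of p * log2(1/p) over symbols with p > 0."""
    return sum(p * math.log2(1 / p) for p in probs.values() if p > 0)


# Assumed probabilities (not stated in the summary).
PROBS = {"A": 1 / 3, "B": 1 / 2, "C": 1 / 12, "D": 1 / 12}

print(f"H(X) = {entropy(PROBS):.3f} bits/symbol")  # H(X) = 1.626 bits/symbol
```

A fair coin gives exactly 1 bit, which is a quick sanity check on the function.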

How does the probability of a symbol affect its encoding length?

-Higher probability symbols, which carry less information, are assigned shorter encodings, while lower probability symbols, which convey more information, are assigned longer encodings.
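To see this relationship in numbers, the sketch below compares each symbol's information content, log2(1/p), with its code length. Both inputs are assumptions not given in the summary: probabilities p(A)=1/3, p(B)=1/2, p(C)=p(D)=1/12 and a hypothetical code B=0, A=11, C=100, D=101.

```python
import math

# Assumed probabilities and hypothetical code words (not given in the summary).
PROBS = {"A": 1 / 3, "B": 1 / 2, "C": 1 / 12, "D": 1 / 12}
CODE = {"A": "11", "B": "0", "C": "100", "D": "101"}


def info_vs_length(probs, code):
    """Rows of (symbol, p, information in bits, code length), most probable first."""
    ordered = sorted(probs, key=probs.get, reverse=True)
    return [(s, probs[s], math.log2(1 / probs[s]), len(code[s])) for s in ordered]


for symbol, p, info, length in info_vs_length(PROBS, CODE):
    print(f"{symbol}: p = {p:.3f}, log2(1/p) = {info:.2f} bits, code length = {length}")
```

The code lengths rise as probability falls, but they only roughly track log2(1/p) because each code word must be a whole number of bits.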

What was the example used in the transcript to illustrate variable-length encoding?

-The example involved encoding four symbols (A, B, C, and D) with specified probabilities, demonstrating how shorter codes were assigned to higher-probability symbols.

What is the significance of the binary tree in variable-length encoding?

-The binary tree represents the code: each leaf is a symbol, and the sequence of branches (0 or 1) from the root to that leaf is the symbol's code. Because symbols sit only at leaves, no code is a prefix of another, so an encoded bit stream can be decoded unambiguously.
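The leaf-only property can be checked directly on a code table: it holds exactly when no code word is a prefix of another. The sketch below is a minimal check; the example code tables are hypothetical.

```python
def is_prefix_free(code):
    """Return True if no code word is a prefix of another code word.

    After sorting the words lexicographically, any word that is a prefix of
    some other word is also a prefix of its immediate successor, so checking
    neighboring pairs is enough. Duplicate words also fail the check.
    """
    words = sorted(code.values())
    return all(not later.startswith(earlier) for earlier, later in zip(words, words[1:]))


# Hypothetical code tables for illustration.
print(is_prefix_free({"A": "11", "B": "0", "C": "100", "D": "101"}))  # True
print(is_prefix_free({"A": "1", "B": "0", "C": "10"}))                # False: "1" prefixes "10"
```

In the second table, the bits "10" could mean "A B" or "C", which is exactly the ambiguity a tree with symbols only at its leaves rules out.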

What is the expected length of the variable-length encoding in the provided example?

-The expected length of the variable-length encoding in the example works out to 1 2/3 (about 1.67) bits per symbol.
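Under the assumption that p(B)=1/2, p(A)=1/3 and p(C)=p(D)=1/12, with code lengths of 1, 2, 3 and 3 bits respectively (the summary states neither; these values are chosen because they reproduce its figures), the 1 2/3 works out as follows:

```latex
\bar{L} = p_B\ell_B + p_A\ell_A + p_C\ell_C + p_D\ell_D
        = \tfrac{1}{2}\cdot 1 + \tfrac{1}{3}\cdot 2 + \tfrac{1}{12}\cdot 3 + \tfrac{1}{12}\cdot 3
        = \tfrac{1}{2} + \tfrac{2}{3} + \tfrac{1}{4} + \tfrac{1}{4}
        = \tfrac{5}{3} \approx 1.67 \text{ bits/symbol}
```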

How does the expected length of variable-length encoding compare to fixed-length encoding?

-In the example, a fixed-length encoding would need 2 bits per symbol, or 2000 bits for 1000 symbols, while the variable-length encoding would need about 1667 bits on average for the same 1000 symbols.
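The comparison can be reproduced with a few lines of arithmetic. This is a sketch under assumptions: the probabilities (p(A)=1/3, p(B)=1/2, p(C)=p(D)=1/12) and the code words are not given in the summary, and the fixed-length cost is taken as ceil(log2 N) bits for N symbols.

```python
import math
from fractions import Fraction

# Assumed probabilities and hypothetical code words (not given in the summary).
PROBS = {"A": Fraction(1, 3), "B": Fraction(1, 2),
         "C": Fraction(1, 12), "D": Fraction(1, 12)}
CODE = {"A": "11", "B": "0", "C": "100", "D": "101"}
N_SYMBOLS = 1000

# A fixed-length code needs enough bits to give every symbol a distinct word.
fixed_per_symbol = math.ceil(math.log2(len(PROBS)))                # 2
variable_per_symbol = sum(PROBS[s] * len(CODE[s]) for s in PROBS)  # 5/3

fixed_total = N_SYMBOLS * fixed_per_symbol        # 2000
variable_total = N_SYMBOLS * variable_per_symbol  # 5000/3, an expected value

print(fixed_total, round(float(variable_total)))  # 2000 1667
```

The variable-length total is an expected value; the actual number of bits for a particular 1000-symbol message depends on which symbols it contains.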

What is the lower bound on the number of bits needed for encoding based on entropy?

-The lower bound on the number of bits needed to encode 1000 symbols is 1000 times the entropy, which is about 1626 bits in the example.
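Assuming symbol probabilities that reproduce this figure (p(A)=1/3, p(B)=1/2, p(C)=p(D)=1/12, which the summary does not state), the bound works out as:

```latex
1000\,H(X) = 1000\left(\tfrac{1}{2}\log_2 2 + \tfrac{1}{3}\log_2 3 + 2\cdot\tfrac{1}{12}\log_2 12\right)
           \approx 1000\,(0.5000 + 0.5283 + 0.5975)
           \approx 1626 \text{ bits}
```

Against the roughly 1667 bits expected from the variable-length code, the gap is about 41 bits per 1000 symbols.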

What will the next video discuss following the topic of variable-length encoding?

-The next video will address systematic methods for generating the best possible variable-length codes.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

#50 || Variable-Length Language Model|| Language Modeling || NLP || #nlp

FEMUR TUNNEL CALCULATIONS IN ACL SURGERY

RISC vs CISC | Computer Organization & Architecture

Percobaan Melde - Menentukan Cepar Rambat Gelombang Pada Tali

13" Achilles Tendon!? Giannis Antetokounmpo - Does Length Matter??

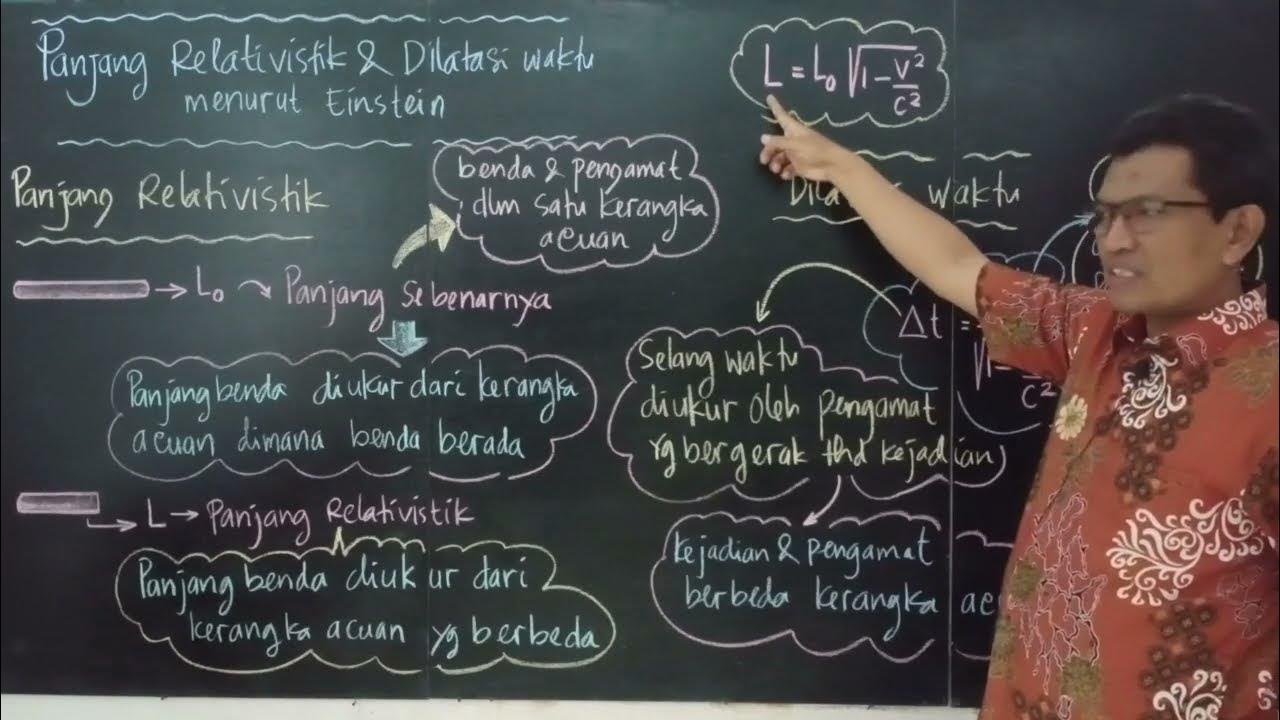

PANJANG RELATIVISTIK & DILATASI WAKTU | Relativitas Einstein - Fisika Kelas 12

5.0 / 5 (0 votes)