74 AlexNet Architecture Explained

Summary

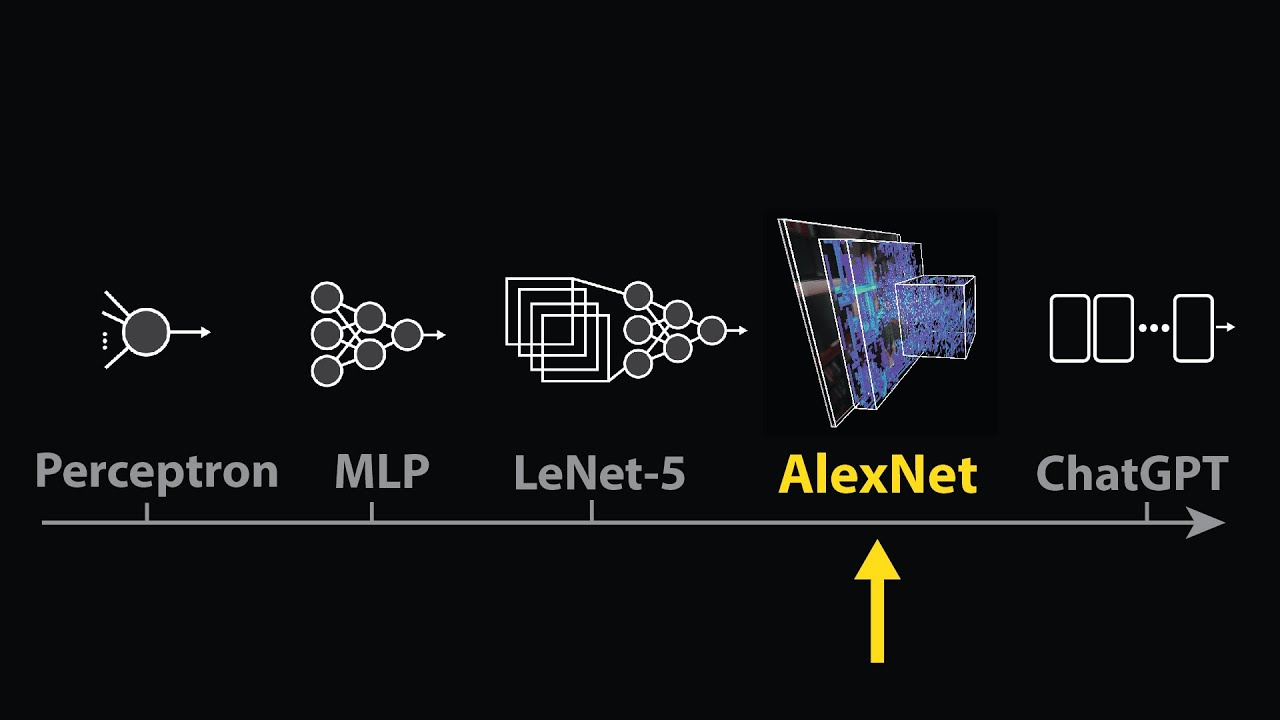

TLDR: The video explains AlexNet, a groundbreaking deep learning model introduced in 2012 by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton at the University of Toronto. It revolutionized computer vision by winning the 2012 ImageNet competition by a wide margin, and it was far larger and more capable than earlier networks such as LeNet-5. AlexNet combined ReLU activation, local response normalization, overlapping pooling, and dropout regularization. It consists of eight learned layers (five convolutional and three fully connected) and was trained on two GPUs. The model marked a significant advance in neural networks, handling much larger image datasets with higher accuracy, and it influenced modern deep learning architectures.

Takeaways

- 🚀 AlexNet was proposed in 2012 by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton at the University of Toronto, marking a breakthrough in computer vision and winning the ImageNet Challenge.

- 🧠 AlexNet has 8 learned layers, 5 convolutional and 3 fully connected, which made it much deeper than earlier convolutional networks.

- 💾 AlexNet had about 62 million parameters, a huge increase over earlier networks like LeNet-5, which had roughly 60 thousand.

- ⚡ AlexNet was among the first large networks to rely on the ReLU activation function in place of the previously common tanh and sigmoid functions.

- 🧩 The network implemented Local Response Normalization (LRN), though this has since been replaced by Batch Normalization (BN).

- 🌊 AlexNet used overlapping pooling: a 3x3 pooling window moved with a stride of 2, so neighboring windows share a row or column of inputs.

- 📈 Data augmentation was a key feature of AlexNet, helping the network generalize better by handling variance in the training data.

- 🛑 The network used dropout with a rate of 50%, preventing overfitting during training by randomly dropping neurons.

- 🔍 AlexNet classified images into 1,000 object categories, making it one of the first neural networks to handle such a large number of classes.

- 🏋️ AlexNet was trained on two GPUs, leveraging early GPU technology to handle its vast amount of parameters and computation.

Q & A

What is AlexNet, and why was it considered a breakthrough?

- AlexNet, proposed in 2012 by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton at the University of Toronto, was a breakthrough in computer vision. It won the ImageNet Large Scale Visual Recognition Challenge in 2012, significantly outperforming competitors while combining several architectural innovations.

How does AlexNet differ from earlier neural networks like LeNet-5?

- Compared to LeNet-5, AlexNet is deeper (8 learned layers), has about 62 million parameters, and benefited from GPU training. LeNet-5 was much smaller in scale, with roughly 60 thousand parameters.
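
The 62 million figure can be checked with simple arithmetic. The sketch below is not taken from the video: it assumes the commonly cited single-tower layer sizes (96, 256, 384, 384, and 256 filters, then fully connected layers of 4096, 4096, and 1000 units) and ignores the split across two GPUs. The helper names are hypothetical.

```python
# Assumption: layer sizes follow the commonly cited single-tower AlexNet
# configuration; they are not stated in the summary above.

def conv_params(kernel, in_channels, out_channels):
    """Weights of a square kernel plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def fc_params(in_features, out_features):
    """Weights of a fully connected layer plus one bias per output unit."""
    return in_features * out_features + out_features


ALEXNET_LAYERS = {
    "conv1": conv_params(11, 3, 96),
    "conv2": conv_params(5, 96, 256),
    "conv3": conv_params(3, 256, 384),
    "conv4": conv_params(3, 384, 384),
    "conv5": conv_params(3, 384, 256),
    "fc6": fc_params(6 * 6 * 256, 4096),  # flattened 6x6x256 feature map
    "fc7": fc_params(4096, 4096),
    "fc8": fc_params(4096, 1000),         # one output per ImageNet class
}

total_params = sum(ALEXNET_LAYERS.values())

for name, count in ALEXNET_LAYERS.items():
    print(f"{name}: {count:,}")
print(f"total: {total_params:,}")
```

Under these assumptions the three fully connected layers hold about 94% of all parameters. The original two-GPU version restricts some connections between the GPUs, so its count is somewhat lower.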

How many GPUs were used to train AlexNet, and what was notable about this at the time?

- AlexNet was trained on two GPUs. This was notable in 2012 because GPUs were far less powerful and had much less memory than today, so the model was split across two of them to make large-scale training feasible.

What are the key components of AlexNet’s architecture?

- AlexNet consists of 8 learned layers: 5 convolutional layers and 3 fully connected layers. It also uses ReLU activation functions, local response normalization, overlapping pooling layers, and dropout for regularization.
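
To see how the five convolutional layers lead into the fully connected ones, it helps to trace the spatial size of the feature map. This is a rough sketch, not the video's own code. The kernel sizes, strides, and padding are the commonly cited values and are assumptions here, as is the 227x227 input (the size that makes the arithmetic come out evenly).

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumption: (name, kernel, stride, padding) per stage, as commonly cited.
STAGES = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace_alexnet(size=227):
    """Return [(stage name, spatial size after that stage), ...]."""
    trace = []
    for name, kernel, stride, padding in STAGES:
        size = out_size(size, kernel, stride, padding)
        trace.append((name, size))
    return trace


for name, size in trace_alexnet():
    print(f"{name}: {size}x{size}")
```

With these values the final pooling stage leaves a 6x6 map, which is flattened and fed to the first fully connected layer.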

What activation function did AlexNet use, and why was it significant?

- AlexNet used the ReLU (Rectified Linear Unit) activation function and helped popularize it. ReLU was significant because it does not saturate for positive inputs, which allowed much faster training than the previously used tanh and sigmoid functions.
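
A small illustration of why ReLU can train faster: for large inputs the gradients of tanh and sigmoid shrink toward zero, while the gradient of ReLU stays at 1 for any positive input. The function names below are illustrative only.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # Gradient is 1 for positive inputs and 0 otherwise.
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)


for x in (0.5, 2.0, 5.0):
    print(
        f"x={x}: relu'={relu_grad(x):.4f} "
        f"tanh'={tanh_grad(x):.4f} sigmoid'={sigmoid_grad(x):.4f}"
    )
```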

What is Local Response Normalization (LRN) in AlexNet, and how is it used today?

- Local Response Normalization (LRN) normalizes each neuron's output using the activity of neurons at the same spatial position in adjacent feature maps (channels). Today, LRN has mostly been replaced by batch normalization, which is more effective.
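
As a minimal sketch, LRN at a single spatial position divides each channel's activation by a term built from the squared activations of its neighboring channels. The hyperparameters below (n=5, k=2, alpha=1e-4, beta=0.75) are the values usually quoted for AlexNet and are assumptions here, since the summary does not give them. The function name is hypothetical.

```python
def local_response_norm(channels, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize activations at ONE spatial position across channels.

    channels: list of floats, one activation per feature map.
    n: number of adjacent channels in the normalization window.
    """
    half = n // 2
    count = len(channels)
    normalized = []
    for i, activation in enumerate(channels):
        lo = max(0, i - half)
        hi = min(count - 1, i + half)
        # Sum of squares over the neighboring channels, clipped at the edges.
        sum_sq = sum(v * v for v in channels[lo:hi + 1])
        normalized.append(activation / (k + alpha * sum_sq) ** beta)
    return normalized


print(local_response_norm([1.0, 0.0, 0.0]))
print(local_response_norm([1.0, 100.0, 0.0]))  # strong neighbor dampens channel 0
```

A strongly active neighbor makes the denominator larger, so nearby channels compete with each other.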

What is overlapping pooling in AlexNet, and how does it differ from non-overlapping pooling?

- Overlapping pooling in AlexNet involves using a 3x3 pooling layer with a stride of 2, which causes overlap between the pooled regions. In contrast, non-overlapping pooling typically uses a 2x2 pooling layer with a stride of 2, resulting in no overlap.
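
The difference is easiest to see in one dimension. The sketch below lists which input positions each pooling window covers; the helper names are illustrative only.

```python
def pool_windows(length, kernel, stride):
    """Return the (first, last) input index covered by each window."""
    return [
        (start, start + kernel - 1)
        for start in range(0, length - kernel + 1, stride)
    ]


def max_pool_1d(values, kernel, stride):
    """Max pooling over a 1D list, without padding."""
    return [
        max(values[start:start + kernel])
        for start in range(0, len(values) - kernel + 1, stride)
    ]


# Kernel 3, stride 2: neighboring windows share one position.
print(pool_windows(7, kernel=3, stride=2))
# Kernel 2, stride 2: windows tile the input with no overlap.
print(pool_windows(6, kernel=2, stride=2))
print(max_pool_1d([1, 5, 2, 0, 3, 9, 4], kernel=3, stride=2))
```

With a kernel of 3 and a stride of 2, positions 2 and 4 each fall into two windows. With a kernel of 2 and a stride of 2, every position belongs to exactly one window.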

What is data augmentation, and how was it applied in AlexNet?

- Data augmentation in AlexNet involved generating variations of the input images to increase the size and diversity of the training data. This allowed the network to handle variances in inputs more effectively.
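
The summary does not say which variations were generated. As one plausible illustration, the sketch below applies a random crop followed by a random horizontal flip to an image stored as a nested list. Both transforms and all function names are assumptions chosen for the example, not a description of the exact pipeline in the video.

```python
import random


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w patch at a random position."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def augment(image, crop_h, crop_w, rng):
    """One random variation: crop, then flip with probability 0.5."""
    patch = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < 0.5:
        patch = horizontal_flip(patch)
    return patch


rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[row * 4 + col for col in range(4)] for row in range(4)]
for _ in range(3):
    print(augment(image, 3, 3, rng))
```

Each call yields a slightly different view of the same image, so the network sees more varied inputs without any new labeled data.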

What is the role of dropout in AlexNet, and why was it important?

- Dropout in AlexNet randomly drops each affected neuron with a probability of 50% during training to prevent overfitting. It was an important regularization technique for improving the network's ability to generalize.
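
A minimal sketch of dropout with a rate of 0.5 follows. It uses the "inverted" variant common today, which rescales the surviving activations during training. This is an assumption for the example: the original network instead scaled outputs at test time, which gives the same result in expectation. The function name is hypothetical.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Zero each activation with probability p during training.

    Surviving activations are divided by (1 - p) so the expected
    value of each output matches the input (inverted dropout).
    """
    if not training:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], training=False))
```

Because a different random subset of neurons is dropped on every pass, no single neuron can rely on specific others being present.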

How did AlexNet’s use of max-pooling differ from earlier networks?

- AlexNet used max-pooling layers with a 3x3 kernel and a stride of 2 after some of its convolutional layers (the first, second, and fifth) rather than after every one. This replaced the average-style pooling found in earlier networks like LeNet-5, and max-pooling was found to work better for feature extraction.
