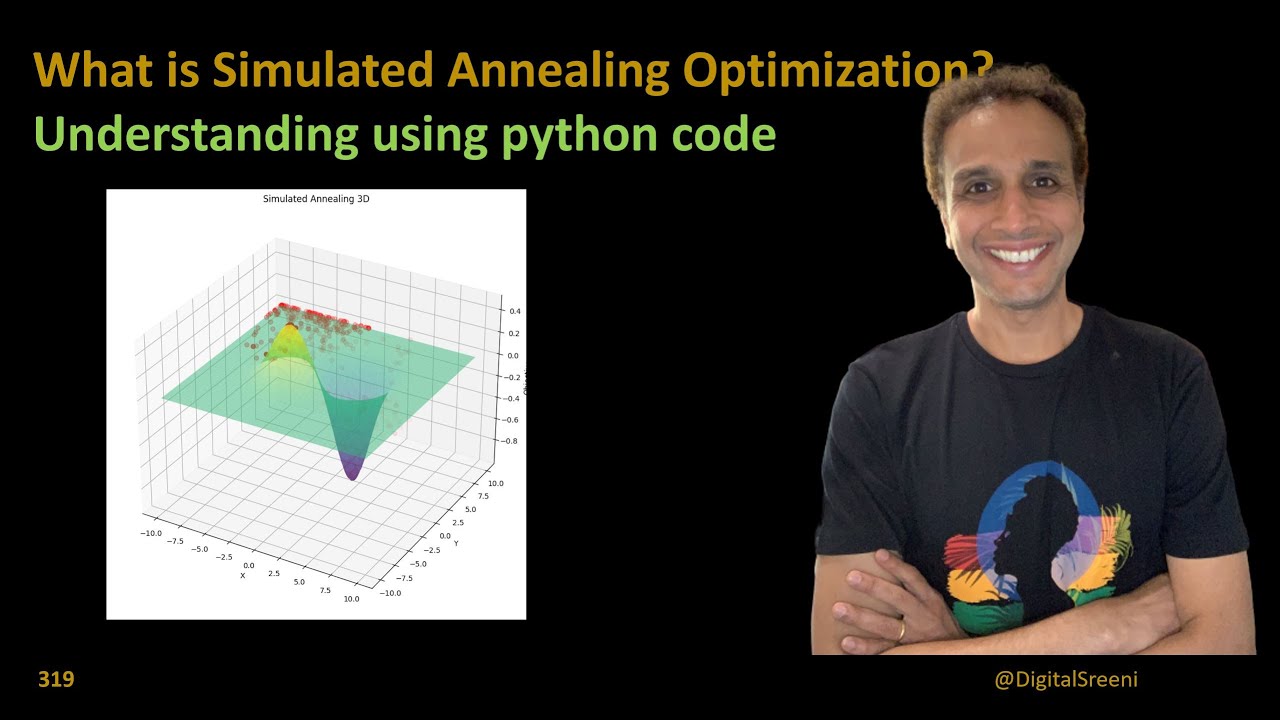

The simulated annealing algorithm explained with an analogy to a toy

Summary

TLDRThe video explains the simulated annealing algorithm, a method used to solve optimization problems by mimicking the controlled cooling process in metallurgy. It starts with a random configuration and gradually improves it, accepting both good and bad moves depending on a temperature variable that decreases over time. The algorithm is applied to complex problems like the traveling salesman and protein structure design. The video also contrasts simulated annealing with hill climbing and random walk methods, highlighting its balance between exploration and optimization.

Takeaways

- 🔧 The simulated annealing algorithm is inspired by the annealing process in metallurgy, where controlled cooling strengthens metals.

- 🚶 Simulated annealing is widely used in solving optimization problems like the traveling salesman, circuit board design, robot path planning, and bioinformatics.

- 🔄 The algorithm allows for accepting both good and bad moves initially, becoming more selective as it progresses, similar to how we play a water ring game.

- 📊 To apply simulated annealing, we need a way to define the 'energy' or 'fitness' of a solution, which is a measure of how good a configuration is.

- 🎯 The goal is to avoid getting stuck in local minima or maxima and work toward the global optimum solution.

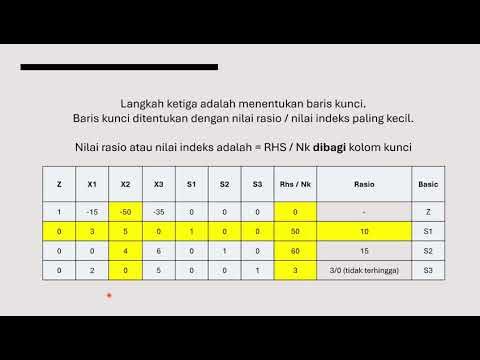

- 💡 The process involves computing the change in energy, represented by delta E, after altering the system's configuration.

- 🌡️ Temperature (T) is a key factor in the algorithm. Initially, the temperature is high, allowing bad moves to be accepted with high probability, and it decreases gradually.

- 📈 As the temperature decreases, the algorithm becomes less likely to accept bad moves, focusing on refining the solution.

- ⚙️ The algorithm repeats this process over several iterations (epochs) with different temperature values, trying to reach the global minimum or maximum.

- 🔍 The parameters like Tmax, Tmin, and number of epochs depend on the specific problem being solved and can be adjusted based on trial and error.

Q & A

What is the origin of the term 'annealing' in the simulated annealing algorithm?

-'Annealing' comes from metallurgy, where it refers to a process of slow and controlled cooling to make strong metallic objects. This concept is applied to the simulated annealing algorithm in computer science.

What types of problems can the simulated annealing algorithm be used to solve?

-The simulated annealing algorithm is commonly applied to solve problems like the travelling salesman problem, designing printed circuit boards, robot path planning, and in bioinformatics to design 3D protein structures.

How does the water bubble ring toy help explain the simulated annealing algorithm?

-The toy illustrates how one might start by pressing buttons vigorously (randomly exploring the solution space), then progressively become more careful and deliberate (moving towards an optimal solution). This mimics the way simulated annealing explores different configurations before narrowing down to a better solution.

How does the simulated annealing algorithm treat bad moves during the optimization process?

-In the beginning, the algorithm allows bad moves to explore the solution space, but as it progresses (temperature decreases), the acceptance of bad moves becomes less likely, guiding the algorithm towards better solutions.

What is the role of energy in the simulated annealing algorithm?

-Energy (E) measures how good a configuration is in optimization problems. The algorithm computes the energy change (ΔE) between configurations, and this value is used to decide whether to accept a new configuration.

What is a local maximum, and why is it important in the simulated annealing algorithm?

-A local maximum is a point in the solution space where the configuration appears optimal but is not the best possible solution (global maximum). The simulated annealing algorithm must avoid getting stuck in local maxima by accepting occasional bad moves early in the process.

How does temperature influence the acceptance of bad moves in the simulated annealing algorithm?

-At high temperatures, the probability of accepting bad moves is high, allowing exploration of the solution space. As temperature decreases, this probability lowers, leading the algorithm to focus on good moves and refine the solution.

What happens if the change in energy (ΔE) is very high during the optimization process?

-If the change in energy (ΔE) is very high, the probability of accepting the move decreases, indicating a larger difference between the old and new configurations, and the algorithm is less likely to accept the move.

What is the difference between hill climbing and random walk algorithms compared to simulated annealing?

-Hill climbing always accepts better moves, making it prone to getting stuck in local maxima. A random walk ignores the quality of moves, leading to endless exploration. Simulated annealing balances exploration and optimization by allowing bad moves initially but becoming selective as the solution progresses.

What are the key parameters in the simulated annealing algorithm, and how are they determined?

-The key parameters are maximum temperature (Tmax), minimum temperature (Tmin), and the number of epochs (iterations). Tmax is usually set to a high value (e.g., 3000-5000), Tmin to a low value (e.g., 0-10), and the number of epochs typically ranges from 100 to 200. These parameters are problem-dependent and adjusted based on trial and error.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade Now5.0 / 5 (0 votes)