ADABOOST: GEOMETRIC INTUITION LEC # 425

Summary

TLDR: In this lecture on applied data science, the instructor discusses AdaBoost, a popular boosting algorithm, and provides a geometric interpretation of its workings. AdaBoost, commonly used in computer vision and image processing, focuses on adjusting weights for misclassified data points in each iteration, thus adapting to errors. The lecture compares AdaBoost with Gradient Boosting, highlighting their differences and similarities. AdaBoost is explained step-by-step using a toy example, emphasizing its effectiveness in face detection, while noting that Gradient Boosting is more widely used in general-purpose machine learning tasks.

Takeaways

- 📚 AdaBoost is a popular boosting algorithm, also known as Adaptive Boosting, often compared with Gradient Boosting but with key differences.

- 🔍 AdaBoost is widely used in computer vision and image processing applications, especially in face detection, although it can be used in other fields.

- 🧠 The core idea of AdaBoost is to adaptively give more weight to misclassified points during each iteration of the model training process.

- 🌟 The process starts with a decision tree, typically a decision stump (a shallow tree with depth = 1), to classify the data into different regions.

- 📈 After each round of training, the misclassified points are given higher weight, which influences the next model to focus on correcting these errors.

- 📊 The final AdaBoost model is a combination of several weak classifiers (decision stumps), each weighted by how well they perform on the data.

- 🎯 The key difference between AdaBoost and Gradient Boosting is that AdaBoost assigns higher weight to misclassified points, while Gradient Boosting fits each new model to pseudo-residuals given by the negative gradient of the loss function.

- 💡 In AdaBoost, weights are updated exponentially for the misclassified points to emphasize their importance in the subsequent rounds of training.

- 🚀 AdaBoost’s effectiveness is demonstrated in tasks like face detection, but for general-purpose machine learning, Gradient Boosted Decision Trees (GBDT) are often more widely used.

- 🛠️ AdaBoost is available in libraries like Scikit-learn, and its variations can be found in other boosting algorithms.
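
As a rough illustration of the Scikit-learn availability mentioned in the last takeaway, the sketch below fits `AdaBoostClassifier` on synthetic data. The dataset, the number of estimators, and the random seeds are assumptions chosen for the example and do not come from the lecture. When no base estimator is passed, Scikit-learn boosts depth-1 decision trees, which matches the decision stumps described above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier

# Synthetic two-class data (hypothetical, for illustration only).
X, y = make_classification(n_samples=200, n_features=4, random_state=0)

# With no base estimator given, scikit-learn boosts depth-1 decision trees (stumps).
clf = AdaBoostClassifier(n_estimators=50, random_state=0)
clf.fit(X, y)

train_accuracy = clf.score(X, y)
print(f"training accuracy: {train_accuracy:.3f}")
print("number of fitted stumps:", len(clf.estimators_))
```

Training accuracy is reported here only to confirm that the model fits; a held-out split would be needed to judge generalization.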

Q & A

What is AdaBoost, and how does it differ from gradient boosting?

-AdaBoost, also known as Adaptive Boosting, is a popular boosting algorithm similar to gradient boosting, but with key differences. AdaBoost focuses on adapting to errors by increasing the weights of misclassified points, whereas gradient boosting uses pseudo-residuals computed from the negative gradient of the loss function.
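
To make the pseudo-residual idea concrete, here is a minimal sketch that assumes a squared-error loss L = 0.5 * (y - F)^2. The summary does not name a loss, so this choice is an assumption. For this loss the negative gradient with respect to the current prediction F is simply y - F, which is what the next model in gradient boosting would be fitted to.

```python
def pseudo_residuals_squared_loss(y_true, y_pred):
    """Negative gradient of 0.5 * (y - F)**2 with respect to F, per sample."""
    return [y - f for y, f in zip(y_true, y_pred)]

# Hypothetical targets and current ensemble predictions.
y_true = [3.0, 1.0, 4.0]
y_pred = [2.5, 2.0, 4.0]
residuals = pseudo_residuals_squared_loss(y_true, y_pred)
print(residuals)
```

AdaBoost, by contrast, leaves the targets unchanged and alters how much each training point counts.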

What is a decision stump in the context of AdaBoost?

-A decision stump is a weak learner in AdaBoost that is essentially a decision tree with a depth of one. It tests a single feature against a single threshold, so its decision boundary is an axis-parallel hyperplane (in two dimensions, a line parallel to the x or y axis) that separates the data into two classes.
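
A decision stump can be sketched in a few lines of plain Python. The function names `fit_stump` and `stump_predict`, the toy points, and the exhaustive midpoint search are all assumptions made for illustration, not the lecture's code. The stump picks the feature, threshold, and polarity with the lowest weighted error.

```python
def stump_predict(x, feature, threshold, polarity):
    """Predict +polarity on one side of the axis-parallel threshold, -polarity on the other."""
    return polarity if x[feature] > threshold else -polarity

def fit_stump(X, y, w):
    """Return (feature, threshold, polarity, weighted_error) of the best stump.

    X: list of points, y: labels in {-1, +1}, w: sample weights summing to 1.
    """
    best = None
    for j in range(len(X[0])):
        values = sorted({x[j] for x in X})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for t in [(a + b) / 2 for a, b in zip(values, values[1:])]:
            for polarity in (+1, -1):
                err = sum(
                    wi for x, yi, wi in zip(X, y, w)
                    if stump_predict(x, j, t, polarity) != yi
                )
                if best is None or err < best[3]:
                    best = (j, t, polarity, err)
    return best

# Hypothetical 2-D toy data that a vertical line can separate.
X = [[1, 5], [2, 1], [3, 4], [6, 2], [7, 5], [8, 1]]
y = [-1, -1, -1, 1, 1, 1]
w = [1 / 6] * 6
best = fit_stump(X, y, w)
print(best)
```

On this toy data the chosen split is on feature 0, which corresponds to a vertical line in the plane.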

How are misclassified points handled in AdaBoost?

-In AdaBoost, misclassified points are given more weight in the next round of training. This is done by either up-sampling the misclassified points or explicitly assigning higher weights to them. The goal is to make the next model focus more on correcting these errors.
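
The two options in this answer can be sketched as follows. The point names, the weights, and the helper `weighted_error` are hypothetical and only meant to show the contrast between drawing a new sample in proportion to the weights and keeping the data fixed while scoring errors by weight.

```python
import random
from collections import Counter

points = ["a", "b", "c", "d"]     # hypothetical training points
weights = [0.1, 0.1, 0.7, 0.1]    # "c" was misclassified, so it carries more weight

# Option 1: up-sample. Draw a new training set with probability proportional to weight.
rng = random.Random(0)            # fixed seed so the draw is reproducible
resampled = rng.choices(points, weights=weights, k=1000)
counts = Counter(resampled)
print(counts)

# Option 2: keep the data unchanged and score a learner by its weighted error,
# i.e. the total weight of the points it gets wrong.
def weighted_error(y_true, y_pred, w):
    return sum(wi for yt, yp, wi in zip(y_true, y_pred, w) if yt != yp)

print(weighted_error([1, -1, 1, -1], [1, -1, -1, -1], weights))
```

Either way, a learner that keeps getting the heavily weighted point wrong pays a larger penalty than one that gets a lightly weighted point wrong.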

What happens at each stage of AdaBoost when a new model is trained?

-At each stage of AdaBoost, a new weak learner (like a decision stump) is trained on the weighted dataset. The weights of misclassified points from the previous round are increased, and the new model attempts to classify them correctly.

What role does the weight (Alpha) play in AdaBoost?

-The weight (Alpha) in AdaBoost determines the influence of each weak learner on the final model. It is calculated from the learner's weighted error on the training data: the lower the error, the higher the Alpha value.
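
The summary does not give the formula for Alpha. A common choice in the standard discrete AdaBoost formulation is alpha = 0.5 * ln((1 - error) / error), and the sketch below assumes that form. The function name `learner_weight` is hypothetical.

```python
import math

def learner_weight(weighted_error):
    """Alpha for a weak learner, given its weighted error strictly between 0 and 1."""
    return 0.5 * math.log((1.0 - weighted_error) / weighted_error)

# Lower error gives a larger alpha; an error of 0.5 (random guessing) gives zero.
for eps in (0.1, 0.3, 0.5):
    print(f"error={eps:.1f} -> alpha={learner_weight(eps):.3f}")
```

Under this formula a learner that is worse than chance (error above 0.5) would receive a negative Alpha, which effectively flips its vote.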

How does AdaBoost combine multiple weak learners?

-AdaBoost combines multiple weak learners by weighting their predictions using their respective Alpha values. The final model is a weighted sum of the individual models' predictions, allowing it to make more accurate classifications.
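
A minimal sketch of this weighted vote, assuming labels in {-1, +1} and a final prediction taken as the sign of the weighted sum. The three stumps and their Alpha values are made up for illustration.

```python
def ensemble_predict(x, learners, alphas):
    """Sign of the alpha-weighted sum of weak-learner votes (each vote is -1 or +1)."""
    score = sum(alpha * h(x) for h, alpha in zip(learners, alphas))
    return 1 if score >= 0 else -1

# Three hypothetical stumps on a 2-D point (x[0], x[1]).
learners = [
    lambda x: 1 if x[0] > 2.0 else -1,
    lambda x: 1 if x[1] > 5.0 else -1,
    lambda x: 1 if x[0] > 7.0 else -1,
]
alphas = [0.42, 0.65, 0.92]   # made-up learner weights

print(ensemble_predict((3.0, 6.0), learners, alphas))
print(ensemble_predict((3.0, 4.0), learners, alphas))
```

For the point (3.0, 6.0) the third stump has the largest Alpha and votes -1, but the other two together outweigh it, so the combined prediction is +1.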

What are the main applications of AdaBoost?

-AdaBoost is commonly used in image processing applications, especially for tasks like face detection. However, it can also be applied to non-image processing tasks. It is particularly effective when combined with other techniques in tasks that involve identifying patterns or features in images.

Why are weights increased exponentially in AdaBoost?

-In AdaBoost, the weight of each misclassified point is multiplied by an exponential factor that grows with the Alpha of the current learner, so mistakes made by an accurate learner are emphasized most strongly. This adaptive mechanism makes subsequent models home in on the points that are hardest to classify.
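
One round of the exponential update can be worked through on five toy points. The sketch assumes the standard discrete AdaBoost rule, in which each weight is multiplied by exp(-alpha * y * h(x)) and the weights are then renormalized to sum to 1. The labels, predictions, and function name are hypothetical.

```python
import math

def update_weights(w, y_true, y_pred, alpha):
    """One reweighting step followed by normalisation."""
    new_w = [
        wi * math.exp(-alpha * yt * yp)   # yt * yp is -1 for a mistake, +1 otherwise
        for wi, yt, yp in zip(w, y_true, y_pred)
    ]
    total = sum(new_w)
    return [wi / total for wi in new_w]

y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]        # only the last point is misclassified
w = [0.2] * 5                      # uniform starting weights
eps = 0.2                          # weighted error of this learner
alpha = 0.5 * math.log((1 - eps) / eps)

new_w = update_weights(w, y_true, y_pred, alpha)
print([round(v, 3) for v in new_w])
```

After the update the single misclassified point holds half of the total weight, so the next weak learner can no longer afford to ignore it.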

How does AdaBoost compare to Gradient Boosted Decision Trees (GBDT) in terms of usage?

-While AdaBoost is effective in certain areas like face detection, Gradient Boosted Decision Trees (GBDT) are more commonly used in general-purpose machine learning, particularly at internet companies. GBDT tends to be preferred due to its flexibility and performance across various tasks.

What is the final model in AdaBoost composed of?

-The final model in AdaBoost is a weighted sum of the weak learners' predictions. Each weak learner contributes according to its Alpha value, which reflects how accurate it was on the weighted training data, so more accurate learners have more say in the final classification.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Gradient Boost Part 1 (of 4): Regression Main Ideas

PSD - Data Visualization Part.01/02

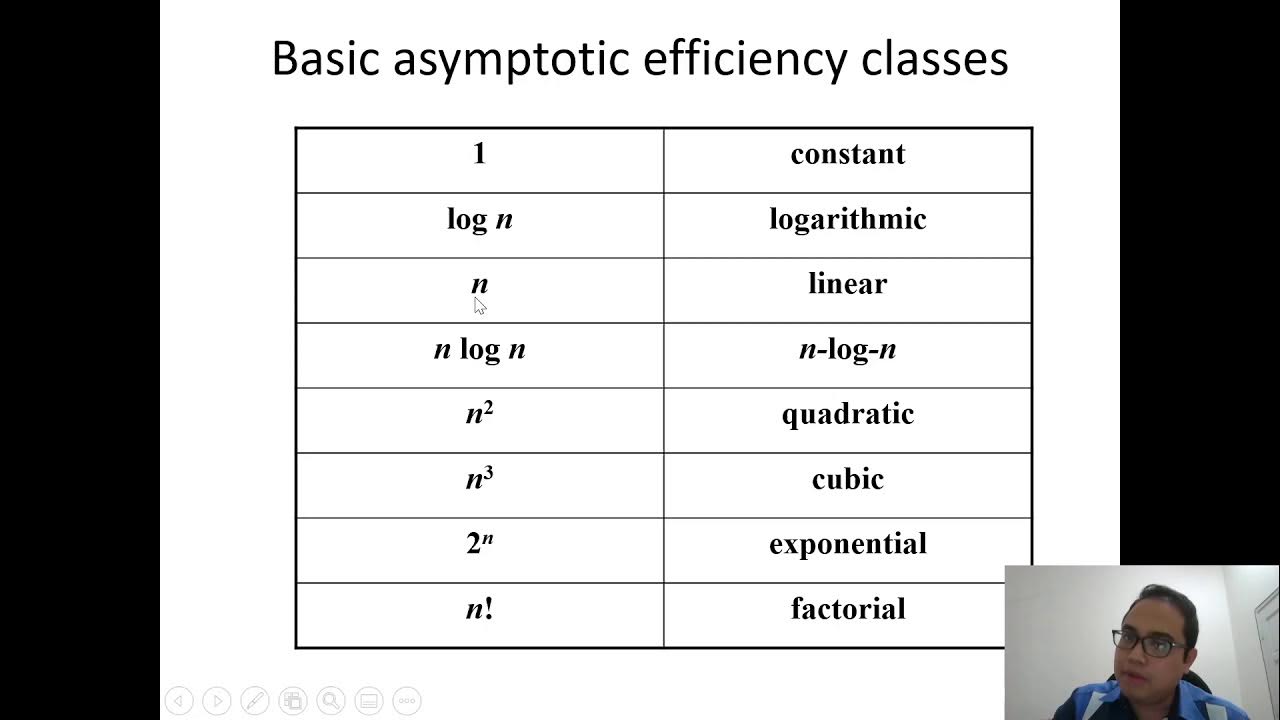

CSC645 - Chapter 1 (Continued) - Fundamentals of Algorithm Analysis

noc19-cs33 Lec 26 Parallel K-means using Map Reduce on Big Data Cluster Analysis

AdaBoost Ensemble Learning Solved Example Ensemble Learning Solved Numerical Example Mahesh Huddar

How to start a Career in Data Science - [Hindi] - Quick Support

5.0 / 5 (0 votes)