How a machine learns

Summary

TLDRThis script delves into the workings of neural networks, the cornerstone of machine learning. It explains how ANNs learn through layers of neurons, utilizing weights and activation functions to process inputs and produce outputs. The importance of non-linearity introduced by activation functions like ReLU, sigmoid, and softmax is highlighted. The script also covers the backpropagation process using gradient descent to minimize loss functions like MSE and cross-entropy, adjusting weights for improved predictions. Hyperparameters' role in guiding the learning process is also underscored.

Takeaways

- 🧠 **Neural Networks Fundamentals**: Neural networks like DNN, CNN, RNN, and LLMs are based on the basic structure of ANN.

- 🌐 **Structure of ANN**: ANNs consist of an input layer, hidden layers, and an output layer, with neurons connected by synapses.

- 🔢 **Learning Process**: ANNs learn by adjusting weights through the training process to make accurate predictions.

- 📈 **Weighted Sum Calculation**: The first step in learning is calculating the weighted sum of inputs multiplied by their weights.

- 📉 **Activation Functions**: These are crucial for introducing non-linearity into the network, allowing complex problem-solving.

- 🔄 **Output Layer Calculation**: The weighted sum is calculated for the output layer, potentially using different activation functions.

- 🎯 **Prediction Representation**: The predicted result is denoted as \( \hat{y} \), while the actual result is \( y \).

- 📊 **Activation Functions Explained**: ReLU, sigmoid, and Tanh are common functions, with softmax used for multi-class classification.

- 📉 **Loss and Cost Functions**: These measure the difference between predicted and actual values, guiding the learning process.

- 🔍 **Backpropagation**: A method for adjusting weights and biases based on the significant difference between predictions and actual results.

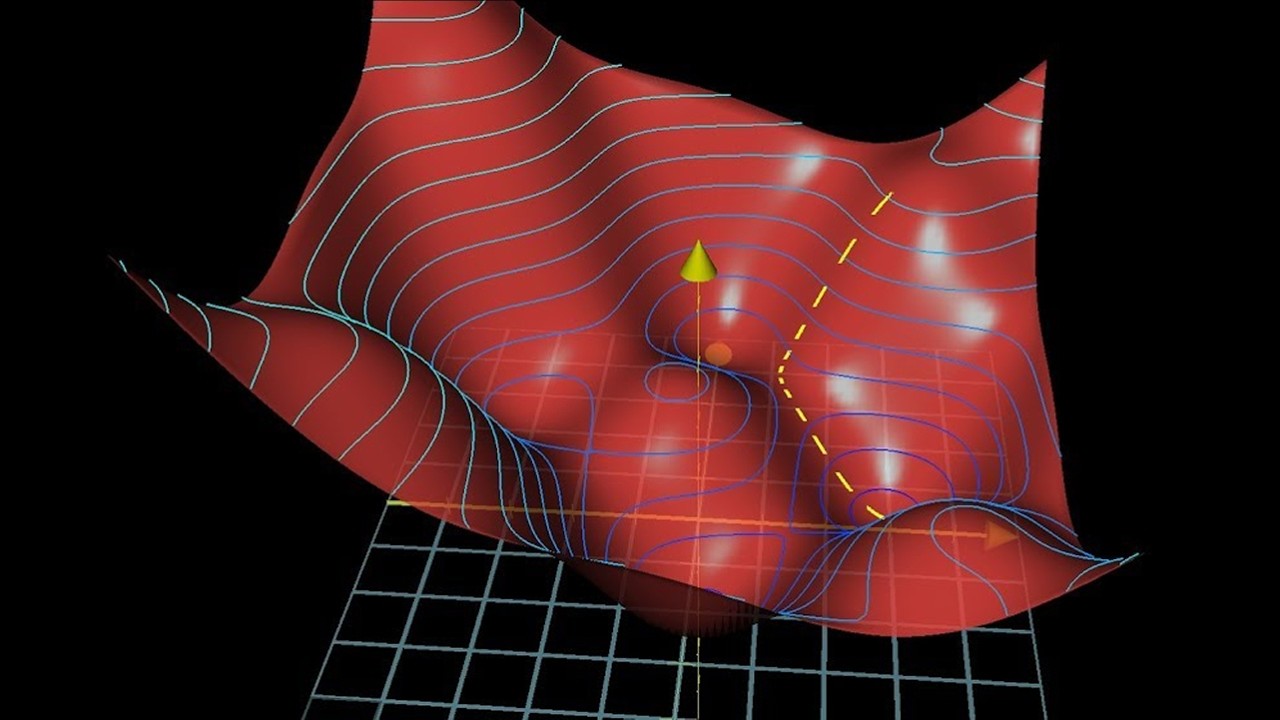

- 🔄 **Gradient Descent**: An optimization algorithm used to minimize the cost function by iteratively adjusting weights.

- 🔁 **Iteration and Epochs**: The training process is repeated through epochs until the cost function reaches an optimum value.

- 🛠️ **Hyperparameters**: Parameters like learning rate and number of epochs that determine the learning process, set before training begins.

Q & A

What is the fundamental structure of an artificial neural network (ANN)?

-An ANN has three layers: an input layer, a hidden layer, and an output layer. Each node in these layers represents a neuron.

How do neural networks learn from examples?

-Neural networks learn from examples by adjusting the weights through a training process, aiming to minimize the difference between predicted and actual results.

What is the purpose of the weights in a neural network?

-The weights in a neural network retain the information learned through the training process and are used to calculate the weighted sum of inputs.

Why are activation functions necessary in neural networks?

-Activation functions are necessary to introduce non-linearity into the network, allowing it to learn complex patterns that linear models cannot capture.

What is the difference between a cost function and a loss function?

-A loss function measures the error for a single training instance, while a cost function measures the average error across the entire training set.

How does gradient descent help in training a neural network?

-Gradient descent is an optimization algorithm that adjusts the weights and biases to minimize the cost function by iteratively moving in the direction of the steepest descent.

What is the role of the learning rate in gradient descent?

-The learning rate determines the step size during the gradient descent process, influencing how quickly the neural network converges to the optimal solution.

Why might a neural network with only linear activation functions not be effective?

-A neural network with only linear activation functions would essentially be a linear model, which cannot capture complex, non-linear relationships in the data.

What is the significance of the number of epochs in training a neural network?

-The number of epochs determines how many times the entire training dataset is passed through the network. It affects the thoroughness of the training process.

How does backpropagation contribute to the learning process of a neural network?

-Backpropagation is the process of adjusting the weights and biases in the opposite direction of the gradient to minimize the cost function when there is a significant difference between predicted and actual results.

What is the softmax activation function used for, and how does it differ from the sigmoid function?

-The softmax activation function is used for multi-class classification problems, outputting a probability distribution across multiple classes. The sigmoid function, on the other hand, is used for binary classification, outputting a probability between 0 and 1 for a single class.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Backpropagation in Neural Networks | Back Propagation Algorithm with Examples | Simplilearn

Gradient descent, how neural networks learn | Chapter 2, Deep learning

You Don't Understand AI Until You Watch THIS

Deep Learning: In a Nutshell

How computers are learning to be creative | Blaise Agüera y Arcas

What is a Neural Network?

5.0 / 5 (0 votes)