How to Pick the Right AI Foundation Model

Summary

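TL;DR: This video presents an AI model selection framework that helps you choose a suitable generative AI model for a specific use case. The framework has six stages: articulate the use case, list the available models, identify each model's size, performance, cost, risk, and deployment method, evaluate those characteristics against the use case, run tests, and choose the option that provides the most value. By comparing the performance, cost, and risk of different models, you can find the one that best fits your needs.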

Takeaways

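- 🎯 Articulating the use case is the first step in choosing an AI model; the usage scenario needs a clear definition.

- 📋 Among the many foundation models available, picking a right-sized model matters more than simply reaching for the largest one.

- 💡 Consider what a model costs you, including compute cost, complexity, and variability.

- 🔍 When evaluating a model, consider its size, performance, cost, risk, and deployment method.

- 🚀 Testing is the key to assessing model performance and should be done against the specific use case.

- 🏆 When choosing a model, weigh three key factors: accuracy, reliability, and speed.

- 📊 Accuracy can be measured objectively and repeatably by choosing relevant evaluation metrics.

- 🛡️ Reliability covers the model's consistency, explainability, and ability to avoid producing harmful content.

- ⏱️ Speed is an important part of the user experience, but it often trades off against accuracy.

- 📱 When deploying a model, consider where and how the model and the data will be deployed.

- 🌐 A single model may not suit every use case; a multi-model approach may better fit an organization's needs.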

Q & A

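How do you decide which foundation model to use for a generative AI use case?

- Choosing a foundation model means weighing factors such as the model's training data, parameter count, cost, complexity, and variability. Evaluate and select the most suitable model for your specific use case by working through the six-stage AI model selection framework.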

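Is the largest foundation model always the best choice?

- Not always. The largest models usually generalize well, but they also bring higher compute cost, complexity, and variability. The better approach is to choose a model sized for the specific use case.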
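
One concrete way to see why size drives compute cost is to estimate how much memory the weights alone occupy. The sketch below is a back-of-the-envelope calculation, not something taken from the video: the function name is hypothetical, and it ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory needed just to hold the model weights.

    bytes_per_parameter=2 assumes 16-bit weights (an assumption);
    use 4 for 32-bit or 1 for 8-bit quantized weights.
    """
    return num_parameters * bytes_per_parameter / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, for illustration only.
    for params in (7e9, 70e9):
        print(f"{params:.0e} parameters -> {weight_memory_gb(params):.0f} GB")
```

A tenfold increase in parameters means a tenfold increase in weight memory at the same precision, which is one reason a right-sized model can be cheaper to serve.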

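What stages make up the AI model selection framework?

- The framework has six stages: 1) clearly articulate the use case; 2) list the available model options; 3) identify each model's size, performance, cost, risk, and deployment method; 4) evaluate those model characteristics against the specific use case; 5) run tests; 6) choose the option that provides the most value.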
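
The final stage, choosing the option that provides the most value, can be sketched as a weighted score over the characteristics gathered in the earlier stages. This is only one possible way to combine them; the video does not prescribe a formula, and the class, field names, weights, and candidate numbers below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float     # 0..1, higher is better (from your own tests)
    reliability: float  # 0..1, higher is better
    speed: float        # 0..1, higher is faster (normalized)
    cost: float         # 0..1, higher is more expensive (normalized)


def value_score(candidate, weights):
    """Weighted benefits minus weighted cost (an assumed scoring rule)."""
    return (
        weights["accuracy"] * candidate.accuracy
        + weights["reliability"] * candidate.reliability
        + weights["speed"] * candidate.speed
        - weights["cost"] * candidate.cost
    )


def choose_model(candidates, weights):
    """Return the candidate with the highest value score."""
    return max(candidates, key=lambda c: value_score(c, weights))


if __name__ == "__main__":
    # Made-up candidates and weights, for illustration only.
    candidates = [
        Candidate("large-model", accuracy=0.9, reliability=0.8, speed=0.3, cost=0.9),
        Candidate("small-model", accuracy=0.8, reliability=0.8, speed=0.9, cost=0.2),
    ]
    weights = {"accuracy": 0.4, "reliability": 0.2, "speed": 0.2, "cost": 0.2}
    print(choose_model(candidates, weights).name)
```

The weights encode what the use case cares about, so the same candidates can rank differently for a latency-sensitive chatbot than for an offline batch job.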

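What factors should you consider when evaluating a foundation model?

- Consider the model's accuracy, its reliability (consistency, explainability, and trustworthiness), its ability to avoid toxic content such as hate speech, and how quickly it responds to a submitted prompt.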
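
Of these factors, consistency is simple to probe: send the same prompt several times and see how often the answers agree. The sketch below assumes a hypothetical `generate` callable that wraps whichever model is under test; it is not tied to any particular library, and exact string agreement is a deliberately crude stand-in for a real similarity measure.

```python
from collections import Counter


def consistency_rate(generate, prompt, runs=5):
    """Fraction of runs that return the single most common output.

    `generate` is a hypothetical callable: prompt text in, output text out.
    Outputs are compared after trimming whitespace and lowercasing.
    """
    outputs = [generate(prompt).strip().lower() for _ in range(runs)]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / runs


if __name__ == "__main__":
    # A stand-in model that always answers the same way.
    print(consistency_rate(lambda p: "Paris", "Capital of France?"))
```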

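What does model accuracy mean?

- Accuracy is how close the generated output is to the desired output. It can be measured objectively and repeatably by choosing evaluation metrics relevant to the use case.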
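
As an illustration of "objective and repeatable", here are two simple text metrics: exact match and token-level F1. They are examples chosen for this sketch, not metrics named in the video, and the right metric depends on the use case (a summarization task would call for something different from a question-answering task).

```python
from collections import Counter


def normalize(text):
    """Lowercase and split on whitespace."""
    return text.lower().split()


def exact_match(prediction, reference):
    """1.0 if the normalized texts are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall."""
    pred, ref = normalize(prediction), normalize(reference)
    if not pred or not ref:
        return float(pred == ref)
    # Multiset intersection counts each shared token at most
    # as often as it appears in both texts.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(exact_match("The cat", "the cat"))
    print(token_f1("the cat", "the cat sat"))
```

Because both functions are deterministic, running them over the same outputs always yields the same scores, which is what makes a comparison between models repeatable.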

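Why does a foundation model's pretraining matter for a specific use case?

- Pretrained foundation models can be fine-tuned for specific use cases. If a model was pretrained on a domain close to our use case, it may handle our prompts better, letting us get the desired results with zero-shot prompting rather than having to supply multiple examples.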
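
The difference between zero-shot and few-shot prompting is easiest to see in the prompt text itself. The sketch below builds both kinds of prompt as plain strings; the `Input:`/`Output:` layout and the function names are assumptions made for illustration, not a format required by any model.

```python
def zero_shot_prompt(instruction, text):
    """Instruction plus the input, with no worked examples."""
    return f"{instruction}\n\nInput: {text}\nOutput:"


def few_shot_prompt(instruction, examples, text):
    """Instruction, then (input, output) example pairs, then the input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {text}\nOutput:"


if __name__ == "__main__":
    instruction = "Classify the sentiment as positive or negative."
    examples = [("I loved it.", "positive"), ("Waste of money.", "negative")]
    print(zero_shot_prompt(instruction, "Great battery life."))
    print("---")
    print(few_shot_prompt(instruction, examples, "Great battery life."))
```

A model that already does well on the zero-shot version saves the effort of curating examples and keeps prompts shorter.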

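How does the deployment method affect model choice?

- Deployment affects the choice because different environments, such as public cloud or private cloud, come with different cost, control, and security considerations. For example, choosing an open-source model and running inference on a public cloud may cost less, while a private deployment offers more control and security at a higher cost.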
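
The cost side of this comparison can be roughed out with a break-even calculation. The sketch assumes a simplified pricing model that is not from the video: public-cloud inference billed per 1,000 tokens, and private deployment treated as a fixed monthly cost. All figures are placeholders, not real prices.

```python
def pay_per_use_cost(tokens_per_month, price_per_1k_tokens):
    """Monthly cost when inference is billed per 1,000 tokens."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def break_even_tokens(fixed_monthly_cost, price_per_1k_tokens):
    """Monthly token volume at which a fixed-cost deployment
    costs the same as pay-per-use."""
    return fixed_monthly_cost / price_per_1k_tokens * 1000


if __name__ == "__main__":
    # Placeholder numbers, for illustration only.
    print(pay_per_use_cost(2_000_000, price_per_1k_tokens=0.5))
    print(break_even_tokens(5000, price_per_1k_tokens=0.5))
```

Below the break-even volume, pay-per-use is cheaper under these assumptions; control and security requirements may still justify the private option regardless of cost.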

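What is the multi-model approach?

- The multi-model approach means choosing different foundation models for different AI use cases in order to find the best pairing of model and use case. It recognizes that models and use cases differ in their requirements and strengths.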
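
In code, a multi-model setup can be as simple as a lookup from use case to model. The sketch below is a minimal illustration; the use-case labels and model names are invented placeholders, and a real system would fill the table from its own evaluation results.

```python
# Hypothetical mapping from use case to the model that tested best for it.
MODEL_BY_USE_CASE = {
    "summarization": "small-general-model",
    "code-generation": "code-tuned-model",
}

# Fallback for use cases that have not been evaluated yet (an assumption).
DEFAULT_MODEL = "large-general-model"


def pick_model(use_case):
    """Return the model assigned to a use case, or the default."""
    return MODEL_BY_USE_CASE.get(use_case, DEFAULT_MODEL)


if __name__ == "__main__":
    for use_case in ("summarization", "code-generation", "translation"):
        print(use_case, "->", pick_model(use_case))
```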

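In practice, how do you test and evaluate a model's performance?

- Run the model on prompts specific to your use case, then assess the quality and performance of its output against predefined evaluation metrics and performance indicators.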
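
A small harness makes this kind of testing repeatable across candidate models. The sketch below assumes two hypothetical callables: `generate`, which wraps the model being tested, and `metric`, which scores an output against the expected answer. It records both a quality score and latency for each test case.

```python
import time
from statistics import mean


def evaluate(generate, test_cases, metric):
    """Run each (prompt, expected) pair through the model and
    report the mean metric score and mean latency in seconds."""
    scores, latencies = [], []
    for prompt, expected in test_cases:
        start = time.perf_counter()
        output = generate(prompt)
        latencies.append(time.perf_counter() - start)
        scores.append(metric(output, expected))
    return {"mean_score": mean(scores), "mean_latency_s": mean(latencies)}


if __name__ == "__main__":
    # Stand-in model and metric, for illustration only.
    fake_model = lambda prompt: prompt.upper()
    exact = lambda output, expected: float(output == expected)
    cases = [("a", "A"), ("b", "X")]
    print(evaluate(fake_model, cases, exact))
```

Running the same test cases and metric against every candidate keeps the comparison fair.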

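How do you handle bias and risk when choosing a foundation model?

- Identify and reduce bias and risk by carefully examining the model's training data and outputs and by testing thoroughly. Choosing models that offer transparency and traceability also helps build trust and lower risk.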

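What trade-offs exist between model size and performance?

- Larger models may deliver higher accuracy, but they can also mean higher compute cost and longer response times. Choosing a model means finding the best balance among performance, speed, and cost.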
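
One way to narrow the field before looking for that balance is to discard any model that another model beats or matches on every dimension, leaving the so-called Pareto front. This is a technique brought in for illustration, not one the video describes, and the dictionary keys and numbers are hypothetical.

```python
def dominates(a, b):
    """True if model a is at least as good as b on accuracy, latency,
    and cost, and strictly better on at least one of them."""
    at_least_as_good = (
        a["accuracy"] >= b["accuracy"]
        and a["latency_s"] <= b["latency_s"]
        and a["cost"] <= b["cost"]
    )
    strictly_better = (
        a["accuracy"] > b["accuracy"]
        or a["latency_s"] < b["latency_s"]
        or a["cost"] < b["cost"]
    )
    return at_least_as_good and strictly_better


def pareto_front(models):
    """Keep only the models that no other model dominates."""
    return [
        m for m in models
        if not any(dominates(other, m) for other in models if other is not m)
    ]


if __name__ == "__main__":
    # Made-up measurements, for illustration only.
    models = [
        {"name": "large", "accuracy": 0.90, "latency_s": 2.0, "cost": 10},
        {"name": "medium", "accuracy": 0.85, "latency_s": 1.0, "cost": 4},
        {"name": "weak", "accuracy": 0.80, "latency_s": 1.5, "cost": 5},
    ]
    print([m["name"] for m in pareto_front(models)])
```

The models that remain each represent a genuine trade-off, so the final pick among them comes down to the priorities of the use case.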

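Why is it important to consider additional benefits when evaluating a model?

- Additional benefits, such as lower latency and greater transparency into the model's inputs and outputs, give a fuller picture of a model's value and help identify the best fit for a specific use case.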

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

独立站建站:Wordpress vs Shopify?哪个更适合电商?详细对比 & 选择建议!

Pairwise Evaluation | LangSmith Evaluations - Part 17

New Salomon QUEST 4 GTX Or Salomon X ULTRA 4 MID GTX - Which Is Better?? (2021 Updates)

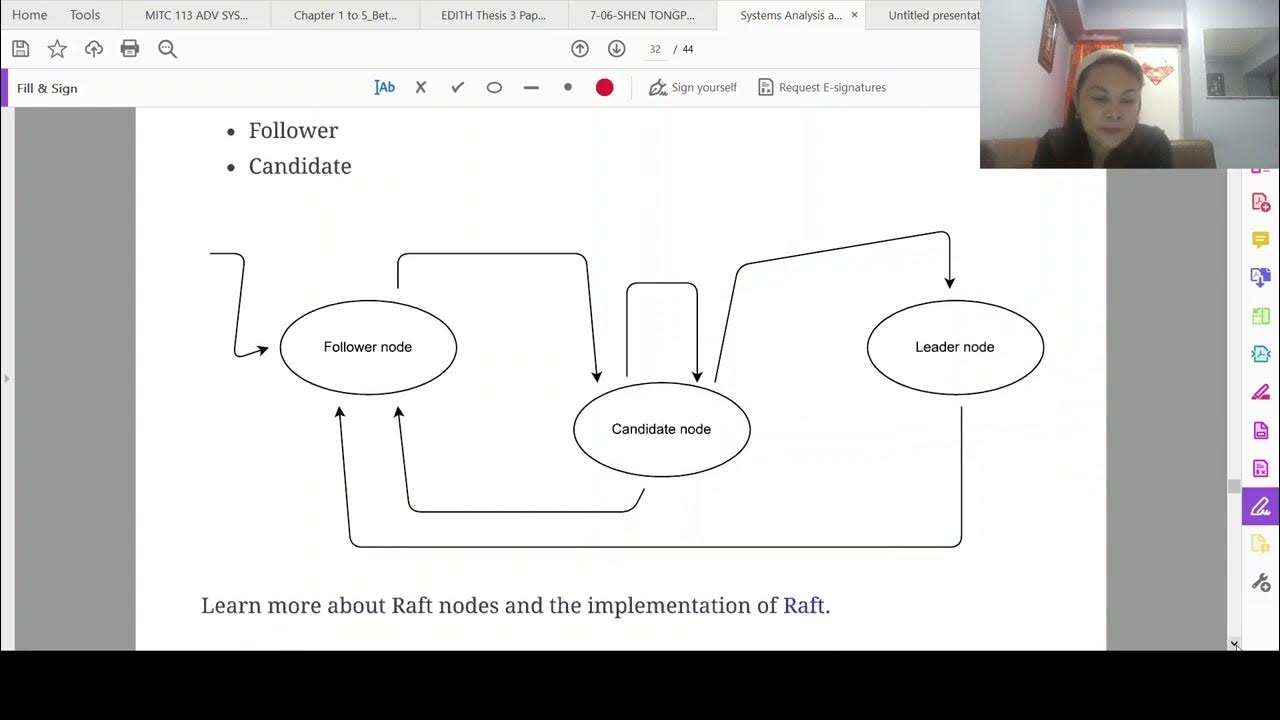

Distributed System Design

免費生成❗️AI攝影,超逼真數字人|攝影師還需要嗎|全網最簡單上手教程|AI Imaginative avatars|AI image generator|HyperBooth AI

Understand DSPy: Programming AI Pipelines

5.0 / 5 (0 votes)