Bruce Schneier: AI and Trust

Summary

TL;DR: Bruce Schneier explores the crucial role of trust in society, distinguishing between interpersonal trust, based on human connections, and social trust, enforced by laws and technology. He delves into the transformation of societal trust mechanisms with the advent of AI, highlighting the potential risks of AI systems acting as 'double agents' for corporate interests. Schneier argues for the necessity of government intervention to ensure AI transparency, safety, and accountability, advocating for public AI models to counterbalance corporate control. His analysis calls for regulatory measures to foster trustworthy AI, emphasizing the government's role in sustaining social trust in the AI era.

Takeaways

- 💬 Interpersonal and social trust are fundamental to society, with mechanisms in place to encourage trustworthy behavior.

- 👥 Bruce Schneier's book 'Liars and Outliers' discusses four systems for enabling trust: innate morals, reputation, laws, and security technologies.

- 📲 The advancement of technology, exemplified by platforms like Uber, has transformed traditional trust mechanisms, allowing trust among strangers based on systems rather than personal relationships.

- 🛡 Laws and technologies scale better than personal morals and reputation, enabling more complex and larger societies by fostering social trust.

- 💼 Corporate and governmental systems operate on social trust, relying on predictability and reliability rather than personal connections.

- 💻 AI presents a unique challenge in trust, blending interpersonal and social trust, leading to potential misunderstandings about its role and intentions.

- 🪐 AI's relational and intimate nature could lead to it being mistakenly regarded as a friend rather than a service, obscuring the motivations of its corporate creators.

- 🛠 It is crucial to develop trustworthy AI through transparency, understanding of training and biases, and clear legal frameworks to ensure its accountability.

- 🌐 Bruce Schneier advocates for public AI models developed by academia, nonprofit groups, or governments to serve the public interest and provide a counterbalance to corporate-owned AI.

- 📚 Governments have a key role in creating social trust and must actively regulate AI and its corporate developers to ensure a society where AI serves as a trustworthy service rather than a manipulative friend.

Q & A

What are the four systems mentioned in the transcript that enable trust in society?

- The four systems mentioned for enabling trust in society are innate morals, concern about reputation, the laws we live under, and security technologies.

How do laws and security technologies differ from morals and reputation in terms of trust?

- Laws and security technologies are described as more formal and scalable systems that enable cooperation among strangers and complex societal structures, while morals and reputation are person-to-person, based on human connection and interpersonal trust.

What example is given to illustrate how technology has changed trust in a professional context?

- The transcript mentions Uber as an example, highlighting how technology and rules have made rides safer and built trust between drivers and passengers, even though they are strangers.

What is the critical point about social trust and its scalability?

- The critical point about social trust is that it scales better than interpersonal trust, enabling transactions and interactions without the need for personal relationships, such as obtaining loans algorithmically or trusting corporate systems for food safety.

How do the transcript's views on AI relate to existential risks and corporate interests?

- The transcript suggests that fears of AI are often related to its potential for uncontrollable behavior and alignment with capitalism's profit motives. It highlights concerns about AI being used by corporations to maximize profits, potentially at the expense of individual trust and privacy.

Why are corporations likened to slow AIs, and what implications does this have?

- Corporations are likened to slow AIs because they are profit-maximizing machines, suggesting that their actions are driven by profit goals rather than human-like interests or ethics. This comparison implies that future AI technologies controlled by corporations could prioritize corporate interests over individual well-being.

What concerns are raised about the relational and intimate nature of future AI systems?

- The transcript raises concerns that future AI systems will be more relational and intimate, making it easier for them to influence users under the guise of personalized assistance, while potentially hiding corporate agendas and biases.

What does the transcript propose as a solution to ensure trustworthy AI?

- The transcript proposes the development of public AI models, transparency laws, regulations on AI and robotic safety, and restrictions on corporations behind AI to ensure that AI systems are trustworthy, their biases and training understood, and their behavior predictable.

What role does government play in establishing social trust in the context of AI, according to the transcript?

- According to the transcript, government plays a crucial role in establishing social trust by regulating AI and corporations, ensuring transparency, safety, and accountability in AI systems to protect societal interests and individual rights.

What distinction is made between 'corporate models' and 'public models' of AI in the transcript?

- The distinction is that corporate models are owned and operated by private entities for profit, while public models are proposed to be built by the public for the public, ensuring universal access, political accountability, and a foundation for free-market innovation in AI.

How to Build Trust on Your Virtual Team

TEDxMaastricht - Simon Sinek - "First why and then trust"

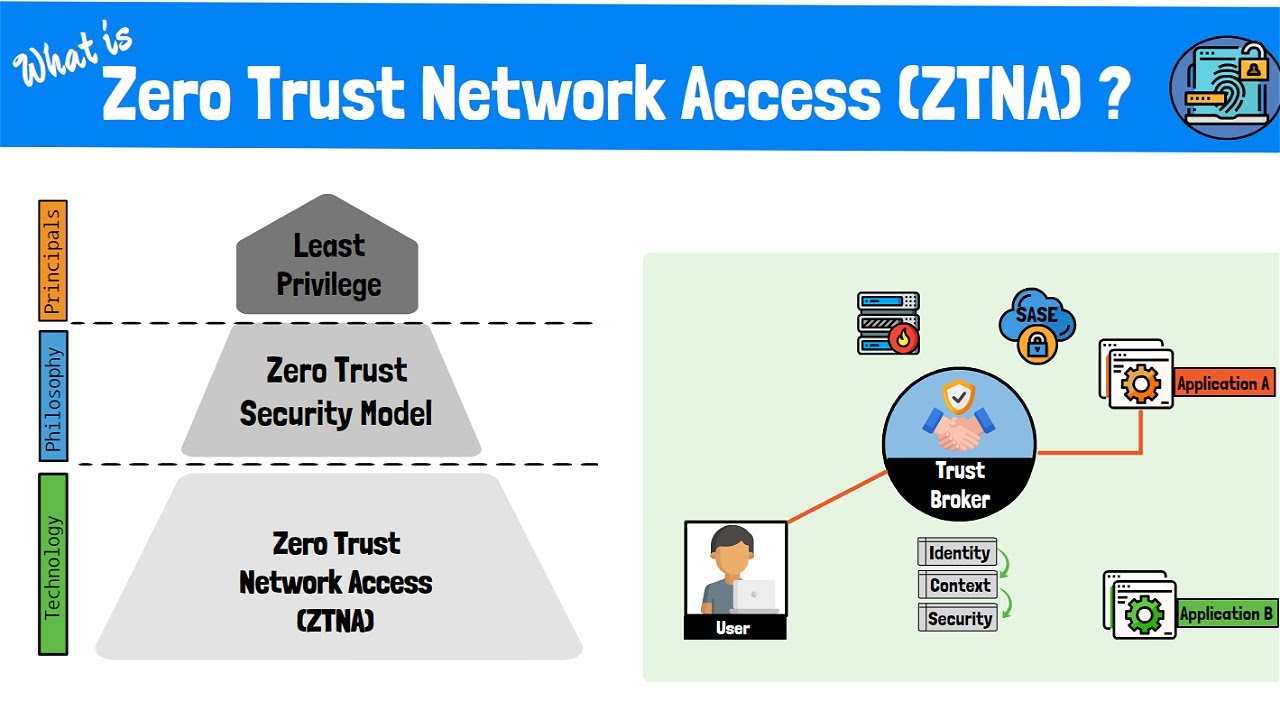

What is Zero Trust Network Access (ZTNA)? The Zero Trust Model, Framework and Technologies Explained

Paul Zak: Trust, morality - and oxytocin

Buildings that blend nature and city | Jeanne Gang

Structural Holes

5.0 / 5 (0 votes)