Networking 101 - Load Balancers

Summary

TLDR: The video script explains the concept and utility of load balancers in managing web traffic and ensuring high availability. It describes how a single server's finite resources can be insufficient, leading to the need for load balancing across multiple servers to handle increased traffic and maintain uptime. The script also touches on load balancer strategies like round-robin and least connections, and the importance of redundancy with multiple load balancers.

Takeaways

- 🌐 A load balancer is a device or software that distributes network traffic across multiple servers to ensure no single server bears too much load.

- 💡 Load balancers help prevent server downtime by allowing traffic to be rerouted to other servers if one server fails or requires maintenance.

- 🔁 They can improve website performance by spreading the load, thus allowing more connections to be handled by utilizing the resources of multiple servers.

- 🛠️ Load balancers enable maintenance and updates to be performed on servers without affecting the availability of the website or service.

- 🔄 Round-robin is a simple load balancing method where requests are distributed in a sequential manner across servers.

- 📉 Least connections is a more advanced method where the load balancer sends traffic to the server with the fewest current connections.

- 💻 Least loaded is an intelligent method where the load balancer sends traffic to the server with the most available resources, like CPU and memory.

- 🔀 Servers can be added to or removed from the pool behind a load balancer as demand changes, so capacity tracks the traffic actually being served.

- 🔒 Typically, load balancers are deployed in pairs for redundancy, ensuring that if one fails, the other can take over without service interruption.

- 🔧 They are crucial for high availability and scalability in web services, allowing businesses to grow their capacity and maintain uptime.

Q & A

What is a load balancer?

-A load balancer is a piece of hardware or software that distributes network traffic across multiple servers to ensure no single server becomes overwhelmed, thus improving response times and system availability.

Why is it important to have a load balancer?

-Load balancers are important because they spread traffic across servers whose individual resources are finite, reduce downtime, and provide redundancy, so increased traffic or server maintenance does not affect end-users.

What are the limitations of hosting a website on a single server?

-Hosting a website on a single server has limitations such as finite processing power, memory, disk space, and network connections, which can lead to bottlenecks and potential downtime.

How does a load balancer help in handling more connections?

-A load balancer helps in handling more connections by distributing the load across multiple servers, allowing the system to utilize the combined resources of all servers, thus increasing the capacity to serve more users.

What is the benefit of having multiple servers behind a load balancer?

-Having multiple servers behind a load balancer allows for redundancy and fault tolerance. If one server fails, the load balancer can redirect traffic to the remaining operational servers, ensuring continuous service.
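
The failover behaviour described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the class name `HealthAwarePool`, the server names, and the `mark_down`/`mark_up` methods are all hypothetical, and a real load balancer would decide health from periodic probes rather than manual calls.

```python
class HealthAwarePool:
    """Rotates through servers, skipping any that are marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server to try

    def mark_down(self, name):
        # Hypothetical hook: a real balancer would call this after failed probes.
        self.healthy.discard(name)

    def mark_up(self, name):
        if name in self.servers:
            self.healthy.add(name)

    def pick(self):
        # Try each server at most once per call, in rotation order.
        for _ in range(len(self.servers)):
            candidate = self.servers[self._next % len(self.servers)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


pool = HealthAwarePool(["web1", "web2", "web3"])
pool.mark_down("web2")  # simulate a failed server
picks = [pool.pick() for _ in range(4)]
print(picks)  # web2 never appears while it is marked down
```

Once `mark_up("web2")` is called, the server rejoins the rotation without any change on the client side.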

How does a load balancer facilitate maintenance without downtime?

-A load balancer facilitates maintenance by allowing administrators to take a server offline for maintenance without affecting the overall service. Traffic is simply redirected to other servers in the pool.
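
One common way to take a server out for maintenance is to "drain" it: stop sending it new connections and wait for existing ones to finish. The summary does not say how the video handles this, so the sketch below is an assumption, and `DrainablePool` and its method names are hypothetical.

```python
class DrainablePool:
    """Tracks active connections and lets a server be drained before maintenance."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}  # open connections per server
        self.draining = set()

    def open_connection(self):
        # Only servers that are not draining may receive new connections.
        candidates = [n for n in self.active if n not in self.draining]
        if not candidates:
            raise RuntimeError("every server is draining")
        name = min(candidates, key=self.active.get)
        self.active[name] += 1
        return name

    def close_connection(self, name):
        self.active[name] = max(0, self.active[name] - 1)

    def drain(self, name):
        self.draining.add(name)

    def safe_to_stop(self, name):
        # Safe once the server is draining and has no connections left.
        return name in self.draining and self.active[name] == 0


pool = DrainablePool(["web1", "web2"])
first = pool.open_connection()        # goes to web1
pool.drain("web1")                    # maintenance window begins
before = pool.safe_to_stop("web1")    # False: one connection still open
second = pool.open_connection()       # new traffic goes to web2
pool.close_connection(first)
after = pool.safe_to_stop("web1")     # True: web1 can be taken offline
```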

What is round-robin load balancing?

-Round-robin load balancing is a method where the load balancer distributes traffic sequentially across servers in a circular order, so each server receives roughly the same number of requests regardless of how busy it currently is.
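
A minimal Python sketch of round-robin selection follows. The class name `RoundRobinBalancer` and the server names are illustrative assumptions; the rotation itself uses `itertools.cycle` from the standard library.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers one after another, wrapping around at the end."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def pick(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["web1", "web2", "web3"])
picks = [balancer.pick() for _ in range(5)]
print(picks)  # ['web1', 'web2', 'web3', 'web1', 'web2']
```

Note that the rotation ignores how long each request takes, which is the gap the least connections method tries to close.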

What is least connections load balancing?

-Least connections load balancing is a method where the load balancer sends traffic to the server with the fewest active connections, aiming to balance the load based on the number of connections rather than equal distribution.
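
As a rough sketch of the idea, the balancer can keep a counter of open connections per server and pick the smallest. `LeastConnectionsBalancer`, `acquire`, and `release` are hypothetical names chosen for this example; ties are broken by the order in which servers were listed.

```python
class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest open connections."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}

    def acquire(self):
        # min() returns the first server among those tied for the lowest count.
        name = min(self.active, key=self.active.get)
        self.active[name] += 1
        return name

    def release(self, name):
        # Called when a connection closes.
        if self.active[name] > 0:
            self.active[name] -= 1


lc = LeastConnectionsBalancer(["web1", "web2"])
first = lc.acquire()    # web1 (both idle, first listed wins)
second = lc.acquire()   # web2 (web1 now has one connection)
lc.release(first)       # web1's connection finishes
third = lc.acquire()    # web1 again, since it is idle
```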

What is least loaded load balancing?

-Least loaded load balancing is a method where the load balancer analyzes the resource usage of servers, such as CPU and memory, and directs traffic to the server with the most available resources to ensure optimal performance.
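
The sketch below shows one possible way to compare servers by available resources. The summary does not specify how CPU and memory are combined, so the equal weighting in `headroom` is an assumption, as are the `ServerStats` type and the sample figures; a real balancer would collect these numbers from an agent or monitoring system.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    cpu_used: float  # fraction of CPU in use, 0.0 to 1.0
    mem_used: float  # fraction of memory in use, 0.0 to 1.0


def headroom(stats, cpu_weight=0.5):
    """Weighted share of free CPU and memory (the weighting is an assumption)."""
    free_cpu = 1.0 - stats.cpu_used
    free_mem = 1.0 - stats.mem_used
    return cpu_weight * free_cpu + (1.0 - cpu_weight) * free_mem


def pick_least_loaded(all_stats):
    # The server with the most headroom receives the next request.
    return max(all_stats, key=headroom).name


snapshot = [
    ServerStats("web1", cpu_used=0.9, mem_used=0.4),
    ServerStats("web2", cpu_used=0.3, mem_used=0.5),
    ServerStats("web3", cpu_used=0.6, mem_used=0.8),
]
chosen = pick_least_loaded(snapshot)
print(chosen)  # web2
```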

Why would a company need to scale their server capacity?

-A company may need to scale their server capacity to handle increased demand, improve performance, or to ensure that their services remain available and responsive during peak traffic times.

How does having redundant load balancers contribute to system reliability?

-Having redundant load balancers contributes to system reliability by providing a backup in case one load balancer fails. This ensures that traffic can still be distributed among servers, maintaining service availability.
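
A pair of load balancers is often run as active and standby, with the standby watching for a heartbeat from the active one. The summary does not describe the mechanism, so the following is only an illustrative sketch: `StandbyMonitor`, the timeout value, and the explicit `now` timestamps are assumptions made to keep the example deterministic.

```python
class StandbyMonitor:
    """Standby load balancer that promotes itself when heartbeats stop arriving."""

    def __init__(self, timeout_seconds, started_at):
        self.timeout = timeout_seconds
        self.last_heartbeat = started_at
        self.role = "standby"

    def heartbeat(self, now):
        # Called whenever a heartbeat from the active balancer is received.
        self.last_heartbeat = now

    def check(self, now):
        # Promote to active if the peer has been silent for longer than the timeout.
        if self.role == "standby" and now - self.last_heartbeat > self.timeout:
            self.role = "active"
        return self.role


monitor = StandbyMonitor(timeout_seconds=3.0, started_at=0.0)
monitor.heartbeat(now=1.0)
role_early = monitor.check(now=2.0)  # "standby": heartbeat was 1 second ago
role_late = monitor.check(now=5.0)   # "active": 4 seconds of silence exceeds 3
```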

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

What is a Load Balancer?

Load balancing options

API GATEWAY and Microservices Architecture | How API Gateway act as a Single Entry Point?

Proxy vs Reverse Proxy vs Load Balancer | Simply Explained

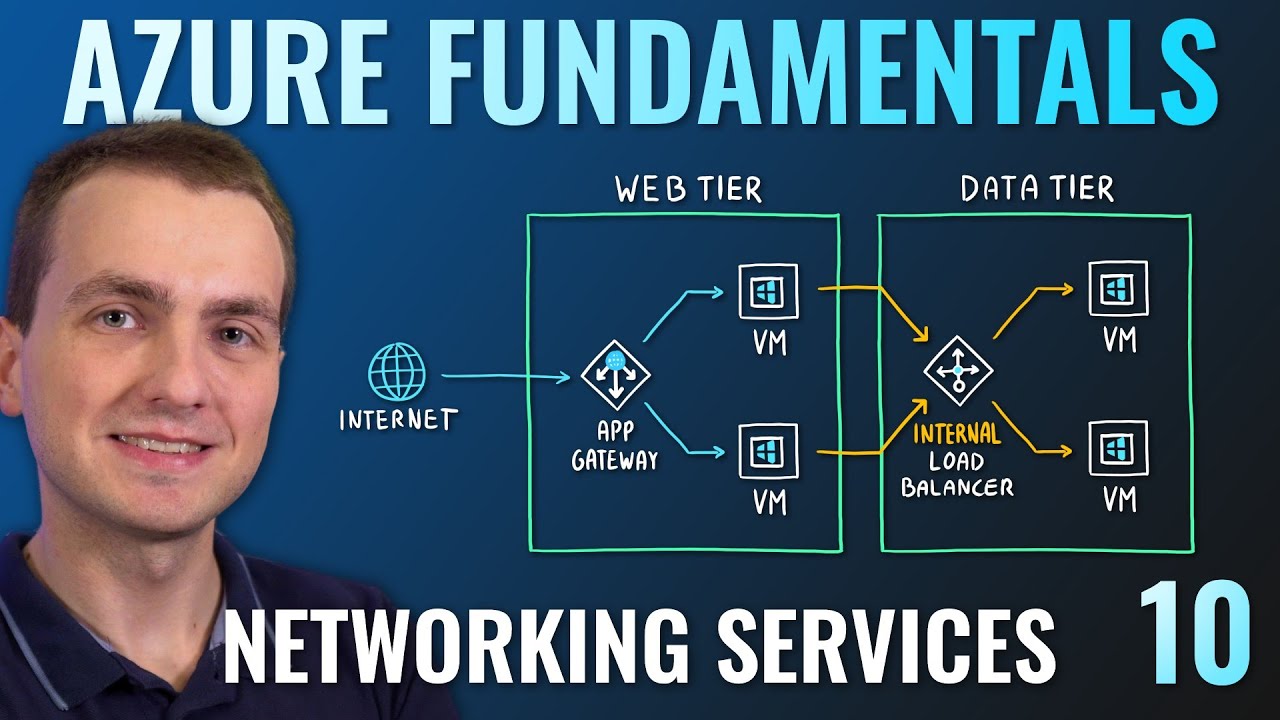

AZ-900 Episode 10 | Networking Services | Virtual Network, VPN Gateway, CDN, Load Balancer, App GW

NGINX Tutorial - What is Nginx

5.0 / 5 (0 votes)