The First AI That Can Analyze Video (For FREE)

Summary

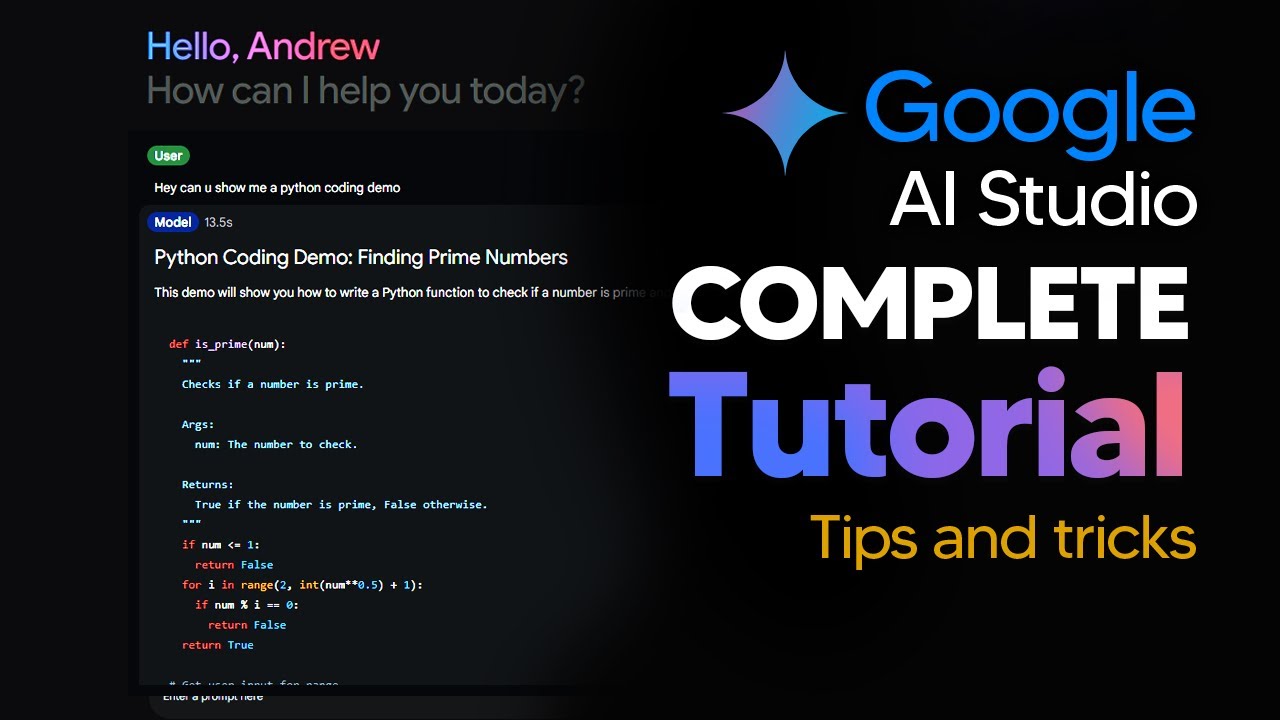

TLDR: Google's AI Studio has recently become available to the public, introducing the Gemini 1.5 Pro model, notable for its 1 million tokens of context. The video explores the studio's unique features and use cases, emphasizing its appeal beyond developers. Comparing it with other models and platforms, the video highlights the studio's multimodal capabilities, including video analysis, and its advanced settings for customization. The script illustrates how Gemini 1.5 Pro enables in-depth interaction with long documents and multimedia, and how to apply that to creative and research work, despite restrictions in Europe.

Takeaways

- 🌟 Google's AI Studio has left early access, featuring unique capabilities including the Gemini 1.5 Pro model, which is not available in Europe.

- 🚀 The Gemini 1.5 Pro model offers an unprecedented 1 million tokens of context, vastly surpassing the capacity of previous models.

- 💻 Despite being a developer interface, Google's AI Studio is accessible and useful even for non-developers, offering features like quick model switching and temperature settings.

- 🔍 Advanced settings in the studio allow for detailed control over the AI's behavior, addressing common concerns about bias and moderation.

- 🎥 Unique to this platform is the ability to upload and analyze video content, integrating both visual and audio data.

- 👩‍💻 The platform supports various prompt types, including chat, free form, and structured, facilitating a wide range of user interactions.

- 🛠️ Structured prompts enable multi-shot (few-shot) prompting, allowing users to steer the model toward specific outputs by supplying examples.

- 📚 With a massive context window, users can upload extensive documents or transcripts, like appliance manuals or podcast episodes, for detailed analysis.

- 🔑 The ability to fine-tune models with user-provided input-output pairs offers a tailored AI experience, enhancing the relevance of responses.

- 🌍 Accessibility is a concern, as users in Europe need a VPN to access the service, highlighting geographic limitations in AI tool availability.

Q & A

What is the significance of Google's AI Studio coming out of early access?

- Leaving early access means Google's AI Studio is now available to the general public, giving broader access to its advanced features and to powerful models such as Gemini 1.5 Pro with its 1 million tokens of context.

Why is Europe excluded from using Google's AI Studio?

- The script mentions a restriction for Europe but gives no specific reason. Possible causes include regulatory, legal, or compliance issues related to data privacy and AI governance in European jurisdictions.

What are some key features of Google's AI Studio?

- Key features include quick model switching, a temperature setting for controlling creativity, advanced features such as prompt presets, and a developer interface that allows deeper customization.

How does Gemini 1.5 Pro model compare to other models like GPT-3.5 and GPT-4?

- Gemini 1.5 Pro sits between advanced models comparable to GPT-4 and the earlier Pro model, which is comparable to GPT-3.5. Its 1 million tokens of context set it apart, giving it far more room for data processing and generation.

What unique functionality does Google's AI Studio provide concerning video input?

- Google's AI Studio allows users to upload and work with video inputs directly, a feature not available in ChatGPT, Claude, or open-source models. It can recognize both the visual and the audio components of a video.

How does the temperature setting in AI Studio affect model output?

- The temperature setting controls how much randomness goes into the model's output, which shows up as creativity. A higher temperature leads to more creative but potentially less accurate outputs (more prone to hallucinations), while a lower temperature gives more consistent, predictable outputs.
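
As a rough illustration of why temperature has this effect, the sketch below applies temperature-scaled softmax sampling, a common way language models pick the next token. It is not AI Studio's implementation; the token list and scores are invented for the example, and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-token scores, invented for illustration only.
tokens = ["cat", "dog", "zebra"]
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)   # mass concentrates on "cat"
high = softmax_with_temperature(logits, 2.0)  # mass spreads toward "zebra"
```

At the low setting nearly all of the probability lands on the top-scoring token, which is why outputs become predictable; at the high setting unlikely tokens are chosen more often.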

What are the types of prompts available in Google's AI Studio?

- Google's AI Studio offers three types of prompts: chat prompts, which are simple and straightforward; free form prompts, which include variables for dynamic use; and structured prompts, which involve multi-shot or few-shot prompting for pattern recognition.
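
To make the last two prompt types concrete, here is a minimal sketch in plain Python of a free form prompt with a variable and a structured (few-shot) prompt assembled from example pairs. The `Input:`/`Output:` layout, the function names, and the sample reviews are assumptions for illustration, not the format AI Studio uses internally.

```python
def fill_free_form(template, **variables):
    """Substitute named variables into a free form prompt template."""
    return template.format(**variables)


def build_few_shot_prompt(instruction, examples, new_input):
    """Lay out example input/output pairs, then leave the final output blank."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Free form: one template reused with different variable values.
free_form = fill_free_form("Summarize this {doc_type} in {n} bullets.",
                           doc_type="manual", n=3)

# Structured: hypothetical examples that show the model the expected pattern.
examples = [
    ("The battery died after a day.", "negative"),
    ("Setup took two minutes.", "positive"),
]
few_shot = build_few_shot_prompt(
    "Classify the sentiment of each review.",
    examples,
    "The screen is gorgeous.",
)
```

The model sees the completed pairs first and is expected to continue the pattern for the final, unanswered input.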

How can the Gemini 1.5 Pro model's 1 million tokens of context be advantageous?

- The 1 million tokens of context allow the model to process much larger documents or datasets in a single prompt, enabling tasks like summarizing extensive manuals or analyzing lengthy podcast transcripts, which is not possible with models that have lower token limits.
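
Before pasting a large document, a quick estimate can tell whether it is likely to fit. The sketch below assumes roughly four characters per token, a common rule of thumb for English text rather than the model's real tokenizer, and the amount reserved for the reply is an arbitrary placeholder.

```python
import math

CONTEXT_LIMIT_TOKENS = 1_000_000  # context size quoted for Gemini 1.5 Pro
CHARS_PER_TOKEN = 4               # assumption: rough average for English text


def estimate_tokens(text):
    """Approximate the token count from character length (heuristic only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text, reserved_for_output=8_000):
    """Check whether the text plus room for a reply stays under the limit."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_LIMIT_TOKENS


# A 400-character snippet is about 100 tokens under this heuristic.
snippet = "a" * 400
# A 5,000,000-character transcript is about 1.25 million tokens: too large.
huge_transcript = "a" * 5_000_000
```

An exact count requires the model's own tokenizer, so treat the result as a first check only.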

What is the safety setting feature in Google's AI Studio?

- The safety setting allows users to control the model's moderation level, letting them decide whether to filter or block certain outputs, giving users more control over the content generated by the model.
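
One way to picture an adjustable moderation level is a per-category threshold check, sketched below. Everything here is hypothetical: the level names, the numeric thresholds, the category names, and the scores are invented to show the idea and do not describe how AI Studio scores or blocks content.

```python
# Hypothetical levels: a response is blocked when a category's score
# reaches the threshold. Stricter levels use lower thresholds.
LEVELS = {
    "block_none": 1.01,  # above any possible score, so nothing is blocked
    "block_few": 0.9,
    "block_some": 0.6,
    "block_most": 0.3,
}
DEFAULT_LEVEL = "block_some"


def is_blocked(scores, settings):
    """Return True if any category score reaches that category's threshold."""
    for category, score in scores.items():
        level = settings.get(category, DEFAULT_LEVEL)
        if score >= LEVELS[level]:
            return True
    return False


# Hypothetical per-category scores in the range 0.0 to 1.0 for one response.
scores = {"harassment": 0.7, "dangerous_content": 0.1}

relaxed = is_blocked(scores, {"harassment": "block_few"})
strict = is_blocked(scores, {"harassment": "block_most"})
```

The same response passes under the relaxed setting and is blocked under the strict one, which is the kind of control the safety setting hands to the user.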

How does Google's AI Studio facilitate the creation and testing of prompts?

- Google's AI Studio enables users to easily create, test, and save prompts with variables and examples. It supports multimodal inputs, including video, and allows for fine-tuning models by providing input-output pairs, making it highly versatile for developing tailored AI applications.
