Few-Shot Learning (2/3): Siamese Networks

Summary

TLDR: This lecture explores Siamese networks, a type of deep learning model used for one-shot learning. It discusses two training methods: learning pairwise similarity scores and triplet loss. The first method involves creating positive and negative sample pairs to teach the network to distinguish between same and different classes. The second method uses triplets of images (anchor, positive, and negative samples) to refine feature extraction, aiming to minimize intra-class variation and maximize inter-class separation. The lecture concludes with the application of these networks for one-shot prediction, where the model must classify new samples based on a small support set.

Takeaways

- 🔍 **Siamese Networks**: The lecture introduces Siamese networks, named by analogy with Siamese twins, who are physically connected to each other. Two identical subnetworks are joined by shared weights and are used to learn similarities and differences between data samples.

- 📈 **Pairwise Similarity Training**: The first method for training Siamese networks involves learning pairwise similarity scores using positive and negative sample pairs, where positive pairs are of the same class and negative pairs are of different classes.

- 📚 **Data Preparation**: Training requires a large dataset with labeled classes, from which positive and negative pairs are drawn to teach the model about sameness and difference.

- 🐅 **Positive Samples**: Positive samples are created by randomly sampling two images from the same class, labeling them as '1' to indicate they belong to the same category.

- 🚫 **Negative Samples**: Negative samples are constructed by sampling one image from one class and another from a different class, labeling them as '0' to signify they are of different categories.

- 🧠 **Convolutional Neural Network (CNN)**: A CNN is used for feature extraction. Its convolutional layers, pooling layers, and a flatten layer transform each input image into a feature vector.

- 🔗 **Feature Vector Comparison**: The network outputs two feature vectors, h1 and h2, one per input image. They are compared by taking the element-wise absolute difference, z = |h1 - h2|, and the resulting vector z is then mapped to a similarity score.

- 📊 **Loss Function and Backpropagation**: The loss function measures the difference between the predicted similarity score and the actual label, using cross-entropy. Backpropagation is employed to update the model parameters to minimize this loss.

- 🔄 **Model Update Process**: Both the convolutional and fully connected layers of the network are updated during training, with gradients flowing from the loss function to the dense layers and then to the convolutional layers.

- 📊 **Triplet Loss Method**: An alternative training method involves triplet loss, where an anchor, a positive sample, and a negative sample are used to encourage the network to learn a feature space where intra-class distances are small and inter-class distances are large.

- 🔎 **One-Shot Prediction**: After training, the Siamese network can be used for one-shot prediction, where the model makes predictions based on a support set and a query image, identifying the class of the query by comparing it to the support set samples.

Q & A

What is the analogy behind the name 'Siamese Network'?

- The name 'Siamese Network' is an analogy to 'Siamese twins', where two individuals are physically connected. In the context of neural networks, it refers to the structure where two identical subnetworks share weights and are used to process two input samples.

How are positive samples defined in the training of a Siamese network?

- Positive samples in a Siamese network are defined as pairs of images that belong to the same class. These samples are used to teach the network to recognize similarities between items of the same category.

What role do negative samples play in training a Siamese network?

- Negative samples are pairs of images from different classes. They teach the network to tell different categories apart, so that it learns dissimilarity as well as similarity.
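The pair construction described above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code: the function name `sample_pairs`, the toy dataset of strings standing in for images, and the choice to alternate positive and negative pairs are all assumptions made for the example.

```python
import random

def sample_pairs(dataset, num_pairs, seed=0):
    """Build (image_a, image_b, label) tuples from a dict {class_name: [images]}.

    label 1 marks a positive pair (same class),
    label 0 marks a negative pair (different classes).
    """
    rng = random.Random(seed)
    classes = list(dataset)
    pairs = []
    for i in range(num_pairs):
        if i % 2 == 0:
            # Positive pair: two distinct images drawn from one class.
            cls = rng.choice(classes)
            img_a, img_b = rng.sample(dataset[cls], 2)
            pairs.append((img_a, img_b, 1))
        else:
            # Negative pair: one image from each of two different classes.
            cls_a, cls_b = rng.sample(classes, 2)
            pairs.append((rng.choice(dataset[cls_a]), rng.choice(dataset[cls_b]), 0))
    return pairs

# Toy stand-in for an image dataset: strings play the role of images.
toy_dataset = {
    "tiger": ["tiger_1", "tiger_2", "tiger_3"],
    "car": ["car_1", "car_2"],
    "elephant": ["elephant_1", "elephant_2"],
}
pairs = sample_pairs(toy_dataset, num_pairs=6)
for img_a, img_b, label in pairs:
    print(img_a, img_b, label)
```

Each class needs at least two images for the positive branch to work, and the dataset needs at least two classes for the negative branch.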

How does the convolutional neural network contribute to feature extraction in a Siamese network?

- The convolutional neural network in a Siamese network is responsible for extracting feature vectors from the input images. It processes the images through convolutional and pooling layers, and outputs feature vectors that are then used to calculate similarity scores.
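To make the convolution, pooling, and flatten pipeline concrete, here is a deliberately tiny pure-Python sketch. It assumes a single hand-picked 2x2 kernel and 5x5 grayscale images stored as nested lists; a real network would learn many kernels and stack several layers. The helper names are hypothetical. Note that both images pass through the same kernel, which is the weight sharing that defines a Siamese network.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a nested-list image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(fmap):
    """Element-wise max(0, x) activation."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling (drops a trailing odd row or column)."""
    return [
        [
            max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
            for c in range(0, len(fmap[0]) - 1, 2)
        ]
        for r in range(0, len(fmap) - 1, 2)
    ]

def flatten(fmap):
    """Turn a 2D feature map into a 1D feature vector."""
    return [v for row in fmap for v in row]

def extract_features(image, kernel):
    return flatten(max_pool2x2(relu(conv2d(image, kernel))))

# A kernel that responds to a dark-to-bright vertical edge (chosen by hand here).
shared_kernel = [[-1, 1], [-1, 1]]

image_a = [[0, 0, 1, 1, 1] for _ in range(5)]  # contains a vertical edge
image_b = [[0] * 5 for _ in range(5)]          # blank image

# The SAME kernel (shared weights) processes both inputs.
h1 = extract_features(image_a, shared_kernel)
h2 = extract_features(image_b, shared_kernel)
print(h1, h2)
```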

What is the significance of the feature vectors h1 and h2 in a Siamese network?

- The feature vectors h1 and h2 are the outputs of the convolutional neural network for the two input images. The element-wise absolute difference between them is what the network uses to compute the similarity score.
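As a small illustration of this comparison step, the sketch below computes z = |h1 - h2| for two made-up feature vectors. The values and the helper name `abs_difference` are assumptions for the example only.

```python
def abs_difference(h1, h2):
    """Element-wise absolute difference z = |h1 - h2| of two feature vectors."""
    if len(h1) != len(h2):
        raise ValueError("feature vectors must have the same length")
    return [abs(a - b) for a, b in zip(h1, h2)]

# Hypothetical feature vectors for two images.
h1 = [0.9, 0.1, 0.4]
h2 = [0.7, 0.1, 1.0]

z = abs_difference(h1, h2)
print(z)  # roughly [0.2, 0.0, 0.6], up to floating-point rounding
```

Entries of z near zero mean the two images agree on that feature; large entries mean they differ.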

Why is the output of a Siamese network a scalar value between 0 and 1?

- The output of a Siamese network is a scalar value between 0 and 1 because it represents the similarity score between two input images. A value close to 1 indicates high similarity (same class), while a value close to 0 indicates low similarity (different classes).

What is the purpose of the sigmoid activation function in the output layer of a Siamese network?

- The sigmoid activation function in the output layer of a Siamese network is used to squash the output into a value between 0 and 1, which corresponds to the probability of the two inputs being from the same class.
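The sketch below shows how a difference vector z might be turned into a score and a loss. It assumes a single dense unit with hand-picked weights and bias followed by a sigmoid, and it scores the result with binary cross-entropy against the 0/1 pair label. The weights, bias, and function names are illustrative assumptions; a trained network would learn these parameters and may use more than one dense layer.

```python
import math

def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def similarity_score(z, weights, bias):
    """One dense unit followed by a sigmoid: maps the vector z to a scalar score."""
    logit = sum(w * v for w, v in zip(weights, z)) + bias
    return sigmoid(logit)

def binary_cross_entropy(score, label, eps=1e-12):
    """Cross-entropy between a predicted score in (0, 1) and a 0/1 target label."""
    score = min(max(score, eps), 1.0 - eps)  # avoid log(0)
    return -(label * math.log(score) + (1 - label) * math.log(1.0 - score))

# Hypothetical inputs: z = |h1 - h2| plus hand-picked dense-layer parameters.
z = [0.2, 0.0, 0.6]
weights = [-2.0, -2.0, -2.0]  # negative weights: larger differences lower the score
bias = 1.0

score = similarity_score(z, weights, bias)
loss_if_same = binary_cross_entropy(score, label=1)
loss_if_different = binary_cross_entropy(score, label=0)
print(score, loss_if_same, loss_if_different)
```

With these numbers the score falls below 0.5, so the loss is smaller when the true label is 0 (different classes) than when it is 1.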

How does the triplet loss method differ from the pairwise similarity method in training a Siamese network?

- The triplet loss method trains the network on three images at a time: an anchor, a positive sample, and a negative sample. The goal is to minimize the distance between the anchor and the positive sample while maximizing the distance between the anchor and the negative sample, whereas the pairwise method trains on labeled pairs of images.
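One common way to write this objective is a hinge on the gap between the two distances. The sketch below assumes squared Euclidean distance and a margin hyperparameter; the summary above does not state which distance or margin the lecture uses, so treat both as assumptions.

```python
def squared_l2(u, v):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss: max(0, d(anchor, positive) + margin - d(anchor, negative)).

    The loss is zero once the negative is at least `margin` farther from the
    anchor than the positive is (in squared distance).
    """
    d_pos = squared_l2(anchor, positive)
    d_neg = squared_l2(anchor, negative)
    return max(0.0, d_pos + margin - d_neg)

# Hypothetical 2D feature vectors.
anchor = [0.0, 0.0]
positive = [0.1, 0.0]
far_negative = [2.0, 0.0]    # already well separated
close_negative = [0.5, 0.0]  # too close to the anchor

easy = triplet_loss(anchor, positive, far_negative)
hard = triplet_loss(anchor, positive, close_negative)
print(easy, hard)
```

Only the second triplet produces a positive loss, so only it would push the network to move the negative farther from the anchor.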

What is the concept of 'one-shot prediction' in the context of Siamese networks?

- One-shot prediction refers to the ability of a Siamese network to make predictions based on very few examples, typically just one per class. This is particularly useful in few-shot learning scenarios where the model must generalize from limited data.

How does the support set assist in one-shot prediction with a Siamese network?

- The support set provides a set of examples from known classes that the network can use for comparison when making predictions about a new, unseen query image. This allows the network to find the class most similar to the query, even if that class was not present in the training data.
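The comparison against the support set can be sketched as a simple arg-max over similarity scores. In this illustration the feature vectors are made up, and `toy_similarity` is a hypothetical stand-in for the trained network's similarity output, not the lecture's model.

```python
import math

def toy_similarity(h1, h2):
    """Stand-in for a trained Siamese network: higher means more similar, range (0, 1]."""
    return math.exp(-sum(abs(a - b) for a, b in zip(h1, h2)))

def one_shot_predict(query_features, support_set, similarity):
    """Return (label, score) of the support sample most similar to the query."""
    best_label, best_score = None, float("-inf")
    for label, features in support_set.items():
        score = similarity(query_features, features)
        if score > best_score:
            best_label, best_score = label, score
    return best_label, best_score

# One hypothetical feature vector per class: a 3-way, 1-shot support set.
support_set = {
    "squirrel": [0.9, 0.1],
    "rabbit": [0.1, 0.8],
    "hamster": [0.5, 0.5],
}
query = [0.85, 0.2]

label, score = one_shot_predict(query, support_set, toy_similarity)
print(label, round(score, 3))
```

Because prediction only compares feature vectors, new classes can be added to the support set without retraining.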

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Deep Learning(CS7015): Lec 2.1 Motivation from Biological Neurons

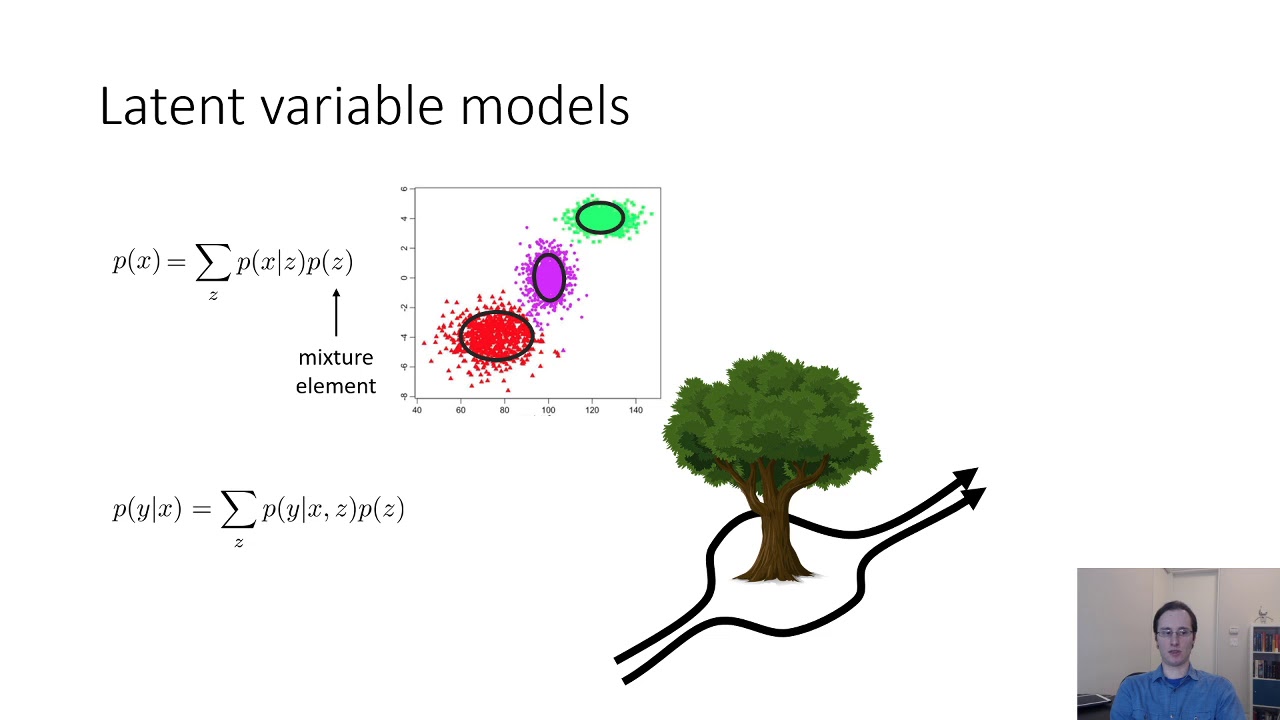

CS 285: Lecture 18, Variational Inference, Part 1

Deep Belief Nets - Ep. 7 (Deep Learning SIMPLIFIED)

AI vs ML vs DL vs Data Science - Difference Explained | Simplilearn

Few-Shot Learning (1/3): Basic Concepts

EfficientML.ai Lecture 2 - Basics of Neural Networks (MIT 6.5940, Fall 2024)

5.0 / 5 (0 votes)