CPU Pipeline - Computerphile

Summary

TLDR: This script traces the evolution of CPUs from simple addition to complex operations. It explains the CPU's basic operations through a robot analogy, detailing the fetch-decode-execute cycle and how pipelining optimizes it. The discussion covers challenges such as branches and hazards, and how techniques like branch prediction, delay slots, and conditional execution help maintain pipeline efficiency. The script also touches on modern CPU features like multiple execution units and caches, which aim to keep the pipeline full and maximize processing speed.

Takeaways

- 😀 The script discusses the evolution of CPUs, transitioning from simple addition to more complex operations.

- 🤖 It uses the analogy of a 'robot' to explain how CPUs execute instructions, emphasizing the fetch-decode-execute cycle.

- 🔄 The concept of a pipeline is introduced to explain how modern CPUs perform multiple operations simultaneously, increasing efficiency.

- 🛠️ The script highlights that CPUs have separate components for fetching, decoding, and executing instructions, and that these components sit idle much of the time unless their work is overlapped.

- ⏱️ It mentions the use of a 'metronome' to represent the CPU's clock cycle, which dictates the pace of operations.

- 🔀 The importance of branch prediction in maintaining pipeline efficiency is discussed, as branches can disrupt the flow of instruction execution.

- 💡 ARM's approach to conditionally executing instructions to avoid pipeline flushes is explained as a clever solution to handling branches.

- 🔧 The script touches on the challenges of managing data and instruction caches to prevent conflicts between different parts of the CPU.

- 🤝 It also addresses the concept of 'hazards', where data dependencies can cause delays in the pipeline if not managed correctly.

- 🎮 Lastly, the script uses the Sega Dreamcast's Hitachi SH4 processor as an example of a CPU that could execute two instructions at once, provided they could be paired, which is why instruction pairing mattered.

Q & A

What was the main topic discussed in the script?

- The main topic is the evolution of CPUs: how modern designs have moved beyond simple addition to perform complex tasks efficiently, using techniques such as pipelining and branch prediction.

What is the role of the 'robot' in the CPU analogy?

- In the analogy, the 'robot' stands for the components of the CPU that read numbers from memory, perform calculations, and store results back into memory.

What does the term 'pipeline' refer to in the context of CPUs?

- A 'pipeline' is a design technique in which instruction processing is split into stages arranged like an assembly line, so that different instructions occupy different stages at the same time and throughput improves.

What is the significance of breaking down CPU operations into fetch, decode, and execute stages?

- Splitting each instruction into fetch, decode, and execute stages lets the CPU overlap the stages of different instructions: while one instruction executes, the next is decoded and a third is fetched. This reduces idle time and increases overall throughput.
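The overlap described above can be sketched with a small cycle count. This is a minimal illustration, assuming an idealized three-stage pipeline in which every stage takes one clock cycle and nothing stalls; the function names are hypothetical and do not come from the video.

```python
STAGES = ("fetch", "decode", "execute")


def sequential_cycles(n_instructions, n_stages=len(STAGES)):
    """Cycles needed when each instruction finishes every stage before the next starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages=len(STAGES)):
    """Cycles needed when a new instruction enters the pipeline each cycle (no stalls)."""
    if n_instructions == 0:
        return 0
    # The first instruction needs n_stages cycles; each later one finishes one cycle after.
    return n_stages + (n_instructions - 1)


def timeline(n_instructions):
    """For each cycle, list which instruction occupies each stage ('-' means idle)."""
    rows = []
    for cycle in range(pipelined_cycles(n_instructions)):
        row = []
        for stage_index in range(len(STAGES)):
            instr = cycle - stage_index  # instruction that entered the pipeline stage_index cycles ago
            row.append(f"I{instr}" if 0 <= instr < n_instructions else "-")
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("sequential:", sequential_cycles(4), "cycles")
    print("pipelined: ", pipelined_cycles(4), "cycles")
    for cycle, row in enumerate(timeline(4)):
        print(cycle, dict(zip(STAGES, row)))
```

Under these assumptions, four instructions take 12 cycles one at a time but only 6 when overlapped, and from the third cycle onward all three stages are busy at once.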

Why is it necessary to have separate caches for instructions and data?

- The fetch stage reads instructions while the execute stage may be reading or writing data, so the two can contend for the same memory and cause delays. Separate instruction and data caches let each stage access what it needs without waiting for the other.

What is a 'branch' in the context of CPU instructions?

- A 'branch' is an instruction that makes the CPU jump to a different location in the program, typically based on a condition. Because the instructions already fetched behind it may no longer be the right ones, a branch can disrupt the pipeline.

What is the purpose of a delay slot in CPU instructions?

- A delay slot is the instruction position immediately after a branch. That instruction is always executed, whether or not the branch is taken, so the pipeline can be kept filled with useful work instead of stalling.
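A toy interpreter can make the delay-slot rule concrete. The sketch below is an assumption-laden illustration, not any real instruction set: the program is a list of hypothetical `(op, arg)` tuples, `"jump"` is the only branch, and a jump placed in a delay slot is simply not honoured.

```python
def run(program, delay_slot):
    """Execute a toy program and return the indices of the instructions that ran.

    With delay_slot=True, the instruction after a jump runs before the jump takes effect.
    """
    executed = []
    pc = 0
    pending_target = None  # jump target waiting for the delay-slot instruction to finish
    while pc < len(program):
        op, arg = program[pc]
        executed.append(pc)
        next_pc = pc + 1
        if pending_target is not None:
            # We just ran the delay-slot instruction; now the earlier jump takes effect.
            next_pc = pending_target
            pending_target = None
        elif op == "jump":
            if delay_slot:
                pending_target = arg  # defer the jump by one instruction
            else:
                next_pc = arg  # jump immediately
        pc = next_pc
    return executed


PROGRAM = [
    ("jump", 3),    # 0: branch to instruction 3
    ("add", "r1"),  # 1: sits in the delay slot
    ("sub", "r2"),  # 2: skipped in both modes
    ("halt", None), # 3: branch target
]

if __name__ == "__main__":
    print("no delay slot:  ", run(PROGRAM, delay_slot=False))
    print("with delay slot:", run(PROGRAM, delay_slot=True))
```

Without a delay slot, instruction 1 is skipped; with one, it runs before control reaches the target. A compiler or programmer would place an instruction there that is useful on either path.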

How does branch prediction help in maintaining CPU pipeline efficiency?

- Branch prediction lets the fetch stage guess which way a branch will go and keep fetching instructions from the predicted path. When the guess is right, the pipeline does not have to be flushed and restarted.
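The summary does not say which prediction scheme the video has in mind. As one common textbook illustration, and purely as an assumption here, the sketch below uses a two-bit saturating counter: the predictor must be wrong twice in a row before it changes its guess. The class and function names are hypothetical.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict 'not taken', 2-3 predict 'taken'."""

    def __init__(self):
        self.counter = 2  # start in the 'weakly taken' state (an arbitrary choice)

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step towards the actual outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def count_mispredictions(outcomes):
    """Feed a sequence of actual branch outcomes to the predictor and count wrong guesses."""
    predictor = TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1  # in hardware, each miss would cost a pipeline flush
        predictor.update(taken)
    return misses


if __name__ == "__main__":
    loop_branch = [True] * 9 + [False]  # a loop that repeats, then exits once
    alternating = [True, False] * 5     # a branch that flips every time
    print("loop branch misses:", count_mispredictions(loop_branch))
    print("alternating misses:", count_mispredictions(alternating))
```

A loop-closing branch is guessed wrong only at the exit, while a branch that alternates defeats this simple scheme half of the time, which is where techniques that avoid the branch altogether become attractive.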

What is the concept of 'out-of-order execution' in CPUs?

- Out-of-order execution is a technique in which the CPU executes instructions not necessarily in program order but in an order that makes the best use of its resources, running each instruction once the values it depends on are available.

Why is it important to manage data hazards in CPU operations?

- Data hazards arise when an instruction depends on the result of an earlier instruction that is still in the pipeline. They must be managed so that the dependent instruction does not execute prematurely and produce incorrect results.
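A read-after-write dependency of this kind can be spotted mechanically. The sketch below is a simplified illustration under stated assumptions: instructions are modelled as a hypothetical `Instr` tuple with one destination register and a tuple of source registers, and the `window` parameter stands in for how many following instructions can still see a stale value, which in real hardware depends on pipeline depth and forwarding.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    dest: str       # register written by the instruction
    sources: tuple  # registers read by the instruction


def raw_hazards(program, window=1):
    """Return (producer, consumer) index pairs where the consumer reads a register
    that the producer wrote at most `window` instructions earlier."""
    hazards = []
    for i, instr in enumerate(program):
        for j in range(max(0, i - window), i):
            if program[j].dest in instr.sources:
                hazards.append((j, i))
    return hazards


ADD = Instr("r1", ("r2", "r3"))    # r1 = r2 + r3
USE = Instr("r4", ("r1", "r5"))    # r4 = r1 + r5, needs the new r1
OTHER = Instr("r6", ("r7", "r8"))  # independent of both

if __name__ == "__main__":
    print("original order: ", raw_hazards([ADD, USE, OTHER]))
    print("reordered:      ", raw_hazards([ADD, OTHER, USE]))
```

In the original order the second instruction reads `r1` immediately after it is written, so the pair is flagged. Moving the independent instruction in between removes the hazard within this window, which is the kind of reordering a compiler or an out-of-order CPU can exploit.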

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

🦿 Langkah 08: Penjumlahan | Fundamental Matematika Alternatifa

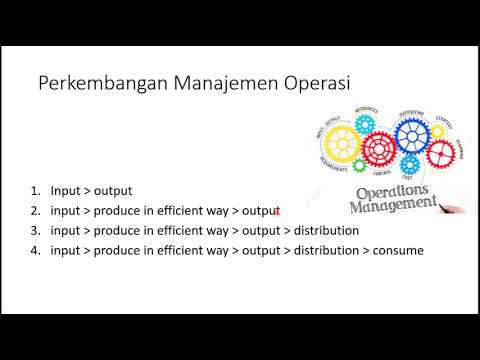

operations manajemen 1

Diketahui: (-1 4 -2 3)+(4 -5 -3 2)=(2 -1 -4 3)(2p 1 1 q+1). Nilai p+q= ...

Biological Hierarchy with Dr. K. Sathasivan

Addition and Subtraction of Small Numbers

How do computers work? CPU, ROM, RAM, address bus, data bus, control bus, address decoding.

5.0 / 5 (0 votes)