Building long context RAG with RAPTOR from scratch

Summary

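TLDR: Lance introduces RAPTOR, a new retrieval method. Long-context LLMs such as Gemini and Claude are drawing attention, but Lance points out several considerations in using them, chiefly high cost and latency. RAPTOR offers a lighter-weight, easier-to-use retrieval strategy that still takes advantage of long context. It clusters documents and summarizes them into a hierarchical document tree that consolidates information. Lance explains how the approach works and then walks through the process in practice.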

Takeaways

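- 🔍 Lance from LangChain talks about a new method called RAPTOR.

- 📚 Long-context LLMs such as Gemini and Claude are drawing attention.

- 🤖 Lance built a code assistant on a long-context LLM, and it answered questions effectively.

- ⏱️ Using long-context LLMs requires weighing latency and cost.

- 🔄 RAPTOR aims to provide a lightweight, easy-to-use retrieval strategy that takes advantage of long context while working around its limitations.

- 📈 RAPTOR combines documents into higher-level summaries and repeats the process recursively.

- 🌳 RAPTOR clusters documents, summarizes each cluster, and continues until everything is merged into a single cluster.

- 🔗 RAPTOR indexes the summaries together with the raw documents so that both can be used for retrieval.

- 🔎 RAPTOR is especially useful when information must be integrated from multiple documents, where conventional methods such as kNN reach their limits.

- 📝 Lance plans to use Anthropic's new Claude 3 model to summarize the documents and build RAPTOR.

- 📚 The RAPTOR code is open source, which makes it easy to experiment with and put to use.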

Q & A

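What is the new method, RAPTOR, that Lance talks about?

- RAPTOR is a method for working with long-context LLMs (large language models) more efficiently. By grouping documents and summarizing information hierarchically, it supports efficient retrieval even over large document sets.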

What did Lance use the LangSmith dashboard for?

- The LangSmith dashboard was used for evaluation. Across 20 questions, it was used to measure the 50th- and 99th-percentile latency of each generation and to analyze cost.
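The p50 and p99 figures mentioned above can be derived from raw per-question timings. The following is a minimal sketch, assuming a nearest-rank percentile definition (the dashboard may interpolate differently) and using made-up latencies purely for illustration. The `percentile` helper is hypothetical and not part of any LangSmith API.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("values must be non-empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank into the sorted samples
    return ordered[rank - 1]


# Hypothetical latencies in seconds for 20 questions (illustrative, not measured).
latencies = [float(s) for s in range(1, 21)]

p50 = percentile(latencies, 50)
p99 = percentile(latencies, 99)
print(f"p50={p50:.1f}s  p99={p99:.1f}s")
```

With only 20 samples, p99 under this definition is simply the slowest run, which is worth keeping in mind when reading tail-latency numbers from a small evaluation set.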

What considerations does Lance raise about using very long-context LLMs?

- Latency and cost can both increase with very long-context LLMs. There is also the question of what to do when the documents are larger than the context window.

What is the "document-level indexing" that Lance proposes?

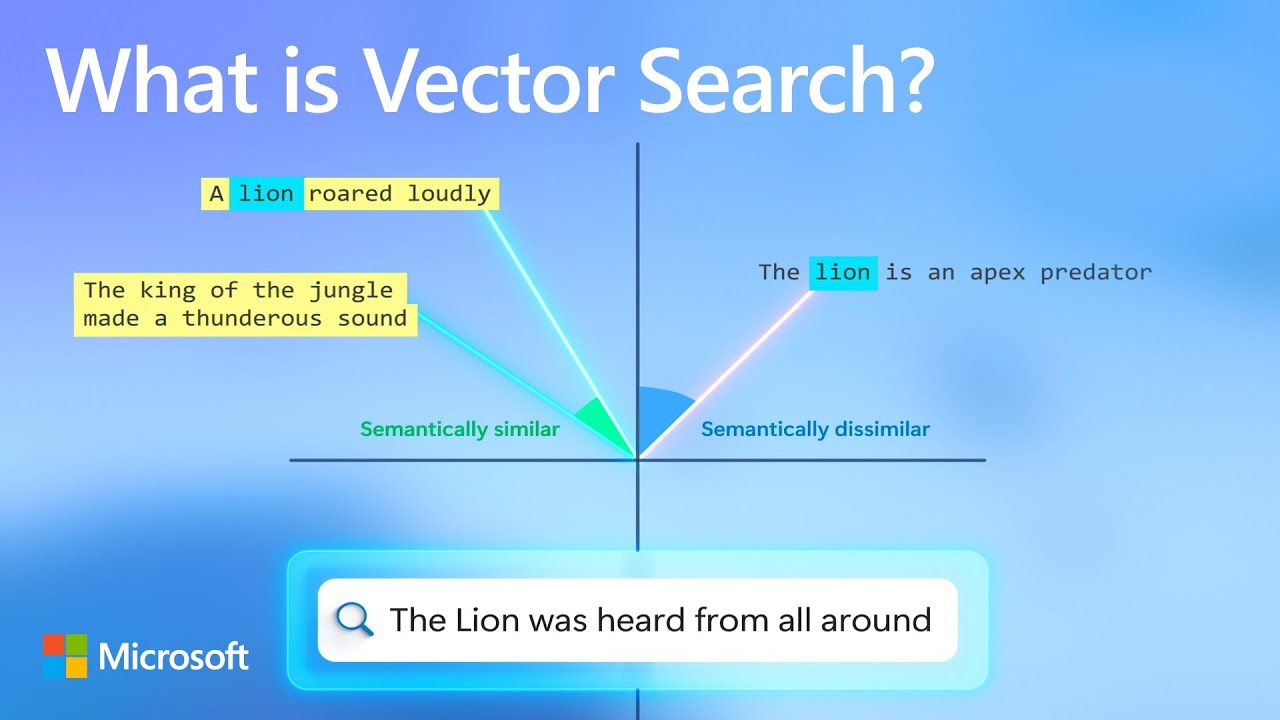

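- Document-level indexing means embedding complete documents directly and retrieving them with a method such as kNN (k-nearest-neighbor search). Because documents are not split into chunks, retrieval becomes simpler and more efficient.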
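Document-level kNN retrieval can be sketched in a few lines. This is a toy illustration only: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and `knn_search` is a brute-force scan rather than a vector store query.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_search(query, documents, k=2):
    """Return the k whole documents most similar to the query."""
    q = embed(query)
    index = [(doc, embed(doc)) for doc in documents]  # one vector per full document
    ranked = sorted(index, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


docs = [
    "chroma is a vector store for embeddings",
    "gaussian mixture models cluster points softly",
    "umap reduces the dimensionality of embeddings",
]
top = knn_search("which vector store holds embeddings", docs, k=1)
print(top)
```

The limitation discussed in the video follows directly from `k`: if an answer needs facts from more documents than `k` returns, plain kNN cannot supply them all.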

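What is the idea behind building a "document tree"?

- Building a document tree helps when the information needed is spread across multiple documents. Documents are grouped hierarchically and summarized at each level, so the information needed to answer a given question can be consolidated from several documents.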

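How does the RAPTOR approach group and summarize documents?

- RAPTOR models the distribution of documents with a Gaussian mixture model (GMM) and determines the optimal number of clusters automatically. It uses UMAP (Uniform Manifold Approximation and Projection), a dimensionality-reduction method, to improve the clustering, and analyzes the data at two scales, local and global.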
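The summary does not say how the cluster count is chosen. The RAPTOR paper uses the Bayesian Information Criterion (BIC) for this, so the sketch below shows only that selection step, assuming full-covariance GMMs have already been fitted for each candidate count. The function names and the log-likelihood values are hypothetical; in practice the fitting would come from a library such as scikit-learn's `GaussianMixture`.

```python
import math


def gmm_param_count(n_components, n_dims):
    """Free parameters of a full-covariance GMM: means + covariances + mixing weights."""
    means = n_components * n_dims
    covariances = n_components * n_dims * (n_dims + 1) // 2
    weights = n_components - 1
    return means + covariances + weights


def bic(log_likelihood, n_components, n_dims, n_samples):
    """Bayesian Information Criterion; lower is better."""
    penalty = gmm_param_count(n_components, n_dims) * math.log(n_samples)
    return penalty - 2 * log_likelihood


def pick_n_clusters(log_likelihood_by_k, n_dims, n_samples):
    """Choose the component count whose fitted GMM has the lowest BIC."""
    return min(
        log_likelihood_by_k,
        key=lambda k: bic(log_likelihood_by_k[k], k, n_dims, n_samples),
    )


# Hypothetical total log-likelihoods of GMMs fitted with k = 1..4 on
# 100 two-dimensional (dimension-reduced) embeddings. Illustrative numbers only.
log_likelihood_by_k = {1: -400.0, 2: -310.0, 3: -300.0, 4: -298.0}
best_k = pick_n_clusters(log_likelihood_by_k, n_dims=2, n_samples=100)
print(best_k)
```

The penalty term grows with the number of components, so a small gain in likelihood from adding a cluster is not enough to justify it. Reducing dimensionality first keeps `n_dims`, and therefore the covariance parameter count, small.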

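What are the advantages of the retrieval method RAPTOR provides?

- By indexing both the raw documents and the higher-level summaries, RAPTOR is flexible and robust across different types of questions. Retrieval works better both when a detailed answer is needed and when information has to be integrated from multiple documents.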

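What is Claude 3, the new Anthropic model that Lance used?

- Claude 3 is a new model developed by Anthropic with very strong performance. Lance uses it to generate summaries of the individual documents as part of applying the RAPTOR approach.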

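How was the RAPTOR approach implemented?

- The RAPTOR approach was implemented by repeating a process of embedding, clustering, and summarizing the documents. The process builds a hierarchical structure over the documents and ends when everything has been merged into a single cluster.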
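The repeat-until-one-cluster loop described above can be sketched as follows. This is not the code from the video: `build_tree` is a hypothetical helper, and the clustering and summarization steps are passed in as functions so that trivial stand-ins can replace the embedding model, GMM clustering, and LLM summarizer.

```python
def build_tree(texts, cluster_fn, summarize_fn, max_levels=5):
    """Return a list of levels: level 0 holds the raw texts, each later level holds summaries."""
    levels = [list(texts)]
    current = list(texts)
    for _ in range(max_levels):
        if len(current) <= 1:
            break  # everything has been merged into a single node
        clusters = cluster_fn(current)  # list of groups of texts
        summaries = [summarize_fn(group) for group in clusters]
        levels.append(summaries)
        current = summaries
    return levels


# Stand-ins (assumptions): pair up neighbours instead of clustering embeddings,
# and concatenate instead of calling an LLM to summarize.
def pair_up(items):
    return [items[i:i + 2] for i in range(0, len(items), 2)]


def join_texts(group):
    return " + ".join(group)


levels = build_tree(["a", "b", "c", "d"], pair_up, join_texts)
for depth, nodes in enumerate(levels):
    print(depth, nodes)
```

The `max_levels` cap is a safety choice for the sketch: a clustering function that fails to reduce the number of nodes would otherwise loop forever.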

What is the Chroma that Lance used?

- Chroma is the vector store Lance used. It indexes the documents and their summaries to support efficient retrieval.
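Indexing raw documents and summaries side by side can be illustrated without a vector store. The sketch below deliberately avoids Chroma's API: it keeps a flat list of records tagged with their tree level and ranks them with a toy word-overlap score. All names and the sample texts are hypothetical.

```python
def flatten_tree(levels):
    """Turn [[leaf texts], [level-1 summaries], ...] into flat records tagged with their level."""
    return [
        {"text": text, "level": level}
        for level, texts in enumerate(levels)
        for text in texts
    ]


def overlap(query, text):
    """Jaccard word overlap (toy stand-in for embedding similarity)."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q | t) if q | t else 0.0


def retrieve(query, records, k=2):
    """Rank leaves and summaries together and return the top k records."""
    return sorted(records, key=lambda r: overlap(query, r["text"]), reverse=True)[:k]


levels = [
    ["umap reduces embedding dimensions", "gmm clusters the reduced embeddings"],
    ["raptor clusters documents with umap and gmm then summarizes each cluster"],
]
records = flatten_tree(levels)
broad = retrieve("how does raptor use umap and gmm", records, k=1)
narrow = retrieve("reduced embeddings", records, k=1)
print(broad[0]["level"], narrow[0]["level"])
```

Because leaves and summaries compete in the same ranking, a broad question tends to surface a summary while a narrow one surfaces a raw document, which is the flexibility the answer above describes.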

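In what situations might the RAPTOR approach apply?

- The RAPTOR approach can apply to large document collections that exceed the context window and to cases that call for detailed answers. It is also effective for questions that require integrating information from multiple documents.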
