Dagster Crash Course: develop data assets in under ten minutes

Summary

TLDR: In this video, Pete takes you through a crash course in building an ETL pipeline with Dagster, a data orchestration platform. Starting from scratch, he fetches data from the GitHub API, transforms it, visualizes it in a Jupyter notebook, and uploads the notebook as a GitHub Gist. Along the way, he covers key Dagster concepts: software-defined assets, resources for managing external dependencies, testing strategies, and schedules that run pipelines automatically. By the end, you'll understand how Dagster structures data workflows and helps you build testable, production-ready ETL pipelines.

Takeaways

- 🔑 Dagster is a tool for building data pipelines and ETL (Extract, Transform, Load) workflows as a DAG (Directed Acyclic Graph) of software-defined assets.

- 📦 Dagster provides a command-line interface and UI for scaffolding projects, managing dependencies, and running pipelines.

- 🧩 Software-defined assets are Python functions that represent data assets (e.g., reports, models, databases) and can be interconnected to form a pipeline.

- 🔄 Dagster persists each asset's output through IO managers, so you can re-execute and iterate on a single pipeline step without recomputing its upstream assets.

- ⚙️ Dagster's resource system facilitates secure configuration, secret management, and test-driven development through dependency injection.

- 📅 Dagster supports scheduling pipelines to run at regular intervals, enabling automated data workflows.

- 🔬 The presenter demonstrated building an ETL pipeline to fetch GitHub repository stars, transform the data, visualize it in a Jupyter notebook, and publish the result as a GitHub Gist.

- 🧪 Test-driven development is encouraged in Dagster, with utilities for mocking external dependencies and asserting pipeline outputs.

- 📚 Extensive documentation and tutorials are available to learn more about Dagster's features and best practices.

- 🌟 The presenter encouraged viewers to explore Dagster further and to support the open-source project by starring its GitHub repository.

Q & A

What is the purpose of this video?

-The video provides a crash course on how to build an ETL (Extract, Transform, Load) pipeline using Dagster, a data orchestration platform.

What is the example pipeline demonstrated in the video?

-The example pipeline fetches GitHub stars data for the Dagster repository, transforms the data into a week-by-week count, creates a visualization in a Jupyter notebook, and uploads the notebook as a GitHub Gist.
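The week-by-week transformation can be sketched in plain Python. This is a minimal sketch, assuming the stargazer timestamps have already been fetched as `datetime` objects and that weeks start on Monday; the function names are hypothetical, and the video's own transformation may be written differently.

```python
from collections import Counter
from datetime import date, datetime, timedelta, timezone


def week_start(d: date) -> date:
    """Return the Monday of the week containing `d` (assumed week convention)."""
    return d - timedelta(days=d.weekday())


def stars_by_week(starred_at: list[datetime]) -> dict[date, int]:
    """Count stars per week, keyed by the Monday that starts each week.

    Weeks with no stars are omitted; the result is ordered by week.
    """
    counts = Counter(week_start(ts.date()) for ts in starred_at)
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    sample = [
        datetime(2022, 8, 1, 9, tzinfo=timezone.utc),   # Monday
        datetime(2022, 8, 3, 17, tzinfo=timezone.utc),  # Wednesday, same week
        datetime(2022, 8, 9, 12, tzinfo=timezone.utc),  # Tuesday, following week
    ]
    for week, n in stars_by_week(sample).items():
        print(week.isoformat(), n)
```

Keying each week by its start date keeps the result sortable and easy to plot as a time series in the notebook step.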

What is the role of software-defined assets in Dagster?

-Software-defined assets are functions that return data representing a data asset in the pipeline graph, such as a machine learning model, a report, or a database table. These assets can have dependencies on other assets, forming a data pipeline.
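In Dagster itself an asset is declared with the `@asset` decorator, and naming a parameter after another asset declares a dependency on it. The toy below mimics that idea in plain Python so the mechanics are visible; the `asset` decorator, the `ASSETS` registry, and `materialize_all` are hypothetical stand-ins, not Dagster's API.

```python
import inspect
from graphlib import TopologicalSorter
from typing import Any, Callable

# Hypothetical registry: asset name -> function that computes it.
ASSETS: dict[str, Callable[..., Any]] = {}


def asset(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register `fn` as an asset named after the function."""
    ASSETS[fn.__name__] = fn
    return fn


def materialize_all() -> dict[str, Any]:
    """Compute every registered asset in dependency order.

    Each parameter name is treated as the name of an upstream asset,
    mirroring how Dagster infers dependencies from parameter names.
    """
    graph = {name: set(inspect.signature(fn).parameters) for name, fn in ASSETS.items()}
    results: dict[str, Any] = {}
    # static_order() yields every upstream asset before its dependents.
    for name in TopologicalSorter(graph).static_order():
        kwargs = {dep: results[dep] for dep in graph[name]}
        results[name] = ASSETS[name](**kwargs)
    return results


@asset
def github_stargazers() -> list[str]:
    return ["alice", "bob", "carol"]  # stand-in for an API call


@asset
def star_count(github_stargazers: list[str]) -> int:
    return len(github_stargazers)


@asset
def report(star_count: int) -> str:
    return f"{star_count} stars"


if __name__ == "__main__":
    print(materialize_all()["report"])
```

Because dependencies come from the function signatures, the graph never has to be wired up by hand; adding a new asset only means writing a new function.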

How does Dagster handle caching and reusing computations?

-Dagster uses a system called IO managers to cache the output of computations in persistent storage like local disk or S3. This allows reusing cached data instead of recomputing it, improving efficiency and iteration speed.
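The reuse described here can be illustrated with a toy IO manager that pickles each output to a directory, roughly in the spirit of a filesystem-backed IO manager. This is a sketch under stated assumptions: `PickleIOManager` and `materialize` are hypothetical, and although the method names echo Dagster's `IOManager` (`handle_output`, `load_input`), Dagster passes context objects rather than a bare asset name.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Any, Callable, Sequence


class PickleIOManager:
    """Toy IO manager that stores each asset's output as a pickle file."""

    def __init__(self, base_dir: Path) -> None:
        self.base_dir = base_dir
        self.base_dir.mkdir(parents=True, exist_ok=True)

    def _path(self, name: str) -> Path:
        return self.base_dir / f"{name}.pickle"

    def handle_output(self, name: str, obj: Any) -> None:
        """Persist an asset's output."""
        with self._path(name).open("wb") as f:
            pickle.dump(obj, f)

    def load_input(self, name: str) -> Any:
        """Load a previously stored output for a downstream asset."""
        with self._path(name).open("rb") as f:
            return pickle.load(f)


def materialize(
    io_manager: PickleIOManager,
    name: str,
    fn: Callable[..., Any],
    upstream: Sequence[str] = (),
) -> Any:
    """Compute one asset, reading its inputs from storage instead of recomputing them."""
    inputs = [io_manager.load_input(dep) for dep in upstream]
    value = fn(*inputs)
    io_manager.handle_output(name, value)
    return value


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        io_manager = PickleIOManager(Path(tmp))
        materialize(io_manager, "stargazers", lambda: ["alice", "bob"])  # full run
        # Iterate on the downstream step alone; the stargazer list comes from disk.
        print(materialize(io_manager, "star_count", len, upstream=["stargazers"]))
```

Re-running `star_count` any number of times never calls the upstream fetch again, which is what makes iterating on a single step cheap.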

What is the purpose of the resources system in Dagster?

-The resources system in Dagster allows abstracting away external dependencies, like API clients, into configurable resources. This enables testability, secret management, and swapping in test doubles for external services.
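The core idea is dependency injection: the asset receives its client instead of constructing it. In Dagster the resources system performs the injection; the sketch below uses a plain function argument instead. `StargazerSource`, `github_stargazer_dates`, and `FakeGitHub` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Protocol


class StargazerSource(Protocol):
    """Interface the asset depends on; a real GitHub client or a fake can satisfy it."""

    def starred_at(self, repo: str) -> list[datetime]: ...


def github_stargazer_dates(
    client: StargazerSource, repo: str = "dagster-io/dagster"
) -> list[datetime]:
    """Asset-style function: the client is injected, never constructed here."""
    return sorted(client.starred_at(repo))


@dataclass
class FakeGitHub:
    """Test double that returns canned data and records which repos were requested."""

    dates: list[datetime]
    requested: list[str] = field(default_factory=list)

    def starred_at(self, repo: str) -> list[datetime]:
        self.requested.append(repo)
        return list(self.dates)


if __name__ == "__main__":
    fake = FakeGitHub([datetime(2022, 8, 3), datetime(2022, 8, 1)])
    print(github_stargazer_dates(fake))
    print(fake.requested)
```

In production a wrapper around the real GitHub API would satisfy the same interface, so the asset code does not change when the fake is swapped out.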

How does the video demonstrate secret management?

-The video shows how to move the GitHub API access token out of the source code and into an environment variable, which is then read by the GitHub API resource. This prevents secrets from being stored in the codebase.
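A minimal sketch of reading the token from the environment follows. The variable name `GITHUB_ACCESS_TOKEN` and the helper `read_github_token` are assumptions for illustration; recent Dagster versions also offer an `EnvVar` helper for wiring environment variables into resource configuration.

```python
import os
from collections.abc import Mapping
from typing import Optional

# Hypothetical variable name; use whatever name your deployment defines.
TOKEN_ENV_VAR = "GITHUB_ACCESS_TOKEN"


class MissingSecretError(RuntimeError):
    """Raised when the token is not configured."""


def read_github_token(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the GitHub token from the environment instead of source code.

    `env` defaults to os.environ; passing a dict makes the function easy to test.
    """
    env = os.environ if env is None else env
    token = env.get(TOKEN_ENV_VAR, "").strip()
    if not token:
        raise MissingSecretError(
            f"Set the {TOKEN_ENV_VAR} environment variable to a GitHub access token."
        )
    return token


if __name__ == "__main__":
    try:
        print("token loaded:", bool(read_github_token()))
    except MissingSecretError as err:
        print(err)
```

Failing loudly when the variable is missing surfaces a misconfigured deployment immediately, rather than as an opaque authentication error from the API.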

What is the role of the test demonstrated in the video?

-The test is a smoke test that verifies the happy path of the pipeline by mocking the GitHub API and asserting expected outputs from the software-defined assets. It demonstrates Dagster's testability features.
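A happy-path smoke test in that style can be sketched with `unittest.mock` from the standard library. The client calls (`get_repo`, `get_stargazers_with_dates`, `starred_at`) are assumed to follow a PyGithub-style interface, and the asset functions here are hypothetical plain functions called directly rather than through Dagster.

```python
from datetime import datetime
from typing import Any
from unittest import mock

REPO = "dagster-io/dagster"


def github_stargazers(github_api: Any) -> list[Any]:
    """Fetch stargazer records through an injected, PyGithub-style client (assumed)."""
    return list(github_api.get_repo(REPO).get_stargazers_with_dates())


def star_dates(stargazers: list[Any]) -> list[datetime]:
    """Downstream step: keep only the sorted timestamps."""
    return sorted(s.starred_at for s in stargazers)


def test_smoke() -> None:
    """Happy-path smoke test: no network, canned data, assertions on outputs."""
    early = mock.Mock(starred_at=datetime(2022, 8, 1))
    late = mock.Mock(starred_at=datetime(2022, 8, 3))
    api = mock.MagicMock()
    api.get_repo.return_value.get_stargazers_with_dates.return_value = [late, early]

    records = github_stargazers(api)

    assert star_dates(records) == [datetime(2022, 8, 1), datetime(2022, 8, 3)]
    api.get_repo.assert_called_once_with(REPO)


if __name__ == "__main__":
    test_smoke()
    print("smoke test passed")
```

The test checks both the data flowing out of the pipeline and that the client was called with the expected repository, without touching the network.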

How does Dagster enable scheduling pipelines?

-Dagster allows defining jobs and schedules within a repository. The video demonstrates adding a daily schedule to the ETL pipeline job, which can then be run automatically by Dagster's scheduler daemon.
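In Dagster a daily schedule is typically expressed as a cron string such as `"0 0 * * *"` attached to a job, and the daemon works out when each tick is due. The sketch below shows only that tick arithmetic for a once-a-day schedule, assuming UTC timestamps; `next_daily_run` is a hypothetical helper, not part of Dagster.

```python
from datetime import datetime, time, timedelta, timezone


def next_daily_run(now: datetime, at: time = time(0, 0)) -> datetime:
    """Return the next tick of a schedule that fires once a day at `at`.

    With the default `at`, this matches the cron expression "0 0 * * *".
    Assumes `now` is timezone-aware (ideally UTC, to avoid DST surprises);
    `at` is interpreted in now's timezone.
    """
    candidate = datetime.combine(now.date(), at, tzinfo=now.tzinfo)
    if candidate <= now:
        # Today's tick has already passed, so the next one is tomorrow.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    now = datetime(2022, 8, 1, 9, 30, tzinfo=timezone.utc)
    print(next_daily_run(now).isoformat())
```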

What is the role of the Dagster UI in the development process?

-The Dagster UI provides a visual interface for launching and monitoring pipeline runs, inspecting asset metadata, and managing schedules. It aids in the development and operation of Dagster pipelines.

What are some potential next steps after completing this tutorial?

-The video suggests exploring Dagster's documentation, tutorials, and guides further, as the tutorial covers only a basic introduction. There are more advanced features and best practices to learn for production-ready Dagster pipelines.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

How to build and automate your Python ETL pipeline with Airflow | Data pipeline | Python

26 DLT aka Delta Live Tables | DLT Part 1 | Streaming Tables & Materialized Views in DLT pipeline

Apache Airflow vs. Dagster

Building a Data Warehouse using Matillion ETL and Snowflake | Tutorial for beginners| Part 1

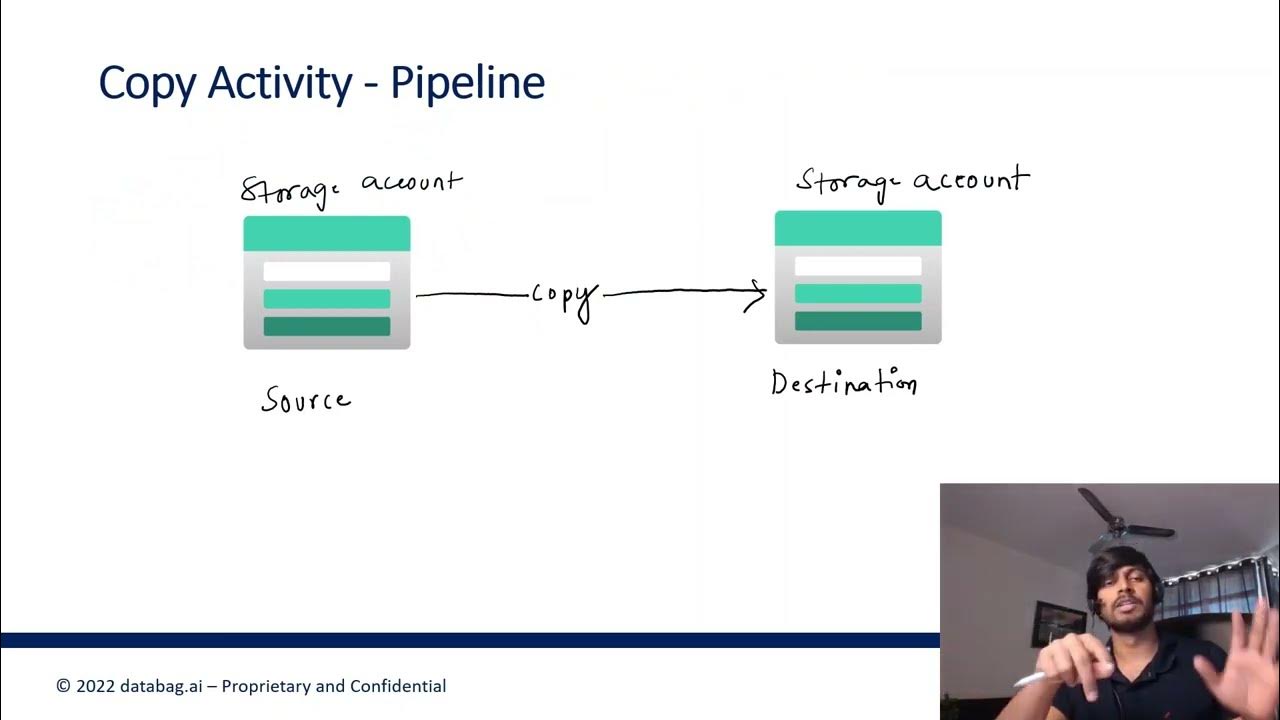

Azure Data Factory Part 3 - Creating first ADF Pipeline

How Hard Is AWS Certified Data Engineer Associate DEA-C01

5.0 / 5 (0 votes)