Where We Go From Here with OpenAI's Mira Murati

Summary

TLDR: In this discussion, OpenAI's Mira Murati shares her journey from Albania to OpenAI and describes how central math and science were to her upbringing. The conversation covers the challenges of building products on top of AI models and her path from theoretical concepts to practical engineering in aerospace and automotive work. It highlights AI safety, alignment with human values, and the potential of large language models to transform how we interact with technology. It also looks ahead to a future where AI systems could autonomously perform intellectual tasks, which raises questions about reliability and about the economic implications of AI advancements.

Takeaways

- 🌟 The speaker emphasizes the difficulty of building good products on top of AI models, suggesting that while model creation is a focus, the real challenge lies in application.

- 📚 Growing up in post-Communist Albania, the speaker received an early education heavily focused on math and sciences, which shaped her career path in mechanical engineering and AI.

- 🚀 With a background in mechanical engineering and aerospace, the speaker became interested in AI during her time at Tesla, particularly through the development of the Autopilot system.

- 🕶️ The speaker explored augmented reality and virtual reality, noting the importance of understanding the practical applications and limitations of these technologies.

- 🧠 The speaker describes shifting from theoretical knowledge to building practical applications, and stresses the value of general AI over domain-specific competence.

- 🔮 OpenAI's mission attracted the speaker because of its focus on building AGI (Artificial General Intelligence), which she sees as a fundamental and inspiring goal for technology development.

- 🤖 The speaker discusses the importance of AI safety, particularly the development of reinforcement learning from human feedback (RLHF) to align AI systems with human values.

- 🔗 The dialogue capability of AI models like ChatGPT is seen as a special form of interaction: it lets the model express uncertainty and lets users correct its errors, which contributes to AI safety.

- 🛠️ The speaker suggests that we are at an inflection point in redefining human-digital interaction, moving towards a model where AI systems act more as collaborators than tools.

- 📈 The speaker discusses scaling laws in AI, noting that there is still significant room for improvement as models scale up in data and compute.

- 💡 The future of AI is envisioned with models that encompass multiple modalities (text, images, video) for a more comprehensive understanding, akin to human perception.

Q & A

What was the educational focus in post-Communist Albania and how did it influence the speaker's interests?

-In post-Communist Albania, schooling placed a strong emphasis on math and physics, and the speaker developed a deep interest in these fields. The humanities were viewed with suspicion because it was unclear which sources of information could be trusted, so the speaker pursued math and sciences relentlessly.

How did the speaker's career evolve from mechanical engineering to working with AI?

-The speaker started as a mechanical engineer working in aerospace and later joined Tesla, where they first worked on the Model S dual-motor and then led the Model X program. This experience sparked an interest in AI applications, particularly Autopilot. That interest led them to explore AI in other domains such as augmented and virtual reality, and eventually to join OpenAI to focus on artificial general intelligence (AGI).

What was the speaker's initial attraction to OpenAI?

-The speaker was drawn to OpenAI because of its mission, which they felt mattered more than any other technology they could contribute to. They see intelligence as a core unit that affects everything. They find building it inspiring because it could elevate and increase the collective intelligence of humanity.

How does the speaker view the role of physics and math backgrounds in the field of AI today compared to 15 years ago?

-The speaker observes that many influential contributors to the AI space today have a physics or math background. This is a shift from 15 years ago, when the field was dominated by people from electrical, mechanical, and other engineering backgrounds.

What does the speaker mean by 'building an intuition' in the context of problem-solving in math and theoretical spaces?

-The speaker refers to the process of deeply engaging with a problem over an extended period, often requiring time for reflection and incubation, which leads to the development of a new idea or solution. This process builds an intuition for identifying the right problems to work on and the discipline to persevere until a solution is found.

How does the speaker perceive the current state of AI systems in terms of their capabilities and limitations?

-The speaker sees AI systems as having made significant progress, particularly in representation and understanding of concepts, but acknowledges that there are still substantial limitations, especially regarding output reliability, such as the issue of hallucinations and the need for models to express uncertainty.

What is the speaker's perspective on the future of programming and AI interaction?

-The speaker believes that we are at an inflection point where the way we interact with digital information is being redefined through AI systems. They envision a future where natural language interfaces become more prevalent, allowing for collaboration with AI models as if they were companions or co-workers.

What was the original intent behind creating Chat GPT, according to the speaker?

-ChatGPT was initially created as a way to get feedback from researchers on the dialogue modality of AI. The goal was to use this feedback to improve the alignment, safety, and reliability of more advanced models like GPT-4.

How does the speaker define AGI (Artificial General Intelligence)?

-The speaker defines AGI as a computer system capable of autonomously performing the majority of intellectual work, a level of capability that spans a wide range of tasks beyond specific domains.

What are the speaker's thoughts on the future of AI in terms of economics and the workforce?

-The speaker anticipates that AI systems will take over more tasks, allowing humans to focus on higher-level work and potentially reducing the amount of time spent working. They also suggest that AI could lead to a more powerful and accessible platform for building applications and products.

What is the speaker's view on the potential future of AI models in terms of their capabilities and roles?

-The speaker expects AI models to become incredibly powerful, able to understand and interact with the world across multiple modalities. They also stress the importance of solving the challenge of superalignment so that these models remain safe and aligned with human intentions.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

OpenAI's Mira Murati on ChatGPT and the power of curiosity | Behind the Tech with Kevin Scott

Revolutionizing customer experience - Caesars Entertainment

The Youngest Elected Official in New York City

Connecting Minds: Interview with Software AG's Sanjay Brahmawar & Sharon Doherty

Preventing Students from Joining Gangs | Jose Segura | TEDxMontgomeryBlairHS

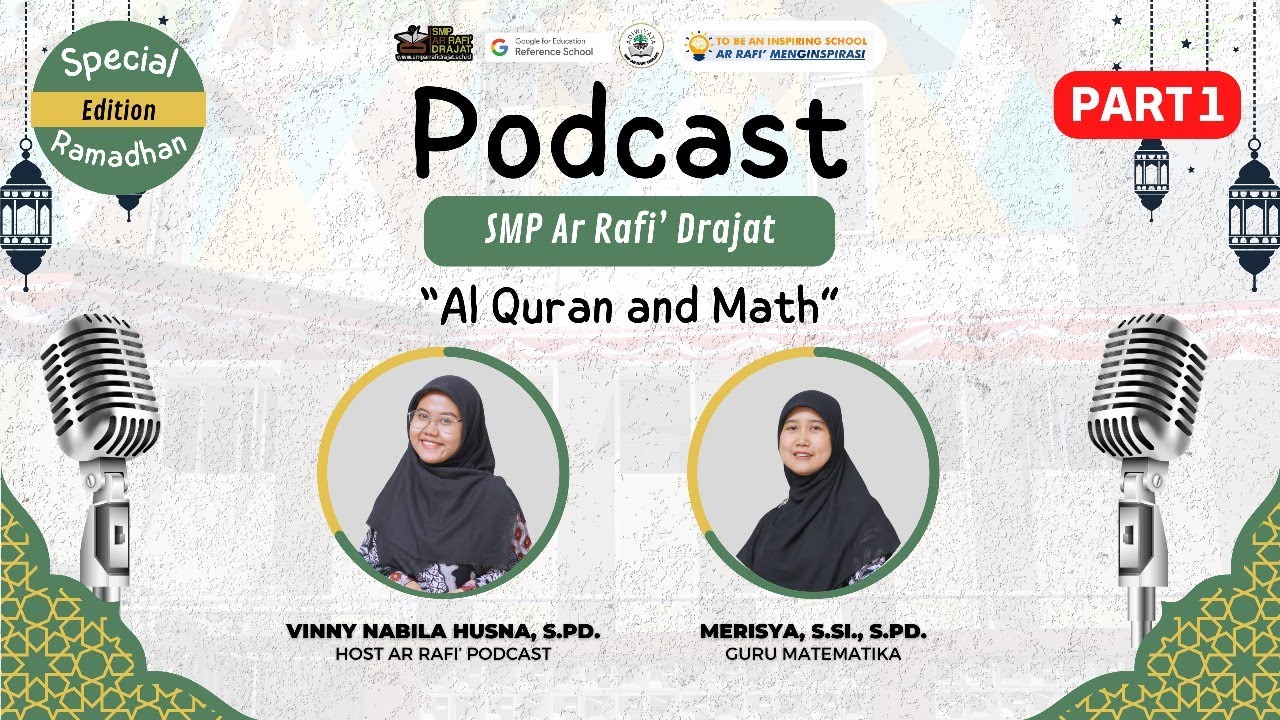

"Al-Quran and Math" With Miss Merisya, S.Si., S.Pd Part 1

5.0 / 5 (0 votes)