Memory Hierarchy & Interfacing

Summary

TLDR: This educational session delves into the concept of memory hierarchy, explaining how different memory units are ranked based on access time and size, with registers being the fastest and secondary storage the slowest. It highlights the purpose of the hierarchy in bridging the speed gap between processors and memory while keeping costs reasonable. The video also explores two methods of memory interfacing, discussing the hit and miss ratios and their impact on average memory access time, aiming to provide a clear understanding of computer organization and memory efficiency.

Takeaways

- 📚 In computing, a memory hierarchy is a ranking of memory units by chosen parameters, much as movies can be ranked by release date or rating.

- 🏰 Memory units are ranked based on access time and size, with registers being the fastest and secondary memory being the slowest but largest in capacity.

- 💻 The purpose of the memory hierarchy is to bridge the speed gap between the fast processor and the slower memory while keeping costs reasonable.

- 🔍 Memory interfacing is a part of computer organization that involves connecting memory units to the processor and I/O peripherals to feed instructions efficiently.

- ⏱️ Processor speed is measured in MIPS (Million Instructions Per Second), and the goal is for memory to supply instructions at that rate, quickly and cost-effectively.

- 🎯 In the memory hierarchy, a 'hit' occurs when the processor finds the required information in the current level of memory, and a 'miss' means it must look in the next level.

- 📈 The hit ratio of a memory level is the fraction of accesses, usually stated as a percentage, in which the processor finds the required information at that level.

- 🔢 The effective or average memory access time can be calculated using a formula that considers hit ratios and access times of different memory levels.

- 🔄 There are two ways of interfacing memory units: simultaneous connection to the processor, allowing parallel search across levels, and level-wise connection, where the processor searches level by level sequentially.

- ⏳ In simultaneous interfacing, the average access time is calculated considering the parallel search and the probabilities of hits and misses at each level.

- 📉 In level-wise interfacing, the average access time formula accounts for the sequential search, including the cumulative access times of all levels in case of a miss.

- 📚 The next session will involve solving numerical problems related to memory hierarchy to enhance understanding of the concept.
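
The two average-access-time formulas mentioned in the takeaways can be written out for a three-level hierarchy. This is a sketch under assumed notation that does not appear in the summary itself: $H_i$ is the hit ratio of level $i$, $T_i$ is its access time, and the last level is assumed to always hit ($H_3 = 1$).

$$
\begin{aligned}
T_{\text{avg}}^{\text{simultaneous}} &= H_1 T_1 + (1 - H_1) H_2 T_2 + (1 - H_1)(1 - H_2) H_3 T_3 \\
T_{\text{avg}}^{\text{level-wise}} &= H_1 T_1 + (1 - H_1) H_2 (T_1 + T_2) + (1 - H_1)(1 - H_2) H_3 (T_1 + T_2 + T_3)
\end{aligned}
$$

The only difference is the time charged on a miss: with simultaneous interfacing all levels are searched in parallel, so only the serving level's time counts, while with level-wise interfacing the times of every level searched so far add up.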

Q & A

What does the term 'hierarchy' refer to in the context of memory hierarchy?

- In the context of memory hierarchy, 'hierarchy' refers to the ranking or organization of different memory units based on various parameters such as access time, size, and cost.

Why is the memory hierarchy important in computer systems?

- The memory hierarchy is important because it helps bridge the speed mismatch between the fast processor and the slower memory, ensuring efficient data retrieval at a reasonable cost.

What are the different levels of memory units in the hierarchy based on access time and size?

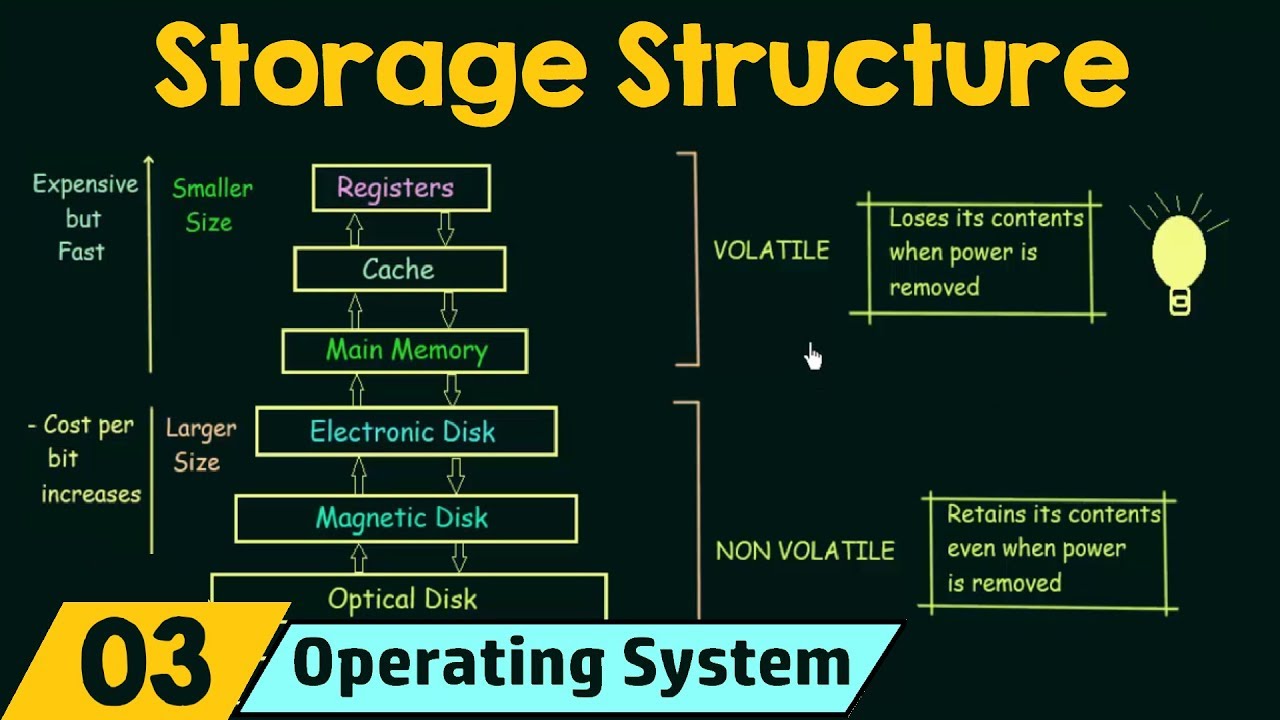

- The different levels of memory units, from fastest to slowest, are registers, SRAM caches, DRAM main memory, and secondary storage.

How does the cost and usage frequency relate to the memory hierarchy?

- As we move up the memory hierarchy toward the processor, the cost and the frequency of usage increase, so the levels closest to the processor are the most expensive and the most frequently used.

What is the purpose of the memory interfacing in computer organization?

- Memory interfacing in computer organization deals with how the various levels of memory units are connected, especially to the processor and I/O peripherals, so that the processor is fed instructions efficiently.

What does MIPS stand for and what does it measure?

- MIPS stands for Million Instructions Per Second, and it measures the speed of the processor by counting how many instructions it can execute in a second.
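
As a small illustration of the MIPS definition above, the rate can be computed from an instruction count and an execution time. The function name `mips` and the figures used are hypothetical, chosen only for this sketch.

```python
def mips(instruction_count: int, execution_time_s: float) -> float:
    """Return the execution rate in millions of instructions per second."""
    if execution_time_s <= 0:
        raise ValueError("execution time must be positive")
    return instruction_count / (execution_time_s * 1_000_000)


# Hypothetical figures: 500 million instructions completed in 2 seconds.
rate = mips(500_000_000, 2.0)
print(rate)  # 250.0
```

A processor rated at 250 MIPS needs the memory system to deliver roughly that many instructions each second, which is the demand the hierarchy is meant to satisfy.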

What is a 'hit' in the context of memory hierarchy?

- A 'hit' occurs when the processor finds the required instruction or data in the memory level it is currently referring to.

What is a 'miss' and how does it relate to the memory hierarchy?

- A 'miss' occurs when the processor does not find the required instruction or data in the current memory level and must look for it in the next level of the hierarchy.

What is the hit ratio and how is it calculated?

- The hit ratio is the percentage of times the processor finds the required instructions or data in a particular memory level. It is calculated by dividing the number of hits by the total number of accesses to that level (hits plus misses).
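
The hit ratio calculation can be sketched as follows. The function name `hit_ratio` and the counts are assumptions made for illustration, not values from the video.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Return hits divided by total accesses (hits + misses)."""
    total = hits + misses
    if total == 0:
        raise ValueError("at least one access is required")
    return hits / total


# Hypothetical counts: 950 of 1000 accesses are found at this level.
h = hit_ratio(hits=950, misses=50)
print(f"hit ratio = {h:.2%}, miss ratio = {1 - h:.2%}")
```

The miss ratio is simply one minus the hit ratio, so only one of the two needs to be measured.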

What are the two ways of interfacing memory units with the processor as described in the script?

- The two ways of interfacing memory units with the processor are: 1) Simultaneously connecting all the memory levels to the processor, allowing it to look for information in all levels in parallel. 2) Connecting memory units level-wise, where the processor looks for information in one level at a time, moving to the next level only if the information is not found in the current one.
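
For the level-wise scheme, a miss at one level means the time already spent there is added to the time of the next level. A minimal sketch of the resulting average access time follows, assuming the last level always hits (hit ratio 1.0). The function name, parameter names, and figures are hypothetical.

```python
def level_wise_access_time(hit_ratios, access_times):
    """Average access time when levels are searched one after another.

    hit_ratios[i] and access_times[i] describe level i, fastest level first.
    """
    if len(hit_ratios) != len(access_times):
        raise ValueError("one hit ratio is needed per access time")
    average = 0.0
    reach_probability = 1.0   # chance that every faster level missed
    cumulative_time = 0.0     # time spent searching levels so far
    for h, t in zip(hit_ratios, access_times):
        cumulative_time += t
        average += reach_probability * h * cumulative_time
        reach_probability *= 1.0 - h
    return average


# Hypothetical two-level system: a 10 ns cache with a 90% hit ratio,
# backed by a 100 ns main memory that always hits.
t_avg = level_wise_access_time([0.9, 1.0], [10.0, 100.0])
print(f"{t_avg:.1f} ns")  # 0.9 * 10 + 0.1 * (10 + 100), about 20 ns
```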

Can you explain the formula for effective or average memory access time in the context of simultaneous memory interfacing?

- In simultaneous memory interfacing, the effective or average memory access time is a weighted sum over all levels, from the fastest to the slowest. Each level's access time is multiplied by the probability that the request is served there, that is, the product of the miss ratios of all faster levels and the hit ratio of that level.
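
A short sketch of this calculation, under the assumption that the slowest level always hits (hit ratio 1.0). The function name, parameter names, and figures are illustrative and do not come from the video.

```python
def simultaneous_access_time(hit_ratios, access_times):
    """Average access time when all levels are searched in parallel.

    hit_ratios[i] and access_times[i] describe level i, fastest level first.
    """
    if len(hit_ratios) != len(access_times):
        raise ValueError("one hit ratio is needed per access time")
    average = 0.0
    miss_probability = 1.0  # chance that every faster level missed
    for h, t in zip(hit_ratios, access_times):
        # Only the serving level's own time counts, since the search
        # of every level started at the same moment.
        average += miss_probability * h * t
        miss_probability *= 1.0 - h
    return average


# Hypothetical two-level system: a 10 ns cache with a 90% hit ratio,
# backed by a 100 ns main memory that always hits.
t_avg = simultaneous_access_time([0.9, 1.0], [10.0, 100.0])
print(f"{t_avg:.1f} ns")  # 0.9 * 10 + 0.1 * 100, about 19 ns
```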
