Relation between solution of linear regression and Lasso regression

Summary

TLDR: The script explores regularization in linear regression, focusing on how to encourage sparsity in the solution. It contrasts L2-norm regularization with L1-norm regularization and explains how L1 can produce solutions in which more coefficients are exactly zero, which effectively selects the important features. It then introduces LASSO (Least Absolute Shrinkage and Selection Operator), which obtains sparse solutions by penalizing the sum of the absolute values of the coefficients. This makes LASSO a popular choice for feature selection in high-dimensional settings.

Takeaways

- 🔍 The script discusses the geometric insight into regularization in linear regression and how it affects the search region for the optimal weights (w).

- 🧩 It suggests a method to encourage sparsity in the solution, meaning having more coefficients (w's) exactly equal to zero, by changing the regularization approach.

- 📏 The script introduces the L1 norm as an alternative to the L2 norm for regularization. It defines the L1 norm as the sum of the absolute values of the vector's components.

- 🔄 L1 regularization is presented as a method to minimize the loss function plus a regularization term involving the L1 norm of w, aiming to promote sparsity in the solution.

- 📐 The script explains that L1 regularization can equivalently be written as a constrained optimization problem: minimize the loss subject to the L1 norm of w being at most some threshold.

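- 📊 The L1 constraint region is a polytope (a diamond in two dimensions) with its corners on the coordinate axes, whereas the L2 constraint region is round (a circle or sphere). The elliptical contours of the loss are therefore more likely to first touch the L1 region at a corner, where some features have exactly zero weight.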

- 🎯 The hope with L1 regularization is that the optimization process will more likely result in a sparse solution where some features have exactly zero weight.

- 🌐 The script mentions that in high-dimensional spaces, L1 regularization tends to yield sparser solutions compared to L2 regularization.

- 🏷️ LASSO (Least Absolute Shrinkage and Selection Operator) is introduced as the name for linear regression with L1 regularization, emphasizing its role in feature selection.

- 🔑 LASSO is described as a method to minimize the loss with an L1 penalty, aiming to shrink the length of w and select important features by pushing irrelevant ones to zero.

- 📚 The script acknowledges that while intuitive arguments are made for the sparsity-promoting properties of L1 regularization, formal proofs are part of more advanced courses and are not covered in the script.
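The penalized and constrained forms described in the takeaways can be written as follows. This is a sketch in notation assumed here rather than taken from the script: training pairs (x_i, y_i) for i = 1..n, weights w in R^d, a penalty weight λ ≥ 0, and a budget θ > 0.

```latex
\|w\|_1 = \sum_{j=1}^{d} |w_j|, \qquad \|w\|_2^2 = \sum_{j=1}^{d} w_j^2

% LASSO, penalized form
\hat{w}_{\text{LASSO}} = \arg\min_{w} \sum_{i=1}^{n} \left(w^\top x_i - y_i\right)^2 + \lambda \|w\|_1

% LASSO, constrained form (the "search region" view)
\hat{w}_{\text{LASSO}} = \arg\min_{w} \sum_{i=1}^{n} \left(w^\top x_i - y_i\right)^2
  \quad \text{subject to} \quad \|w\|_1 \le \theta

% Ridge regression, for comparison
\hat{w}_{\text{ridge}} = \arg\min_{w} \sum_{i=1}^{n} \left(w^\top x_i - y_i\right)^2 + \lambda \|w\|_2^2
```

For a given λ > 0, setting θ to the L1 norm of the penalized solution makes that same solution optimal for the constrained form. This is a standard Lagrangian argument, and it is why the diamond-versus-circle picture applies to the penalized problem as well.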

Q & A

What is the main idea discussed in the script regarding regularization in linear regression?

-The script uses geometric insight to explain how regularization works in linear regression. It suggests using the L1 norm instead of the L2 norm to encourage sparsity in the solution, meaning that more coefficients are set to exactly zero.

What does the term 'sparsity' refer to in the context of linear regression?

-Sparsity in linear regression refers to a solution where many of the coefficients (w's) are exactly zero, which means that those features do not contribute to the model.

What is the difference between L1 and L2 norm in terms of regularization?

-The L1 norm is the sum of the absolute values of a vector's components. The L2 norm is the square root of the sum of the squares of the components; ridge regression penalizes the squared L2 norm, which is the sum of squares itself. L1 regularization tends to produce sparse solutions. L2 regularization shrinks coefficients towards zero but generally does not set them exactly to zero.
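To make the distinction concrete, here is a minimal standard-library Python sketch that computes the L1 norm, the L2 norm, and the squared L2 norm that ridge regression penalizes. The helper names are hypothetical.

```python
import math


def l1_norm(w):
    """Sum of the absolute values of the components (the LASSO penalty)."""
    return sum(abs(x) for x in w)


def l2_norm(w):
    """Euclidean length: square root of the sum of squares."""
    return math.sqrt(sum(x * x for x in w))


def squared_l2_norm(w):
    """Sum of squares: the quantity the ridge penalty uses."""
    return sum(x * x for x in w)


w = [3.0, -4.0, 0.0]
print(l1_norm(w))          # 7.0
print(l2_norm(w))          # 5.0
print(squared_l2_norm(w))  # 25.0
```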

What is the geometric interpretation of L1 and L2 regularization in terms of the search region for w?

-L2 regularization corresponds to a circular search region (a sphere in higher dimensions). L1 regularization corresponds to a diamond-shaped region (its higher-dimensional analogue is a cross-polytope) whose corners lie on the coordinate axes. The elliptical loss contours are more likely to touch this region at a corner or edge, where some features have zero weight.
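As a rough numerical check of the geometric picture, the sketch below finds the point of an L1 ball and the point of an L2 ball of the same radius that are closest to a given unconstrained optimum v. It minimizes ‖w - v‖², so it assumes circular loss contours, which simplifies the elliptical contours of a general least-squares loss. The L1 projection uses the standard sort-and-threshold method. The function names and the example point are assumptions made for illustration.

```python
import math


def project_l2_ball(v, t):
    """Closest point to v (Euclidean distance) inside {w : ||w||_2 <= t}."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm <= t:
        return list(v)
    # Outside the ball: scale v radially onto the sphere; nonzero entries stay nonzero.
    return [x * t / norm for x in v]


def project_l1_ball(v, t):
    """Closest point to v (Euclidean distance) inside {w : ||w||_1 <= t}, with t > 0."""
    if sum(abs(x) for x in v) <= t:
        return list(v)
    # Sort-and-threshold: find theta so that soft-thresholding |v| by theta
    # leaves an L1 norm of exactly t.
    u = sorted((abs(x) for x in v), reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        candidate = (cumsum - t) / j
        if uj > candidate:
            theta = candidate
    # Entries with |v_i| <= theta land exactly on zero (a corner or edge of the diamond).
    return [math.copysign(max(abs(x) - theta, 0.0), x) for x in v]


v = [3.0, 1.0]  # hypothetical unconstrained optimum
print(project_l1_ball(v, 2.0))  # [2.0, 0.0]
print(project_l2_ball(v, 2.0))  # both entries nonzero (about [1.897, 0.632])
```

The L1 result sits on a corner of the diamond with the second weight exactly zero. The L2 result only rescales v, so neither weight vanishes.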

Why might L1 regularization be preferred over L2 in certain scenarios?

-L1 regularization might be preferred when there are many features and most of them are expected to be irrelevant or redundant. It helps with feature selection by pushing the coefficients of irrelevant features to zero.

What does the acronym LASSO stand for, and what does it represent in the context of linear regression?

-LASSO stands for Least Absolute Shrinkage and Selection Operator. It refers to linear regression with L1 regularization, which limits the model's complexity by shrinking the coefficients and performs feature selection by setting some of them to zero.

How does LASSO differ from ridge regression in terms of the solution it provides?

-Ridge regression (L2 regularization) shrinks all coefficients towards zero but generally does not set any of them exactly to zero. LASSO (L1 regularization) can set some coefficients exactly to zero, and in doing so it performs feature selection.
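In the special case of an orthonormal design (X^T X = I), both estimators have closed forms in terms of the least-squares coefficients z = X^T y, and these make the difference explicit. The minimal sketch below assumes the objectives ½‖y - Xw‖² + λ‖w‖₁ for LASSO and ½‖y - Xw‖² + (λ/2)‖w‖₂² for ridge. The vector z is made up for illustration.

```python
def soft_threshold(zj, lam):
    """LASSO shrinkage: move zj towards zero by lam and clip at exactly 0."""
    if zj > lam:
        return zj - lam
    if zj < -lam:
        return zj + lam
    return 0.0


def ridge_orthonormal(z, lam):
    """Ridge with X^T X = I: every coefficient is scaled by 1 / (1 + lam)."""
    return [zj / (1.0 + lam) for zj in z]


def lasso_orthonormal(z, lam):
    """LASSO with X^T X = I: soft-threshold each least-squares coefficient."""
    return [soft_threshold(zj, lam) for zj in z]


z = [3.0, -0.5, 0.25, 1.5]  # hypothetical least-squares coefficients X^T y
print(ridge_orthonormal(z, 1.0))  # [1.5, -0.25, 0.125, 0.75]
print(lasso_orthonormal(z, 1.0))  # [2.0, 0.0, 0.0, 0.5]
```

Ridge only rescales, so a coefficient becomes zero only if it was already zero. LASSO subtracts λ from each magnitude and zeroes every coefficient with |z_j| ≤ λ, which is one concrete sense in which it both shrinks and selects.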

What is the 'shrinkage operator' mentioned in the script, and how does it relate to L1 and L2 regularization?

-A shrinkage operator is a term used to describe the effect of regularization, which is to reduce the magnitude of the coefficients. Both L1 and L2 regularization act as shrinkage operators, but they do so in different ways, with L1 potentially leading to sparsity.

What is the intuition behind the script's argument that L1 regularization might lead to more sparse solutions than L2?

-The intuition comes from the shapes of the constraint regions defined by the L1 and L2 norms. The L1 region has corners and edges lying on the coordinate axes, so the optimum is likely to land at a point where many coefficients are zero. The round L2 region has no such corners, so it tends to shrink coefficients towards zero without setting them exactly to zero.

Can the script's argument about the likelihood of L1 producing sparse solutions be proven mathematically?

-The script does not provide a proof. It notes that more advanced courses cover the mathematical arguments supporting the claim that L1 regularization is more likely than L2 to produce sparse solutions.

What is the practical implication of using L1 regularization in high-dimensional datasets?

-In high-dimensional datasets, L1 regularization can be particularly useful for feature selection, as it is more likely to produce sparse solutions that only include the most relevant features, thus simplifying the model and potentially improving its performance.
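To illustrate feature selection on a small scale, here is a minimal pure-Python sketch that fits both LASSO and ridge by cyclic coordinate descent on a tiny hand-made dataset. Coordinate descent is one common way to solve LASSO and is not necessarily the solver the script has in mind. The function names, the ½ scaling of the loss, the data, and λ = 1 are all assumptions for illustration. In the data, y = 3·x0 + 2·x1 exactly, so feature x2 plays no part in generating y, but it is correlated with y through x0 and x1.

```python
def soft_threshold(z, lam):
    """Shrink z towards zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def coordinate_descent(X, y, lam, penalty="l1", sweeps=500):
    """Cyclic coordinate descent (sketch: no intercept, no convergence check).

    penalty="l1": minimize 0.5*||y - Xw||^2 + lam*||w||_1      (LASSO)
    penalty="l2": minimize 0.5*||y - Xw||^2 + 0.5*lam*||w||^2   (ridge)
    X is a list of rows; every column must be nonzero.
    """
    if penalty not in ("l1", "l2"):
        raise ValueError("penalty must be 'l1' or 'l2'")
    n, p = len(X), len(X[0])
    w = [0.0] * p
    residual = [float(v) for v in y]  # y - Xw, with w = 0 initially
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(sweeps):
        for j in range(p):
            # Correlation of feature j with the residual that excludes feature j.
            rho = sum(X[i][j] * residual[i] for i in range(n)) + col_sq[j] * w[j]
            if penalty == "l1":
                new_wj = soft_threshold(rho, lam) / col_sq[j]
            else:
                new_wj = rho / (col_sq[j] + lam)
            # Keep the residual in sync with the updated coefficient.
            delta = new_wj - w[j]
            for i in range(n):
                residual[i] -= X[i][j] * delta
            w[j] = new_wj
    return w


# Hypothetical toy data: columns x0, x1, x2; y = 3*x0 + 2*x1.
X = [[1, 1, 1],
     [-1, 1, 0],
     [1, -1, 0],
     [-1, -1, 0]]
y = [5, -1, 1, -5]

print(coordinate_descent(X, y, lam=1.0, penalty="l1"))  # [2.75, 1.75, 0.0]
print(coordinate_descent(X, y, lam=1.0, penalty="l2"))  # approx. [2.275, 1.475, 0.625]
```

With λ = 1, the LASSO fit leaves x2 at exactly zero, because at the solution |x2ᵀ(y - Xw)| = 0.5 is below λ. It also shrinks the two true coefficients from 3 and 2 to 2.75 and 1.75. Ridge spreads a nonzero weight of about 0.625 onto x2 because x2 is correlated with the other features. Real high-dimensional problems would normally use an optimized library solver rather than this loop.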

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

Relation between solution of linear regression and ridge regression

Ridge regression

Regulaziation in Machine Learning | L1 and L2 Regularization | Data Science | Edureka

Goodness of Maximum Likelihood Estimator for linear regression

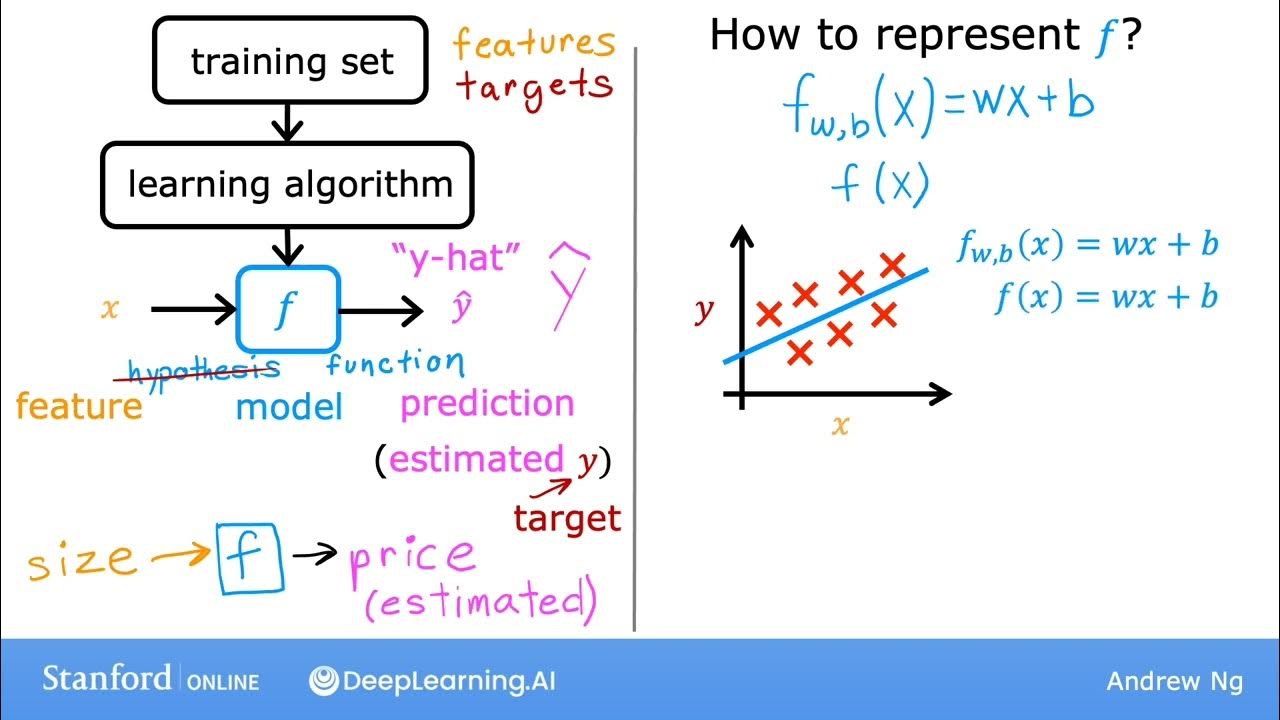

#10 Machine Learning Specialization [Course 1, Week 1, Lesson 3]

Machine Learning Tutorial Python - 17: L1 and L2 Regularization | Lasso, Ridge Regression

5.0 / 5 (0 votes)