How databases scale writes: The power of the log ✍️🗒️

Summary

TLDRIn this informative video, G KCS explores the optimization of database write operations using log-structured merge trees (LSM trees). The script explains how traditional B+ trees in databases can be inefficient for scaling, and introduces the concept of LSM trees that prioritize fast writes using linked lists while also maintaining quick read times through sorted string tables. The video delves into the mechanics of LSM trees, including compaction and the use of bloom filters to speed up search queries, offering a comprehensive look at balancing performance in database management.

Takeaways

- 🌐 The video discusses optimizing database operations by reducing unnecessary data exchanges and I/O calls to improve response times.

- 📚 Traditional databases use B+ trees for efficient insertion and search operations, which are both O(log n) in complexity.

- 🔧 To scale databases, the video suggests condensing multiple data operations into a single query to minimize acknowledgments and headers.

- 🔑 The trade-off for condensing data is the need for additional memory on the server to temporarily store the queries before flushing them to the database.

- ✂️ Write operations can be optimized using linked lists, which offer O(1) constant time complexity for appending data.

- 🔍 However, linked lists are inefficient for read operations, which require a sequential search, leading to O(n) time complexity.

- 📈 The Log-Structured Merge Tree (LSM Tree) is introduced as a hybrid data structure that combines the fast write benefits of a log with the fast read benefits of a sorted array.

- 🔄 LSM Trees work by periodically merging sorted arrays in the background, reducing the number of required read operations and improving efficiency.

- 📊 The video explains the concept of 'compaction' in LSM Trees, where smaller sorted arrays are merged into larger ones to optimize read performance.

- 🛠️ Bloom filters are suggested as a method to speed up search queries by reducing the number of chunks that need to be checked, albeit with a chance of false positives.

- 🔑 The script concludes with the importance of balancing fast writes with efficient reads in database optimization, highlighting the LSM Tree as a practical solution.

Q & A

What is the main topic of the video script?

-The main topic of the video script is optimizing database operations by discussing the use of data structures like B+ trees, linked lists, and log-structured merge trees for efficient write and read operations.

Why are B+ trees preferred in traditional databases?

-B+ trees are preferred in traditional databases because they provide good insertion and search times, both of which are of the order log n, making them efficient for handling SQL commands like insert and select.

What is the main idea behind condensing data queries into a single query?

-The main idea behind condensing data queries into a single query is to reduce unnecessary data exchange, such as acknowledgments and headers, and to decrease I/O calls, which in turn can free up resources and reduce request-response times.

What is the time complexity of write operations in a linked list?

-The time complexity of write operations in a linked list is O(1), which means it is constant time because appending data to the end of the list is a straightforward operation.

What is the disadvantage of using a log for database storage in terms of read operations?

-The disadvantage of using a log for database storage is that read operations are slow, as they require a sequential search through the entire log, which is an O(n) operation where n is the number of records in the log.

What is a log-structured merge tree (LSM tree) and how does it help with database optimization?

-A log-structured merge tree (LSM tree) is a data structure that combines the fast write capabilities of a linked list with the fast read capabilities of sorted arrays or trees. It optimizes databases by allowing fast writes through sequential log appends and fast reads through sorted string tables that are created and merged in the background.

What is the purpose of merging sorted arrays in the background of an LSM tree?

-The purpose of merging sorted arrays in the background of an LSM tree is to improve read performance by reducing the number of chunks that need to be searched during a query, thus decreasing the overall time complexity for read operations.

What is compaction in the context of LSM trees?

-Compaction in the context of LSM trees is the process of taking multiple sorted string tables and merging them into a single, larger sorted array to facilitate faster search queries.

What is a bloom filter and how can it be used to speed up read queries in a database?

-A bloom filter is a probabilistic data structure that can be used to test whether an element is a member of a set. In the context of databases, it can speed up read queries by quickly determining the presence of a record in a chunk, reducing the need for full sequential searches and thus speeding up the overall read operation, albeit with a possibility of false positives.

How does the script suggest managing the trade-off between fast writes and fast reads in database optimization?

-The script suggests using a hybrid approach where writes are fast due to the use of a linked list-like structure, while reads are optimized by merging sorted arrays in the background to form sorted string tables. Additionally, bloom filters can be used to further speed up read queries by reducing unnecessary searches.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

Choosing a Database for Systems Design: All you need to know in one video

Google SWE teaches systems design | EP1: Database Design

Google SWE teaches systems design | EP22: HBase/BigTable Deep Dive

AVL trees in 5 minutes — Intro & Search

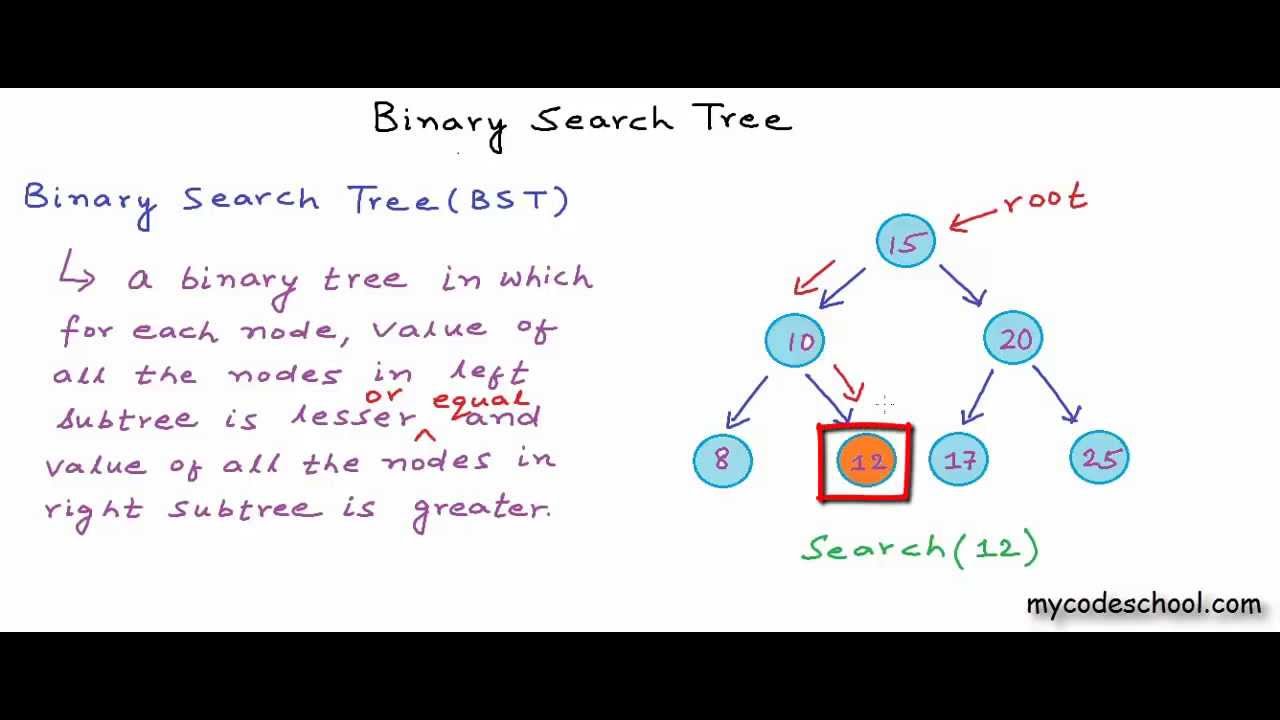

Data structures: Binary Search Tree

AQA A’Level Trees & Binary trees

5.0 / 5 (0 votes)