Memory, Cache Locality, and why Arrays are Fast (Data Structures and Optimization)

Summary

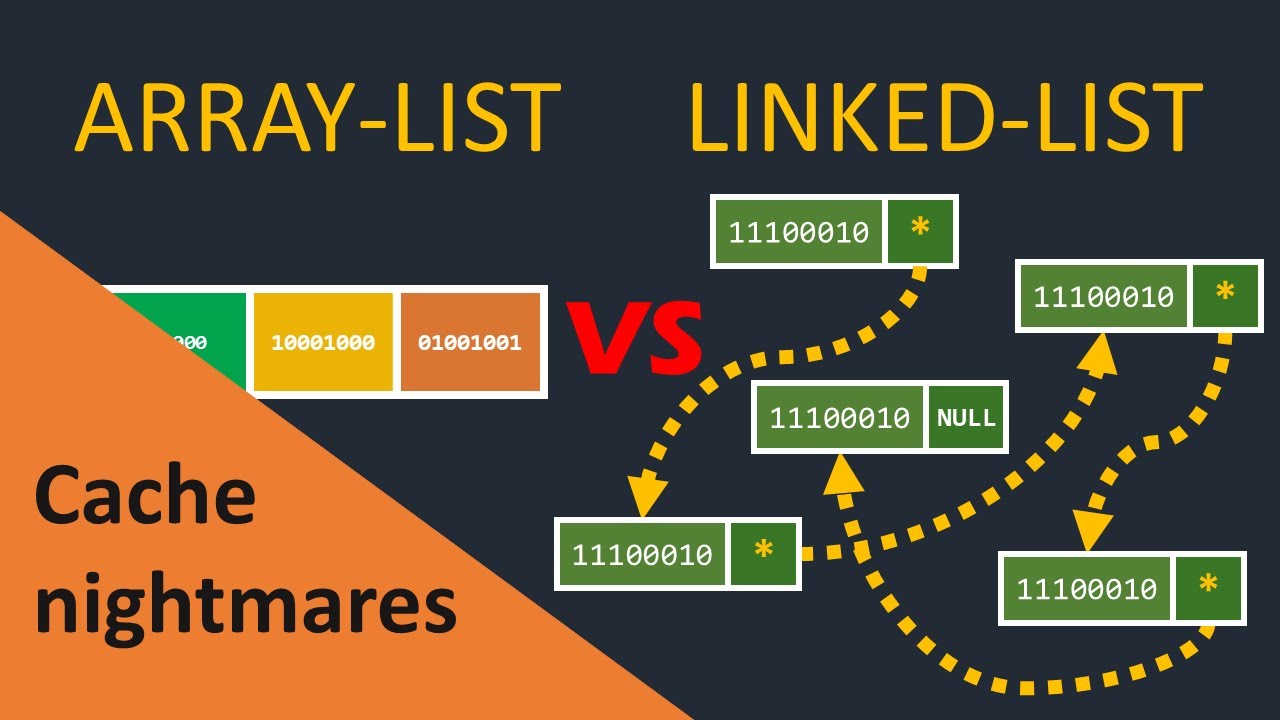

TLDRThis video script delves into the world of data structures, focusing on arrays and their optimization. It explains arrays as a contiguous block of memory, beneficial for fast access and iteration. The script also explores CPU caches, including L1, L2, and L3, and how they work in tandem with prefetching to enhance performance. It touches on the limitations of arrays, such as slow search times and the inefficiency of inserting or removing elements from the middle. The video promises further exploration of advanced data structures like hash tables, which are built on the foundation of arrays.

Takeaways

- 📚 The video introduces a new series on data structures and optimization, starting with arrays.

- 🔍 Understanding the internal workings of arrays is crucial for performance optimization.

- 🗃 Arrays are a way to store multiple items at once, accessible by their index, typically zero-indexed.

- 💾 Arrays use contiguous memory, meaning all elements are stored together in a single block of memory.

- 🏢 Memory is divided into slots, each with an address, allowing for direct access to any byte.

- 🔄 The CPU uses caches (L1, L2, L3) to store frequently accessed data quickly, reducing access time.

- 🔮 Prefetching is a technique where the CPU predicts future data needs and loads it into cache preemptively.

- 🚀 Caches are faster than main memory, and a cache hit is much quicker than a cache miss, which requires fetching data from main memory.

- 🔍 Operations on arrays are efficient due to their contiguous nature, which aligns well with CPU cache optimization.

- 🚧 Dynamic arrays can grow by allocating more memory initially and tracking the array's end, maintaining contiguous memory allocation.

- 🛑 While arrays are fast for certain operations, finding an element or inserting/removing from the middle or start can be slow and inefficient.

Q & A

What is the main topic of the video series?

-The main topic of the video series is data structures and optimization, starting with an in-depth discussion on arrays.

Why is understanding what happens under the hood important for performance?

-Understanding what happens under the hood is important for performance because it allows developers to make informed decisions about data structures and algorithms that can optimize their code execution speed.

What is an array and what is its basic property?

-An array is a data structure used to store several items at once. Its basic property is the ability to look up individual elements by their index, typically starting with index zero.

What does 'contiguous memory' mean in the context of arrays?

-In the context of arrays, 'contiguous memory' means that the memory allocated for the array is all together in one block with no gaps between the elements.

How does memory allocation work in terms of variable storage?

-Memory allocation for a variable involves reserving a specific amount of space in memory, either on the stack or heap, based on the variable's data type and size.

What are the two strategies mentioned to save time when retrieving data?

-The two strategies mentioned are: 1) Checking out the entire book instead of just a chapter to quickly access other chapters from the same book, and 2) Predicting the next data request based on current patterns and prefetching it.

What are the roles of L1, L2, and L3 caches in a CPU?

-L1, L2, and L3 caches are levels of built-in memory in a CPU that store data to speed up access. The CPU checks these caches in order (from L1 to L3) when looking for data, with each level being slower but larger than the previous one.

Why are contiguous chunks of memory beneficial for CPU operations?

-Contiguous chunks of memory are beneficial for CPU operations because they allow for faster access and processing. The CPU can efficiently iterate over or access elements in a contiguous block without incurring additional memory access costs.

What is prefetching and how does it relate to CPU operations?

-Prefetching is a technique where the CPU predicts future memory access patterns and loads data into the cache before it is explicitly requested. This helps to reduce latency and improve performance by having the data readily available when needed.

What are the limitations of arrays when it comes to finding, inserting, or removing elements?

-Arrays have limitations in finding elements as it requires checking each element one by one. Inserting or removing elements from anywhere except the end is also inefficient, as it may require shifting elements to maintain the array's order, which can be time-consuming for large arrays.

How do dynamic arrays accommodate more items?

-Dynamic arrays accommodate more items by allocating more memory than initially needed and keeping track of the array's end. This allows the array to grow without the need for re-allocation and copying of the entire array.

Why are arrays foundational to understanding more advanced data structures?

-Arrays are foundational because many advanced data structures, such as hash tables, are often implemented using a combination of arrays and other structures like linked lists. Understanding arrays helps in grasping the basic principles that are extended in more complex data structures.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

5.0 / 5 (0 votes)