Issues with PCA

Summary

TLDR: This video delves into the challenges of using Principal Component Analysis (PCA) for dimensionality reduction, particularly in high-dimensional or non-linear datasets. The speaker outlines two key issues: time complexity, since computational cost grows with the number of dimensions, and the inability of PCA to capture non-linear relationships in data. Although PCA is useful in many cases, it may fail when the data's inherent low-dimensional structure is non-linear rather than a linear subspace. The surprising twist is that solving the time complexity issue can also address the problem of non-linearity, hinting at a more advanced solution that will be explored in the video.

Takeaways

- 😀 PCA (Principal Component Analysis) is a powerful method for reducing the dimensionality of data while preserving as much variance as possible.

- 😀 PCA works by finding the directions (principal components) that maximize the variance in the data.

- 😀 The key challenge with PCA is that the data might not always lie in a linear subspace, which can lead to issues when trying to reduce dimensions.

- 😀 Time complexity becomes an issue when the number of features is large, making PCA computationally expensive.

- 😀 Non-linear relationships between features in the data might not be well captured by PCA, which assumes linearity in the data.

- 😀 A significant risk with PCA is that it might incorrectly suggest the need for higher dimensions if it fails to recognize the actual low-dimensional structure of the data.

- 😀 PCA can work perfectly if the data truly lies in a lower-dimensional linear subspace, but it can fail to capture essential patterns if the data structure is non-linear.

- 😀 The two challenges turn out to be connected: the approach used to reduce PCA's time complexity also points toward a way of handling non-linearity.

- 😀 If the non-linearity issue is addressed, it might also help to improve the time complexity problem and vice versa.

- 😀 The current problem lies in how to intelligently address both the time complexity and non-linearity issues without compromising performance or accuracy.

- 😀 The next steps in the video will focus on addressing the time complexity issue first, and then drawing insights that can help with solving the non-linearity problem.
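The takeaway that PCA finds the directions of maximum variance can be illustrated with a minimal sketch. It assumes the standard formulation (eigendecomposition of the covariance matrix of centered data); the function name `principal_components` and the synthetic data are illustrative choices, not something taken from the video.

```python
import numpy as np

def principal_components(X):
    """Return (variances, directions), sorted by decreasing variance.

    X has shape (n_samples, n_features). Column j of `directions`
    is the j-th principal component.
    """
    Xc = X - X.mean(axis=0)                  # center the data
    cov = Xc.T @ Xc / len(Xc)                # covariance, shape (d, d)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]        # reorder to descending variance
    return eigvals[order], eigvecs[:, order]

# Synthetic data (an assumption for illustration): wide along x, narrow along y.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2)) * np.array([5.0, 0.5])

variances, directions = principal_components(X)
print("variance along each component:", variances)
print("first principal direction:", directions[:, 0])
```

Because almost all of the spread is along the x axis, the first principal direction should come out close to that axis (up to sign).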

Q & A

What is the main challenge with applying PCA to high-dimensional data?

-The main challenge is that PCA can become computationally expensive as the number of features increases, leading to high time complexity. Additionally, PCA assumes linear relationships between features, which may not be suitable for datasets with non-linear structures.

Why might PCA not work well when the data is non-linearly related?

-PCA assumes that the data lies in a linear subspace, meaning that it can only capture linear relationships between features. If the data has non-linear relationships, PCA might fail to capture the true underlying structure, leading to inaccurate dimensionality reduction.
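A small example may make this concrete. The sketch below uses points on a circle, a dataset chosen here purely for illustration: each point is described by a single number (its angle), yet the relationship between the two features is non-linear, so no single linear direction captures the data.

```python
import numpy as np

# Points on the unit circle: one underlying parameter (the angle),
# but the two coordinates are related non-linearly (x^2 + y^2 = 1).
theta = np.linspace(0.0, 2.0 * np.pi, 400, endpoint=False)
X = np.column_stack([np.cos(theta), np.sin(theta)])

Xc = X - X.mean(axis=0)
cov = Xc.T @ Xc / len(Xc)
eigvals = np.linalg.eigvalsh(cov)[::-1]      # descending order
explained_ratio = eigvals / eigvals.sum()

print("fraction of variance per component:", explained_ratio)
```

The variance is split evenly between the two components, so PCA reports that both dimensions are needed even though the data is intrinsically one-dimensional.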

What is meant by a 'low-dimensional linear subspace' in the context of PCA?

-A low-dimensional linear subspace is the set of all linear combinations of a small number of direction vectors, such as a line or plane passing through the origin of a higher-dimensional space. PCA looks for the subspace of a given dimension that captures the most variance in the data.
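As a hedged illustration of the case where PCA works well, the sketch below builds three-dimensional points that all lie on a plane through the origin. The basis vectors and coefficients are random values invented for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

# Two vectors spanning a plane through the origin in 3-D space.
basis = rng.normal(size=(2, 3))
# Every data point is a linear combination of those two vectors.
coeffs = rng.normal(size=(200, 2))
X = coeffs @ basis                           # shape (200, 3)

Xc = X - X.mean(axis=0)
singular_values = np.linalg.svd(Xc, compute_uv=False)

print("singular values:", singular_values)
print("rank of X:", np.linalg.matrix_rank(X))
```

The third singular value is zero up to floating-point error, so two components describe this data exactly.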

What happens when PCA reduces a dataset to two dimensions, but the data actually requires more?

-If PCA reduces the dataset to two dimensions while the data inherently requires more, the reconstruction error will remain high. Ignoring that error can lead to misleading conclusions, such as assuming the data is adequately represented in only two dimensions.

How does PCA handle dimensionality reduction in terms of time complexity?

-PCA can be computationally expensive when dealing with high-dimensional data. The time complexity grows with the number of features, which can make it impractical for large datasets unless optimizations are applied.
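To give a rough sense of how the cost grows, the sketch below assumes the textbook approach: build the d x d covariance matrix from n samples (on the order of n·d² operations) and run a dense eigendecomposition on it (on the order of d³ operations). These are order-of-magnitude estimates with constants ignored, and the function name `rough_pca_cost` is hypothetical.

```python
def rough_pca_cost(n_samples, n_features):
    """Order-of-magnitude operation counts for covariance-based PCA.

    Assumes dense linear algebra; constant factors are ignored.
    """
    covariance_ops = n_samples * n_features ** 2   # forming the d x d covariance
    eigen_ops = n_features ** 3                    # dense eigendecomposition
    return covariance_ops, eigen_ops

for d in (10, 100, 1000):
    cov_ops, eig_ops = rough_pca_cost(n_samples=1000, n_features=d)
    print(f"d={d:5d}  covariance ~{cov_ops:.1e}  eigendecomposition ~{eig_ops:.1e}")
```

Under these assumptions, a tenfold increase in the number of features multiplies the eigendecomposition estimate by a thousand.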

What is the surprising fact the speaker introduces about solving the issues with PCA?

-The surprising fact is that solving one of the two major issues (time complexity or non-linear relationships) could also solve the other. By addressing one problem, you might be able to simultaneously address the other.

What is the main difference between time complexity and structural issues in PCA?

-Time complexity refers to the computational cost of running PCA on high-dimensional data, while structural issues concern whether the data follows linear or non-linear relationships between features. Both can hinder PCA's effectiveness, but they seem unrelated at first glance.

Why might PCA fail to provide the desired result when the features are non-linearly related?

-PCA might fail in this case because it is designed to detect linear relationships between features. If the relationships in the data are non-linear, PCA might not be able to capture the full complexity of the data's structure, leading to a poor representation after dimensionality reduction.

What does the speaker mean by 'reconstructing the dataset' in PCA?

-Reconstructing the dataset in PCA means using the reduced-dimensional representation to approximate the original dataset. When PCA reduces the data to a lower number of dimensions, it tries to retain as much variance as possible, but some information may be lost in the process, leading to reconstruction error.
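Reconstruction can be sketched as follows, assuming the usual recipe: project the centered data onto the top k principal directions, then map back to the original space and add the mean. The function name `reconstruction_error` and the synthetic data are illustrative assumptions.

```python
import numpy as np

def reconstruction_error(X, k):
    """Mean squared distance between each point and its rank-k PCA reconstruction."""
    mean = X.mean(axis=0)
    Xc = X - mean
    cov = Xc.T @ Xc / len(Xc)
    _, eigvecs = np.linalg.eigh(cov)         # columns in ascending variance order
    W = eigvecs[:, ::-1][:, :k]              # top-k directions, shape (d, k)
    Z = Xc @ W                               # reduced representation, shape (n, k)
    X_hat = Z @ W.T + mean                   # approximation in the original space
    return float(np.mean(np.sum((X - X_hat) ** 2, axis=1)))

# Synthetic 3-D data with very different spread along each axis.
rng = np.random.default_rng(2)
X = rng.normal(size=(300, 3)) * np.array([3.0, 1.0, 0.1])

errors = [reconstruction_error(X, k) for k in (1, 2, 3)]
print("reconstruction error for k = 1, 2, 3:", errors)
```

The error shrinks as more components are kept and vanishes (up to rounding) when k equals the original number of dimensions.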

What will the speaker do to address the issues with PCA?

-The speaker plans to first address the issue of time complexity and then make observations that will help in solving the structural issue related to non-linear relationships. This approach aims to tackle both problems using a unified solution.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

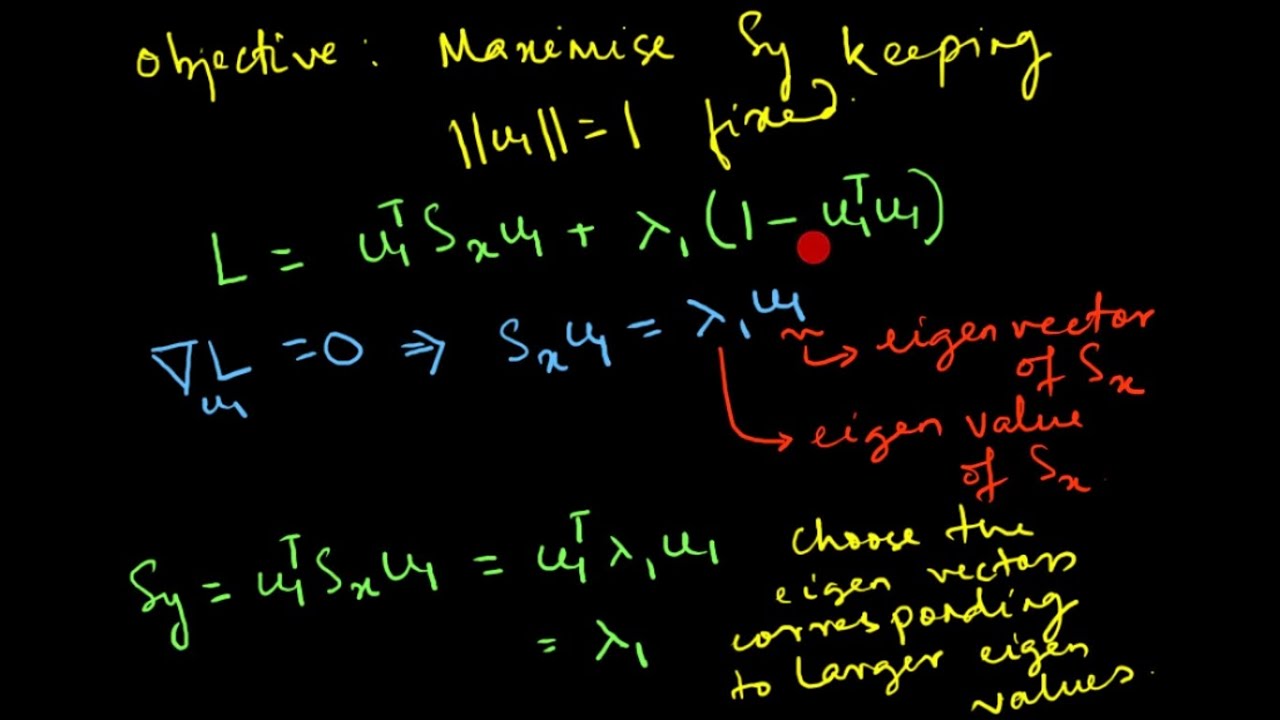

Principal Component Analysis (PCA) : Mathematical Derivation

10.3 Probabilistic Principal Component Analysis (UvA - Machine Learning 1 - 2020)

1 Principal Component Analysis | PCA | Dimensionality Reduction in Machine Learning by Mahesh Huddar

StatQuest: Principal Component Analysis (PCA), Step-by-Step

Lec-46: Principal Component Analysis (PCA) Explained | Machine Learning

StatQuest: PCA main ideas in only 5 minutes!!!

5.0 / 5 (0 votes)