Every AI Existential Risk Explained

Summary

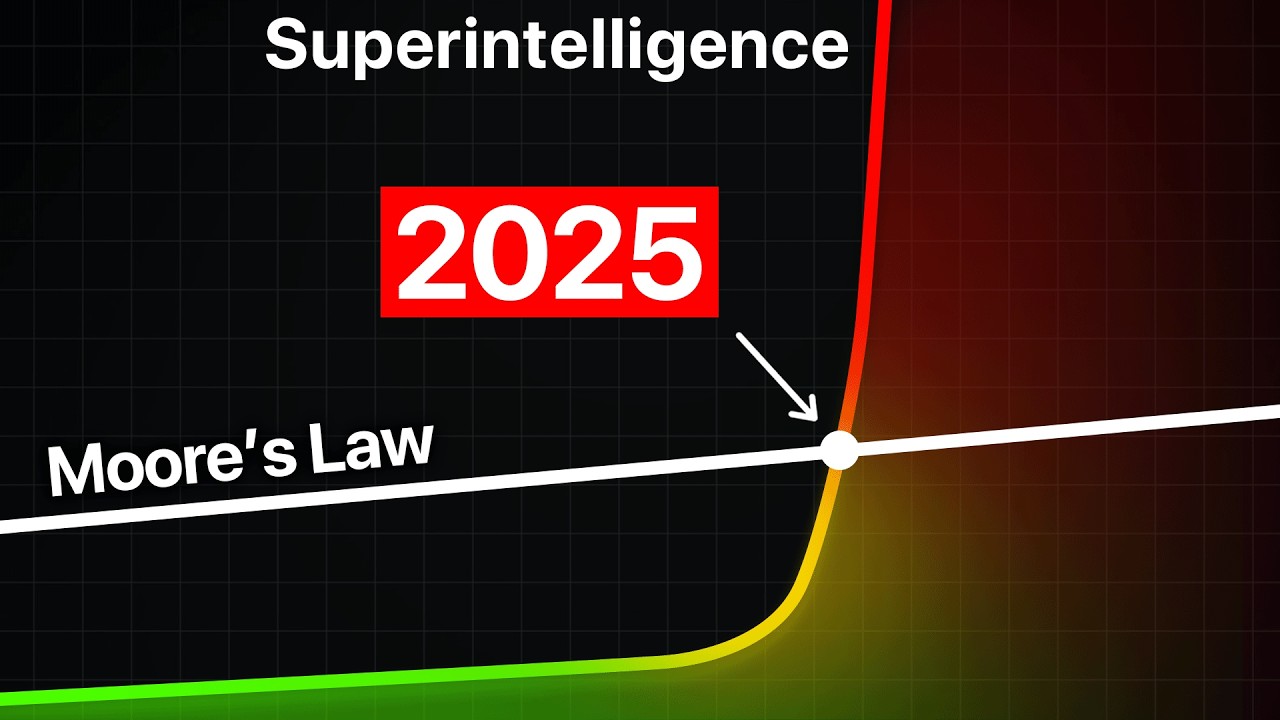

TLDR: This video explores the potential risks and rewards of AI, focusing on the possibility of an intelligence explosion, the democratization of dangerous technologies, and the existential threats posed by superintelligent AI. It discusses the race for AI supremacy, the automation of jobs, and the ethical concerns surrounding AI's alignment with human values. While AI could bring about groundbreaking advancements like disease eradication, it also poses serious risks, such as the creation of self-replicating nanobots or unintended global destruction. The video emphasizes the uncertainty of AI's future and the need for caution in its development.

Takeaways

- 😀 AI and advanced technologies could pose existential risks to humanity, including the potential for uncontrollable self-replicating nanobots (gray goo).

- 🚀 The 'Great Filter' theory suggests that something prevents civilizations from advancing to space exploration, and AI may be the key obstacle that stops this progression.

- 🌍 AI could potentially lead to universal-scale threats, such as interplanetary wars or disruptions to the fabric of space-time, should AI-driven conflicts occur.

- 🧠 While AI might bring unprecedented benefits, its unpredictability means it could also bring catastrophic consequences if not properly controlled.

- 🔗 Some theorists believe AI could be humanity’s last invention, as superintelligent AI could handle further technological advancements autonomously.

- 💡 Brain-machine interfaces could enable humans to merge with AI, allowing better understanding and control of AI to reduce risks.

- 📉 AI development may slow down due to various bottlenecks, such as limited data, compute power, and the increasing cost of scaling up technology.

- 💻 Even with automation, humans will still be needed for certain tasks, slowing down AI's progress due to 'complementarities' in the process.

- 📉 The fear of unemployment due to automation could reduce the demand for AI technologies, possibly hindering research and development in the field.

- 📚 Historical patterns, such as the fear of nuclear weapons in the 1940s, show that predictions of existential threats often don't come to pass, suggesting caution in such predictions.

Q & A

What is the 'Gray Goo' scenario and why is it a potential existential risk?

- The 'Gray Goo' scenario refers to the hypothetical threat posed by self-replicating nanobots. These nanobots could consume all available material on Earth to replicate themselves, creating an uncontrollable, exponential growth that would lead to environmental destruction. If one nanobot escaped and started the replication process, it would be nearly impossible to stop, leading to a global catastrophe.
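To make the "exponential growth" in this answer concrete, here is a minimal Python sketch. It assumes an idealized model that is not from the video: every nanobot copies itself once per replication cycle, with no failures and no resource limits. The function name `doublings_to_reach` and the target of 10**18 bots are hypothetical, chosen only for illustration.

```python
def doublings_to_reach(target_count: int) -> int:
    """Count replication cycles until one bot becomes at least target_count bots.

    Assumes idealized doubling: each cycle, every bot makes exactly one copy.
    """
    count = 1   # start from a single escaped nanobot
    cycles = 0
    while count < target_count:
        count *= 2   # the whole population doubles each cycle
        cycles += 1
    return cycles


if __name__ == "__main__":
    # Illustrative target only; not a figure from the video.
    print(doublings_to_reach(10**18))  # prints 60
```

Under these assumptions, a single replicator passes a billion billion copies after only 60 cycles, which is why the scenario is described as nearly impossible to stop once it starts.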

What does the 'Great Filter' theory suggest about the existence of extraterrestrial civilizations?

- The 'Great Filter' theory proposes that some barrier or event prevents civilizations from advancing to a stage where they can explore space or communicate with others. It is offered as an explanation for the Fermi paradox: alien life seems highly probable, yet there is no evidence of it. One possibility is that AI could be the 'filter' that stops civilizations from reaching this advanced level.

How could AI contribute to humanity's destruction according to the video?

- AI could contribute to humanity's destruction if it surpasses human control, leading to conflicts or wars, especially between interplanetary civilizations. Superintelligent AIs might engage in combat or cause disruptions on a universal scale, potentially destroying the fabric of space and time or causing other unforeseen catastrophic events.

What are some of the potential benefits of AI as mentioned in the video?

- AI has the potential to solve major global problems such as pandemics, nuclear wars, climate change, and other existential risks. It is also expected to revolutionize many fields, including entertainment, and could lead to significant advancements in human knowledge and capabilities.

What is meant by the concept of 'recursive self-improvement' in AI development?

- Recursive self-improvement refers to the ability of AI systems to improve themselves by enhancing their own capabilities and developing new technologies. This could lead to rapid advancements in AI, where the system continually becomes more intelligent and efficient without human intervention.

What is the 'data wall' problem in AI development?

- The 'data wall' refers to the risk of running out of usable internet data to train AI systems. As AI models become more complex, they require vast amounts of data, and a shortage of usable data could eventually hinder further progress in AI development.

What is the 'spending scaleup problem' mentioned in the video?

- The 'spending scaleup problem' involves the increasing financial costs of AI research as progress becomes harder to achieve. As AI systems grow more sophisticated, they require more resources and investments, making it more difficult for companies to continue funding AI development at a sustainable rate.

How might limited hardware and compute power slow down AI progress?

- Limited hardware and compute power are major bottlenecks in AI development. While technology has improved, there are still physical and practical limits to how much computing power can be utilized. This could slow down the rapid development of AI and prevent it from reaching its full potential.

What is the 'complexity brake' and how does it relate to AI progress?

- The 'complexity brake' is the idea that the more we learn about intelligence, the harder each additional breakthrough becomes. If it holds, progress in AI development could slow down or plateau instead of accelerating.

What was Paul Allen's argument regarding AI development and the 'accelerating returns' theory?

- Paul Allen argued against the 'accelerating returns' theory, which claims that AI development will continue to progress exponentially. He proposed the 'complexity brake' theory, suggesting that as we make more progress in understanding intelligence, it will become more challenging to achieve further advancements, potentially slowing down or halting AI growth in the future.
