NLP - 2.8 - Kneser-Ney Smoothing

Summary

TL;DR: The transcript explores smoothing techniques for language modeling, focusing on absolute discounting and Kneser-Ney smoothing. Absolute discounting subtracts a fixed amount from each observed n-gram count and gives the freed probability mass to a lower-order model. When a bigram is unseen, a continuation probability predicts the next word better than a raw unigram frequency, because it reflects how many distinct contexts the word has appeared in. Kneser-Ney smoothing combines absolute discounting with this improved lower-order estimate. The approach is widely used in speech recognition and machine translation.

Takeaways

- 😀 Smoothing techniques, such as Good-Turing and Kneser-Ney, are essential in language modeling to improve probability estimates for unseen data.

- 📉 Good-Turing smoothing adjusts counts based on observed frequencies to better estimate the occurrence of words, particularly when they have not been seen in a corpus.

- 🔍 Absolute discounting simplifies Good-Turing by subtracting a fixed discount (like 0.75) from each observed bigram count and interpolating the discounted bigram estimate with unigram probabilities.

- 📊 Kneser-Ney smoothing enhances the estimation of unigram probabilities by focusing on the likelihood of words appearing in novel contexts, termed 'continuation probability.'

- 📝 Continuation probability is calculated by counting the number of distinct word types that precede a given word (equivalently, the distinct bigram types that end in it) and normalizing by the total number of bigram types.

- 🔄 Kneser-Ney smoothing utilizes a recursive approach to manage n-grams of varying lengths, applying actual counts for the highest-order n-grams and continuation counts for lower orders.

- ✨ The integration of absolute discounting and continuation probabilities in Kneser-Ney provides a robust framework for predicting word occurrences in context.

- 🌐 Kneser-Ney smoothing is widely applied in fields like speech recognition and machine translation due to its effectiveness in estimating probabilities for rare and unseen word sequences.

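- 🧮 The lambda weight in the Kneser-Ney algorithm is a normalizing constant: it equals the probability mass removed by discounting the bigram counts for a given context, and it determines how much of that mass is handed to the lower-order (continuation) distribution.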

- 🔗 Understanding the balance between unigram and bigram probabilities is key to improving language model performance and handling unseen word combinations effectively.
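
The takeaways above can be collected into the usual interpolated bigram form. The notation here is an assumption made for illustration and does not come from the summary: $c(\cdot)$ is a raw count, $d$ is the fixed discount (for example 0.75), and $|\{\cdot\}|$ counts distinct types.

```latex
\begin{align*}
P_{\mathrm{KN}}(w_i \mid w_{i-1})
  &= \frac{\max\bigl(c(w_{i-1} w_i) - d,\ 0\bigr)}{c(w_{i-1})}
     + \lambda(w_{i-1})\, P_{\mathrm{cont}}(w_i) \\[4pt]
\lambda(w_{i-1})
  &= \frac{d}{c(w_{i-1})}\, \bigl|\{\, w : c(w_{i-1} w) > 0 \,\}\bigr| \\[4pt]
P_{\mathrm{cont}}(w_i)
  &= \frac{\bigl|\{\, w' : c(w' w_i) > 0 \,\}\bigr|}
          {\bigl|\{\, (w', w'') : c(w' w'') > 0 \,\}\bigr|}
\end{align*}
```

Each observed bigram after $w_{i-1}$ gives up $d$ of its count, so the total mass removed is $d$ times the number of distinct continuations of $w_{i-1}$, divided by $c(w_{i-1})$. That is exactly $\lambda(w_{i-1})$, which is why the smoothed distribution still sums to one.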

Q & A

What is the primary focus of the discussion in the transcript?

-The transcript primarily discusses smoothing techniques in natural language processing, specifically absolute discounting and Kneser-Ney smoothing.

What is Good-Turing discounting and how is it applied?

-Good-Turing discounting re-estimates each count from the frequencies of counts observed in the data. In practice the discounted counts stay close to the original counts minus a roughly constant amount, so time can be saved by subtracting a fixed discount instead, which is the idea behind absolute discounting.

How does absolute discounting work?

-Absolute discounting applies a fixed discount value to bigram counts, allowing for the interpolation of bigram probabilities with unigram probabilities, simplifying the calculations involved in estimating word probabilities.
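
A minimal Python sketch of this answer, under stated assumptions: the function name `absolute_discounting`, the toy corpus, and the fallback to the unigram model for an unseen context are all illustrative choices, not something the transcript specifies.

```python
from collections import Counter

def absolute_discounting(tokens, d=0.75):
    """Return p(word, prev): a discounted bigram estimate interpolated
    with a maximum-likelihood unigram model (illustrative sketch)."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    # How often each word occurs as a bigram context (first position).
    context_counts = Counter(tokens[:-1])
    # Number of distinct words seen after each context.
    followers = Counter(prev for prev, _ in bigrams)
    total = len(tokens)

    def prob(word, prev):
        p_unigram = unigrams[word] / total
        c_prev = context_counts[prev]
        if c_prev == 0:
            return p_unigram  # assumption: unseen context falls back to unigram
        discounted = max(bigrams[(prev, word)] - d, 0) / c_prev
        lam = d * followers[prev] / c_prev  # mass removed by the discount
        return discounted + lam * p_unigram

    return prob

tokens = "the cat sat on the mat the cat ate".split()
p = absolute_discounting(tokens)
print(p("cat", "the"), p("mat", "the"), p("ate", "the"))
```

In this toy corpus the unseen bigram "the ate" still receives a small non-zero probability, taken from the mass discounted off "the cat" and "the mat".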

What is Kneser-Ney smoothing and why is it significant?

-Kneser-Ney smoothing improves unigram probability estimation by using continuation probability, which measures how likely a word is to appear as a novel continuation, thus providing better context-based predictions.

What does the continuation probability represent?

-The continuation probability for a word reflects how many different preceding words it has been seen with in bigrams, giving an estimate of how likely that word is to appear as a novel continuation after a context it has not been observed with.

How is the continuation probability calculated?

-Continuation probability is calculated by counting the number of different word types that can precede a given word in bigrams and normalizing this count by the total number of word bigram types.
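
The calculation described here can be sketched in a few lines of Python. The function name `continuation_probabilities` and the toy corpus are made up for illustration.

```python
from collections import Counter

def continuation_probabilities(tokens):
    """Map each word to the share of bigram types that end in it."""
    bigram_types = set(zip(tokens, tokens[1:]))
    # For each word, count the distinct word types that precede it.
    preceding_types = Counter(word for _, word in bigram_types)
    total_types = len(bigram_types)
    return {w: n / total_types for w, n in preceding_types.items()}

tokens = "san francisco san francisco san francisco my glasses her glasses".split()
p_cont = continuation_probabilities(tokens)
print(p_cont["francisco"], p_cont["glasses"])
```

In this corpus "francisco" occurs three times but always after "san", while "glasses" occurs twice after two different words, so "glasses" ends up with the higher continuation probability despite being less frequent.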

What is the relationship between absolute discounting and Kneser-Ney smoothing?

-Kneser-Ney smoothing builds upon absolute discounting by using its principles while improving the estimation of probabilities for lower-order grams through the concept of continuation probability.
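
To make the relationship concrete, here is a hedged sketch of a bigram-only interpolated Kneser-Ney estimator in Python. It reuses the absolute-discounting form and swaps the unigram term for the continuation probability. The function name, the toy corpus, and the fallback for an unseen context are assumptions for illustration.

```python
from collections import Counter

def kneser_ney_bigram(tokens, d=0.75):
    """Return p(word, prev) for a bigram interpolated Kneser-Ney model."""
    bigrams = Counter(zip(tokens, tokens[1:]))
    context_counts = Counter(tokens[:-1])
    # Distinct continuations per context, and distinct contexts per word.
    followers = Counter(prev for prev, _ in bigrams)
    preceders = Counter(word for _, word in bigrams)
    n_bigram_types = len(bigrams)

    def prob(word, prev):
        p_cont = preceders[word] / n_bigram_types
        c_prev = context_counts[prev]
        if c_prev == 0:
            return p_cont  # assumption: unseen context uses P_cont alone
        discounted = max(bigrams[(prev, word)] - d, 0) / c_prev
        lam = d * followers[prev] / c_prev  # mass freed by discounting
        return discounted + lam * p_cont

    return prob

tokens = ("san francisco san francisco san francisco "
          "my glasses her glasses i read").split()
p = kneser_ney_bigram(tokens)
print(p("glasses", "i"), p("francisco", "i"))
```

Neither "i glasses" nor "i francisco" occurs in the toy corpus, so both probabilities come entirely from the lambda-weighted continuation term, and the word seen after more distinct contexts wins.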

What are the practical applications of Kneser-Ney smoothing?

-Kneser-Ney smoothing is commonly used in applications such as speech recognition and machine translation due to its effectiveness in accurately predicting word sequences.

Why is it important to differentiate between actual counts and continuation counts in n-gram models?

-Differentiating between actual counts for the highest order and continuation counts for lower orders allows for a more accurate probability estimation, ensuring that rare or context-specific words are appropriately modeled.
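
One common way to write the recursion this answer refers to is shown below. The notation is assumed for illustration rather than taken from the summary: $w_{i-n+1}^{i-1}$ is the context of $n-1$ words, $d$ is the discount, and $c_{\mathrm{KN}}$ switches between the two kinds of count.

```latex
\begin{align*}
P_{\mathrm{KN}}\bigl(w_i \mid w_{i-n+1}^{i-1}\bigr)
  &= \frac{\max\bigl(c_{\mathrm{KN}}(w_{i-n+1}^{i}) - d,\ 0\bigr)}
          {\sum_{w} c_{\mathrm{KN}}(w_{i-n+1}^{i-1}\, w)}
     + \lambda\bigl(w_{i-n+1}^{i-1}\bigr)\,
       P_{\mathrm{KN}}\bigl(w_i \mid w_{i-n+2}^{i-1}\bigr) \\[6pt]
c_{\mathrm{KN}}(\cdot)
  &= \begin{cases}
       \text{actual count of } \cdot
         & \text{for the highest order} \\
       \text{number of distinct single-word contexts preceding } \cdot
         & \text{for lower orders}
     \end{cases}
\end{align*}
```

The recursion shortens the context by one word at each step and ends at the unigram level, where the estimate is the continuation probability.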

What advantage does Kneser-Ney smoothing provide in modeling frequent words?

-Kneser-Ney smoothing provides the advantage of assigning lower continuation probabilities to frequent words that occur in limited contexts, thus enhancing the overall accuracy of word predictions in varying contexts.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

Peramalan Kuantitatif 1

EfficientML.ai 2024 | Introduction to SVDQuant for 4-bit Diffusion Models

N-Gons Special - Triangles, Quads & N-Gons in hard surface modeling - 3Ds Max 2017

Forecasting (12): Simple exponential smoothing forecast

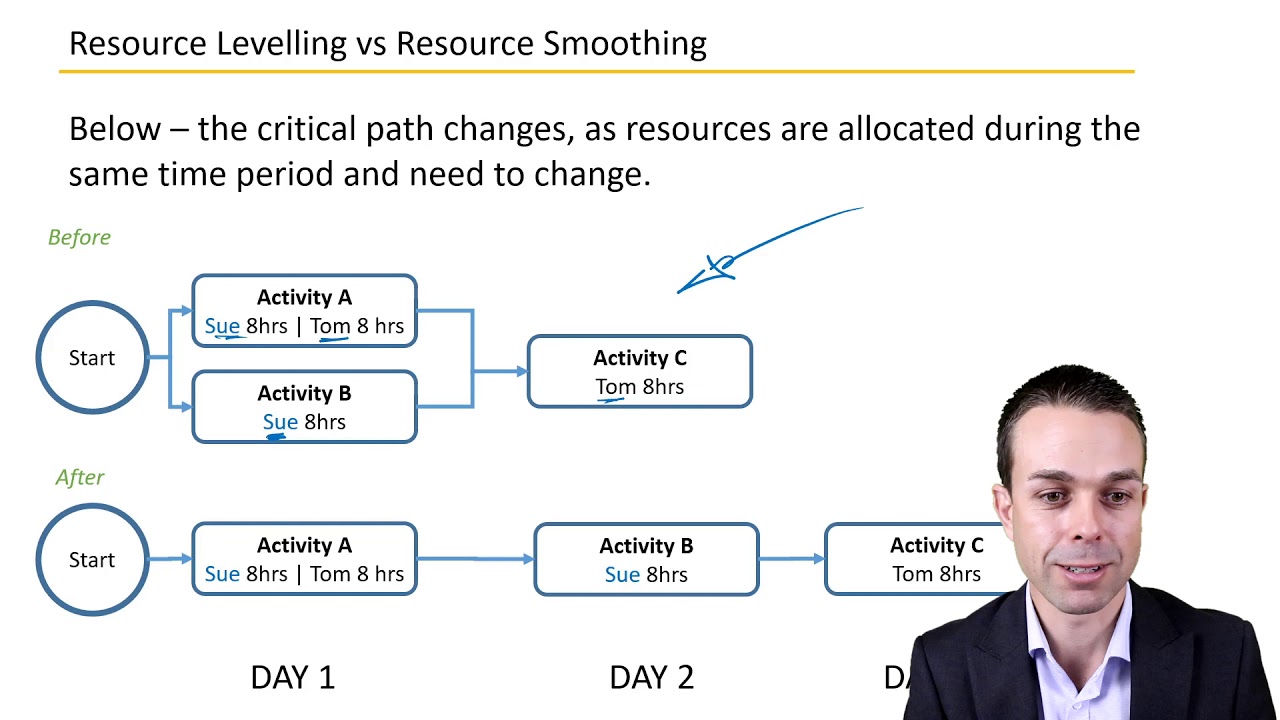

Resource Leveling versus Resource Smoothing - Key Project Management Concepts from the PMBOK

Belajar Smoothing step by step Untuk pemula #by:QQ HAIRSTYLE SALON CARUBAN

5.0 / 5 (0 votes)