Simulated Annealing

Summary

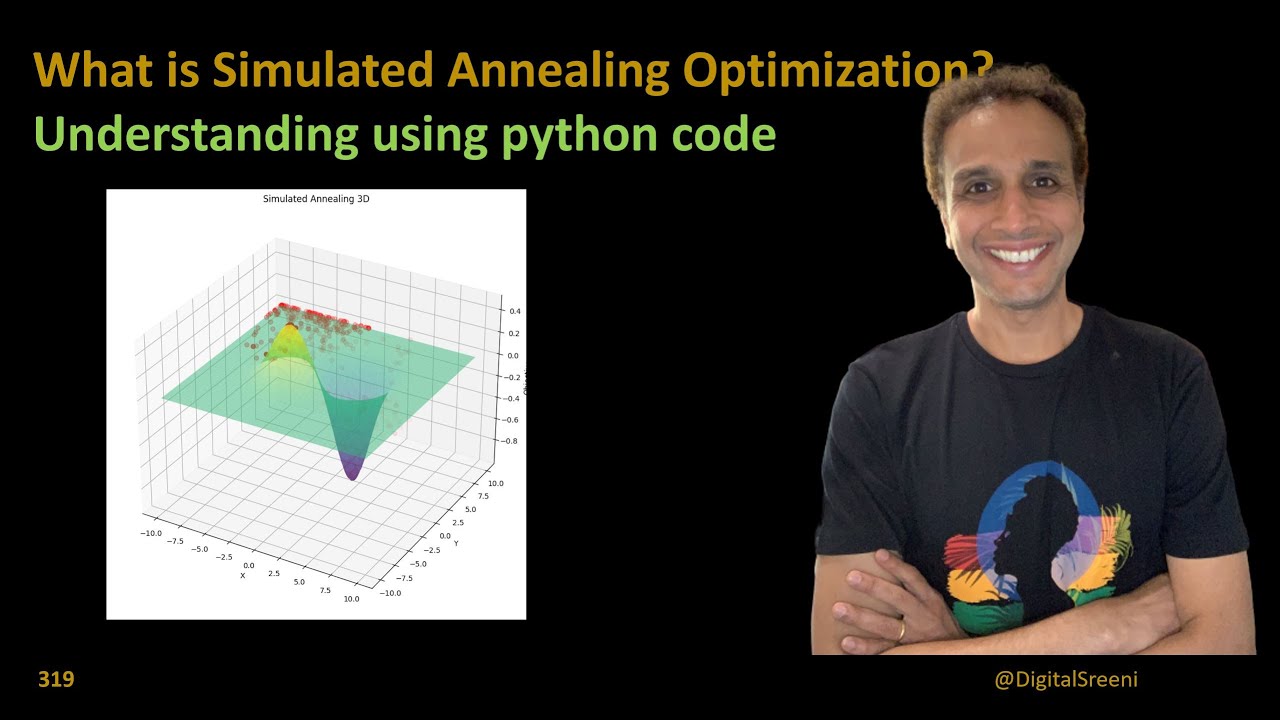

TLDR: This video explores the limitations of hill climbing in optimization and introduces simulated annealing as a remedy. The speaker explains how hill climbing gets stuck at local maxima and how simulated annealing overcomes this by sometimes accepting worse moves, mimicking the metallurgical process of heating metal and letting it cool slowly. The randomness of these decisions is controlled by a temperature that declines over time, so the search explores widely at first and then narrows toward a better solution, ideally the global maximum. The video works through the math behind the method and shows how the probability of accepting a worse move falls as the temperature declines.

Takeaways

- 🤔 Hill climbing never accepts a worse move, so it can become trapped at a local maximum.

- 🎲 Introducing controlled randomness lets the search occasionally make a worse move and so escape a local maximum.

- 🌱 Metaheuristics, often inspired by natural processes, offer search strategies that go beyond simple greedy optimization.

- 🔥 Simulated annealing is inspired by metallurgical processes, where heated metal gradually cools to a stable structure.

- 🌡️ Simulated annealing accepts worse moves more often when the temperature is high, that is, while the system is still 'hot' and volatile.

- 🔄 The algorithm computes the energy change (Delta E), the difference in objective value between the candidate move and the current state, and uses it to decide whether to accept the move.

- 📉 As temperature decreases, the probability of accepting worse moves decreases, guiding the system to a stable, optimized solution.

- 🔢 The probability of accepting a worse move is given by an exponential function, e^(Delta E / temperature), where Delta E is negative for a worse move.

- 🎯 The goal is to sometimes accept worse solutions early on to avoid getting stuck, gradually stabilizing at better solutions.

- 📊 Simulated annealing is useful for escaping local maxima to potentially find a global maximum in optimization problems.

Q & A

What is the main limitation of hill climbing as described in the video?

-The main limitation of hill climbing is that it can get stuck at a local maximum: it won't consider moves that reduce the objective function, even if those moves could eventually lead to a better overall solution.
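
The trap described above can be seen in a small sketch. The one-dimensional `landscape` list and the `hill_climb` helper are hypothetical illustrations, not taken from the video; the code assumes a state is an index into the list and its neighbors are the adjacent indices.

```python
def hill_climb(values, start):
    """Greedy hill climbing on a 1-D list of objective values."""
    i = start
    while True:
        # Neighbors are the adjacent indices that exist in the list.
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(values)]
        best = max(neighbors, key=lambda j: values[j])
        if values[best] <= values[i]:
            return i  # no neighbor improves: a (possibly local) maximum
        i = best


# Hypothetical landscape: local maximum at index 2 (value 5),
# global maximum at index 7 (value 9).
landscape = [1, 3, 5, 4, 2, 6, 8, 9, 7]

print(hill_climb(landscape, start=0))  # → 2, stuck at the local maximum
print(hill_climb(landscape, start=4))  # → 7, reaches the global maximum
```

Starting from index 0, the climber stops at index 2 because both neighbors are lower, even though a higher peak exists further along. The outcome depends entirely on the starting point.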

What is the solution proposed to overcome the limitation of hill climbing?

-The proposed solution is to introduce randomness, allowing for the possibility of taking steps that reduce the objective function temporarily, which can help escape local maxima and potentially reach a better global solution.

What is simulated annealing, and how is it related to metallurgy?

-Simulated annealing is an optimization technique inspired by the process of cooling metal in metallurgy. As the temperature of the metal decreases, the movement of molecules slows down and they settle into a more stable, crystalline structure. Similarly, simulated annealing explores freely (accepting many random moves) at high temperatures, and as the temperature decreases it becomes increasingly selective, mostly accepting only improving moves.

How does simulated annealing handle 'bad moves' in optimization?

-Simulated annealing allows for 'bad moves' (those that decrease the objective function) with a probability based on the difference in energy (Delta E) and the current temperature. At high temperatures, the probability of accepting a bad move is higher, but as the temperature decreases, this probability becomes smaller.

What is the role of temperature in simulated annealing?

-Temperature controls the likelihood of accepting worse solutions. At higher temperatures, the algorithm is more likely to accept worse solutions to explore more of the solution space. As the temperature decreases, the algorithm becomes more conservative and favors only improving moves.

How is the probability of accepting a worse move calculated in simulated annealing?

-The probability is calculated using the formula e^(Delta E / T), where Delta E is the change in energy (objective function value) and T is the current temperature. If Delta E is negative (indicating a worse move), the probability decreases as T decreases.
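
As a rough illustration of the formula, the sketch below evaluates e^(Delta E / T) for one worse move at three temperatures. The function name `acceptance_probability` and the sample numbers are assumptions chosen for the example; only the formula itself comes from the summary.

```python
import math


def acceptance_probability(delta_e, temperature):
    """Probability of accepting a move, for a maximization problem."""
    if delta_e > 0:
        return 1.0  # improving moves are always accepted
    return math.exp(delta_e / temperature)  # worse (or equal) moves


# The same worse move (Delta E = -2) at three illustrative temperatures.
print(acceptance_probability(-2, 10))   # about 0.8187
print(acceptance_probability(-2, 1))    # about 0.1353
print(acceptance_probability(-2, 0.1))  # about 2.06e-09
```

The same move that is accepted roughly four times out of five at T = 10 is almost never accepted at T = 0.1.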

What happens to the probability of accepting a worse move as temperature decreases?

-As temperature decreases, the probability of accepting a worse move also decreases. Eventually, when the temperature is very low, the probability becomes so small that the algorithm mostly rejects bad moves.
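
The summary does not say how the temperature is lowered. The sketch below assumes a geometric cooling schedule (multiplying T by a constant `alpha` at each step), which is one common choice, and prints how the acceptance probability for a fixed worse move shrinks. The helper `cooling_table` and all parameter values are hypothetical.

```python
import math


def cooling_table(delta_e, t_start, alpha, steps):
    """Return (step, temperature, probability) rows under geometric cooling."""
    rows = []
    t = t_start
    for step in range(steps):
        rows.append((step, t, math.exp(delta_e / t)))
        t *= alpha  # assumed schedule: T shrinks by a constant factor
    return rows


for step, t, p in cooling_table(delta_e=-1.0, t_start=10.0, alpha=0.5, steps=6):
    print(f"step {step}: T = {t:.4f}, P(accept worse move) = {p:.4f}")
```

Because Delta E is negative, Delta E / T becomes more negative as T falls, so each row shows a smaller probability than the one before it.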

How is randomness introduced in the simulated annealing algorithm?

-Randomness is introduced by selecting a random candidate move from the possible options at each step, rather than always taking the best available move. This lets the algorithm explore more of the solution space.

What happens if the Delta E (energy difference) is positive in simulated annealing?

-If Delta E is positive (indicating an improvement in the objective function), the move is always accepted, as it represents a step toward a better solution.

How does simulated annealing help in finding the global maximum?

-Simulated annealing helps in finding the global maximum by allowing occasional moves to worse solutions, which helps it escape local maxima. As the temperature decreases, the algorithm focuses on refining the solution, settling on the global maximum or at least a better local maximum.
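
Putting the pieces from the answers above together, a minimal sketch of the whole loop might look like the following. It is not the video's implementation: the one-dimensional `landscape`, the geometric cooling factor `alpha`, the stopping threshold `t_min`, the fixed `seed`, and the bookkeeping of the best state seen are all assumptions made for illustration.

```python
import math
import random


def simulated_annealing(values, start, t_start=10.0, alpha=0.95,
                        t_min=1e-3, seed=0):
    """Maximize over indices of a 1-D list; returns (final, best_seen)."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    current = best = start
    t = t_start
    while t > t_min:
        # Pick a random candidate among the adjacent indices.
        neighbors = [j for j in (current - 1, current + 1)
                     if 0 <= j < len(values)]
        candidate = rng.choice(neighbors)
        delta_e = values[candidate] - values[current]
        # Always accept improvements; accept worse moves
        # with probability e^(Delta E / T).
        if delta_e > 0 or rng.random() < math.exp(delta_e / t):
            current = candidate
        if values[current] > values[best]:
            best = current  # extra bookkeeping, not part of the summary
        t *= alpha  # assumed geometric cooling
    return current, best


# Hypothetical landscape: local maximum at index 2, global maximum at index 7.
landscape = [1, 3, 5, 4, 2, 6, 8, 9, 7]
final, best = simulated_annealing(landscape, start=0)
print(f"final index {final} (value {landscape[final]}), "
      f"best seen index {best} (value {landscape[best]})")
```

The result depends on the random seed and the schedule, and nothing here guarantees that the global maximum is reached. The sketch only shows how random candidates, the acceptance rule, and the falling temperature fit together.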
