API Gateway and Microservices Architecture | How Does an API Gateway Act as a Single Entry Point?

Summary

TLDR: The video discusses the critical role API Gateways play in handling high volumes of requests and how they differ from load balancers. It explains how API Gateways route requests to the appropriate microservices and perform API composition, authentication, and rate limiting. It also covers service discovery, request/response transformation, and caching. Finally, it explains how API Gateways scale across multiple regions and availability zones so that there is no single point of failure, and how DNS-based load balancing distributes traffic efficiently.

Takeaways

- 🚪 **API Gateway as a Single Entry Point**: API Gateway acts as the single entry point for all client API requests, routing them to the correct backend service based on the API endpoint.

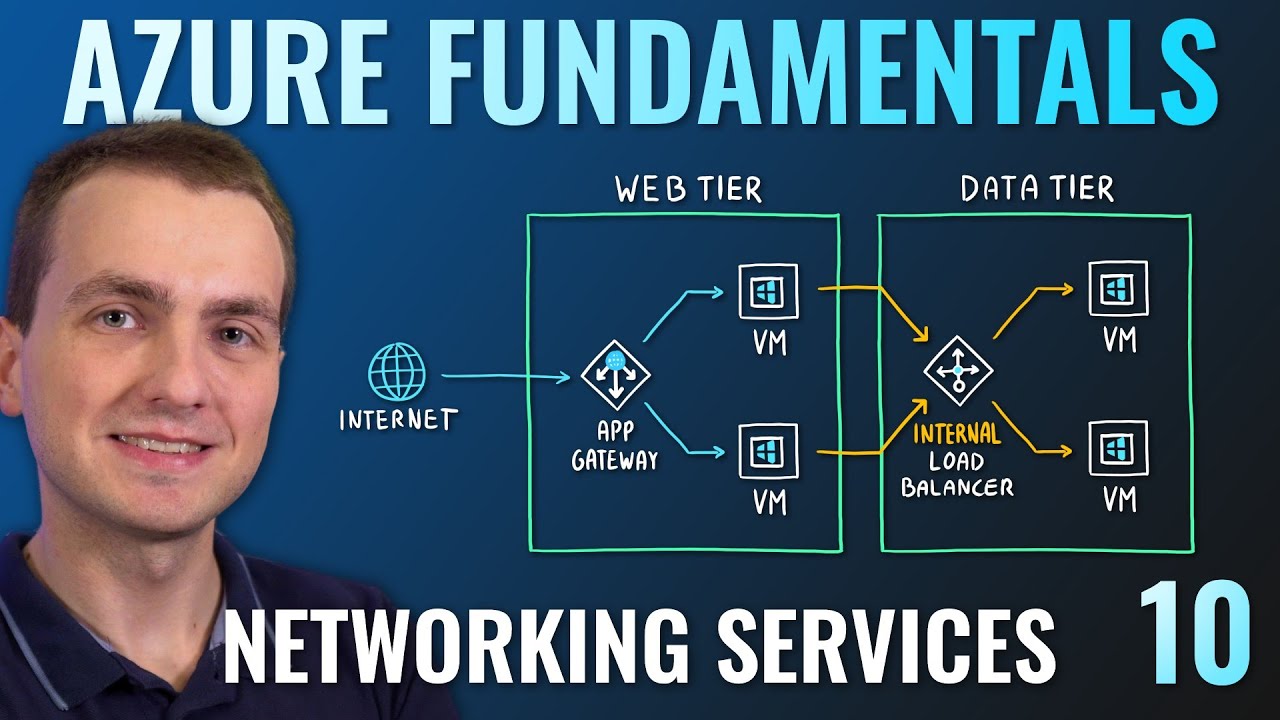

- 🔄 **Difference from Load Balancer**: Unlike a load balancer, which distributes traffic across instances of the same microservice, an API Gateway understands API requests and routes them to different microservices based on the endpoint.

- 🤖 **API Composition**: API Gateway can compose responses by calling multiple microservices and aggregating the data, reducing complexity on the client side.

- 🔐 **Authentication**: API Gateway provides authentication services, integrating with authorization servers to validate client tokens before allowing access to microservices.

- 🚦 **Rate Limiting and Throttling**: API Gateway can enforce rate limits and throttling to manage traffic and prevent abuse, ensuring fair usage and system stability.

- 🔍 **Service Discovery**: It interacts with service discovery systems to find the correct microservice instances to route requests to, as microservice locations can change due to scaling.

- 🔄 **Request/Response Transformation**: API Gateway can transform incoming requests and outgoing responses to fit the needs of the system, and it can cache responses for efficiency.

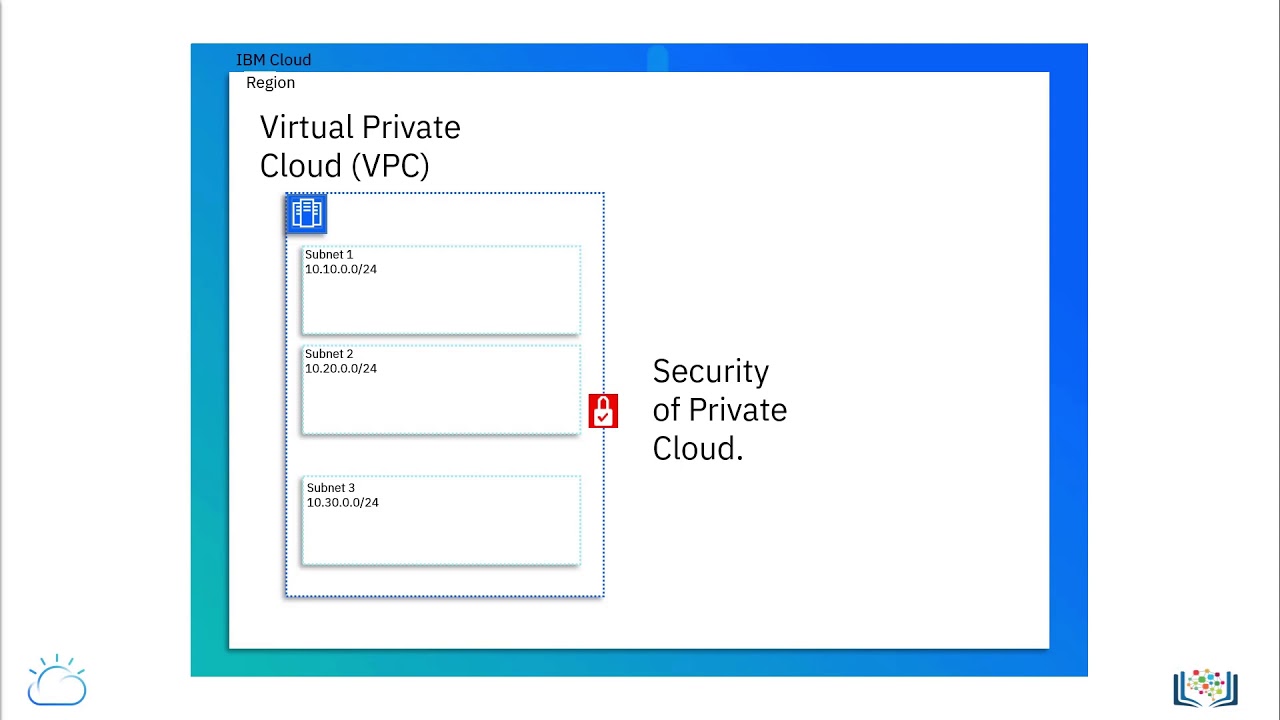

- 🌐 **Scalability Across Regions and AZs**: API Gateway scales across multiple regions and availability zones to handle high traffic and provide low latency, ensuring high availability.

- 🌐 **DNS-Based Load Balancing**: At the highest level, DNS-based load balancers distribute traffic to the appropriate regional API Gateways, considering factors like latency and compliance.

- 🛡️ **No Single Point of Failure**: The system design ensures that there are no single points of failure, with traffic able to be rerouted in case of outages in availability zones or regions.

Q & A

What is an API Gateway and how does it differ from a load balancer?

-An API Gateway is a single entry point that accepts client API requests and routes them to the correct backend service based on the API endpoint. Unlike a load balancer, which distributes traffic to multiple instances of a microservice, an API Gateway understands the API structure and makes routing decisions accordingly.
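To make the contrast concrete, here is a minimal sketch, assuming routing by longest path prefix. The service names, route table, and the `Request`, `RoundRobinLoadBalancer`, and `ApiGatewayRouter` classes are hypothetical illustrations, not any particular product's API. The load balancer never looks at the request; the gateway router chooses a different service depending on the endpoint.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    method: str
    path: str


class RoundRobinLoadBalancer:
    """Spreads requests over instances of ONE service without looking at the request."""

    def __init__(self, instances: List[str]):
        self._cycle = itertools.cycle(instances)

    def pick(self, request: Request) -> str:
        return next(self._cycle)  # the request content is ignored


class ApiGatewayRouter:
    """Picks a backend SERVICE by inspecting the endpoint; the longest matching prefix wins."""

    def __init__(self, routes: Dict[str, str]):
        # Sort prefixes longest-first so "/products/reviews" beats "/products".
        self._routes = sorted(routes.items(), key=lambda kv: len(kv[0]), reverse=True)

    def route(self, request: Request) -> str:
        for prefix, service in self._routes:
            # Match the prefix exactly or at a path-segment boundary ("/products/42", not "/productsX").
            if request.path == prefix or request.path.startswith(prefix.rstrip("/") + "/"):
                return service
        raise LookupError(f"no route for {request.method} {request.path}")
```

For example, with routes `{"/orders": "order-service", "/products": "product-service"}`, a `GET /products/42` is sent to `product-service`, while the load balancer would simply alternate between whatever instances it was given.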

What is API composition and why is it important?

-API composition is a feature of API Gateways that simplifies client requests by allowing the Gateway to call multiple microservices and aggregate the results into a single response. This reduces complexity on the client side and is frequently used in services like Netflix to tailor API responses based on the device type.

How does the authentication feature of an API Gateway work?

-The API Gateway can authenticate clients by integrating with an authorization server. Clients pass an access token obtained from the authorization server with their requests. The API Gateway validates this token, and if it's valid, the request is allowed to proceed to the microservices.
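A simplified sketch of the gateway-side check follows. It assumes a hypothetical token format (an HMAC-SHA256 signature over `client_id|expiry`, with a secret shared between the authorization server and the gateway); production systems commonly use signed JWTs or token introspection instead. `SECRET`, `issue_token`, `validate_token`, and `handle` are illustrative names only.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"demo-shared-secret"  # hypothetical key shared by the auth server and the gateway


def issue_token(client_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """What the (hypothetical) authorization server hands to a client."""
    now = time.time() if now is None else now
    payload = f"{client_id}|{int(now) + ttl_seconds}".encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(payload).decode() + "." + base64.urlsafe_b64encode(sig).decode()


def validate_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Gateway-side check: return the client id if the token is authentic and unexpired."""
    now = time.time() if now is None else now
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong shape or invalid base64
        return None
    expected = hmac.new(SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        return None
    client_id, expiry = payload.decode().rsplit("|", 1)
    if now >= int(expiry):
        return None
    return client_id


def handle(headers: dict, now: Optional[float] = None) -> int:
    """Status the gateway produces: 200 means 'forward to the microservice'."""
    auth = headers.get("Authorization", "")
    if not auth.startswith("Bearer "):
        return 401
    return 200 if validate_token(auth[len("Bearer "):], now) else 401
```

Requests whose token fails validation never reach the microservices, which is the point of centralizing this check in the gateway.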

Can you explain rate limiting and API throttling in the context of an API Gateway?

-Rate limiting is a feature that sets rules for the maximum number of requests the API Gateway will accept within a given time window; requests beyond that limit are rejected with an HTTP 429 (Too Many Requests) error. API throttling is a more granular level of control that limits the request rate for individual users or applications, blocking them once they exceed their allowed rate.
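One common way to implement both levels is a token bucket. The sketch below swaps in that technique as an assumption (the video does not name an algorithm): one bucket caps gateway-wide traffic and one bucket per client enforces throttling, and either rejection yields a 429. Class names and parameters are hypothetical; the injectable `clock` only exists to make the behavior testable.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Per-client throttling plus a gateway-wide limit; returns an HTTP status code."""

    def __init__(self, global_rate, global_burst, client_rate, client_burst, clock=time.monotonic):
        self.global_bucket = TokenBucket(global_rate, global_burst, clock)
        self.client_rate = client_rate
        self.client_burst = client_burst
        self.clock = clock
        self.clients: Dict[str, TokenBucket] = {}

    def check(self, client_id: str) -> int:
        bucket = self.clients.get(client_id)
        if bucket is None:
            bucket = self.clients[client_id] = TokenBucket(self.client_rate, self.client_burst, self.clock)
        if not bucket.allow():  # this client exceeded its own rate
            return 429
        # Simplification: a client token is spent even if the global check below rejects.
        if not self.global_bucket.allow():
            return 429
        return 200
```

With `client_rate=1` and `client_burst=2`, a client can send two requests at once, the third gets a 429, and one more request is allowed after a second has passed.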

What is service discovery and how does it interact with an API Gateway?

-Service discovery is a system that keeps track of the location (IP address and port) of microservices as they scale up or down. The API Gateway uses service discovery to find the current location of a microservice before invoking it, ensuring that the request is routed to the correct instance.
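The lookup-before-invoke step can be sketched with an in-memory registry. This is a hypothetical stand-in for a real service discovery system; `ServiceRegistry`, `DiscoveringGateway`, and the addresses are illustrative. The key point is that the gateway asks the registry at request time, so instances that were added or removed by scaling are picked up automatically.

```python
import itertools
from typing import Dict, List, Tuple

Address = Tuple[str, int]  # (IP address, port)


class ServiceRegistry:
    """In-memory stand-in for a service discovery system (hypothetical)."""

    def __init__(self):
        self._instances: Dict[str, List[Address]] = {}

    def register(self, service: str, host: str, port: int) -> None:
        addrs = self._instances.setdefault(service, [])
        if (host, port) not in addrs:
            addrs.append((host, port))

    def deregister(self, service: str, host: str, port: int) -> None:
        addrs = self._instances.get(service, [])
        if (host, port) in addrs:
            addrs.remove((host, port))

    def lookup(self, service: str) -> List[Address]:
        return list(self._instances.get(service, []))


class DiscoveringGateway:
    def __init__(self, registry: ServiceRegistry):
        self.registry = registry
        self._counters: Dict[str, "itertools.count[int]"] = {}

    def resolve(self, service: str) -> Address:
        # Ask the registry on every request: instances come and go as services scale.
        instances = self.registry.lookup(service)
        if not instances:
            raise LookupError(f"no live instances of {service}")
        n = next(self._counters.setdefault(service, itertools.count()))
        return instances[n % len(instances)]  # simple round-robin over current instances
```

When an instance deregisters (for example, after a scale-down), the next `resolve` call simply stops returning it.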

How does an API Gateway handle millions of requests per second?

-An API Gateway can handle high volumes of requests by being deployed in multiple regions and availability zones, with each region having multiple instances of the Gateway. This distributed architecture ensures that there is no single point of failure and allows the Gateway to scale horizontally to meet demand.
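Within a single region, avoiding a single point of failure comes down to having healthy Gateway instances in more than one availability zone and routing around the ones that fail. A minimal sketch, assuming a health flag per instance and a preference for the caller's own zone; the classes and zone names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GatewayInstance:
    name: str
    zone: str  # availability zone, e.g. a hypothetical "eu-west-a"
    healthy: bool = True


@dataclass
class Region:
    name: str
    instances: List[GatewayInstance] = field(default_factory=list)

    def pick(self, preferred_zone: Optional[str] = None) -> Optional[GatewayInstance]:
        healthy = [i for i in self.instances if i.healthy]
        if not healthy:
            # The whole region is down; the DNS layer should steer traffic to another region.
            return None
        # Prefer an instance in the caller's zone, else fail over to any healthy zone.
        local = [i for i in healthy if i.zone == preferred_zone]
        return (local or healthy)[0]
```

Scaling horizontally here just means appending more `GatewayInstance` entries; losing one zone leaves the others serving traffic.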

What is the role of DNS in distributing traffic to different API Gateways?

-DNS plays a crucial role in load balancing at the domain name level. Services like AWS Route 53 or Azure Traffic Manager act as DNS-based load balancers, distributing traffic to the appropriate API Gateway based on factors like latency, compliance, and geographical location.
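The decision a latency-based DNS policy makes can be sketched as follows. This only illustrates the policy; it does not use the Route 53 or Traffic Manager APIs. It assumes the resolver already knows the client's measured latency to each region and, for compliance, an optional set of regions the client's data may be served from. Names and hostnames are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class RegionalGateway:
    region: str
    endpoint: str  # hostname the DNS answer points to (hypothetical)
    healthy: bool = True


def resolve(
    client_latency_ms: Dict[str, float],
    gateways: List[RegionalGateway],
    allowed_regions: Optional[Set[str]] = None,
) -> Optional[str]:
    """Return the endpoint of the healthy, compliant region with the lowest latency."""
    candidates = [
        g for g in gateways
        if g.healthy
        and g.region in client_latency_ms
        and (allowed_regions is None or g.region in allowed_regions)
    ]
    if not candidates:
        return None  # no compliant region is available
    best = min(candidates, key=lambda g: client_latency_ms[g.region])
    return best.endpoint
```

If the lowest-latency region is marked unhealthy, the same function naturally answers with the next-best region, which is how a regional outage is routed around.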

How does the API Gateway decide which microservice to route a request to?

-The API Gateway decides which microservice to route a request to based on the API endpoint. It examines the structure of the incoming API request and uses this information to determine the appropriate backend service to handle the request.

What are the benefits of using an API Gateway over a traditional load balancer?

-API Gateways offer intelligent routing based on API structure, support for API composition to aggregate data from multiple services, authentication, rate limiting, and service discovery. These features provide more flexibility and control over API traffic compared to traditional load balancers, which only distribute traffic without understanding the API context.

Can you provide an example of how API composition might work in a real-world scenario?

-In an e-commerce platform, when a user requests their order history, the API Gateway can compose a response by calling the product and invoice microservices to fetch the relevant details. For a request from a mobile device, it might return only product and invoice details, while a request from a PC might also include ratings, reviews, and recommendations, all aggregated into a single response by the API Gateway.
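The order-history example can be sketched with `asyncio`: the gateway picks the set of backends from the device type, calls them concurrently, and merges the results. The service functions below are hypothetical stand-ins returning fixed data (a real gateway would make HTTP or gRPC calls), and the device-to-services mapping is an assumed policy.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

Service = Callable[[str], Awaitable[Dict[str, Any]]]


# Hypothetical microservice stand-ins.
async def product_service(order_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"product": "Laptop", "price": 999}


async def invoice_service(order_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"invoice_id": f"INV-{order_id}", "paid": True}


async def review_service(order_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"rating": 4.5, "reviews": 128}


async def recommendation_service(order_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"recommended": ["Mouse", "Laptop bag"]}


# Assumed policy: which backends each device type receives data from.
DEVICE_PROFILES: Dict[str, List[Service]] = {
    "mobile": [product_service, invoice_service],
    "pc": [product_service, invoice_service, review_service, recommendation_service],
}


async def order_details(order_id: str, device: str) -> Dict[str, Any]:
    calls = DEVICE_PROFILES.get(device, DEVICE_PROFILES["mobile"])
    # Fan out to the selected services concurrently, then merge into one response.
    parts = await asyncio.gather(*(call(order_id) for call in calls))
    response: Dict[str, Any] = {"order_id": order_id}
    for part in parts:
        response.update(part)
    return response
```

Calling `asyncio.run(order_details("1001", "mobile"))` yields one dictionary with product and invoice fields only; passing `"pc"` adds the rating and recommendation fields, and the client still makes a single request.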

What are some other capabilities of API Gateways mentioned in the script?

-Besides routing, API composition, authentication, rate limiting, and service discovery, API Gateways can also perform request and response transformation, caching of responses to reduce load, and logging for monitoring and debugging purposes.
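Transformation and caching can be combined in one small wrapper around a backend call. The sketch assumes two hypothetical conventions: the backend expects a `/v2` path prefix (request transformation), and fields starting with `_` are internal and must be stripped (response transformation). Responses are cached per path for a fixed TTL; `CachingGateway` and its parameters are illustrative names.

```python
import time
from typing import Callable, Dict, Tuple

Response = Dict[str, object]


class CachingGateway:
    def __init__(self, backend: Callable[[str], Response], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.backend = backend
        self.ttl = ttl_seconds
        self.clock = clock
        self._cache: Dict[str, Tuple[float, Response]] = {}
        self.backend_calls = 0  # counts how often the backend was actually hit

    @staticmethod
    def transform_request(path: str) -> str:
        # Hypothetical convention: the backend serves its API under /v2.
        return "/v2" + path

    @staticmethod
    def transform_response(body: Response) -> Response:
        # Hypothetical convention: keys starting with "_" are internal-only.
        return {k: v for k, v in body.items() if not k.startswith("_")}

    def get(self, path: str) -> Response:
        now = self.clock()
        hit = self._cache.get(path)
        if hit is not None and now - hit[0] < self.ttl:
            return dict(hit[1])  # cache hit: the backend is not called
        self.backend_calls += 1
        body = self.transform_response(self.backend(self.transform_request(path)))
        self._cache[path] = (now, body)
        return dict(body)
```

Repeated reads within the TTL are served from the gateway, which is how caching reduces load on the microservices; after the TTL expires, the next request goes to the backend again.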
