The AI Dilemma: Navigating the road ahead with Tristan Harris

Summary

TLDR: The speaker discusses the challenges and risks posed by AI, emphasizing the need for governance to keep pace with technological advancements. The talk highlights the complexity AI introduces, comparing it to social media's impact, and warns of the dangers of AI-driven misinformation, fraud, and societal issues. The speaker advocates for a balance between AI's benefits and risks, urging better governance, safety measures, and responsible deployment. They propose using AI to improve governance itself, so that society can effectively manage the technology's rapid evolution.

Takeaways

- 🧠 The script discusses the profound impact of AI, likening it to giving humans 'superpowers' and amplifying our capabilities exponentially.

- 🤖 It highlights the work of the Center for Humane Technology, focusing on designing technology that strengthens social fabric rather than undermining it.

- 🌐 The speaker emphasizes the importance of understanding AI risks to steer towards a positive future, acknowledging the complexity of modern issues like social media's impact on society.

- 📈 The script points out the 'race to the bottom of the brain stem' for attention, illustrating the incentive-driven design of social media platforms that can lead to negative societal outcomes.

- 🏁 The script mentions the film 'The Social Dilemma', which explores the unintended consequences of social media and serves as a cautionary example of AI's potential dangers.

- 🔑 The incentives behind social media are identified as a key driver of its negative impacts, with a focus on engagement over societal well-being.

- 🌐 The script raises concerns about the rapid development of AI and its mismatch with 20th-century governance structures, calling for an upgrade in governance to match technological advancements.

- 🚀 It discusses the 'race to roll out' AI, where market dominance drives the release of AI models, potentially overlooking safety and inclusivity.

- 🔮 The dangers of generative AI are exemplified, such as the creation of deepfakes and the potential for misuse in various sectors, including politics and journalism.

- 🛡 The speaker calls for a reevaluation of incentives and governance related to AI deployment, suggesting measures like safety requirements and developer liability for AI models.

- 🌟 Finally, the script suggests leveraging 21st-century technology to upgrade governance processes, aiming to create a future where AI benefits are realized without compromising societal values.

Q & A

What is the main focus of the speaker's presentation?

- The speaker's presentation focuses on the dilemma of AI: how AI amplifies human capabilities, the challenges it poses to society and governance, and the ethical considerations surrounding its development and deployment.

What is the 'AI Dilemma' as mentioned in the script?

- The 'AI Dilemma' refers to the paradox where AI, while offering significant benefits, also introduces complex challenges and risks that society must navigate carefully to ensure a positive future.

What is the Center for Humane Technology?

- The Center for Humane Technology is the organization the speaker represents. It is dedicated to considering how technology can be designed to be humane and beneficial to the systems that humans depend on.

Why is the speaker concerned about the current trajectory of AI development?

- The speaker is concerned because the rapid development of AI is outpacing our ability to govern and understand its implications, leading to a complexity gap that could result in negative consequences if not addressed properly.

What role does social media play in the speaker's discussion?

- Social media is presented as the first contact between humanity and a form of runaway AI. The societal issues it has caused, such as addiction, misinformation, and mental health problems, serve as a warning about the potential risks of AI.

What does the speaker mean by 'race to the bottom of the brain stem'?

- This phrase describes the competition among social media platforms to capture users' attention by any means necessary, even if it involves exploiting the most primitive parts of the human brain.

What is 'The Social Dilemma' documentary, and why is it relevant to the speaker's discussion?

- 'The Social Dilemma' is a documentary that explores the negative impacts of social media on society. It is relevant to the speaker's discussion because it exemplifies the unintended consequences of AI-driven platforms.

What is the 'race to roll out' and how does it relate to AI development?

- The 'race to roll out' refers to the competition among AI developers to release new models and achieve market dominance, often at the expense of safety and ethical considerations.

What is the concern with generative AI and its potential misuse?

- Generative AI can be misused to create deepfakes, spread misinformation, and manipulate public opinion, which poses significant risks to society if not properly regulated and controlled.

What solutions does the speaker propose to address the challenges posed by AI?

- The speaker suggests investing in safety research, aligning incentives with responsible AI deployment, and using technology to upgrade governance processes to match the pace of technological advancement.

What is the 'upgrade governance plan' mentioned by the speaker?

- The 'upgrade governance plan' is a proposal to invest in governance mechanisms that keep pace with technological advancements, ensuring that regulations and safety measures evolve alongside AI capabilities.

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

Media Policy & You: Crash Course Media Literacy #9

[FULL] Dialog - Gempuran AI Jadi Peluang atau Ancaman Pendidikan? [Selamat Pagi Indonesia]

Navigating the Future: AI, Public Thinking, Global Challenges: #Futurist Ufuk Tarhan | Gerd Leonhard

The Urgent Risks of Runaway AI — and What to Do about Them | Gary Marcus | TED

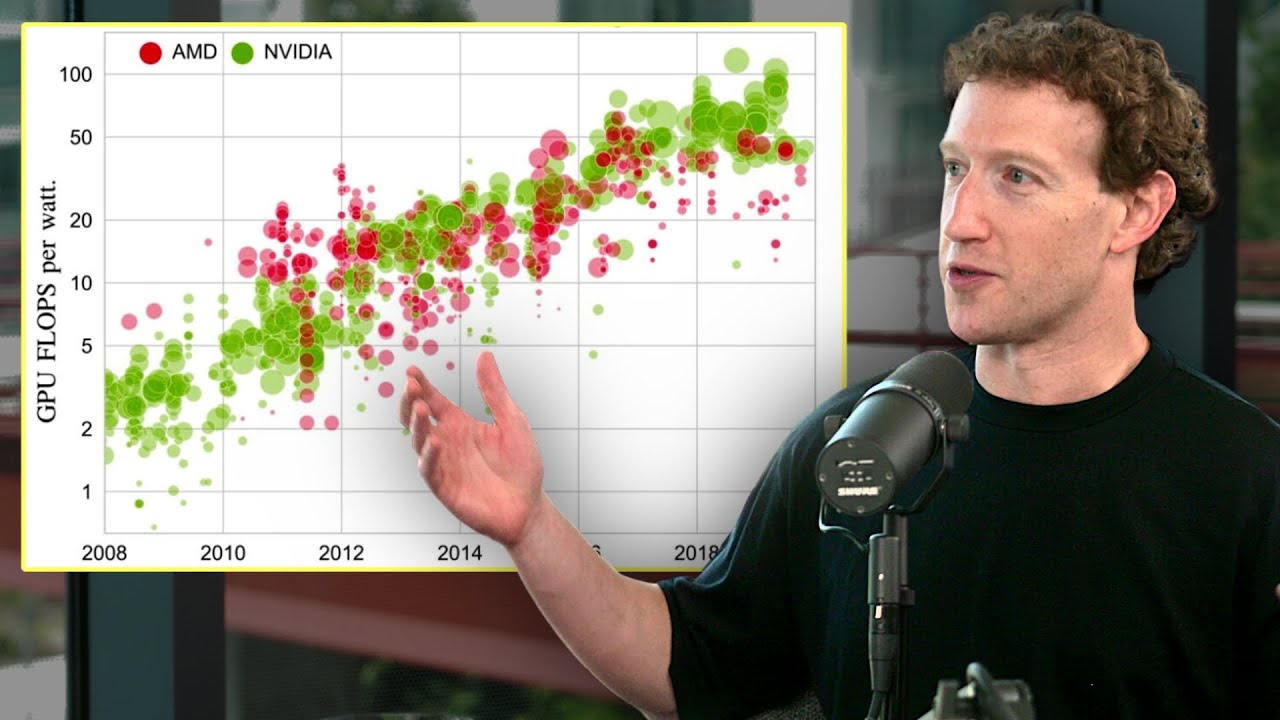

Energy, not compute, will be the #1 bottleneck to AI progress – Mark Zuckerberg

AI시대, 미래학자가 연구한 미래 직업과 필수 역량 | 자녀교육 취준생 진로 은퇴후 삶

5.0 / 5 (0 votes)