Template Models: Hidden Markov Models - Stanford University

Summary

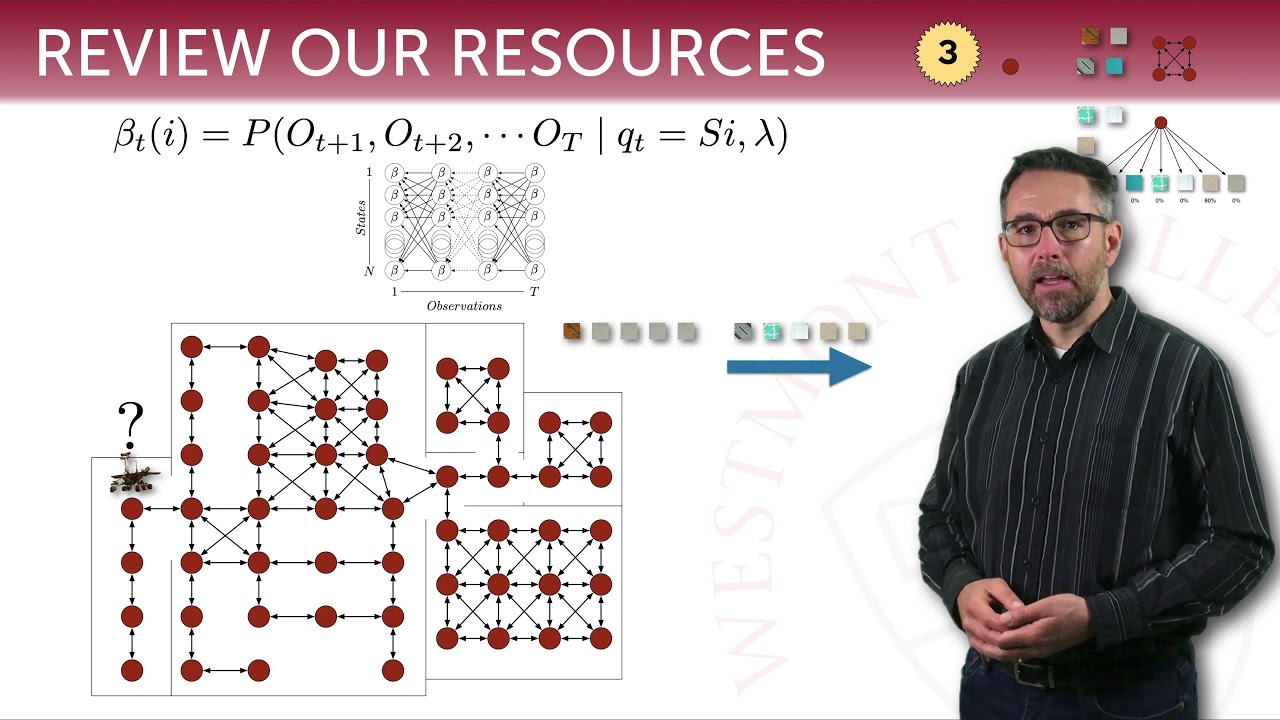

TL;DR: Hidden Markov Models (HMMs) are a subclass of dynamic Bayesian networks used to model sequences in applications such as robot localization and speech recognition. An HMM consists of a transition model, which defines how the system moves between states, and an observation model, which defines how observations depend on the current state. Although the overall structure is simple, the transition model often has significant internal structure, typically in the form of sparsity. With applications ranging from biological sequence analysis to language processing, HMMs are widely used to understand and predict sequential data.

Takeaways

- Hidden Markov Models (HMMs) are a subclass of dynamic Bayesian networks, widely used in applications such as robot localization, speech recognition, and biological sequence analysis.

- An HMM has two main components: a transition model, which gives the probability of moving from one state to another, and an observation model, which gives the probability of an observation given the current state.

- Although the high-level structure of an HMM is simple, the transition model often has rich internal structure, particularly sparsity: many state-to-state transitions have probability zero.

- In robot localization, the hidden state is the robot's pose (position and orientation) over time; control signals and observations are used to refine the estimate of where the robot is.

- In speech recognition, HMMs model spoken sentences by decomposing the acoustic signal into smaller units such as phonemes, which makes it possible to identify words and their boundaries.

- A phonetic alphabet is used to break words into phonemes, the basic units of speech; each phoneme is modeled with its own small transition model covering its beginning, middle, and end phases.

- For continuous speech recognition, the model is layered: states for each word expand into states for each phoneme, which in turn generate the individual acoustic signals. Decoding this structure turns spoken language into written text.

- HMMs are valuable wherever sequences need to be modeled; their probabilistic structure supports reasoning about hidden states from observed data.

- Sparsity in the transition model (e.g., the robot's movement model) reflects which transitions are actually possible: each state can move only to a small set of other states.

- Using probabilistic inference, an HMM-based speech recognizer can output the most likely sequence of words given the observed acoustic signal.

- HMMs are used for sequence modeling not only in speech but also in fields such as biological sequence analysis and natural language processing, making them one of the most widely used forms of probabilistic graphical models.
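
The "most likely sequence" inference mentioned above is commonly computed with the Viterbi algorithm. The summary does not name the algorithm, so treat the following as a minimal sketch under that assumption. The two states, two observation symbols, and all probabilities are hypothetical toy values, not taken from the lecture.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) of the most likely hidden state sequence."""
    # best[s] = (probability of the best path ending in s, that path)
    best = {s: (start_p[s] * emit_p[s][observations[0]], [s]) for s in states}
    for obs in observations[1:]:
        new_best = {}
        for s in states:
            # Pick the predecessor that maximizes the path probability into s.
            prob, path = max(
                ((best[prev][0] * trans_p[prev][s], best[prev][1]) for prev in states),
                key=lambda item: item[0],
            )
            new_best[s] = (prob * emit_p[s][obs], path + [s])
        best = new_best
    return max(best.values(), key=lambda item: item[0])


# Hypothetical toy model: two hidden states, two observation symbols.
states = ["A", "B"]
start_p = {"A": 0.6, "B": 0.4}
trans_p = {"A": {"A": 0.7, "B": 0.3}, "B": {"A": 0.4, "B": 0.6}}
emit_p = {"A": {"x": 0.9, "y": 0.1}, "B": {"x": 0.2, "y": 0.8}}

prob, path = viterbi(["x", "x", "y"], states, start_p, trans_p, emit_p)
print(path, prob)
```

In a speech recognizer the hidden states would be word and phoneme states and the observations acoustic features; practical implementations also work in log space to avoid numerical underflow on long sequences.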

Q & A

What is a Hidden Markov Model (HMM)?

A Hidden Markov Model (HMM) is a probabilistic model of a system whose state is hidden and must be inferred from observations. It has two key components: a transition model, which describes how the system moves from one state to the next, and an observation model, which gives the probability of observing specific data given the current state.
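
As a worked equation, the two components combine into a single joint distribution. The notation here is an assumption, not taken from the source: $S_t$ is the hidden state at time $t$, $O_t$ is the observation at time $t$, and $P(S_0)$ is an initial state distribution. The factorization assumes the usual first-order Markov property and that each observation depends only on the current state.

$$
P(S_0, \dots, S_T, O_1, \dots, O_T) = P(S_0) \prod_{t=1}^{T} P(S_t \mid S_{t-1}) \, P(O_t \mid S_t)
$$

The factor $P(S_t \mid S_{t-1})$ is the transition model and $P(O_t \mid S_t)$ is the observation model.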

How does the structure of an HMM manifest in real-world applications?

In real-world applications, HMMs often have significant internal structure, especially in the transition model, which defines the probability of moving between states. This structure allows HMMs to be used effectively in fields such as robot localization, speech recognition, and biological sequence analysis.

How does the transition model in an HMM work?

The transition model in an HMM defines the probabilities of moving from one state to another over time. For example, if the system is in state S1, the transition model specifies the probability of moving to state S2 and the probability of staying in S1; the probabilities of all transitions out of S1 sum to 1.
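
A transition model of this kind can be sketched as a table with one row per source state. The state names follow the S1/S2 example above, but the probabilities and helper names are illustrative assumptions.

```python
# Hypothetical two-state transition model; the numbers are illustrative only.
transition = {
    "S1": {"S1": 0.7, "S2": 0.3},
    "S2": {"S1": 0.4, "S2": 0.6},
}


def is_valid_transition_model(model, tol=1e-9):
    """Check that each row is non-negative and sums to 1."""
    for row in model.values():
        if any(p < 0 for p in row.values()):
            return False
        if abs(sum(row.values()) - 1.0) > tol:
            return False
    return True


def step_distribution(belief, model):
    """Push a distribution over states one time step forward."""
    nxt = {s: 0.0 for s in model}
    for source, p_source in belief.items():
        for target, p in model[source].items():
            nxt[target] += p_source * p
    return nxt


valid = is_valid_transition_model(transition)
# Start in S1 with certainty and advance one step.
after_one_step = step_distribution({"S1": 1.0, "S2": 0.0}, transition)
print(valid, after_one_step)
```

Starting from certainty in S1, one step simply reads off the S1 row of the table.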

What role does the observation model play in an HMM?

The observation model in an HMM gives the probability of observing specific data given the current state. It tells us how probable a particular observation is, such as an acoustic signal in speech recognition or a sensor reading in robot localization, given the system's state.

What is the importance of sparsity in the transition model of an HMM?

Sparsity in the transition model means that most states cannot be reached directly from a given state: many transition probabilities are zero. In robot localization, for example, a robot can typically reach only nearby positions in one time step, so transitions to distant positions are impossible or highly unlikely. This makes the model both more compact and more realistic.
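
To make the effect of sparsity concrete, here is a small sketch that stores only the nonzero transitions. The one-dimensional corridor, the `p_move` parameter, and the function name are hypothetical, chosen only to illustrate the idea.

```python
def corridor_transitions(n_cells, p_move=0.8):
    """Sparse transition model for a hypothetical 1-D corridor.

    From each cell the robot either advances one cell (p_move) or stays put;
    the last cell is absorbing. Only nonzero entries are stored.
    """
    model = {}
    for cell in range(n_cells):
        if cell == n_cells - 1:
            model[cell] = {cell: 1.0}
        else:
            model[cell] = {cell: 1.0 - p_move, cell + 1: p_move}
    return model


model = corridor_transitions(100)
stored = sum(len(row) for row in model.values())  # nonzero entries kept
dense = 100 * 100  # entries in a full state-by-state table
print(stored, dense)
```

With 100 cells the sparse form keeps 199 entries instead of 10,000, and inference only needs to loop over those stored entries.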

How are HMMs applied in robot localization?

In robot localization, an HMM tracks the robot's position on a map. The state variable represents the robot's pose (position and orientation), and the observation model relates that pose to what the robot senses, such as landmarks. The transition model defines how the pose changes over time, typically as a function of control signals.
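
The tracking described here can be sketched as repeated filtering updates: predict with the transition model, weight by the observation model, then renormalize. Everything specific below is an assumption made for illustration: a four-cell corridor, a fixed "move right" control, a door sensor, and all of the probabilities.

```python
def filter_step(belief, transition, likelihood):
    """One HMM filtering update: predict, weight by likelihood, renormalize."""
    n = len(belief)
    predicted = [0.0] * n
    for src, p_src in enumerate(belief):
        for dst, p in transition[src].items():
            predicted[dst] += p_src * p
    weighted = [predicted[i] * likelihood[i] for i in range(n)]
    total = sum(weighted)
    return [w / total for w in weighted]


# Hypothetical 4-cell corridor; the robot tries to move right each step.
transition = {
    0: {0: 0.2, 1: 0.8},
    1: {1: 0.2, 2: 0.8},
    2: {2: 0.2, 3: 0.8},
    3: {3: 1.0},
}
# Cells 0 and 2 have a door. P(sensor reads "door" | cell):
p_door = [0.9, 0.1, 0.9, 0.1]
p_wall = [1.0 - p for p in p_door]

belief = [0.25, 0.25, 0.25, 0.25]  # uniform prior: position unknown
for reading in ["door", "wall", "door"]:
    likelihood = p_door if reading == "door" else p_wall
    belief = filter_step(belief, transition, likelihood)

most_likely_cell = max(range(len(belief)), key=lambda i: belief[i])
print(most_likely_cell, belief)
```

A real localization system would use a two- or three-dimensional pose and a transition model that depends on the actual control signal at each step; the update rule keeps the same shape.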

What is the key challenge in speech recognition using HMMs?

The key challenge in speech recognition using HMMs is identifying the boundaries between words and mapping the observed acoustic signal to phonemes, the smallest units of sound. This involves handling noisy acoustic signals and determining the most likely sequence of words.

What are phonemes, and why are they important in speech recognition?

Phonemes are the smallest units of sound that distinguish one word from another in a language. In speech recognition, phonemes are crucial because they allow the system to break continuous speech into manageable parts, enabling the identification of words and sentences.

How does an HMM represent the process of recognizing a word in speech recognition?

In speech recognition, an HMM represents a word as a sequence of phonemes. Each phoneme is modeled by a short series of states (beginning, middle, end), and the acoustic signal observed at each point in time depends on which of these states the model is in.
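
The word-to-phoneme-to-state expansion can be sketched as a left-to-right chain. The phonetic spelling, the state naming scheme, and the `p_stay` value are hypothetical; a real recognizer would take pronunciations from a dictionary and learn the probabilities from data.

```python
def word_hmm_states(phonemes, phases=("beg", "mid", "end")):
    """Expand a phoneme sequence into a left-to-right chain of HMM states.

    The position index keeps names unique when a phoneme repeats in a word.
    """
    return [f"{ph}{i}_{phase}" for i, ph in enumerate(phonemes) for phase in phases]


def left_to_right_transitions(states, p_stay=0.5):
    """Each state either repeats (self-loop) or advances to the next state."""
    model = {}
    for i, state in enumerate(states):
        if i == len(states) - 1:
            model[state] = {state: 1.0}  # final state absorbs in this sketch
        else:
            model[state] = {state: p_stay, states[i + 1]: 1.0 - p_stay}
    return model


# Hypothetical phonetic spelling of the word "cat".
states = word_hmm_states(["k", "ae", "t"])
model = left_to_right_transitions(states)
print(states)
```

The self-loops let each phase of a phoneme last a variable number of time steps, which is how the same word model can cover fast and slow speech.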

What makes HMMs suitable for sequence modeling in biological applications?

HMMs are well suited to biological sequence modeling because they handle sequential data such as DNA sequences, where different positions can play different roles (e.g., functional vs. non-functional regions). With hidden states representing these roles, the transition and observation models can be used to identify patterns and annotate sequences.
