Hidden Dynamics T1 Video 1

Summary

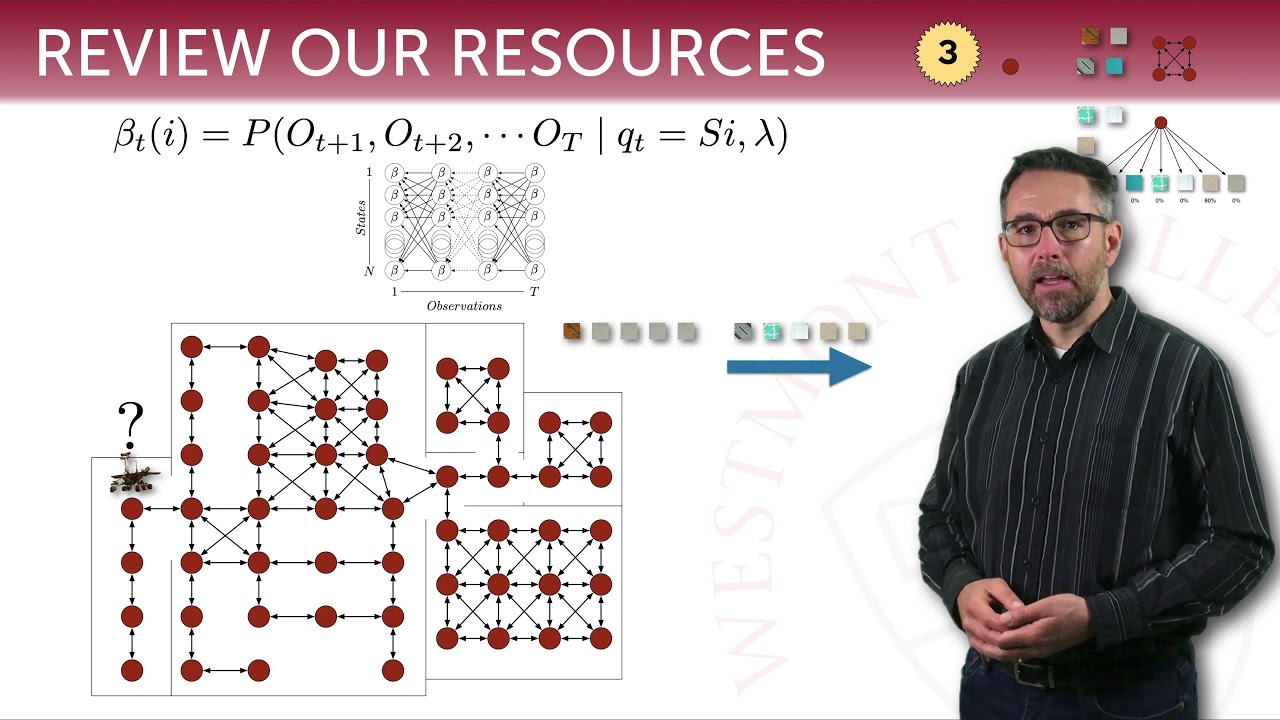

TLDR: This tutorial delves into Hidden Markov Models (HMMs) and explains their use in dynamical systems whose states cannot be observed directly. HMMs combine Markov models with Bayesian inference to update knowledge about hidden states from indirect measurements. The tutorial covers applications including brain dynamics, motor and sensory systems, and brain-computer interfaces. It introduces HMMs with both binary and continuous latent variables and explains their role in different scenarios, such as inferring where fish are from your fishing results. It then moves on to Kalman filters and linear dynamical systems.

Takeaways

- 😀 Markov Models are dynamical systems where the future depends only on the present state, not the past. Physics itself is Markovian.

- 😀 Hidden Markov Models (HMMs) are useful for describing tasks where the underlying states are hidden, and only indirect evidence is observable.

- 😀 The core idea of HMMs is that the brain must infer hidden states of the world from observed sensory data and dynamic models.

- 😀 An HMM takes a Markov model, which on its own assumes the state is fully observed, and combines it with Bayesian inference to update knowledge about the hidden state as new evidence arrives.

- 😀 HMMs can be applied to a variety of tasks, including understanding brain dynamics, predicting motor actions, and creating brain-computer interfaces.

- 😀 For 'what' questions, HMMs model brain states, such as being awake or asleep, or the behavior of sensory and motor systems.

- 😀 'How' models use HMMs to study mechanisms such as ion channels, whose states are hard to observe directly but can be inferred from other measurements.

- 😀 'Why' models use HMMs to understand why animals infer particular objects or events from their sensory data.

- 😀 Two types of HMMs are introduced: one for binary latent variables and one for continuous latent variables.

- 😀 The fishing example is used to build intuition for HMMs, where you infer the location of fish based on your fishing success over time.

Q & A

What is the main focus of the tutorials in the transcript?

-The tutorials focus on 'Hidden Dynamics,' specifically using Hidden Markov Models (HMMs) to understand and infer hidden states in dynamical systems.

What is the difference between a Markov Model and a Hidden Markov Model?

-A Markov Model describes a system whose next state depends only on its current state, and that state is fully observable. In a Hidden Markov Model (HMM), the states cannot be observed directly and must be inferred from indirect measurements.
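To make the distinction concrete, here is a minimal Python sketch (assuming NumPy) that samples a two-state HMM. The helper `sample_hmm` and its parameters `p_switch` and `p_obs_given_state` are hypothetical names chosen for illustration, not code from the tutorial. The hidden state follows a Markov chain, and each observation depends only on the current hidden state.

```python
import numpy as np

def sample_hmm(T, p_switch, p_obs_given_state, seed=0):
    """Sample hidden states and observations from a two-state HMM (hypothetical helper).

    T:                 number of time steps
    p_switch:          probability that the hidden state flips at each step
    p_obs_given_state: P(observation = 1 | state) for state 0 and state 1
    """
    rng = np.random.default_rng(seed)
    states = np.empty(T, dtype=int)
    obs = np.empty(T, dtype=int)
    states[0] = rng.integers(2)  # uniform random initial state
    for t in range(T):
        if t > 0:
            # Markov property: the next state depends only on the current one
            flip = rng.random() < p_switch
            states[t] = 1 - states[t - 1] if flip else states[t - 1]
        # Emission: the observation depends only on the current hidden state
        obs[t] = int(rng.random() < p_obs_given_state[states[t]])
    return states, obs

states, obs = sample_hmm(T=20, p_switch=0.1, p_obs_given_state=(0.2, 0.8))
# A fully observed Markov model would give us `states` directly;
# in an HMM only `obs` is available, and `states` must be inferred.
print(obs)
```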

How does a Hidden Markov Model combine Markov dynamics with Bayesian inference?

-The HMM uses a Markov Model to describe the hidden dynamics of the system and Bayesian inference to update probabilities about the hidden states based on observed evidence.
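The predict-then-update cycle described above can be sketched for a discrete HMM as follows. This is an illustrative version of standard forward filtering, assuming NumPy; `forward_filter`, `T_mat`, and `likelihood` are hypothetical names, not the tutorial's code, and the numbers in the example are made up.

```python
import numpy as np

def forward_filter(obs, T_mat, likelihood, prior):
    """Recursive Bayesian filtering for a discrete HMM (illustrative sketch).

    obs:              sequence of observation indices m_1, ..., m_T
    T_mat[i, j]:      P(s_t = j | s_{t-1} = i), the Markov dynamics
    likelihood[j, k]: P(m_t = k | s_t = j), the measurement model
    prior:            P(s_0), the belief before any observation
    Returns an array whose row t is the posterior P(s_t | m_1, ..., m_t).
    """
    belief = np.asarray(prior, dtype=float)
    posteriors = []
    for m in obs:
        predicted = belief @ T_mat             # predict: propagate belief through the dynamics
        unnorm = predicted * likelihood[:, m]  # update: weight by the likelihood of the new evidence
        belief = unnorm / unnorm.sum()         # normalize (the Bayes' rule denominator)
        posteriors.append(belief)
    return np.array(posteriors)

# Hypothetical two-state example: states switch with probability 0.1,
# and each state produces "its own" observation 80% of the time.
T_mat = np.array([[0.9, 0.1], [0.1, 0.9]])
likelihood = np.array([[0.8, 0.2], [0.2, 0.8]])
post = forward_filter([1, 1, 1], T_mat, likelihood, prior=[0.5, 0.5])
print(post[:, 1])  # belief in state 1 grows with each observation of 1
```

The first posterior is [0.2, 0.8]: the uniform prior is unchanged by the symmetric dynamics, and Bayes' rule then weights it by the likelihoods 0.2 and 0.8.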

What types of questions can HMMs help answer?

-HMMs can address 'what' questions (e.g., brain states, intended actions), 'how' questions (e.g., hidden mechanisms like ion channels or synaptic plasticity), and 'why' questions (e.g., understanding how animals infer and interpret the world).

What are the two main categories of HMMs discussed in the tutorials?

-The tutorials cover HMMs with binary latent variables and those with continuous latent variables, such as linear dynamical systems with Gaussian variability.
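One common way to write a linear dynamical system with Gaussian variability is shown below. The symbols are assumed notation and may differ from the tutorial's: $s_t$ is the continuous hidden state, $m_t$ the measurement, $D$ and $H$ the dynamics and measurement matrices, and $Q$ and $R$ the process and measurement noise covariances.

$$
s_t = D\,s_{t-1} + w_t, \qquad w_t \sim \mathcal{N}(0, Q)
$$

$$
m_t = H\,s_t + v_t, \qquad v_t \sim \mathcal{N}(0, R)
$$

Because both equations are linear and the noise is Gaussian, the belief about $s_t$ stays Gaussian over time. This is what makes exact inference tractable in this case.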

What is the Sequential Probability Ratio Test in the context of HMMs?

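-The Sequential Probability Ratio Test (SPRT) is introduced for the case where the binary latent variable is fixed, that is, it does not change over time. Evidence is accumulated sequentially as a running log-likelihood ratio between the two hypotheses about the hidden state, and a decision is made once that ratio crosses an upper or a lower threshold.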
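Below is a minimal sketch of Wald's SPRT, assuming binary (Bernoulli) observations such as catch or no catch. The tutorial may use a different observation model, such as Gaussian measurements. The function name, the catch probabilities, and the error rates `alpha` and `beta` are illustrative assumptions.

```python
import math

def sprt(observations, p1, p0, alpha=0.05, beta=0.05):
    """Wald's sequential probability ratio test for Bernoulli observations.

    H1: P(obs = 1) = p1,  H0: P(obs = 1) = p0  (hidden state fixed over time).
    alpha / beta: target false-positive / false-negative rates.
    Returns (decision, n_used, log_lr), where decision is "H1", "H0" or None.
    """
    upper = math.log((1 - beta) / alpha)  # accept H1 at or above this
    lower = math.log(beta / (1 - alpha))  # accept H0 at or below this
    log_lr = 0.0
    for n, x in enumerate(observations, start=1):
        # Add this observation's log-likelihood ratio log p(x | H1) / p(x | H0)
        if x:
            log_lr += math.log(p1 / p0)
        else:
            log_lr += math.log((1 - p1) / (1 - p0))
        if log_lr >= upper:
            return "H1", n, log_lr
        if log_lr <= lower:
            return "H0", n, log_lr
    return None, len(observations), log_lr  # evidence never crossed a threshold

# Hypothetical fishing reading: H1 = "fish are here" (catch prob. 0.8), H0 = catch prob. 0.2
decision, n_used, log_lr = sprt([1, 1, 1, 0, 1], p1=0.8, p0=0.2)
print(decision, n_used)  # H1 3
```

Each catch adds log 4 ≈ 1.39 to the running total. Three catches are enough to pass the upper threshold log 19 ≈ 2.94, so the test stops early and never looks at the remaining observations.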

What role does the Kalman filter play in these tutorials?

-The Kalman filter is the inference algorithm for linear dynamical systems with Gaussian variability. The tutorials use it as a practical example of how to infer a continuous hidden state from sequential observations.
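For intuition, here is a scalar Kalman filter sketch, assuming NumPy and the model s_t = d·s_{t-1} + w_t, m_t = h·s_t + v_t with Gaussian noise variances `q` and `r`. The function name, parameter names, and the simulated random-walk data are illustrative assumptions, not the tutorial's code.

```python
import numpy as np

def kalman_filter_1d(measurements, d, h, q, r, mu0, var0):
    """Scalar Kalman filter for s_t = d*s_{t-1} + w_t, m_t = h*s_t + v_t,
    with w_t ~ N(0, q) and v_t ~ N(0, r). Returns posterior means and variances."""
    mu, var = mu0, var0
    means, variances = [], []
    for m in measurements:
        # Predict: push the Gaussian belief through the linear dynamics
        mu_pred = d * mu
        var_pred = d * d * var + q
        # Update: blend prediction and measurement, weighted by the Kalman gain
        k = var_pred * h / (h * h * var_pred + r)
        mu = mu_pred + k * (m - h * mu_pred)
        var = (1 - k * h) * var_pred
        means.append(mu)
        variances.append(var)
    return np.array(means), np.array(variances)

# Simulated example: a hidden random walk observed through noisy measurements
rng = np.random.default_rng(1)
T, d, h, q, r = 100, 1.0, 1.0, 0.1, 1.0
s = np.cumsum(rng.normal(0.0, np.sqrt(q), T))  # hidden state trajectory
m = h * s + rng.normal(0.0, np.sqrt(r), T)     # noisy measurements
means, variances = kalman_filter_1d(m, d, h, q, r, mu0=0.0, var0=1.0)
```

The posterior variance shrinks toward a steady state (about 0.27 for these settings). At that point new measurements and the random-walk drift balance each other out, and the filtered estimate tracks the hidden state more closely than the raw measurements do.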

Why is the fishing example used in the tutorials?

-The fishing example provides an intuitive metaphor for HMMs, showing how one can infer a hidden state (location of the fish) from a sequence of observations (catching or missing fish).

How do HMMs relate to brain-computer interfaces and neuroscience?

-HMMs can model brain dynamics, predict intended actions, describe sensory and motor systems, and support applications like brain-computer interfaces by inferring hidden brain states from observed signals.

What is the significance of graphical models in understanding HMMs?

-Graphical models provide a structured way to represent uncertainty and statistical dependencies between hidden states and observed measurements, which is fundamental for understanding HMMs and later Markov Decision Processes.
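For an HMM, the graphical model is a chain of hidden states, each emitting one measurement. It corresponds to the factorization of the joint distribution below, written in assumed notation ($s_t$ for the hidden state, $m_t$ for the measurement). Each arrow in the graph contributes one conditional factor.

$$
p(s_{1:T}, m_{1:T}) = p(s_1)\,p(m_1 \mid s_1)\prod_{t=2}^{T} p(s_t \mid s_{t-1})\,p(m_t \mid s_t)
$$

Filtering follows directly from this structure. Propagate the previous posterior through the transition factor, then weight by the new measurement's likelihood:

$$
p(s_t \mid m_{1:t}) \propto p(m_t \mid s_t) \sum_{s_{t-1}} p(s_t \mid s_{t-1})\, p(s_{t-1} \mid m_{1:t-1})
$$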

How does the confidence about a hidden state evolve over time in an HMM?

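-When the hidden state is fixed, confidence grows as more sequential evidence accumulates, so the observer can infer the true hidden state increasingly well, as illustrated in the fishing example. When the hidden state can switch (for example, when the fish can move), the chance of a switch limits how confident the observer can become.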
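The sketch below illustrates how confidence evolves in a hypothetical version of the fishing example. You fish on the left, catch a fish on every attempt, and the catch probabilities are 0.8 if the fish are on the left and 0.2 if they are on the right. The function name and all probabilities are assumptions for illustration. The code compares a fixed hidden state with one that switches sides with probability 0.2 per step.

```python
def belief_after_catches(n_catches, p_switch, p_catch_left=0.8, p_catch_right=0.2):
    """Belief that the fish are on the left after each of n consecutive catches
    (hypothetical fishing setup; starts from a 50/50 prior)."""
    belief_left = 0.5
    history = []
    for _ in range(n_catches):
        # Predict: the fish may switch sides with probability p_switch
        belief_left = (1 - p_switch) * belief_left + p_switch * (1 - belief_left)
        # Update: a catch is more likely if the fish are on the left
        num = p_catch_left * belief_left
        belief_left = num / (num + p_catch_right * (1 - belief_left))
        history.append(belief_left)
    return history

fixed = belief_after_catches(10, p_switch=0.0)
switching = belief_after_catches(10, p_switch=0.2)
print(round(fixed[-1], 6), round(switching[-1], 3))
```

With a fixed state, the odds in favor of "left" multiply by 4 with every catch, so the belief approaches certainty. With switching, it levels off near 0.92, because the fish might always have just moved.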
