Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra

Summary

TLDR: This script explores eigenvectors and eigenvalues, a topic students often find challenging. It stresses the importance of understanding matrices as linear transformations and of being comfortable with determinants, linear systems, and change of basis. It explains that an eigenvector stays on its own span during a transformation, and that its eigenvalue is the factor by which it is stretched or squished. It also covers how eigenvectors identify the axis of rotation in 3D, and how an eigenbasis simplifies matrix operations, making complex transformations more manageable.

Takeaways

- 🧠 Eigenvectors and eigenvalues are foundational concepts in linear algebra that can be challenging to grasp without a strong visual understanding.

- 🔍 Understanding matrices as linear transformations is crucial for comprehending eigenvectors and eigenvalues.

- 📏 Eigenvectors are special vectors that remain on their span after a linear transformation, only being stretched or squished by a scalar factor known as the eigenvalue.

- 📐 Most vectors are knocked off their span by a transformation; an eigenvector is one whose image still lies on the same line through the origin that it spanned before.

- 🔄 An eigenvalue of 1 means the eigenvector stays fixed in place after the transformation; for a 3D rotation, such vectors lie along the axis of rotation.

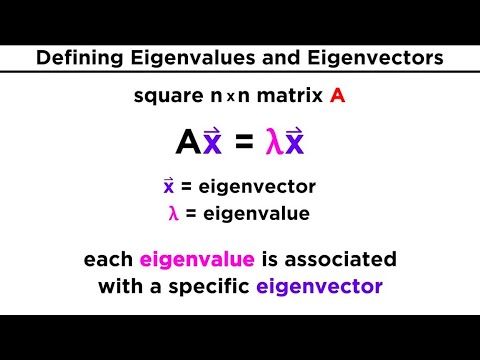

- 🔢 Finding eigenvectors and eigenvalues means finding nonzero vectors v and scalars λ that satisfy Av = λv, where A is the matrix, v is the eigenvector, and λ is its eigenvalue.

- 📉 Determining eigenvalues involves setting the determinant of (A - λI) to zero, where I is the identity matrix, and solving for λ.

- 🔍 A 90-degree rotation has no real eigenvalues (its characteristic polynomial λ² + 1 has only the roots i and -i), so it has no real eigenvectors.

- 📚 A shear has a single eigenvalue, 1, and all of its eigenvectors lie on one line (the x-axis), so there are not enough of them to span the plane.

- 🌐 The concept of an eigenbasis, where basis vectors are eigenvectors, simplifies matrix operations and transformations, especially when raising matrices to high powers.
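The shear takeaway can be checked numerically. The sketch below assumes NumPy and uses the shear that keeps i-hat fixed and sends j-hat to (1, 1); it shows one repeated eigenvalue and an eigenspace that is only a single line.

```python
import numpy as np

# Shear: i-hat stays at (1, 0), j-hat is pushed to (1, 1).
shear = np.array([[1.0, 1.0],
                  [0.0, 1.0]])

# det(shear - λI) = (1 - λ)^2, so λ = 1 is the only eigenvalue (repeated).
eigenvalues = np.linalg.eigvals(shear)
print(eigenvalues)

# Eigenvectors for λ = 1 are the nonzero solutions of (shear - I)v = 0.
# shear - I = [[0, 1], [0, 0]] has rank 1, so that solution set is a single line.
shifted = shear - np.eye(2)
print(np.linalg.matrix_rank(shifted))  # 1

# Vectors on the x-axis stay fixed; anything with a y-component is knocked off its span.
print(shear @ np.array([2.0, 0.0]))  # [2. 0.]
print(shear @ np.array([0.0, 1.0]))  # [1. 1.]
```

Because the eigenvectors fill only the x-axis, a shear has no eigenbasis.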

Q & A

What is an eigenvector?

-An eigenvector is a nonzero vector that stays on its own span during a linear transformation: it only gets stretched or squished (possibly flipped), never knocked off the line it spans.
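A minimal sketch of this definition, assuming NumPy and an example matrix chosen for illustration (i-hat lands on (3, 0), j-hat on (1, 2)). The helper `is_eigenvector` is hypothetical; it tests whether Av stays on the span of v and, if so, reads off the stretch factor λ.

```python
import numpy as np

# Example matrix chosen for illustration: i-hat lands on (3, 0), j-hat on (1, 2).
A = np.array([[3.0, 1.0],
              [0.0, 2.0]])

def is_eigenvector(matrix, v, tol=1e-12):
    """Return (True, λ) if matrix @ v == λ * v for the nonzero 2D vector v, else (False, None)."""
    v = np.asarray(v, dtype=float)
    if not np.any(v):
        raise ValueError("eigenvectors must be nonzero")
    w = matrix @ v
    # w lies on the span of v exactly when the 2x2 determinant of [v w] is zero.
    if abs(v[0] * w[1] - v[1] * w[0]) > tol:
        return False, None
    # Read off λ from the largest component of v to avoid dividing by zero.
    k = int(np.argmax(np.abs(v)))
    return True, float(w[k] / v[k])

print(is_eigenvector(A, [1, 0]))   # (True, 3.0): stretched by 3 along the x-axis
print(is_eigenvector(A, [-1, 1]))  # (True, 2.0): stretched by 2 along its diagonal line
print(is_eigenvector(A, [0, 1]))   # (False, None): knocked off its span
```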

What is the significance of eigenvalues?

-Eigenvalues represent the factor by which an eigenvector is stretched or squished during a linear transformation.

Why are eigenvectors and eigenvalues important in understanding linear transformations?

-Eigenvectors and eigenvalues help to understand the essence of a linear transformation without being dependent on the specific coordinate system, making it easier to analyze transformations.

How can eigenvectors help in visualizing a 3D rotation?

-An eigenvector of a 3D rotation with eigenvalue 1 is the axis of rotation, so the rotation reduces to just an axis and an angle, which is far easier to picture than a 3x3 matrix.
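A numerical sketch of this idea, assuming NumPy and a hypothetical rotation matrix `R` (a 120-degree turn about the line through (1, 1, 1), which cycles the x, y, and z axes). The helper `rotation_axis` is illustrative only: it picks the eigenvalue closest to 1 and returns the matching eigenvector.

```python
import numpy as np

# Hypothetical example: 120-degree rotation about the line through (1, 1, 1).
# Its columns show the cycle x -> y, y -> z, z -> x.
R = np.array([[0.0, 0.0, 1.0],
              [1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])

def rotation_axis(rotation):
    """Return a unit vector along the axis of a 3D rotation (its eigenvector with eigenvalue 1)."""
    eigenvalues, eigenvectors = np.linalg.eig(rotation)
    # The eigenvalue closest to 1 belongs to the axis; the others form a complex-conjugate pair.
    i = np.argmin(np.abs(eigenvalues - 1.0))
    axis = np.real(eigenvectors[:, i])
    axis = axis / np.linalg.norm(axis)
    # Eigenvectors are only defined up to sign; make the largest component positive.
    return axis if axis[np.argmax(np.abs(axis))] > 0 else -axis

axis = rotation_axis(R)
print(axis)                         # should be close to (1, 1, 1) / sqrt(3)
print(np.allclose(R @ axis, axis))  # True: the axis is left fixed
```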

What is the relationship between eigenvectors and the columns of a transformation matrix?

-The columns of a transformation matrix record where the basis vectors land. A basis vector is an eigenvector exactly when its column is a scalar multiple of that basis vector; for example, every basis vector is an eigenvector of a diagonal matrix. In general, though, eigenvectors need not be basis vectors at all.
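A short check of the column picture, assuming NumPy and an example matrix chosen for illustration whose first column happens to be a multiple of i-hat but whose second column is not a multiple of j-hat.

```python
import numpy as np

# Example matrix chosen for illustration.
A = np.array([[3.0, 1.0],
              [0.0, 2.0]])
i_hat = np.array([1.0, 0.0])
j_hat = np.array([0.0, 1.0])

# Multiplying by a basis vector simply reads off the matching column.
print(A @ i_hat, A[:, 0])  # [3. 0.] [3. 0.]
print(A @ j_hat, A[:, 1])  # [1. 2.] [1. 2.]

# i-hat's column is 3 * i-hat, so i-hat is an eigenvector with eigenvalue 3.
print(np.allclose(A @ i_hat, 3 * i_hat))  # True

# j-hat's column (1, 2) has a nonzero x-component, so it is not a multiple of j-hat.
print(A[0, 1] != 0)  # True
```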

What does it mean for a matrix to be diagonalizable?

-A matrix is diagonalizable if there exists a basis of eigenvectors, meaning it can be represented as a diagonal matrix in some coordinate system.
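A worked example of diagonalization, using a 2x2 matrix chosen for illustration. Its eigenvectors (1, 0) with eigenvalue 3 and (-1, 1) with eigenvalue 2 form the columns of the change-of-basis matrix P, and conjugating by P turns A into a diagonal matrix D:

```latex
A = \begin{pmatrix} 3 & 1 \\ 0 & 2 \end{pmatrix}, \qquad
P = \begin{pmatrix} 1 & -1 \\ 0 & 1 \end{pmatrix}, \qquad
P^{-1} = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}

AP = \begin{pmatrix} 3 & -2 \\ 0 & 2 \end{pmatrix}, \qquad
P^{-1} A P = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}
             \begin{pmatrix} 3 & -2 \\ 0 & 2 \end{pmatrix}
           = \begin{pmatrix} 3 & 0 \\ 0 & 2 \end{pmatrix} = D
```

The eigenvalues appear on the diagonal of D in the same order as their eigenvectors appear as columns of P.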

How do you determine if a matrix has eigenvectors?

-Find the values of λ that make det(A - λI) zero, where A is the matrix and I is the identity matrix. Each such λ is an eigenvalue, and the nonzero solutions v of (A - λI)v = 0 are its eigenvectors.
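For a 2x2 matrix the determinant condition becomes a quadratic, since det(A - λI) = λ² - tr(A)·λ + det(A). The helper `eigenvalues_2x2` below is a hypothetical sketch that solves it with the quadratic formula; NumPy is assumed only for the cross-check.

```python
import cmath
import numpy as np

def eigenvalues_2x2(matrix):
    """Eigenvalues of a 2x2 matrix from det(A - λI) = λ² - tr(A)·λ + det(A) = 0."""
    (a, b), (c, d) = matrix
    trace = a + d
    det = a * d - b * c
    # Quadratic formula; cmath.sqrt also handles a negative discriminant (complex eigenvalues).
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2

A = [[3, 1], [0, 2]]  # example matrix chosen for illustration
print(eigenvalues_2x2(A))                           # ((3+0j), (2+0j))
print(np.linalg.eigvals(np.array(A, dtype=float)))  # NumPy agrees: 3 and 2
```

Returning complex numbers keeps the helper usable for rotations, where the discriminant is negative.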

What is the role of the determinant in finding eigenvalues?

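-Subtracting λ from each diagonal entry of A gives A - λI. Setting det(A - λI) = 0 yields a polynomial in λ (the characteristic polynomial), whose roots are the eigenvalues. The determinant is zero exactly when A - λI squishes space into a lower dimension, which is what allows a nonzero vector v with (A - λI)v = 0.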
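A worked instance of this step for a matrix chosen for illustration, followed by solving for the eigenvectors of one of the roots:

```latex
A = \begin{pmatrix} 3 & 1 \\ 0 & 2 \end{pmatrix}, \qquad
\det(A - \lambda I)
  = \det\begin{pmatrix} 3-\lambda & 1 \\ 0 & 2-\lambda \end{pmatrix}
  = (3-\lambda)(2-\lambda) - 1 \cdot 0
  = (3-\lambda)(2-\lambda)

\det(A - \lambda I) = 0 \implies \lambda = 3 \ \text{or} \ \lambda = 2

\lambda = 2: \quad (A - 2I)\,v = \begin{pmatrix} 1 & 1 \\ 0 & 0 \end{pmatrix}
  \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix}
  \implies y = -x, \quad v \in \operatorname{span}\left\{ \begin{pmatrix} -1 \\ 1 \end{pmatrix} \right\}
```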

Why might a transformation not have eigenvectors?

-A transformation might have no real eigenvectors if it rotates every vector off its own span. A 90-degree rotation in 2D is the classic example: its eigenvalues are the imaginary numbers i and -i.
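A quick numerical check of the 90-degree case, assuming NumPy. The rotation sends i-hat to (0, 1) and j-hat to (-1, 0); the vector `v` is an arbitrary example.

```python
import numpy as np

# 90-degree counterclockwise rotation: i-hat -> (0, 1), j-hat -> (-1, 0).
rotation = np.array([[0.0, -1.0],
                     [1.0, 0.0]])

# det(rotation - λI) = λ² + 1, which has no real roots.
eigenvalues = np.linalg.eigvals(rotation)
print(eigenvalues)  # a complex pair: i and -i

# Every nonzero real vector is turned 90 degrees, so its image is perpendicular
# to it and cannot lie on its span.
v = np.array([2.0, 1.0])
print(np.dot(v, rotation @ v))  # 0.0
```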

What is an eigenbasis and why is it useful?

-An eigenbasis is a set of basis vectors that are also eigenvectors. It is useful because it allows the transformation matrix to be represented as a diagonal matrix, simplifying operations like computing matrix powers.
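A minimal sketch of the matrix-power shortcut, assuming NumPy and an example matrix with eigenvalues 3 and 2. The helper `power_via_eigenbasis` is hypothetical and only valid when the matrix has an eigenbasis (so that P is invertible); it would fail for a shear.

```python
import numpy as np

# Example matrix chosen for illustration (eigenvalues 3 and 2).
A = np.array([[3.0, 1.0],
              [0.0, 2.0]])

def power_via_eigenbasis(matrix, n):
    """Compute matrix**n as P D^n P^{-1}, assuming matrix has an eigenbasis."""
    eigenvalues, P = np.linalg.eig(matrix)  # columns of P are eigenvectors
    D_n = np.diag(eigenvalues ** n)         # powering a diagonal matrix powers its entries
    return P @ D_n @ np.linalg.inv(P)

n = 10
fast = power_via_eigenbasis(A, n)
direct = np.linalg.matrix_power(A, n)
print(np.allclose(fast, direct))  # True
```

For this A the closed form is A^n = [[3^n, 3^n - 2^n], [0, 2^n]], so for n = 10 both results should be close to [[59049, 58025], [0, 1024]].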

How does changing the basis to an eigenbasis simplify matrix operations?

-Changing to an eigenbasis simplifies matrix operations because the transformation matrix in this basis is diagonal, with eigenvalues on the diagonal, making operations like matrix multiplication and exponentiation much easier.
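The reason powers become easy can be written out directly. If P has the eigenvectors as columns and D is the diagonal matrix of eigenvalues, every inner P⁻¹P pair in the product cancels to the identity:

```latex
A = P D P^{-1}
\implies
A^n = (P D P^{-1})(P D P^{-1}) \cdots (P D P^{-1}) = P D^n P^{-1},
\qquad
D^n = \begin{pmatrix} \lambda_1^n & 0 \\ 0 & \lambda_2^n \end{pmatrix}
```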


今すぐアップグレード関連動画をさらに表示

Eigen values and Eigen Vectors in Tamil | Unit 1 | Matrices | Matrices and Calculus | MA3151

MATRIKS RUANG VEKTOR | NILAI EIGEN MATRIKS 2x2 DAN 3x3

Short Trick - Eigen values & Eigen vectors | Matrices (Linear Algebra)

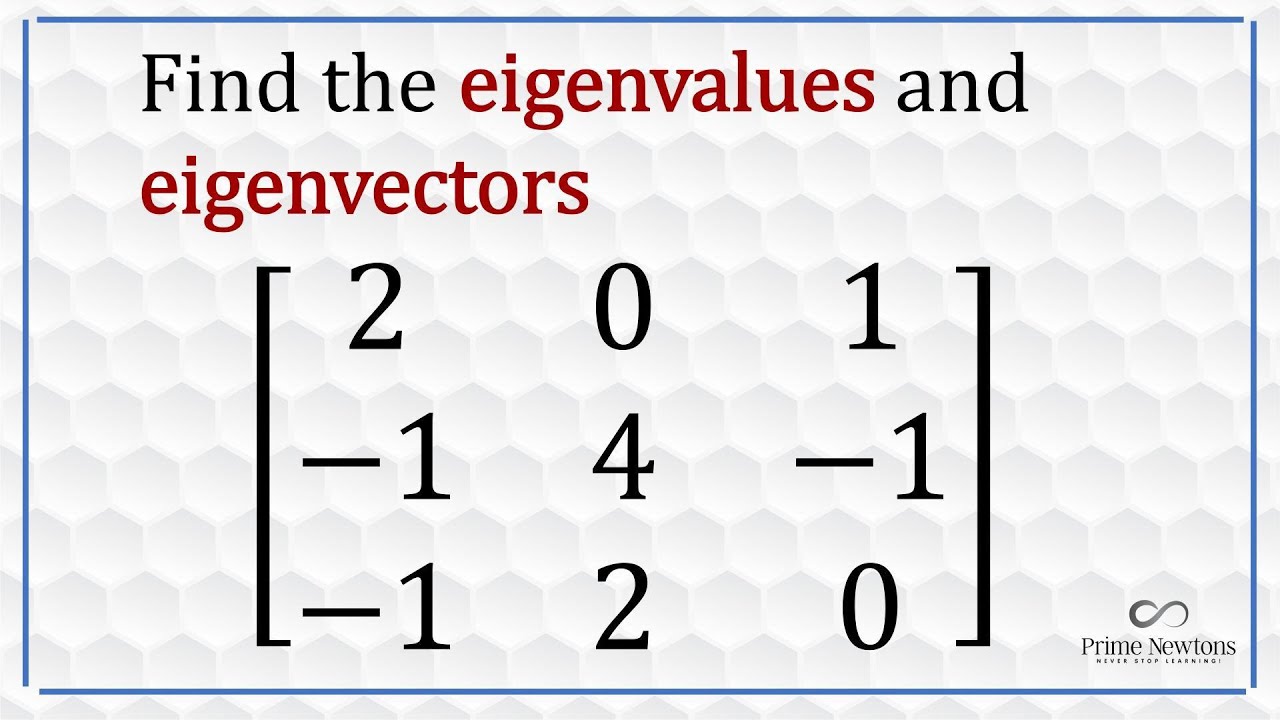

Finding Eigenvalues and Eigenvectors

3 x 3 eigenvalues and eigenvectors

Nilai dan Vektor Eigen part 1

5.0 / 5 (0 votes)