2. Three Basic Components or Entities of Artificial Neural Network Introduction | Soft Computing

Summary

TLDR: This video delves into the three fundamental components of artificial neural networks (ANNs): synaptic interconnections, learning rules, and activation functions. It explains various network models, including single and multi-layer feed-forward, single node with feedback, and single and multi-layer recurrent networks. The video also discusses learning methods, such as parameter and structural learning, and covers several activation functions like identity, binary step, bipolar step, ramp, and sigmoidal functions, which are crucial for ANN operations.

Takeaways

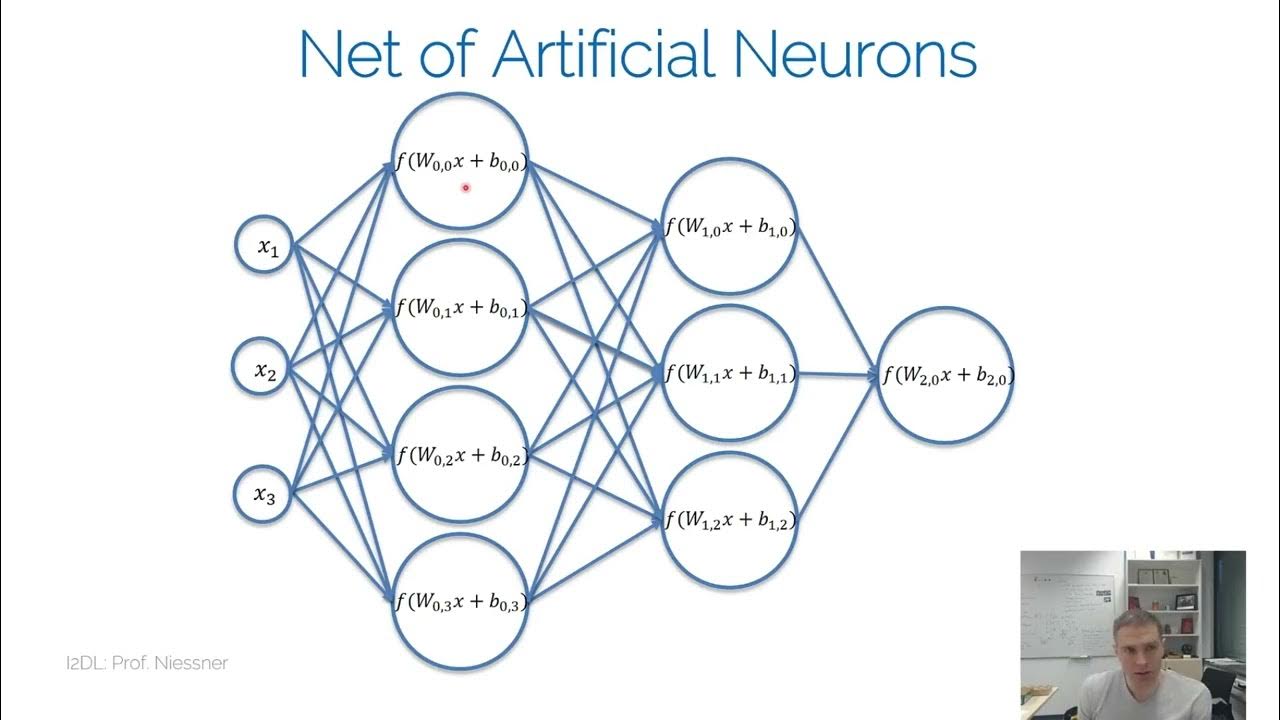

- 🧠 The models of artificial neural networks are specified by three basic components: synaptic interconnections, learning rules, and activation functions.

- 🔗 Synaptic interconnections can be of five types: single layer feed forward, multi-layer feed forward, single node with feedback, single layer recurrent, and multi-layer recurrent networks.

- 🌐 In a single layer feed forward network, each neuron in the input layer is directly connected to the output layer neurons.

- 🌀 Multi-layer feed forward networks include one or more hidden layers between the input and output layers.

- 🔁 In a single node with its own feedback, the neuron's output is sent back to the same neuron as feedback, so that its computation can be adjusted to produce a better output.

- 🔄 Single layer recurrent networks feed the previous outputs back into the layer, so weight updates take into account both the current input and the previous output.

- 🎓 Learning in ANNs is categorized into parameter learning, which updates connection weights, and structural learning, which changes the network's structure.

- 📈 Activation functions determine the output of a neuron based on its input. Common functions include identity, binary step, bipolar step, ramp, and sigmoidal functions.

- 📊 The sigmoidal function, particularly the binary sigmoid function, is widely used in backpropagation neural networks due to its desirable mathematical properties.

- 🔍 The derivative of the binary sigmoid function is key for backpropagation as it helps in calculating the gradient during the learning process.

- 🔑 The video aims to clarify the concepts of the components of artificial neural networks and encourages viewers to engage with the content for further learning.

Q & A

What are the three basic components of an artificial neural network?

-The three basic components of an artificial neural network are the synaptic interconnections, the training or learning rules, and the activation functions.

What are the different types of interconnections in artificial neural networks?

-The different types of interconnections in artificial neural networks include single layer feed forward networks, multi-layer feed forward networks, single node with its own feedback, single layer recurrent networks, and multi-layer recurrent networks.

How does a single layer feed forward network differ from a multi-layer feed forward network?

-A single layer feed forward network has only an input layer and an output layer, whereas a multi-layer feed forward network includes one or more hidden layers in addition to the input and output layers.
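The difference between the two feed-forward arrangements can be sketched in a few lines. This is a minimal illustration, not the video's own code: the helper names (`layer`, `single_layer_forward`, `multi_layer_forward`), the weight values, and the choice of a sigmoid activation are all assumptions made for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases, activation):
    """One fully connected layer; weights[j][i] links input i to neuron j."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def single_layer_forward(x, w_out, b_out):
    # Input neurons connect directly to the output neurons.
    return layer(x, w_out, b_out, sigmoid)

def multi_layer_forward(x, w_hidden, b_hidden, w_out, b_out):
    # A hidden layer sits between the input and output layers.
    hidden = layer(x, w_hidden, b_hidden, sigmoid)
    return layer(hidden, w_out, b_out, sigmoid)

x = [0.5, -1.0]  # illustrative input values
y_single = single_layer_forward(x, [[0.2, 0.4]], [0.0])
y_multi = multi_layer_forward(
    x,
    [[0.1, 0.3], [-0.2, 0.5], [0.0, 0.0]],  # 2 inputs -> 3 hidden neurons
    [0.0, 0.0, 0.0],
    [[0.3, -0.1, 0.2]],                      # 3 hidden -> 1 output neuron
    [0.0],
)
```

Adding more hidden layers would simply mean chaining further `layer` calls before the output layer.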

What is the purpose of a single node with its own feedback in a neural network?

-In a single node with its own feedback, the neuron's output is fed back to the same neuron and used to check and adjust its computation, with the aim of improving the output.
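One possible reading of this arrangement, sketched under stated assumptions: the node's previous output is multiplied by a feedback weight and added to the weighted input before the activation is applied. The names `w_in` and `w_fb`, the starting output of 0, and the use of a sigmoid are hypothetical choices for illustration; the video does not give a formula.

```python
import math

def binary_sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def node_with_feedback(x, w_in, w_fb, steps):
    """Repeatedly compute y = f(w_in * x + w_fb * y_previous), starting from y = 0."""
    y = 0.0
    outputs = []
    for _ in range(steps):
        y = binary_sigmoid(w_in * x + w_fb * y)
        outputs.append(y)
    return outputs

# With a non-zero feedback weight the output changes from step to step.
outputs = node_with_feedback(x=1.0, w_in=0.5, w_fb=0.8, steps=5)
```

Setting `w_fb` to 0 removes the feedback path, and the node then returns the same value at every step.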

How does a single layer recurrent network differ from a multi-layer recurrent network?

-A single layer recurrent network updates weights considering the previous iteration's outputs along with the current input, while a multi-layer recurrent network has multiple layers, including hidden layers, and updates weights similarly across these layers.
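As a rough sketch of how previous outputs can enter the computation alongside the current input, the layer below combines both at every time step. The function names, the separate input and recurrent weight matrices (`w_in`, `w_rec`), the zero initial output, and the sigmoid activation are assumptions for this example, not details taken from the video.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def recurrent_step(x, y_prev, w_in, w_rec):
    """y[j] = f(sum_i w_in[j][i] * x[i] + sum_k w_rec[j][k] * y_prev[k])."""
    return [
        sigmoid(
            sum(w * xi for w, xi in zip(w_in[j], x))
            + sum(u * yk for u, yk in zip(w_rec[j], y_prev))
        )
        for j in range(len(w_in))
    ]

def run_sequence(xs, w_in, w_rec):
    y = [0.0] * len(w_in)  # assumed initial output: all zeros
    outputs = []
    for x in xs:
        y = recurrent_step(x, y, w_in, w_rec)
        outputs.append(y)
    return outputs

# The same input presented twice yields different outputs,
# because the second step also sees the first step's output.
outs = run_sequence([[1.0], [1.0]], w_in=[[0.5], [-0.5]], w_rec=[[0.0, 1.0], [1.0, 0.0]])
```

A multi-layer recurrent network would apply the same idea with hidden layers between input and output.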

What are the two main types of learning in artificial neural networks?

-The two main types of learning in artificial neural networks are parameter learning, which involves updating connection weights, and structural learning, which focuses on changing the network structure such as the number of processing elements or layers.
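Parameter learning can be illustrated with a single weight-update step. The video does not name a specific rule here, so the delta rule below (with an identity activation, a learning rate of 0.1, and made-up data) is only an assumed example of what "updating connection weights" can look like. Structural learning, which would add or remove neurons or layers, is not shown.

```python
def predict(weights, x):
    # Identity activation: the output is just the weighted sum.
    return sum(w * xi for w, xi in zip(weights, x))

def delta_rule_update(weights, x, target, learning_rate=0.1):
    """Return new weights: w_i + learning_rate * (target - output) * x_i."""
    error = target - predict(weights, x)
    return [w + learning_rate * error * xi for w, xi in zip(weights, x)]

weights = [0.0, 0.0]
x, target = [1.0, 2.0], 1.0  # illustrative training pair
for _ in range(50):
    weights = delta_rule_update(weights, x, target)
```

For this particular input the error halves on each update, so after 50 updates the prediction is very close to the target.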

What is an identity function in the context of activation functions?

-An identity function is an activation function where the output is equal to the input, defined as f(x) = x, and it operates linearly on the input values.

What is a binary step function and how does it work?

-A binary step function is an activation function that outputs 1 if the input value is greater than or equal to 0, and 0 if the input value is less than 0, resulting in a binary output range.

What is a bipolar step function and how does it differ from a binary step function?

-A bipolar step function outputs 1 if the input value is greater than or equal to 0, and -1 if the input value is less than 0, providing a bipolar output range, unlike the binary step function which only outputs 0 or 1.
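The identity and the two step functions described above are short enough to write out directly. This is a minimal sketch; the `threshold` parameter is an added assumption that defaults to 0, the cut-off stated in the answers.

```python
def identity(x):
    # Output equals input: f(x) = x.
    return x

def binary_step(x, threshold=0.0):
    # 1 at or above the threshold, otherwise 0.
    return 1 if x >= threshold else 0

def bipolar_step(x, threshold=0.0):
    # 1 at or above the threshold, otherwise -1.
    return 1 if x >= threshold else -1
```

For example, `binary_step(-0.1)` gives 0 while `bipolar_step(-0.1)` gives -1; both give 1 for an input of exactly 0.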

What is the range of output for the ramp function in activation functions?

-The ramp function outputs 1 for input values greater than 1, equals the input (rising linearly from 0 to 1) for input values between 0 and 1, and outputs 0 for input values less than 0, so its output always lies between 0 and 1.
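The piecewise definition above translates directly into code. A minimal sketch, with the function name `ramp` chosen for illustration:

```python
def ramp(x):
    """Clamp the input to the interval [0, 1]."""
    if x > 1:
        return 1.0
    if x < 0:
        return 0.0
    return float(x)  # linear region: output equals input
```

The same behavior can be written as `min(1.0, max(0.0, x))`.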

How is the binary sigmoid function defined and what is its range of output?

-The binary sigmoid function is defined as f(x) = 1 / (1 + e^(-λx)), where λ is the steepness parameter and x is the net input (the weighted sum of the inputs). Its output ranges from 0 to 1.
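A small sketch of this function, with the parameter λ passed as `lam` (a name chosen here because `lambda` is reserved in Python). The two-branch form is an implementation choice, not part of the definition: it computes the same value while avoiding overflow in `math.exp` for large negative inputs.

```python
import math

def binary_sigmoid(x, lam=1.0):
    """f(x) = 1 / (1 + e^(-lam * x)), with output between 0 and 1."""
    z = lam * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so ez < 1 and cannot overflow
    return ez / (1.0 + ez)
```

Larger values of `lam` make the curve rise more sharply around x = 0; at x = 0 the output is 0.5 regardless of `lam`.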

What is the derivative of the binary sigmoid function and what does it represent?

-The derivative of the binary sigmoid function is f'(x) = λ * f(x) * (1 - f(x)), which represents the rate of change of the function and is used in backpropagation for learning.
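The formula can be checked numerically. The sketch below compares the closed form λ * f(x) * (1 - f(x)) with a central finite difference; the helper names and the step size `h` are assumptions for this example.

```python
import math

def binary_sigmoid(x, lam=1.0):
    return 1.0 / (1.0 + math.exp(-lam * x))

def binary_sigmoid_derivative(x, lam=1.0):
    """Closed form: f'(x) = lam * f(x) * (1 - f(x))."""
    fx = binary_sigmoid(x, lam)
    return lam * fx * (1.0 - fx)

def numerical_derivative(f, x, h=1e-6):
    # Central difference approximation of f'(x).
    return (f(x + h) - f(x - h)) / (2.0 * h)

analytic = binary_sigmoid_derivative(0.7, lam=2.0)
numeric = numerical_derivative(lambda v: binary_sigmoid(v, lam=2.0), 0.7)
```

The closed form is convenient in backpropagation because the derivative is obtained from the already computed output f(x), without evaluating another exponential.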
