How to detect DeepFakes with MesoNet | 20 MIN. PYTHON TUTORIAL

Summary

TLDR: In this video, Kalyn from Kite explores deep fakes and introduces MesoNet, a neural network designed to detect them. The video explains how deep fakes are generated with autoencoders and how a dataset was built from existing deep fake videos. It then walks through MesoNet's architecture, a convolutional neural network, and how well it distinguishes real images from fake ones. It also highlights a data bias issue: most deep fake images are pornographic, which may skew the model's predictions.

Takeaways

- 😲 Deep fakes are synthetic media created by deep neural networks, making them nearly indistinguishable from real images, audio, or video.

- 👀 The potential misuse of deep fakes for spreading misinformation and undermining trust is a serious concern.

- 🧠 MesoNet is a convolutional neural network designed to identify deep fakes, trained on a dataset of real and fake images.

- 🎨 Deep fakes can be generated by training autoencoders on different datasets, allowing for the blending of distinct visual styles.

- 📸 The MesoNet dataset was assembled by extracting face images from existing deep fake videos and from real video sources.

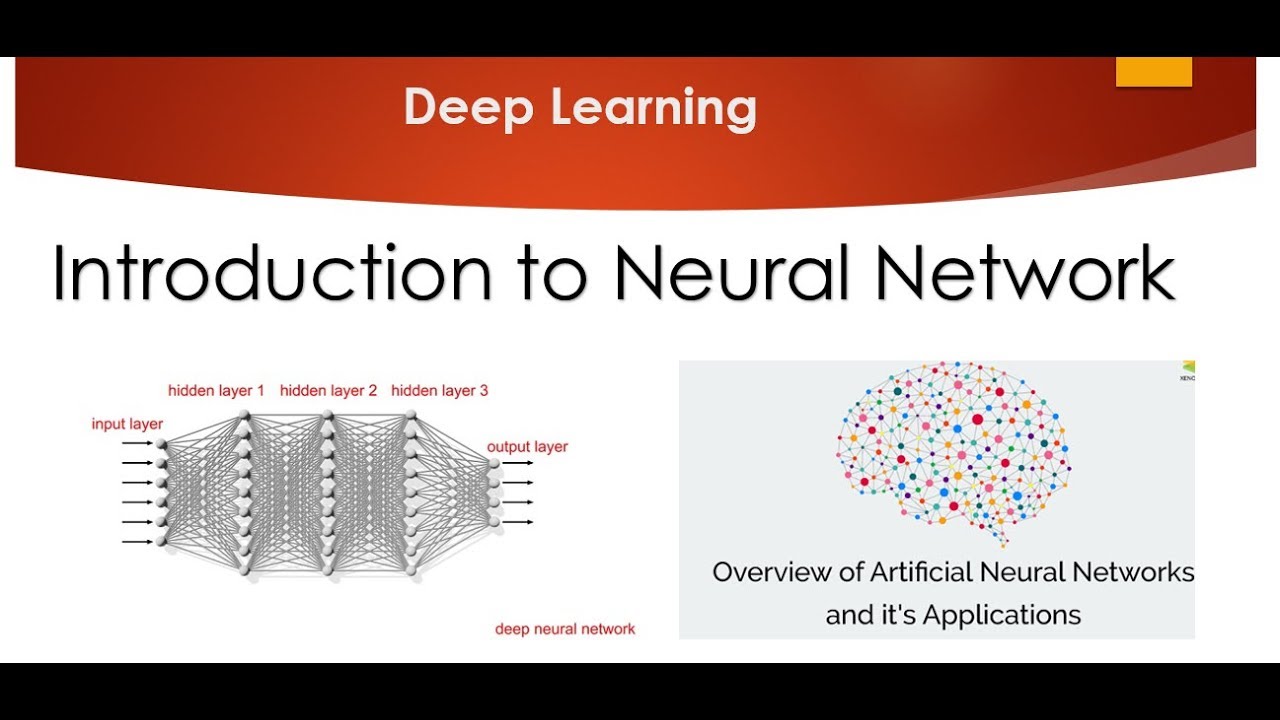

- 🤖 MesoNet's architecture consists of four convolutional blocks that extract image features, followed by a fully connected hidden layer and an output layer.

- 🔍 Batch normalization in neural networks helps improve training speed, performance, and stability by normalizing layer inputs.

- 📊 The model's predictions are influenced by the nature of the training data, which in this case includes a significant amount of pornographic deep fakes.

- 🔎 The video suggests that MesoNet's predictions may be biased because pornographic content dominates the deep fake dataset.

- 💻 The video also promotes Kite, an AI-powered coding assistant that enhances programming efficiency through features like autocompletion and documentation.

Q & A

What is the main topic of the video?

-The main topic of the video is deep fakes and the exploration of a neural network called MesoNet designed to identify them.

What is a deep fake?

-A deep fake is an image, audio clip, or video generated by deep neural networks to depict events that never occurred, in a way that is nearly indistinguishable from real media.

How can deep fakes be generated?

-Deep fakes can be generated using autoencoders, which compress image data into a compact representation and then reconstruct it. Training autoencoders on different datasets makes it possible to blend images, such as merging Van Gogh's 'Starry Night' with Da Vinci's 'Mona Lisa'.
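The video's generation code is not reproduced here. The sketch below illustrates the commonly described face-swap setup: one encoder shared by two datasets and one decoder per dataset, so that encoding a sample from dataset A and decoding it with B's decoder renders it in B's appearance. It is a deliberately tiny, linear, NumPy-only version trained on random toy data; real deep fake pipelines use deep convolutional autoencoders. The function names, latent size, learning rate, and step count are assumptions chosen for illustration.

```python
import numpy as np


def train_swap_autoencoder(x_a, x_b, latent_dim=8, lr=0.1, steps=2000, seed=0):
    """Train a linear autoencoder with one shared encoder and two decoders.

    x_a, x_b: arrays of shape (n_samples, n_features), one per dataset
    (e.g. flattened images of two faces or two painting styles).
    Returns (encoder, decoder_a, decoder_b, loss_history).
    """
    rng = np.random.default_rng(seed)
    n_features = x_a.shape[1]
    encoder = rng.normal(scale=0.1, size=(n_features, latent_dim))  # shared
    decoders = {
        "a": rng.normal(scale=0.1, size=(latent_dim, n_features)),
        "b": rng.normal(scale=0.1, size=(latent_dim, n_features)),
    }
    data = {"a": x_a, "b": x_b}
    history = []
    for _ in range(steps):
        grad_encoder = np.zeros_like(encoder)
        grad_decoders = {}
        total_loss = 0.0
        for key, x in data.items():
            z = x @ encoder                # compress to the latent code
            recon = z @ decoders[key]      # reconstruct with this dataset's decoder
            err = recon - x
            total_loss += np.mean(err ** 2)
            g = 2.0 * err / err.size       # gradient of the mean squared error
            grad_decoders[key] = z.T @ g
            grad_encoder += x.T @ (g @ decoders[key].T)  # encoder learns from both
        for key in decoders:               # plain gradient descent updates
            decoders[key] -= lr * grad_decoders[key]
        encoder -= lr * grad_encoder
        history.append(total_loss)
    return encoder, decoders["a"], decoders["b"], history


def swap(x_a, encoder, decoder_b):
    """Encode samples from dataset A, then decode them with B's decoder."""
    return (x_a @ encoder) @ decoder_b


rng = np.random.default_rng(1)
x_a = rng.random((64, 16))              # toy stand-in for dataset A
x_b = 0.5 + 0.5 * rng.random((64, 16))  # toy stand-in for dataset B
enc, dec_a, dec_b, history = train_swap_autoencoder(x_a, x_b)
fake = swap(x_a, enc, dec_b)
print(history[0], history[-1], fake.shape)
```

Sharing the encoder is what makes the swap possible: it is pushed to learn a representation common to both datasets, while each decoder learns to render that representation in its own dataset's appearance.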

What is MesoNet and what is it used for?

-MesoNet is a convolutional neural network designed to identify deep fake images. Given image data, it predicts whether each image is real or a deep fake.

How does MesoNet handle the data it is trained on?

-The MesoNet training data was built by extracting face images from existing deep fake videos and from real sources such as TV shows and movies, so that the distribution of facial angles and resolutions is balanced.

What is the structure of MesoNet's neural network?

-MesoNet's neural network consists of four convolutional blocks followed by one fully connected hidden layer and an output layer for predictions.
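The answer above gives only the block count. The sketch below works through the shape and parameter arithmetic for a Meso-4-style stack in plain Python, with no deep learning library required. The per-block filter counts, kernel sizes, and pooling sizes, the 256x256x3 input, the "same" padding, and the 16-unit hidden layer are assumed to follow the Meso-4 configuration from the MesoNet paper; they are not stated in the video, so check them against the model code you actually use. Dropout layers, if present, add no parameters and are left out.

```python
# Assumed Meso-4-style configuration (not stated in the video):
# (filters, kernel_size, pool_size) for each of the four convolutional blocks.
CONV_BLOCKS = [(8, 3, 2), (8, 5, 2), (16, 5, 2), (16, 5, 4)]


def meso4_summary(input_size=256, channels=3, hidden_units=16):
    """Return per-layer output shapes and the trainable parameter count.

    Assumes 'same' padding (convolutions keep spatial size), batch norm after
    each convolution, max pooling that divides the spatial size, one dense
    hidden layer, and a single sigmoid output unit.
    """
    size, in_ch = input_size, channels
    layers, trainable = [], 0
    for filters, kernel, pool in CONV_BLOCKS:
        conv_params = kernel * kernel * in_ch * filters + filters  # weights + biases
        bn_params = 2 * filters  # trainable gamma and beta per channel
        size //= pool            # max pooling shrinks height and width
        trainable += conv_params + bn_params
        layers.append((f"conv{kernel}x{kernel}-{filters}", (size, size, filters)))
        in_ch = filters
    flat = size * size * in_ch
    trainable += flat * hidden_units + hidden_units  # fully connected hidden layer
    trainable += hidden_units * 1 + 1                # single output unit
    layers.append(("flatten", (flat,)))
    layers.append(("dense", (hidden_units,)))
    layers.append(("output", (1,)))
    return layers, trainable


layers, trainable = meso4_summary()
for name, shape in layers:
    print(name, shape)
print("trainable parameters:", trainable)
```

Under these assumptions the last block outputs an 8x8x16 feature map, so the hidden layer receives 1,024 inputs and accounts for 16,400 of the 27,977 trainable parameters the script reports.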

What is batch normalization and why is it used in MesoNet?

-Batch normalization normalizes the inputs to each layer of a network, which improves training speed, performance, and stability. It reduces how much the input distribution of each layer depends on the parameters of the layers before it.
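To make the normalization step concrete, here is a minimal NumPy sketch of batch normalization for a batch of feature vectors. During training each feature is normalized with the current batch's mean and variance, then scaled by a learned `gamma` and shifted by a learned `beta`; running averages of the statistics are kept for use at inference time. The function names and the `momentum` and `eps` values are assumptions for illustration, not MesoNet's code.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, running_mean, running_var,
                     momentum=0.99, eps=1e-3):
    """Training-mode batch norm over the batch axis (axis 0).

    Normalizes each feature with the current batch's statistics, applies the
    learned scale (gamma) and shift (beta), and updates the running
    statistics that replace the batch statistics at inference time.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    new_running_mean = momentum * running_mean + (1 - momentum) * mean
    new_running_var = momentum * running_var + (1 - momentum) * var
    return gamma * x_hat + beta, new_running_mean, new_running_var


def batch_norm_infer(x, gamma, beta, running_mean, running_var, eps=1e-3):
    """Inference-mode batch norm: uses the stored running statistics."""
    return gamma * (x - running_mean) / np.sqrt(running_var + eps) + beta


rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(256, 4))  # badly scaled toy activations
gamma, beta = np.ones(4), np.zeros(4)
y, rm, rv = batch_norm_train(x, gamma, beta, np.zeros(4), np.ones(4))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))
```

Whatever the scale of the incoming activations, the normalized output has roughly zero mean and unit standard deviation per feature before `gamma` and `beta` are applied, which is what keeps the next layer's input distribution stable.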

How does MesoNet make predictions on image data?

-MesoNet makes predictions by loading weights, processing image data through its convolutional and fully connected layers, and outputting a value that indicates whether an image is real or a deep fake.
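The video's weight-loading code is not reproduced here. This sketch shows only the final step described above: rescaling pixel values, passing the image through a model, and thresholding its single output. `predict_fn`, `dummy_predict`, the 0.5 threshold, and the convention that a high score means "real" are all assumptions; depending on how the classes were encoded during training, a high score could just as well mean "deep fake".

```python
import numpy as np


def predict_image(image_uint8, predict_fn, threshold=0.5):
    """Score one image and turn the model's output into a label.

    image_uint8: H x W x 3 array of pixel values in [0, 255].
    predict_fn: hypothetical stand-in for a trained model's predict method,
        taking a batch of shape (1, H, W, 3) and returning shape (1, 1).
    Assumes the single sigmoid output is the probability that the image is real.
    """
    batch = image_uint8.astype(np.float32)[np.newaxis, ...] / 255.0  # rescale to [0, 1]
    score = float(predict_fn(batch)[0, 0])
    label = "real" if score >= threshold else "deepfake"
    return score, label


def dummy_predict(batch):
    """Toy stand-in for a model with loaded weights: returns the mean pixel value."""
    return np.array([[batch.mean()]])


image = np.full((256, 256, 3), 230, dtype=np.uint8)
print(predict_image(image, dummy_predict))
```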

What is a problematic data artifact mentioned in the video regarding deep fake data?

-A problematic data artifact is that an overwhelming amount of deep fake data is pornographic, which can lead MesoNet to use this statistical reality as a heuristic for its predictions.
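One way to spot this kind of shortcut before training is to check how well a metadata attribute alone predicts the label. The sketch below computes the fraction of fake samples for each value of a hypothetical content-category tag; the function name, the tag values, and the toy data are made up for illustration and are not taken from the video or the MesoNet dataset.

```python
from collections import Counter


def fake_rate_by_attribute(labels, attributes):
    """Return the fraction of 'fake' labels for each attribute value.

    labels: sequence of 'fake' / 'real' strings.
    attributes: parallel sequence of a metadata tag (hypothetical, e.g. the
        content category of the source video).
    A rate near 0 or 1 means the attribute alone almost predicts the label,
    so a classifier can exploit it instead of learning manipulation artifacts.
    """
    totals = Counter(attributes)
    fakes = Counter(a for a, y in zip(attributes, labels) if y == "fake")
    return {a: fakes[a] / n for a, n in totals.items()}


# Toy, made-up data: the "explicit" category is almost entirely fake.
labels = ["fake"] * 9 + ["real"] * 1 + ["fake"] * 1 + ["real"] * 9
attrs = ["explicit"] * 10 + ["tv_show"] * 10
print(fake_rate_by_attribute(labels, attrs))
```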

What is Kite and how does it relate to the video?

-Kite is an AI-powered coding assistant that helps users code faster and smarter by providing autocomplete suggestions and reducing keystrokes. It is mentioned in the video as a tool that can be integrated into various code editors.

What is the significance of the data collection method for MesoNet's performance?

-The data collection method is significant because it affects the model's ability to generalize and make accurate predictions. The video discusses how MesoNet's training data, which includes a high percentage of pornographic deep fake content, could influence its predictions.
